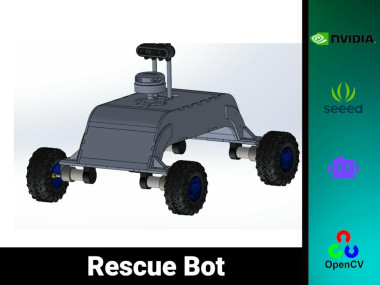

Rescue Bot

About the project

Rescue Bot is an autonomous ground rover designed to navigate post-disaster terrain, locate survivors and enhance disaster response

Project info

Difficulty: Difficult

Platforms: Arduino, DFRobot, Intel, Microsoft, Raspberry Pi, ROS, Seeed Studio, Jupyter, NVIDIA, OpenCV

Estimated time: 2 days

License: MIT license (MIT)

Items used in this project

Hardware components

View all

Software apps and online services

View all

Hand tools and fabrication machines

Story

The heart of our technological ambition lies in our mission to engineer a groundbreaking robot with a dual purpose, seamlessly blending safety and environmental awareness. Our vision unfolds as we conceive a robotic companion meticulously designed to revolutionize the mining industry.

Introducing "Project Rescuebot": A cutting-edge initiative driven by the imperative to enhance both the well-being of the workforce and the ecological sustainability of mining operations. At its core, our robot is a posture-perfecting sentinel, equipped with state-of-the-art sensors and algorithms meticulously crafted to accurately measure and analyze the posture of individuals working within mining environments.

But our commitment doesn't end there. Recognizing the environmental impact of mining activities, we have incorporated a sophisticated environmental monitoring system into our robotic marvel. With this system, we are capable of measuring greenhouse gas emissions within the mining environment.

"Rescue bot" is not merely a robot; it is a sentinel for the safety and health of mine workers, ensuring their well-being through posture monitoring, while concurrently championing environmental stewardship by actively measuring and mitigating greenhouse gas emissions. In this narrative of innovation, our purpose is clear: to forge a path toward a safer, more sustainable future for the mining industry and beyond.

Source :www.business-standard.com

Source :www.business-standard.com

To address this challenge, we have developed a rover that can navigate difficult terrain to locate and rescue survivors and collect essential environmental data. Additionally, the rover can be manually controlled for navigation purposes, while a manipulator mounted on top can collect soil samples and other critical items. Moreover, the rover is equipped to measure various atmospheric parameters such as equivalent calculated carbon dioxide, Total Volatile Organic Compound, relative humidity, barometric pressure, temperature and air quality. The rover can also be programmed to respond accordingly based on the situation. In disaster response and recovery efforts, the rover can deliver emergency supplies and provide a communication system for survivors to stay connected with the outside world. Furthermore, the rover can also transport aerial robots to expand the scope and effectiveness of the rescue operation, and all the software libraries used in the robot are open source (Robotic Operating System).

Our Journey

- To begin with, we conducted a thorough assessment of the total torque requirements for the motor to power the robot while bearing a minor payload.

- Subsequently, we selected a motor that satisfied the determined specifications. To enable autonomous navigation, it was crucial to opt for a motor with an encoder and an onboard encoder reader driver that transmits data via UART.

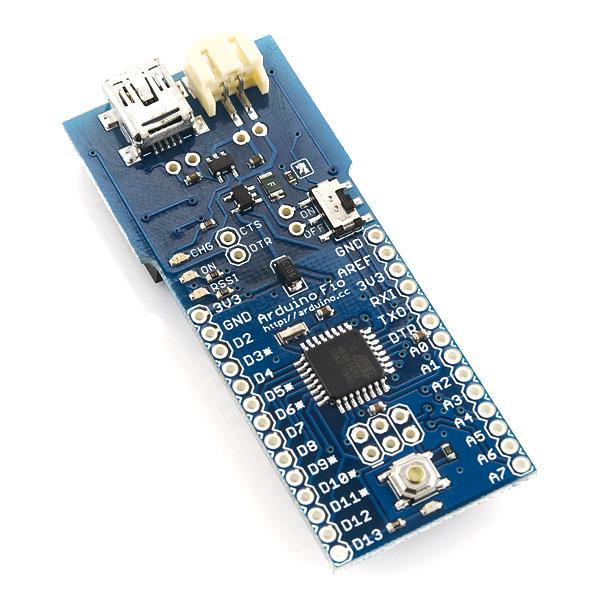

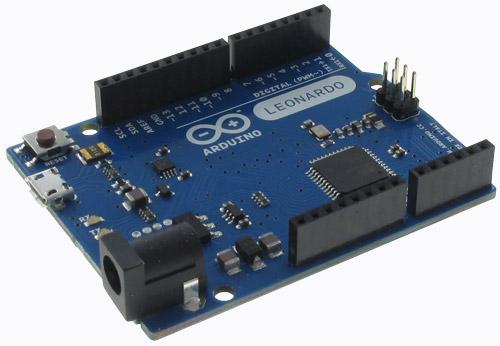

- Subsequently, we decided to employ an Arduino Mega microcontroller as a node of the master processor.

- During my initial experimentation with Raspberry Pi, we observed a significant disparity between the processing power and heat dissipation rates of the device and thus elected to transition to the Jetson Nano platform.

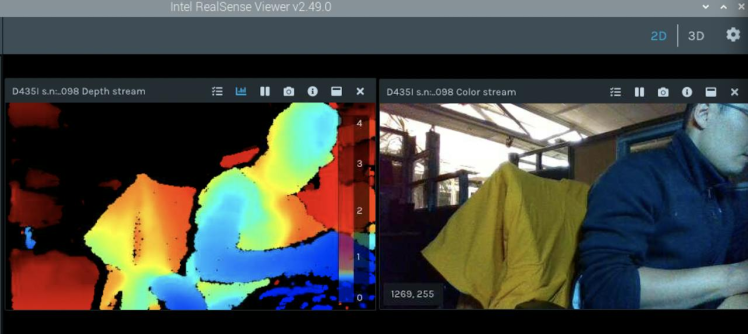

- In order to test the sensory equipment, we experimented with both RPLidar A2 and Intel Realsense D435i (depth camera), ultimately electing to use the depth camera due to its superior performance.

The calculation for motor selection and subsystem selection

Weight Estimation

● Driver 23gm*4=92gm

● Motor 180gm*4=720gm

● Arduino mega 37gm

● Battery 870gm

● Body 1760gm

● Buck converter 40gm

● Wheel 158gm*4=632gm

● Wire and sleeve 150gm

● Depth camera 73gm

● Jetson Nano 110gm

● Sensors and gps 100gm

Total approx Weight - 5204gm (5.204kg)(with some extra weight)

Choose the motorbased on thedatasheet below

Motor datasheet

Motor datasheet

The robot has a skid steering mechanism with 4 wheels

● To the total torque is 4*1.23Nm=4.92Nm

● Total maximum load current= 7.5A

● No load current is 0.8A

Total weight the robot can carry= Total wheel Torque/(Wheel radius*Co-efficient of friction)

Co-efficient of friction K

● For concrete 0.8

● For gravel 0.6

● Earth road dry 0.68

Now we chose a wheel with a wheel diameter of 125mm ( Radius of 0.0625m) So the total weight it can carry is = 4.92/(0.0625*0.8) Ideally it can carry 98.4 kg in concrete read but we can expect 40% of the ideal condition can carry

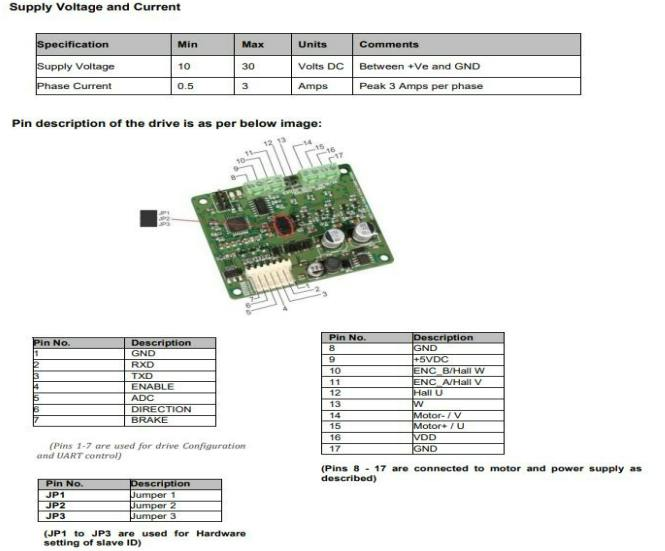

Motor Driver

Rhino Motion Controls DC Servo Drive RMCS-2303 with UART ASCII is a high-performance DC servo drive (10–30 V DC) designed for optimized operation of Rhino DC Servo motors with encoder feedback.

Driver datasheet

Driver datasheet

Battery

Li-Ion 11.1V 10000Mah (2C) with inbuilt charger-protection

● Discharge current upto 20A

● 12X Li-ion 3.7V 2500mAh cells (3S4P)

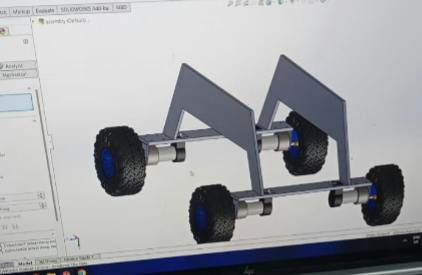

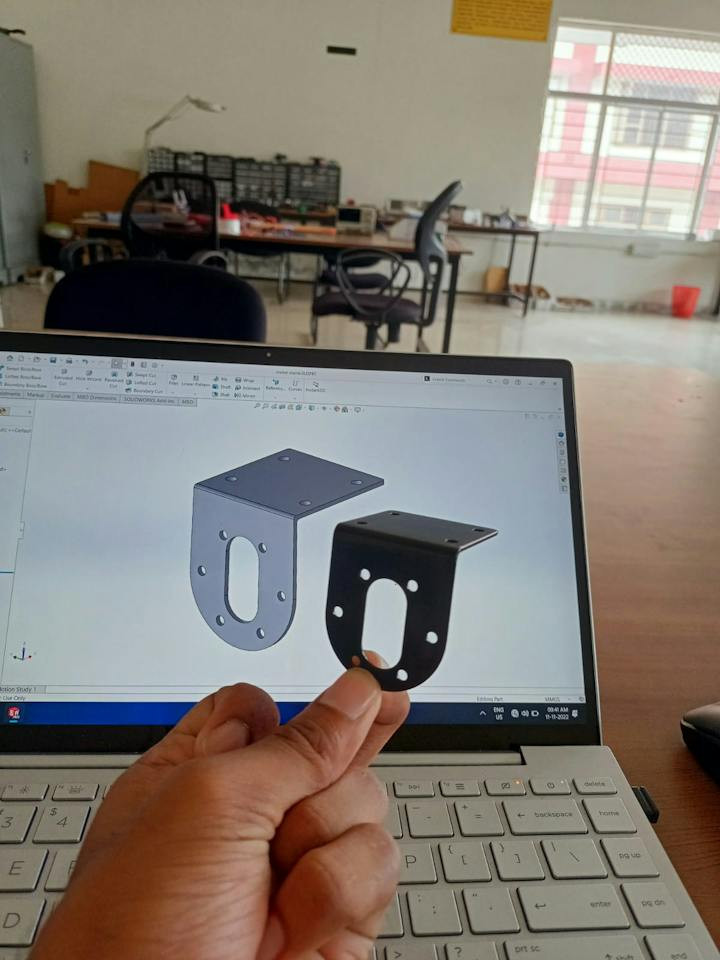

CAD Design

For designing we use Solidworks, Initially we start with a basic frame and motor mount like below

1 / 2 • Basic structure

Basic structure

Motor Mount

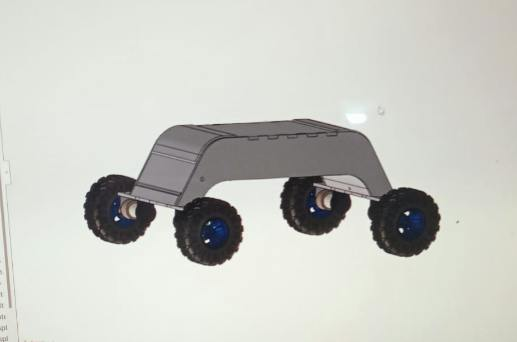

Then complete the over all structure in press fit design using 6mm acrylic sheet, After completing the structure the robot look like below.

Base Robot

Base Robot

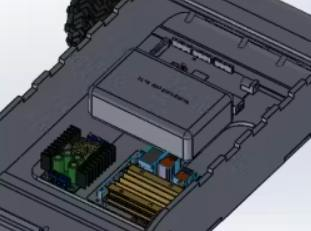

Now we have place all the hardware properly inside the Robot, For that you can download the design file of jetson nano arduino and driver from grabcad. And then assemble the rover in solidworks.

Hardware placement

Hardware placement

After Designing we manufacture this using Lacer cutting and 3D printing.

Lacer Cutting

Lacer Cutting

After some 3D printing we got a basic structure of the rover

Rover Structure

Rover Structure

After completing the printing of all mechanical part, Ready for assembly

Parts

Parts

After complete structural assembly of the rover

1 / 2

Assembly

Now moving on to mapping section and programming.

Mapping Device Selection

We have selected RPLidar A2 M8 for 2D mapping

Now it's Time for ROS installation in Jetson nano, For that we Muhammed Zain have written a comprehensive Documentation on ROS installation on jetson nano.

For installation please do refer the Introduction to ROS ? : Part 1

After Following the documentation now we have to install ROS Serial Arduino in Our Jetson Nano for that refer Rosserial Arduino : Part 2.

Now you have Successfully install ROS and ROS serial in your Jetson Nano.

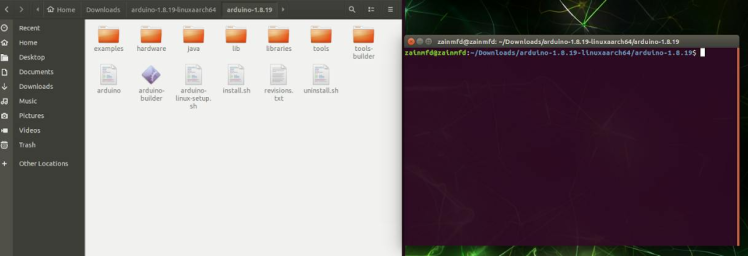

Install Arduino In Jetson Nano. For that click here

Now Follow the step below for download installation.

Select Linux ARM 64 bits

Select Linux ARM 64 bits

After Downloading Extract the file and open terminal inside the arduino-1.8.19

Extract the and open terminal Here

Extract the and open terminal Here

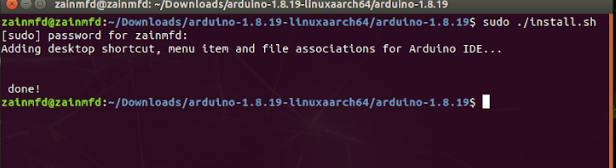

Then type code below

sudo ./install.shYou will see a result like below

After successful installation you can see this

After successful installation you can see this

Also after opening the Arduino ide add ROS library By referring the part 2 documentation.

Now time to setup ROSSerial node.

//This is a example code fron ROSWIKI

#include <ros.h>

#include <std_msgs/String.h>

ros::NodeHandle nh;

std_msgs::String str_msg;

ros::Publisher chatter("chatter", &str_msg);

char hello[13] = "hello world!";

void setup()

{

nh.initNode();

nh.advertise(chatter);

}

void loop()

{

str_msg.data = hello;

chatter.publish( &str_msg );

nh.spinOnce();

delay(1000);

}After this Run the code below

roscoreThen run rosserial with the port name

rosrun rosserial_python serial_node.py /dev/ttyUSB0Here the port is /dev/ttyUSB0

After this we have to receive the message on the subscriber for that enter the code below.

rostopic echo chatterThis will the return Hello World! in terminal like below.

Now We can Write the code to control the Motor that we select.

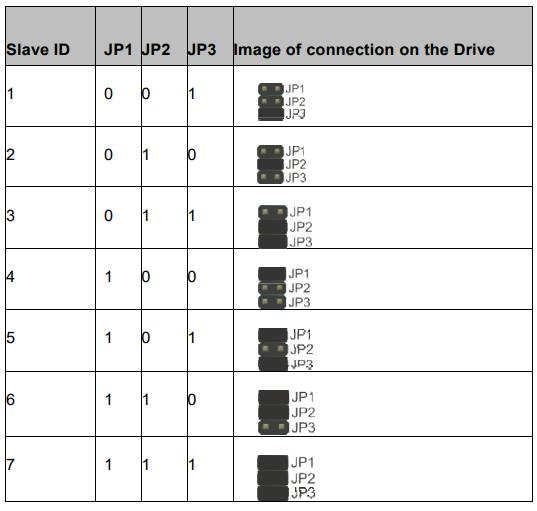

From the datasheet, all motors are controlling by each Slaves like below

Slave ID selection

Slave ID selection

We can connect 7 motors at a time to an Arduino Mega Via UART and we can control the motor according to the PWM signal.

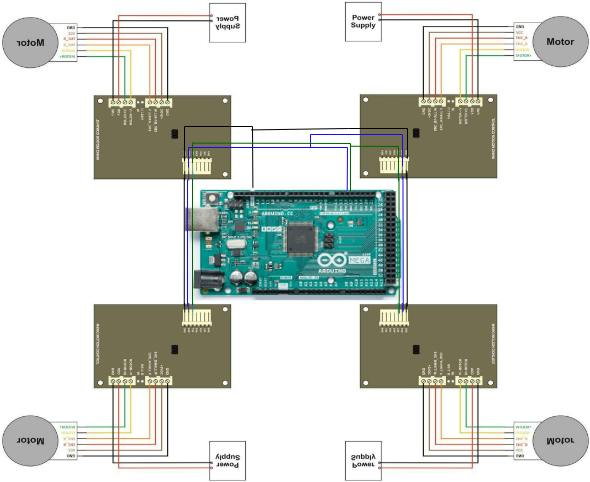

For Our rover we require 4 motor to manoeuvre in al terrain. accordingly we connect the motor and driver into Arduino Mega UART 2 (Rx2, Tx2).

Now it's time to Program this controller for this first refer the datasheet from Rhino

And also install the Arduino library for this driver.

Rosserial Arduino Setup For Motor Drive

Connect all four motors same as above circuit then program the circuit like below. in the below program we are controlling all the four wheel using skid steer mechanism.

#include <ros.h>

#include <RMCS2303drive.h>

#include <geometry_msgs/Twist.h>

#include <std_msgs/Int32.h>

#include "MapFloat.h"

RMCS2303 rmcs; // creation of motor driver object

// slave ids to be set on the motor driver refer to the manual in the reference section

byte slave_id1 = 1;

byte slave_id2 = 2;

byte slave_id3 = 3;

byte slave_id4 = 4;

ros::NodeHandle nh; // Node handle Object

geometry_msgs::Twist msg; // msg variable of data type twist

std_msgs::Int32 lwheel; // for storing left encoder value

std_msgs::Int32 rwheel; // for storing right encoder value

// Publisher object with topic names left_ticks and right_ticks for publishing Enc Values

ros::Publisher left_ticks("left_ticks", &lwheel);

ros::Publisher right_ticks("right_ticks", &rwheel);

// Make sure to specify the correct values here

//*******************************************

double wheel_rad = 0.0625, wheel_sep = 0.300; // wheel radius and wheel sepration in meters.

//******************************************

double w_r = 0, w_l = 0;

double speed_ang;

double speed_lin;

double leftPWM;

double rightPWM;

void messageCb(const geometry_msgs::Twist& msg) // cmd_vel callback function definition

{

speed_lin = max(min(msg.linear.x, 1.0f), -1.0f); // limits the linear x value from -1 to 1

speed_ang = max(min(msg.angular.z, 1.0f), -1.0f); // limits the angular z value from -1 to 1

// Kinematic equation for finding the left and right velocities

w_r = (speed_lin / wheel_rad) + ((speed_ang * wheel_sep) / (2.0 * wheel_rad));

w_l = (speed_lin / wheel_rad) - ((speed_ang * wheel_sep) / (2.0 * wheel_rad));

if (w_r == 0)

{

rightPWM = 0;

rmcs.Disable_Digital_Mode(slave_id1,0);

rmcs.Disable_Digital_Mode(slave_id2,0); // if right motor velocity is zero set right pwm to zero and disabling motors

rmcs.Disable_Digital_Mode(slave_id3,0);

rmcs.Disable_Digital_Mode(slave_id4,0);

}

else

rightPWM = mapFloat(fabs(w_r), 0.0, 18.0, 1500,17200); // mapping the right wheel velocity with respect to Motor PWM values

if (w_l == 0)

{

leftPWM = 0;

rmcs.Disable_Digital_Mode(slave_id1,0);

rmcs.Disable_Digital_Mode(slave_id2,0); // if left motor velocity is zero set left pwm to zero and disabling motors

rmcs.Disable_Digital_Mode(slave_id3,0);

rmcs.Disable_Digital_Mode(slave_id4,0);

}

else

leftPWM = mapFloat(fabs(w_l), 0.0, 18.0, 1500,

17200); // mapping the right wheel velocity with respect to Motor PWM values

rmcs.Speed(slave_id1,rightPWM);

rmcs.Speed(slave_id2,rightPWM);

rmcs.Speed(slave_id3,leftPWM);

rmcs.Speed(slave_id4,leftPWM);

if (w_r > 0 && w_l > 0)

{

rmcs.Enable_Digital_Mode(slave_id1,1);

rmcs.Enable_Digital_Mode(slave_id2,1); // forward condition

rmcs.Enable_Digital_Mode(slave_id3,0);

rmcs.Enable_Digital_Mode(slave_id4,0);

}

else if (w_r < 0 && w_l < 0)

{

rmcs.Enable_Digital_Mode(slave_id1,0);

rmcs.Enable_Digital_Mode(slave_id2,0); // backward condition

rmcs.Enable_Digital_Mode(slave_id3,1);

rmcs.Enable_Digital_Mode(slave_id4,1);

}

else if (w_r > 0 && w_l < 0)

{

rmcs.Enable_Digital_Mode(slave_id1,1);

rmcs.Enable_Digital_Mode(slave_id2,1); // Leftward condition

rmcs.Enable_Digital_Mode(slave_id3,1);

rmcs.Enable_Digital_Mode(slave_id4,1);

}

else if (w_r < 0 && w_l > 0)

{

rmcs.Enable_Digital_Mode(slave_id1,0);

rmcs.Enable_Digital_Mode(slave_id2,0); // rightward condition

rmcs.Enable_Digital_Mode(slave_id3,0);

rmcs.Enable_Digital_Mode(slave_id4,0);

}

else

{

rmcs.Brake_Motor(slave_id1,0);

rmcs.Brake_Motor(slave_id2,0);

rmcs.Brake_Motor(slave_id3,0);

rmcs.Brake_Motor(slave_id4,0); // if none of the above break the motors both in clockwise n anti-clockwise direction

rmcs.Brake_Motor(slave_id1,1);

rmcs.Brake_Motor(slave_id2,1);

rmcs.Brake_Motor(slave_id3,1);

rmcs.Brake_Motor(slave_id4,1);

}

}

ros::Subscriber<geometry_msgs::Twist> sub("cmd_vel",&messageCb); // creation of subscriber object sub for recieving the cmd_vel

void setup()

{

rmcs.Serial_selection(0); // 0 -> for Harware serial tx1 rx1 of arduino mega

rmcs.Serial0(9600);

rmcs.begin(&Serial2, 9600);

nh.initNode(); // initialzing the node handle object

nh.subscribe(sub); // subscribing to cmd vel with sub object

nh.advertise(left_ticks); // advertise the left_ticks topic

nh.advertise(right_ticks); // advertise the left_ticks topic

}

void loop()

{

lwheel.data =

rmcs.Position_Feedback(slave_id4); // the function reads the encoder value from the motor with slave id 4

rwheel.data =

-rmcs.Position_Feedback(slave_id2); // the function reads the encoder value from the motor with slave id 4

left_ticks.publish(&lwheel); // publish left enc values

right_ticks.publish(&rwheel); // publish right enc values

nh.spinOnce();

}For the above code refer Jerin Peter's documentation

After uploading this code to Arduino mega and wiring you have to install teleop twist keyboard using the below comment.

sudo apt-get install ros-melodic-teleop-twist-keyboardThen Run

roscoreThen run the serial node by entering the comment below

rosrun rosserial_python serial_node.py /dev/ttyACM0At this time you will get an error like the permission denied at that time enter the below comment and then type the comment that shown above,

sudo chmod 777 /dev/ttyACM0Now our subscriber is ready to receive the cmd_vel (comment velocity).

Then we have to run the publisher node that is teleop twist keyboard. For that Run the Code below.

rosrun teleop_twist_keyboard teleop_twist_keyboard.pyThen by using the keyboard control you will get a result like below.

Motor TestAlso now check the working with The over all robot

Initial testingAfter this testing we found that we have to add a triangulation member of top of each wheel to make the rover more structurally stable like below.

Weak point

Weak point

For to avoid the breaking added member

Added Member

Added Member

This member make the structure strong.

Now connect the lidar with jetson nano and visualise the data in Rviz

Now install the Rp lidar package for ROS, that open terminal and type the comment below.

cd catkin_ws/srcThen clone the github repo here, for cloning enter the comment below.

git clone https://github.com/robopeak/rplidar_rosAfter successful cloning you will get a result like below.

Installation of rplidar_ros

Installation of rplidar_ros

Then make the workspace by moving back to catkin_ws, Then type

catkin_makeThis will make the sourced file

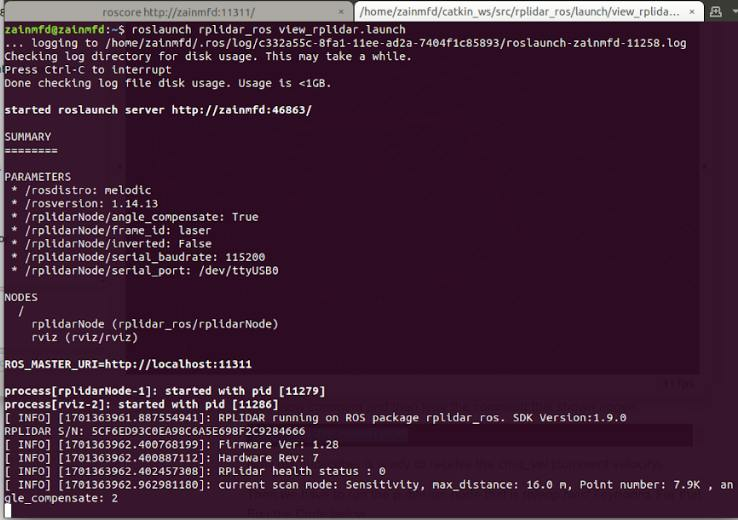

After this we want to run the rplidar_viewer, for that enter the code below

roslaunch rplidar_ros view_rplidar.launchthis will result like below, if you found any error give the permission to the port and run the above comment again.

view_rplidar running

view_rplidar running

this will also give a result on rviz like below

rplidar ViewerIMU Sensor Setup(BNO 055)

Connection

- Connect BNO055 Vin to pin 17 (3.3V) of the Jetson Nano.

- Connect BNO055 GND to pin 25 (GND) of the Jetson Nano.

- Connect BNO055 SDA to pin 3 (SDA) of the Jetson Nano.

- Connect BNO055 SCL to pin 5 (SCL) of the Jetson Nano.

To setup and install go to the workspace by entering the below comment

cd ~/catkin_ws/srcThen clone the repository

git clone https://github.com/dheera/ros-imu-bno055.gitAlso, for visualisation of IMU data in RVIZ install theIMU plugin using the below comment.

sudo apt-get install ros-melodic-rviz-imu-pluginAfter this make the the newly installed package, for that go back to the workspace by entering the below comment.

cd ~/catkin_ws/Then make the package using the below comment

catkin_makeNow you successfully install the bno055 ROS package.

For to execute this, We have to run

roscoreThen execute a the static tf publisher for that enter the comment below

rosrun tf static_transform_publisher 0 0 0 0 0 0 imuNow, launch the IMU by entering the comment below.

roslaunch imu_bno055 imu.launchNow we can see the data from our imu sensor on another terminal using the comment below

rostopic echo /imu/dataThen open the Rviz

rvizThen, Change the frame to imu.

Click the Add button in the bottom left of rviz window.

Click imu under rviz_imu_plugin.

Click ok.

Change the topic of the imu to /imu/data.

Now you can see the result like beow.

IMU data visualization in rvizNow we setup basic sensor and all all actuator, Now the Time for mapping.

For Mapping initially we tried with Gmapping But due to the higher reliability of map on inaccurate wheel odometry map was getting distorted. So we move on with Hector mapping.

For installation goto workspace

cd ~/catkin_ws/srcThen clone the repository

git clone https://github.com/tu-darmstadt-ros-pkg/hector_slam.gitNow go back to catkin_ws and make the package.

For to edit the parameter we have to goto the mapping_default.launch file.

sudo gedit ~/catkin_ws/src/hector_slam/hector_mapping/launch/mapping_default.launchAnd Edit the file like below.

<arg name="base_frame" default="base_footprint"/>

<arg name="odom_frame" default="nav"/>to

<arg name="base_frame" default="base_link"/>

<arg name="odom_frame" default="base_link"/>Also,

<!--<node pkg="tf" type="static_transform_publisher" name="map_nav_broadcaster" args="0 0 0 0 0 0 map nav 100"/>-->Remove the comment and add the cordinate

<node pkg="tf" type="static_transform_publisher" name="base_to_laser_broadcaster" args="0.05228 0 0.1296 0 0 0 base_link laser 100"/>Then save this file.

Now map the environment.

For this Run

roscore

rosrun rosserial_python serial_node.py /dev/ttyACM0

rosrun teleop_twist_keyboard teleop_twist_keyboard.py

roslaunch rplidar_ros rplidar.launch

roslaunch hector_mapping mapping_default.launch

rvizThen on the left bottom click on Add then goto by topic then add map.

Then navigate the robot using teleop and map the area, After successful mapping you will get a map of you environment like below in RVIZ.

Video of mapping

Hector mappingFinal Map

Map of our college Basketball Court

Map of our college Basketball Court

For to save this map enter the comment below:

rosrun map_server map_saver -f mapnamethen it will save the map.

Now we can setup the navigation stack, For that we Are using move_base and amcl, Also setup the driver train controller.

for to launch the tf_control use the below launch file

<launch>

<!-- odometry-ish-->

<param name="~base_frame_id" value="base_footprint"/>

<param name="~odom_frame_id" value="odom"/>

<param name="encoder_min" value="-1073741824"/>

<param name="encoder_max" value="1073741824"/>

<param name="ticks_meter" value="913683" />

<param name="~base_width" value="0.41" />

<node pkg="differential_drive" type="diff_tf.py" name="diff_drive" output="screen">

<!-- <remap from="/odom" to="/wheelodom"/> -->

</node>

<node pkg="tf2_ros" type="static_transform_publisher" name="base_footprint_to_base_link" args="0 0 0.15 0 0 0 /base_footprint /base_link"/>

</launch>Also add the standard differential tf python code in the same workspace.

#!/usr/bin/env python

"""

diff_tf.py - follows the output of a wheel encoder and

creates tf and odometry messages.

some code borrowed from the arbotix diff_controller script

A good reference: http://rossum.sourceforge.net/papers/DiffSteer/

Copyright (C) 2012 Jon Stephan.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

You should have received a copy of the GNU General Public License

along with this program. If not, see <http://www.gnu.org/licenses/>.

----------------------------------

Portions of this code borrowed from the arbotix_python diff_controller.

diff_controller.py - controller for a differential drive

Copyright (c) 2010-2011 Vanadium Labs LLC. All right reserved.

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

* Redistributions of source code must retain the above copyright

notice, this list of conditions and the following disclaimer.

* Redistributions in binary form must reproduce the above copyright

notice, this list of conditions and the following disclaimer in the

documentation and/or other materials provided with the distribution.

* Neither the name of Vanadium Labs LLC nor the names of its

contributors may be used to endorse or promote products derived

from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND

ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED

WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

DISCLAIMED. IN NO EVENT SHALL VANADIUM LABS BE LIABLE FOR ANY DIRECT, INDIRECT,

INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA,

OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF

LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE

OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF

ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

"""

import rospy

import roslib

#roslib.load_manifest('differential_drive')

from math import sin, cos, pi

from geometry_msgs.msg import Quaternion

from geometry_msgs.msg import Twist

from nav_msgs.msg import Odometry

from tf.broadcaster import TransformBroadcaster

from std_msgs.msg import Int32

#############################################################################

class DiffTf:

#############################################################################

#############################################################################

def __init__(self):

#############################################################################

rospy.init_node("diff_tf")

self.nodename = rospy.get_name()

rospy.loginfo("-I- %s started" % self.nodename)

#### parameters #######

self.rate = rospy.get_param('~rate',10.0) # the rate at which to publish the transform

self.ticks_meter = float(rospy.get_param('ticks_meter', 612377)) # The number of wheel encoder ticks per meter of travel

self.base_width = float(rospy.get_param('~base_width', 0.245)) # The wheel base width in meters

self.base_frame_id = rospy.get_param('~base_frame_id','base_link') # the name of the base frame of the robot

self.odom_frame_id = rospy.get_param('~odom_frame_id', 'odom') # the name of the odometry reference frame

self.encoder_min = rospy.get_param('encoder_min', -32768)

self.encoder_max = rospy.get_param('encoder_max', 32768)

self.encoder_low_wrap = rospy.get_param('wheel_low_wrap', (self.encoder_max - self.encoder_min) * 0.3 + self.encoder_min )

self.encoder_high_wrap = rospy.get_param('wheel_high_wrap', (self.encoder_max - self.encoder_min) * 0.7 + self.encoder_min )

self.t_delta = rospy.Duration(1.0/self.rate)

self.t_next = rospy.Time.now() + self.t_delta

# internal data

self.enc_left = None # wheel encoder readings

self.enc_right = None

self.left = 0 # actual values coming back from robot

self.right = 0

self.lmult = 0

self.rmult = 0

self.prev_lencoder = 0

self.prev_rencoder = 0

self.x = 0 # position in xy plane

self.y = 0

self.th = 0

self.dx = 0 # speeds in x/rotation

self.dr = 0

self.then = rospy.Time.now()

# subscriptions

rospy.Subscriber("left_ticks", Int32, self.lwheelCallback)

rospy.Subscriber("right_ticks", Int32, self.rwheelCallback)

self.odomPub = rospy.Publisher("odom", Odometry,queue_size=10)

self.odomBroadcaster = TransformBroadcaster()

#############################################################################

def spin(self):

#############################################################################

r = rospy.Rate(self.rate)

while not rospy.is_shutdown():

self.update()

r.sleep()

#############################################################################

def update(self):

#############################################################################

now = rospy.Time.now()

if now > self.t_next:

elapsed = now - self.then

self.then = now

elapsed = elapsed.to_sec()

# calculate odometry

if self.enc_left == None:

d_left = 0

d_right = 0

else:

d_left = (self.left - self.enc_left) / self.ticks_meter

d_right = (self.right - self.enc_right) / self.ticks_meter

self.enc_left = self.left

self.enc_right = self.right

# distance traveled is the average of the two wheels

d = ( d_left + d_right ) / 2

# this approximation works (in radians) for small angles

th = ( d_right - d_left ) / self.base_width

# calculate velocities

self.dx = d / elapsed

self.dr = th / elapsed

if (d != 0):

# calculate distance traveled in x and y

x = cos( th ) * d

y = -sin( th ) * d

# calculate the final position of the robot

self.x = self.x + ( cos( self.th ) * x - sin( self.th ) * y )

self.y = self.y + ( sin( self.th ) * x + cos( self.th ) * y )

if( th != 0):

self.th = self.th + th

# publish the odom information

quaternion = Quaternion()

quaternion.x = 0.0

quaternion.y = 0.0

quaternion.z = sin( self.th / 2 )

quaternion.w = cos( self.th / 2 )

self.odomBroadcaster.sendTransform(

(self.x, self.y, 0),

(quaternion.x, quaternion.y, quaternion.z, quaternion.w),

rospy.Time.now(),

self.base_frame_id,

self.odom_frame_id

)

odom = Odometry()

odom.header.stamp = now

odom.header.frame_id = self.odom_frame_id

odom.pose.pose.position.x = self.x

odom.pose.pose.position.y = self.y

odom.pose.pose.position.z = 0

odom.pose.pose.orientation = quaternion

odom.child_frame_id = self.base_frame_id

odom.twist.twist.linear.x = self.dx

odom.twist.twist.linear.y = 0

odom.twist.twist.angular.z = self.dr

self.odomPub.publish(odom)

#############################################################################

def lwheelCallback(self, msg):

#############################################################################

enc = msg.data

if (enc < self.encoder_low_wrap and self.prev_lencoder > self.encoder_high_wrap):

self.lmult = self.lmult + 1

if (enc > self.encoder_high_wrap and self.prev_lencoder < self.encoder_low_wrap):

self.lmult = self.lmult - 1

self.left = 1.0 * (enc + self.lmult * (self.encoder_max - self.encoder_min))

self.prev_lencoder = enc

#############################################################################

def rwheelCallback(self, msg):

#############################################################################

enc = msg.data

if(enc < self.encoder_low_wrap and self.prev_rencoder > self.encoder_high_wrap):

self.rmult = self.rmult + 1

if(enc > self.encoder_high_wrap and self.prev_rencoder < self.encoder_low_wrap):

self.rmult = self.rmult - 1

self.right = 1.0 * (enc + self.rmult * (self.encoder_max - self.encoder_min))

self.prev_rencoder = enc

#############################################################################

#############################################################################

if __name__ == '__main__':

""" main """

diffTf = DiffTf()

diffTf.spin()Then add Move_base to the workspace

For that refer the official documentation from ROS Wiki.

Then also add amcl along with the move base in the same workspace

Also there will be a standerd pathplaning that come along which is called TEB.

This come with all in one package from ROS Link.

Now we are good to go but we have to fuse the wheel odom, imu and lidar for that refer to Addsion documentation on Sensor Fusion Using the ROS Robot Pose EKF Package.

Now we can execute all the file for the Autonomous navigation.

roscore

roslaunch hector_mapping mapping_default.launch

sudo chmod 777 /dev/ttyUSB0

roslaunch rplidar_ros rplidar.launch

rosrun teleop_twist_keyboard teleop_twist_keyboard.py

sudo chmod 777 /dev/ttyACM0

rosrun rosserial_python serial_node.py /dev/ttyACM0

roslaunch hector_mapping mapping_default.launch

roslaunch differential_drive tf_controller.launch

roslaunch navigation move_base.launch

roslaunch navigation amcl.launch

rosrun map_server map_server mapname.yaml

rvizFull execution video of autonomous navigation

Navigation 1Navigation 2Testing in outdoor in multiple terrain

Terrain 1

Terrain 1Terrain 2

Terrain 2Terrain 3

Terrain 3Now to improve the Path panning we introduce A* Algorithm

Path Planning using A* Algorithm

Step 1: Write a global planner

catkin_create_pkg relaxed astar nav_core roscpp rospy std_msgsStep 2: Write the corresponding header files and source files under the function package

We named it as RAstar_ros.h

#include <netdb.h>

#include <netinet/in.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/socket.h>

#include <sys/types.h>

#include <unistd.h>

#include <string>

/** include ros libraries**********************/

#include <actionlib/client/simple_action_client.h>

#include <geometry_msgs/PoseStamped.h>

#include <geometry_msgs/PoseWithCovarianceStamped.h>

#include <geometry_msgs/Twist.h>

#include <move_base_msgs/MoveBaseAction.h>

#include <move_base_msgs/MoveBaseActionGoal.h>

#include <move_base_msgs/MoveBaseGoal.h>

#include <nav_msgs/GetPlan.h>

#include <nav_msgs/OccupancyGrid.h>

#include <nav_msgs/Odometry.h>

#include <nav_msgs/Path.h>

#include <ros/ros.h>

#include <tf/tf.h>

#include <tf/transform_datatypes.h>

#include <tf/transform_listener.h>

#include "sensor_msgs/LaserScan.h"

#include "sensor_msgs/PointCloud2.h"

/** ********************************************/

#include <boost/foreach.hpp>

//#define forEach BOOST_FOREACH

/** for global path planner interface */

#include <angles/angles.h>

#include <costmap_2d/costmap_2d.h>

#include <costmap_2d/costmap_2d_ros.h>

#include <geometry_msgs/PoseStamped.h>

#include <nav_core/base_global_planner.h>

//#include <pcl_conversions/pcl_conversions.h>

#include <base_local_planner/costmap_model.h>

#include <base_local_planner/world_model.h>

#include <set>

using namespace std;

using std::string;

#ifndef RASTAR_ROS_CPP

#define RASTAR_ROS_CPP

/**

* @struct cells

* @brief A struct that represents a cell and its fCost.

*/

struct cells {

int currentCell;

float fCost;

};

// STEP1-2.your class should inherits from the interface

// "nav_core::BaseGlobalPlanner"

namespace RAstar_planner {

class RAstarPlannerROS : public nav_core::BaseGlobalPlanner {

public:

RAstarPlannerROS(ros::NodeHandle&); // this constructor is may be not needed

RAstarPlannerROS();

RAstarPlannerROS(std::string name, costmap_2d::Costmap2DROS* costmap_ros);

ros::NodeHandle ROSNodeHandle;

/** 公有继承 nav_core::BaseGlobalPlanner 接口 **/

/** overriden classes from interface nav_core::BaseGlobalPlanner **/

void initialize(std::string name, costmap_2d::Costmap2DROS* costmap_ros);

bool makePlan(const geometry_msgs::PoseStamped& start,

const geometry_msgs::PoseStamped& goal,

std::vector<geometry_msgs::PoseStamped>& plan);

void getCorrdinate(float& x, float& y);

int convertToCellIndex(float x, float y);

void convertToCoordinate(int index, float& x, float& y);

bool isCellInsideMap(float x, float y);

void mapToWorld(double mx, double my, double& wx, double& wy);

vector<int> RAstarPlanner(int startCell, int goalCell);

vector<int> findPath(int startCell, int goalCell, float g_score[]);

vector<int> constructPath(int startCell, int goalCell, float g_score[]);

float calculateHCost(int cellID, int goalCell) {

int x1 = getCellRowID(goalCell);

int y1 = getCellColID(goalCell);

int x2 = getCellRowID(cellID);

int y2 = getCellColID(cellID);

return abs(x1 - x2) + abs(y1 - y2);

// return min(abs(x1-x2),abs(y1-y2))*sqrt(2) +

// max(abs(x1-x2),abs(y1-y2))-min(abs(x1-x2),abs(y1-y2));

}

void addNeighborCellToOpenList(multiset<cells>& OPL, int neighborCell,

int goalCell, float g_score[]);

vector<int> findFreeNeighborCell(int CellID);

bool isStartAndGoalCellsValid(int startCell, int goalCell);

float getMoveCost(int CellID1, int CellID2);

float getMoveCost(int i1, int j1, int i2, int j2);

bool isFree(int CellID); // returns true if the cell is Free

bool isFree(int i, int j);

int getCellIndex(int i,

int j) // get the index of the cell to be used in Path

{

return (i * width) + j;

}

int getCellRowID(int index) // get the row ID from cell index

{

return index / width;

}

int getCellColID(int index) // get colunm ID from cell index

{

return index % width;

}

float originX;

float originY;

float resolution;

costmap_2d::Costmap2DROS* costmap_ros_;

double step_size_, min_dist_from_robot_;

costmap_2d::Costmap2D* costmap_;

// base_local_planner::WorldModel* world_model_;

bool initialized_;

int width;

int height;

};

}; // namespace RAstar_planner

#endifStep 3: Creating the C++ file

- The core ROS libraries required by the path planner need to be included. The path planner needs to use <costmap_2d/costmap_2d_ros.h> and <costmap_2d/costmap_2d.h> and the costmap 2d::Costmap2D class is used as a input map class. When defined as a plugin, the route planner class will automatically have access to this map.

- <nav_core/base_global_planner.h> is used to import the interface nav_core::BaseGlobalPlanner, which is the parent class that the plug-in must inherit.

- Then improve the initialize and makePlan functions in the cpp file.RAstar_ros.cpp

#include <netdb.h>

#include <netinet/in.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/socket.h>

#include <sys/types.h>

#include <unistd.h>

#include <fstream>

#include <iomanip>

#include <iostream>

#include <string>

// STEP1-1.include core ROS libraries -> nav_core roscpp rospy std_msgs

#include "RAstar_ros.h"

// STEP1-4.include <pluginlib/class_list_macros.h>

#include <pluginlib/class_list_macros.h>

// register this planner as a BaseGlobalPlanner plugin

/*注册一个插件

STEP1-5.register this planner as a BaseGlobalPlanner plugin by adding

PLUGINLIB_EXPORT_CLASS(RAstar_planner::RAstarPlannerROS,nav_core::BaseGlobalPlanner)*/

PLUGINLIB_EXPORT_CLASS(RAstar_planner::RAstarPlannerROS,

nav_core::BaseGlobalPlanner)

int value;

int mapSize;

bool* OGM;

static const float INFINIT_COST = INT_MAX; //!< cost of non connected nodes

float infinity = std::numeric_limits<float>::infinity();

float tBreak; // coefficient for breaking ties

ofstream MyExcelFile("RA_result.xlsx", ios::trunc);

int clock_gettime(clockid_t clk_id, struct timespect* tp);

timespec diff(timespec start, timespec end) {

timespec temp;

if ((end.tv_nsec - start.tv_nsec) < 0) {

temp.tv_sec = end.tv_sec - start.tv_sec - 1;

temp.tv_nsec = 1000000000 + end.tv_nsec - start.tv_nsec;

} else {

temp.tv_sec = end.tv_sec - start.tv_sec;

temp.tv_nsec = end.tv_nsec - start.tv_nsec;

}

return temp;

}

inline vector<int> findFreeNeighborCell(int CellID);

namespace RAstar_planner {

// Default Constructor

RAstarPlannerROS::RAstarPlannerROS() {}

RAstarPlannerROS::RAstarPlannerROS(ros::NodeHandle& nh) { ROSNodeHandle = nh; }

// STEP1-3.overriden the methods "initialize" and "makePlan"

RAstarPlannerROS::RAstarPlannerROS(std::string name,

costmap_2d::Costmap2DROS* costmap_ros) {

initialize(name, costmap_ros);

}

void RAstarPlannerROS::initialize(std::string name,

costmap_2d::Costmap2DROS* costmap_ros) {

if (!initialized_) {

costmap_ros_ = costmap_ros;

costmap_ = costmap_ros_->getCostmap();

ros::NodeHandle private_nh("~/" + name);

originX = costmap_->getOriginX();

originY = costmap_->getOriginY();

width = costmap_->getSizeInCellsX();

height = costmap_->getSizeInCellsY();

resolution = costmap_->getResolution();

mapSize = width * height;

tBreak = 1 + 1 / (mapSize);

value = 0;

OGM = new bool[mapSize];

for (unsigned int iy = 0; iy < costmap_->getSizeInCellsY(); iy++) {

for (unsigned int ix = 0; ix < costmap_->getSizeInCellsX(); ix++) {

unsigned int cost = static_cast<int>(costmap_->getCost(ix, iy));

// cout<<cost;

if (cost == 0)

OGM[iy * width + ix] = true;

else

OGM[iy * width + ix] = false;

}

}

MyExcelFile << "StartIDtStartXtStartYtGoalIDtGoalXtGoalYtPlannertime("

"ms)tpathLengthtnumberOfCellst"

<< endl;

ROS_INFO("RAstar planner initialized successfully");

initialized_ = true;

} else

ROS_WARN("This planner has already been initialized... doing nothing");

}

// STEP1-3.1Here we override the "makePlan" method,in which you should write or

// call your path planning algorithm

bool RAstarPlannerROS::makePlan(const geometry_msgs::PoseStamped& start,

const geometry_msgs::PoseStamped& goal,

std::vector<geometry_msgs::PoseStamped>& plan) {

if (!initialized_) {

ROS_ERROR(

"The planner has not been initialized, please call initialize() to use "

"the planner");

return false;

}

ROS_DEBUG("Got a start: %.2f, %.2f, and a goal: %.2f, %.2f",

start.pose.position.x, start.pose.position.y, goal.pose.position.x,

goal.pose.position.y);

plan.clear();

if (goal.header.frame_id != costmap_ros_->getGlobalFrameID()) {

ROS_ERROR(

"This planner as configured will only accept goals in the %s frame, "

"but a goal was sent in the %s frame.",

costmap_ros_->getGlobalFrameID().c_str(), goal.header.frame_id.c_str());

return false;

}

tf::Stamped<tf::Pose> goal_tf;

tf::Stamped<tf::Pose> start_tf;

poseStampedMsgToTF(goal, goal_tf);

poseStampedMsgToTF(start, start_tf);

// convert the start and goal positions

float startX = start.pose.position.x;

float startY = start.pose.position.y;

float goalX = goal.pose.position.x;

float goalY = goal.pose.position.y;

getCorrdinate(startX, startY);

getCorrdinate(goalX, goalY);

int startCell;

int goalCell;

if (isCellInsideMap(startX, startY) && isCellInsideMap(goalX, goalY)) {

startCell = convertToCellIndex(startX, startY);

goalCell = convertToCellIndex(goalX, goalY);

MyExcelFile << startCell << "t" << start.pose.position.x << "t"

<< start.pose.position.y << "t" << goalCell << "t"

<< goal.pose.position.x << "t" << goal.pose.position.y;

} else {

ROS_WARN("the start or goal is out of the map");

return false;

}

/////////////////////////////////////////////////////////

// call relaxed Astar global planner

if (isStartAndGoalCellsValid(startCell, goalCell)) {

vector<int> bestPath;

bestPath.clear();

//两个参数为起始点和终点

bestPath = RAstarPlanner(startCell, goalCell);

// if the global planner find a path

if (bestPath.size() > 0) {

// convert the path

for (int i = 0; i < bestPath.size(); i++) {

float x = 0.0;

float y = 0.0;

int index = bestPath[i];

convertToCoordinate(index, x, y);

geometry_msgs::PoseStamped pose = goal;

// your path should be stored in the "plan" vector

pose.pose.position.x = x;

pose.pose.position.y = y;

pose.pose.position.z = 0.0;

pose.pose.orientation.x = 0.0;

pose.pose.orientation.y = 0.0;

pose.pose.orientation.z = 0.0;

pose.pose.orientation.w = 1.0;

plan.push_back(pose);

}

float path_length = 0.0;

std::vector<geometry_msgs::PoseStamped>::iterator it = plan.begin();

geometry_msgs::PoseStamped last_pose;

last_pose = *it;

it++;

for (; it != plan.end(); ++it) {

path_length += hypot((*it).pose.position.x - last_pose.pose.position.x,

(*it).pose.position.y - last_pose.pose.position.y);

last_pose = *it;

}

cout << "The global path length: " << path_length << " meters" << endl;

MyExcelFile << "t" << path_length << "t" << plan.size() << endl;

// publish the plan

return true;

}

else {

ROS_WARN("The planner failed to find a path, choose other goal position");

return false;

}

}

else {

ROS_WARN("Not valid start or goal");

return false;

}

}

void RAstarPlannerROS::getCorrdinate(float& x, float& y) {

x = x - originX;

y = y - originY;

}

int RAstarPlannerROS::convertToCellIndex(float x, float y) {

int cellIndex;

float newX = x / resolution;

float newY = y / resolution;

cellIndex = getCellIndex(newY, newX);

return cellIndex;

}

void RAstarPlannerROS::convertToCoordinate(int index, float& x, float& y) {

x = getCellColID(index) * resolution;

y = getCellRowID(index) * resolution;

x = x + originX;

y = y + originY;

}

bool RAstarPlannerROS::isCellInsideMap(float x, float y) {

bool valid = true;

if (x > (width * resolution) || y > (height * resolution)) valid = false;

return valid;

}

void RAstarPlannerROS::mapToWorld(double mx, double my, double& wx,

double& wy) {

costmap_2d::Costmap2D* costmap = costmap_ros_->getCostmap();

wx = costmap->getOriginX() + mx * resolution;

wy = costmap->getOriginY() + my * resolution;

}

vector<int> RAstarPlannerROS::RAstarPlanner(int startCell, int goalCell) {

vector<int> bestPath;

// float g_score [mapSize][2];

float g_score[mapSize];

for (uint i = 0; i < mapSize; i++) g_score[i] = infinity;

timespec time1, time2;

/* take current time here */

clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &time1);

bestPath = findPath(startCell, goalCell, g_score);

clock_gettime(CLOCK_PROCESS_CPUTIME_ID, &time2);

cout << "time to generate best global path by Relaxed A* = "

<< (diff(time1, time2).tv_sec) * 1e3 +

(diff(time1, time2).tv_nsec) * 1e-6

<< " microseconds" << endl;

MyExcelFile << "t"

<< (diff(time1, time2).tv_sec) * 1e3 +

(diff(time1, time2).tv_nsec) * 1e-6;

return bestPath;

}

/*******************************************************************************/

// Function Name: findPath

// Inputs: the map layout, the start and the goal Cells and a boolean to

// indicate if we will use break ties or not Output: the best path Description:

// it is used to generate the robot free path

/*********************************************************************************/

vector<int> RAstarPlannerROS::findPath(int startCell, int goalCell,

float g_score[]) {

value++;

vector<int> bestPath;

vector<int> emptyPath;

cells CP;

multiset<cells> OPL;

int currentCell;

// calculate g_score and f_score of the start position

g_score[startCell] = 0;

CP.currentCell = startCell;

CP.fCost = g_score[startCell] + calculateHCost(startCell, goalCell);

// add the start cell to the open list

OPL.insert(CP);

currentCell = startCell;

// while the open list is not empty continuie the search or g_score(goalCell)

// is equal to infinity

while (!OPL.empty() && g_score[goalCell] == infinity) {

// choose the cell that has the lowest cost fCost in the open set which is

// the begin of the multiset

currentCell = OPL.begin()->currentCell;

// remove the currentCell from the openList

OPL.erase(OPL.begin());

// search the neighbors of the current Cell

vector<int> neighborCells;

neighborCells = findFreeNeighborCell(currentCell);

for (uint i = 0; i < neighborCells.size();

i++) // for each neighbor v of current cell

{

// if the g_score of the neighbor is equal to INF: unvisited cell

if (g_score[neighborCells[i]] == infinity) {

g_score[neighborCells[i]] =

g_score[currentCell] + getMoveCost(currentCell, neighborCells[i]);

addNeighborCellToOpenList(OPL, neighborCells[i], goalCell, g_score);

} // end if

} // end for

} // end while

if (g_score[goalCell] !=

infinity) // if g_score(goalcell)==INF : construct path

{

bestPath = constructPath(startCell, goalCell, g_score);

return bestPath;

} else {

cout << "Failure to find a path !" << endl;

return emptyPath;

}

}

/*******************************************************************************/

// Function Name: constructPath

// Inputs: the start and the goal Cells

// Output: the best path

// Description: it is used to construct the robot path

/*********************************************************************************/

vector<int> RAstarPlannerROS::constructPath(int startCell, int goalCell,

float g_score[]) {

vector<int> bestPath;

vector<int> path;

path.insert(path.begin() + bestPath.size(), goalCell);

int currentCell = goalCell;

while (currentCell != startCell) {

vector<int> neighborCells;

neighborCells = findFreeNeighborCell(currentCell);

vector<float> gScoresNeighbors;

for (uint i = 0; i < neighborCells.size(); i++)

gScoresNeighbors.push_back(g_score[neighborCells[i]]);

int posMinGScore =

distance(gScoresNeighbors.begin(),

min_element(gScoresNeighbors.begin(), gScoresNeighbors.end()));

currentCell = neighborCells[posMinGScore];

// insert the neighbor in the path

path.insert(path.begin() + path.size(), currentCell);

}

for (uint i = 0; i < path.size(); i++)

bestPath.insert(bestPath.begin() + bestPath.size(),

path[path.size() - (i + 1)]);

return bestPath;

}

/*******************************************************************************/

// Function Name: calculateHCost

// Inputs:the cellID and the goalCell

// Output: the distance between the current cell and the goal cell

// Description: it is used to calculate the hCost

/*********************************************************************************/

/*

float RAstarPlannerROS::calculateHCost(int cellID, int goalCell)

{

int x1=getCellRowID(goalCell);

int y1=getCellColID(goalCell);

int x2=getCellRowID(cellID);

int y2=getCellColID(cellID);

//if(getNeighborNumber()==4)

//The diagonal shortcut distance between two grid points (x1,y1) and (x2,y2)

is:

// return min(abs(x1-x2),abs(y1-y2))*sqrt(2) +

max(abs(x1-x2),abs(y1-y2))-min(abs(x1-x2),abs(y1-y2));

//else

//manhatten distance for 8 neighbor

return abs(x1-x2)+abs(y1-y2);

}

*/

/*******************************************************************************/

// Function Name: addNeighborCellToOpenList

// Inputs: the open list, the neighbors Cell, the g_score matrix, the goal cell

// Output:

// Description: it is used to add a neighbor Cell to the open list

/*********************************************************************************/

void RAstarPlannerROS::addNeighborCellToOpenList(multiset<cells>& OPL,

int neighborCell, int goalCell,

float g_score[]) {

cells CP;

CP.currentCell = neighborCell; // insert the neighbor cell

CP.fCost = g_score[neighborCell] + calculateHCost(neighborCell, goalCell);

OPL.insert(CP);

// multiset<cells>::iterator it = OPL.lower_bound(CP);

// multiset<cells>::iterator it = OPL.upper_bound(CP);

// OPL.insert( it, CP );

}

/*******************************************************************************

* Function Name: findFreeNeighborCell

* Inputs: the row and columun of the current Cell

* Output: a vector of free neighbor cells of the current cell

* Description:it is used to find the free neighbors Cells of a the current Cell

*in the grid Check Status: Checked by Anis, Imen and Sahar

*********************************************************************************/

vector<int> RAstarPlannerROS::findFreeNeighborCell(int CellID) {

int rowID = getCellRowID(CellID);

int colID = getCellColID(CellID);

int neighborIndex;

vector<int> freeNeighborCells;

for (int i = -1; i <= 1; i++)

for (int j = -1; j <= 1; j++) {

// check whether the index is valid

if ((rowID + i >= 0) && (rowID + i < height) && (colID + j >= 0) &&

(colID + j < width) && (!(i == 0 && j == 0))) {

neighborIndex = getCellIndex(rowID + i, colID + j);

if (isFree(neighborIndex)) freeNeighborCells.push_back(neighborIndex);

}

}

return freeNeighborCells;

}

/*******************************************************************************/

// Function Name: isStartAndGoalCellsValid

// Inputs: the start and Goal cells

// Output: true if the start and the goal cells are valid

// Description: check if the start and goal cells are valid

/*********************************************************************************/

bool RAstarPlannerROS::isStartAndGoalCellsValid(int startCell, int goalCell) {

bool isvalid = true;

bool isFreeStartCell = isFree(startCell);

bool isFreeGoalCell = isFree(goalCell);

if (startCell == goalCell) {

// cout << "The Start and the Goal cells are the same..." << endl;

isvalid = false;

} else {

if (!isFreeStartCell && !isFreeGoalCell) {

// cout << "The start and the goal cells are obstacle positions..." <<

// endl;

isvalid = false;

} else {

if (!isFreeStartCell) {

// cout << "The start is an obstacle..." << endl;

isvalid = false;

} else {

if (!isFreeGoalCell) {

// cout << "The goal cell is an obstacle..." << endl;

isvalid = false;

} else {

if (findFreeNeighborCell(goalCell).size() == 0) {

// cout << "The goal cell is encountred by obstacles... "<< endl;

isvalid = false;

} else {

if (findFreeNeighborCell(startCell).size() == 0) {

// cout << "The start cell is encountred by obstacles... "<< endl;

isvalid = false;

}

}

}

}

}

}

return isvalid;

}

float RAstarPlannerROS::getMoveCost(int i1, int j1, int i2, int j2) {

float moveCost = INFINIT_COST; // start cost with maximum value. Change it to

// real cost of cells are connected

// if cell2(i2,j2) exists in the diagonal of cell1(i1,j1)

if ((j2 == j1 + 1 && i2 == i1 + 1) || (i2 == i1 - 1 && j2 == j1 + 1) ||

(i2 == i1 - 1 && j2 == j1 - 1) || (j2 == j1 - 1 && i2 == i1 + 1)) {

// moveCost = DIAGONAL_MOVE_COST;

moveCost = 1.4;

}

// if cell 2(i2,j2) exists in the horizontal or vertical line with

// cell1(i1,j1)

else {

if ((j2 == j1 && i2 == i1 - 1) || (i2 == i1 && j2 == j1 - 1) ||

(i2 == i1 + 1 && j2 == j1) || (i1 == i2 && j2 == j1 + 1)) {

// moveCost = MOVE_COST;

moveCost = 1;

}

}

return moveCost;

}

float RAstarPlannerROS::getMoveCost(int CellID1, int CellID2) {

int i1 = 0, i2 = 0, j1 = 0, j2 = 0;

i1 = getCellRowID(CellID1);

j1 = getCellColID(CellID1);

i2 = getCellRowID(CellID2);

j2 = getCellColID(CellID2);

return getMoveCost(i1, j1, i2, j2);

}

// verify if the cell(i,j) is free

bool RAstarPlannerROS::isFree(int i, int j) {

int CellID = getCellIndex(i, j);

return OGM[CellID];

}

// verify if the cell(i,j) is free

bool RAstarPlannerROS::isFree(int CellID) { return OGM[CellID]; }

}; // namespace RAstar_planner

bool operator<(cells const& c1, cells const& c2) { return c1.fCost < c2.fCost; }- The constructor GlobalPlanner(std::string name, costmap_2d::Costmap2DROS* costmap_ros) is used to initialize the cost map, which is the cost map (costmap_ros) used for planning and the name of the planner (name).

- The default constructor GlobalPlanner() uses default values as initialization. The method initialize(std::string name, costmap_2d::Costmap2DROS* costmap_ros) is the initialization function of BaseGlobalPlanner, which is used to initialize costmap. The parameters are the cost map (costmap_ros) used for planning and the name of the planner (name).

- Next, the bool makePlan(start, goal, plan) method must be rewritten. The final plan will be stored in the method's parameter std::vector<geometry_msgs::PoseStamped>& plan.

- Register the planner as a BaseGlobalPlanner plugin: This is done with the directive PLUGINLIB_EXPORT_CLASS(global_planner::GlobalPlanner, nav_core::BaseGlobalPlanner).

- To do this, the library #include<pluginlib/class_list_macros.h> must be includedmakePlan() method implementation: The start and target parameters are used to obtain the initial position and target position. In this illustrative implementation, the starting position (plan.push_back(start)) is used as the starting planning variable. Then, in the for loop, 20 new virtual positions will be statically inserted into the plan, and then the target position will be inserted into the plan as the last position. This planning path will then be sent to the move_base global planning module, which will publish it via the ROS topic nav_msgs/Path, and will then be received by the local planning module.

- Now that the global planning class has been completed, the second step is to create a plug-in for the global planning class and integrate it into the global planning module nav_core::BaseGlobalPlanner of the move_base package.

To compile the global planning library created above, it must be added to CMakeLists.txt. Add code:

add_library(global_planner_lib src/path_planner/global_planner/global_planner.cpp)Go to the catkin worspace and type:

catkin_make- After completion, there will be a librelaxed_astar_lib under devel/lib. Continue writing the plug-in description file

Step 4: Create the plugin description file

- Check the relaxed_astar_planner_plugin.xml

<library path="lib/librelaxed_astar_lib">

<class name="RAstar_planner/RAstarPlannerROS" type="RAstar_planner::RAstarPlannerROS" base_class_type="nav_core::BaseGlobalPlanner">

<description>This is Relaxed Astar global planner plugin by iroboapp project.</description>

</class>

</library>- Register plugins to the ROS package system

In order for pluginlib to query all ROS packages for all available plugins on the system, each package must explicitly specify which plugins it exports and which package libraries contain those plugins. Plugin providers must point to their plugin description files in package.xml within their export tag block.

- Add the following sentence to the export tag in the package.xml file

<nav_core plugin="${prefix}/relaxed_astar_planner_plugin.xml"/>NOTE: For the above export command to work properly, the provider package must directly depend on the package containing the plugin interface, which in the case of the global planner is nav_core. Therefore, its package.xml must contain the following:

<build_depend>nav_core</build_depend>

<run_depend>nav_core</run_depend>This will tell the compiler about the dependencies of the nav_core package.

- Go back to the working directory and compile catkin_make once, and then query the plug-in:

rospack plugins --attrib=plugin nav_coreYou can see the relaxed_astar plug-in and its location. The plugin has been successfully written.

Step 5 : Adding Plugin to move_base

- Open your own move_base.launch configuration file and add it after move_base starts the node

<node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen">

<param name="base_global_planner" value="RAstar_planner/RAstarPlannerROS"/>Save and close move_base.launch.xml.

- It should be noted that the name of the planner is RAstar_planner/RAstarPlannerROS, which is consistent with the name specified in the relaxed_astar_planner_plugin.xml file.

By completing these steps, astar path planning would be successfully implemented.

Install all the libraries for Intel RealSense D435i Camera

- Register the server's public key:

sudo mkdir -p /etc/apt/keyrings

curl -sSf https://librealsense.intel.com/Debian/librealsense.pgp | sudo tee /etc/apt/keyrings/librealsense.pgp > /dev/nullMake sure apt HTTPS support is installed:

sudo apt-get install apt-transport-https- Make sure apt HTTPS support is installed:

sudo apt-get install apt-transport-https- Add the server to the list of repositories:

echo "deb [signed-by=/etc/apt/keyrings/librealsense.pgp] https://librealsense.intel.com/Debian/apt-repo `lsb_release -cs` main" |

sudo tee /etc/apt/sources.list.d/librealsense.list

sudo apt-get update- Install the libraries (see section below if upgrading packages):

sudo apt-get install librealsense2-dkms

sudo apt-get install librealsense2-utilsThe above two lines will deploy librealsense2 udev rules, build and activate kernel modules, runtime library and executable demos and tools.

Reconnect the Intel RealSense depth camera and run:

realsense-viewerto verify the installation.

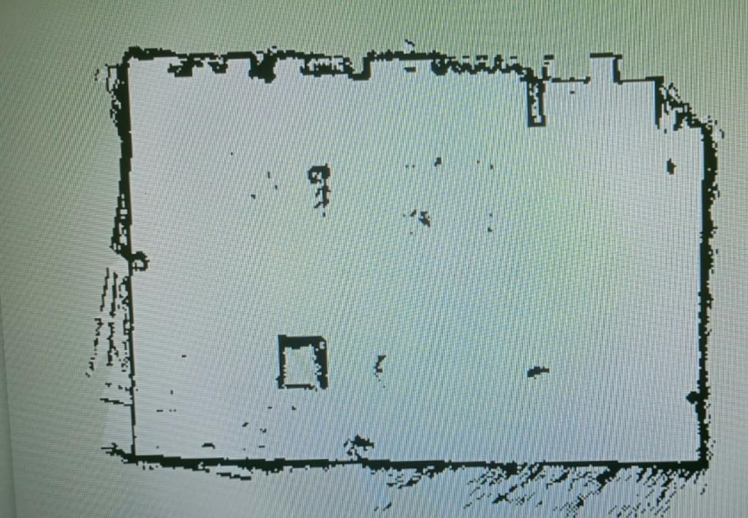

Realsense interface

Realsense interface

What is Jupyter Notebook?

The Jupyter Notebook is the original web application for creating and sharing computational documents. It offers a simple, streamlined, document-centric experience.

(From the website : https://jupyter.org)

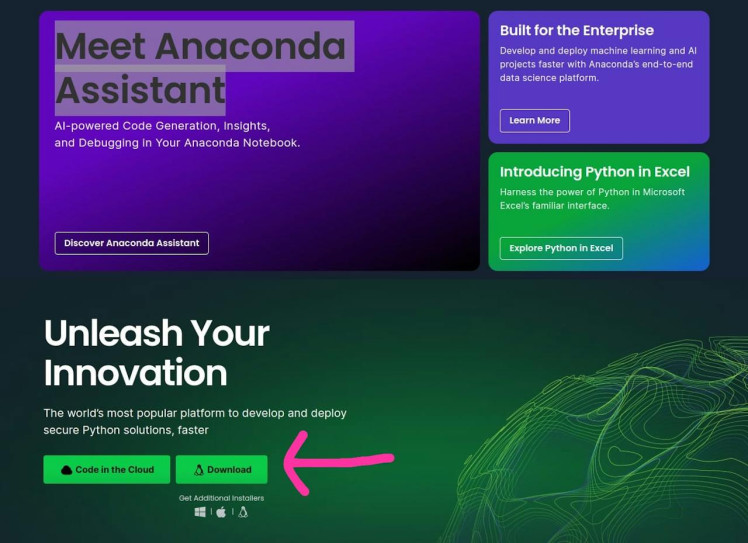

We are using Jupyter Notebook through Anaconda, which is a popular Python distribution for data science and scientific computing.

Installation of Anaconda

First, Visit Anaconda distribution website and download it

https://www.anaconda.com/#

https://www.anaconda.com/#

After installation, locate the file in the "Downloads" folder and open a terminal in that directory.After installation, locate the file in the "Downloads" folder and open a terminal in that directory.

bash Anaconda3-2023.07-2-Linux-x86_64.shPress enter to confirm the location. After Installation check whether Anaconda is Installed by typing

anaconda-navigatorInstallation of Ananconda IDE is completed.We have to create a new folder for our posture detection model. After creating a new folder, click "Open in terminal". Launch jupyter notebook

jupyter notebookCreate a new folder inside Jupyter notebook.

After installation open the terminal again and install the following libraries:(Open CV, Matplotlib and Numpy)

pip install opencv-python

pip install matplotlib

pip install numpyInstall the python wrapper for realsense by using the command:

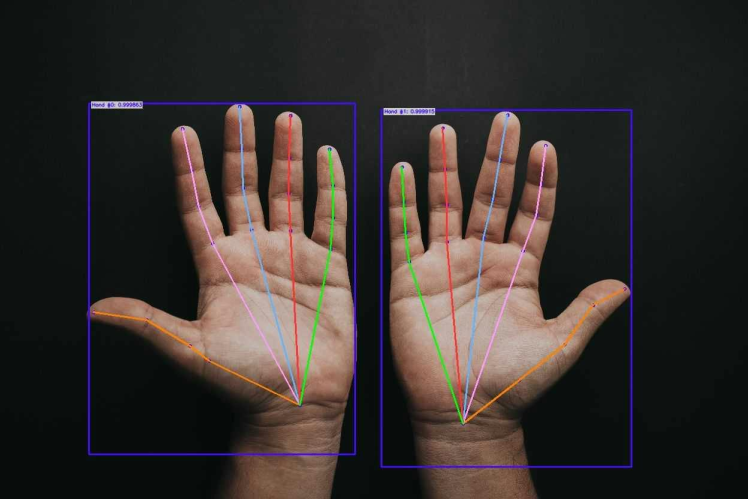

pip3 install pyrealsense2Installation of OpenPose Posture Detection Model

What is OpenPose?

At its core, OpenPose is a groundbreaking pose estimation tool. It uses advanced neural networks to detect human bodies, hands, and facial keypoints in images and videos. Imagine a system that can track every movement of a dancer or the subtle expressions of a speaker – that's OpenPose in action. To make it more relatable, think of it as teaching computers to understand and interpret human body language in a way that was never possible before.OpenPose is a real-time multi-person keypoint detection library for body, face, and hand estimation. It is capable of detecting 135 keypoints.

Working of Open-Pose

Working of Open-Pose

Download the repo given below and paste it inside our project folder

https://github.com/quanhua92/human-pose-estimation-opencvGo back to our Jupyter notebook folder and check whether the libraries are installed perfectly. You can do this by adding (Press Shift+Enter for running the command)

import cv as cv2 ## Importing opencv

import matplotlib.pyplot as plt ## Importing matplotlib

import pyrealsense2 as rsRun and execute the following code:

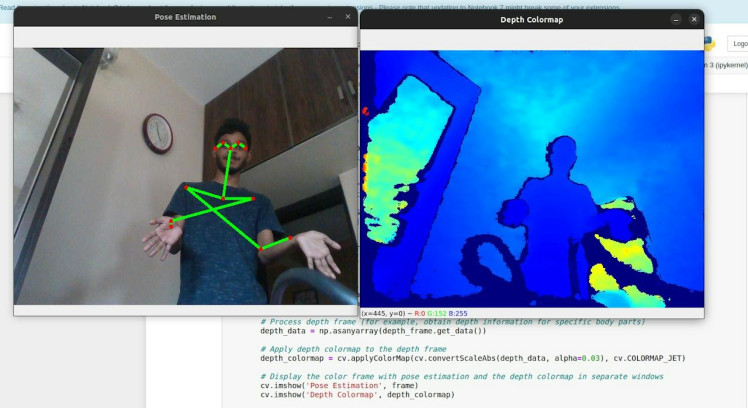

""" Posture detection model using Realsense D435i

Authors : Muhammed Zain and Vishnuraj A

Date : 10/11/2023

Last Update : 29/11/2023 """

# Load the pose detection model

net = cv.dnn.readNetFromTensorflow("graph_opt.pb") # Replace with your model path

inWidth = 368

inHeight = 368

thr = 0.2

# (Your BODY_PARTS and POSE_PAIRS definitions here)

BODY_PARTS = {

"Nose": 0, "Neck": 1, "RShoulder": 2, "RElbow": 3, "RWrist": 4,

"LShoulder": 5, "LElbow": 6, "LWrist": 7, "RHip": 8, "RKnee": 9,

"RAnkle": 10, "LHip": 11, "LKnee": 12, "LAnkle": 13, "REye": 14,

"LEye": 15, "REar": 16, "LEar": 17, "Background": 18

}

POSE_PAIRS = [

["Neck", "RShoulder"], ["Neck", "LShoulder"], ["RShoulder", "RElbow"],

["RElbow", "RWrist"], ["LShoulder", "LElbow"], ["LElbow", "LWrist"],

["Neck", "RHip"], ["RHip", "RKnee"], ["RKnee", "RAnkle"], ["Neck", "LHip"],

["LHip", "LKnee"], ["LKnee", "LAnkle"], ["Neck", "Nose"], ["Nose", "REye"],

["REye", "REar"], ["Nose", "LEye"], ["LEye", "LEar"]

]

# Initialize RealSense pipeline

pipe = rs.pipeline()

config = rs.config()

config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

pipe.start(config)

try:

while True:

# Wait for frames to be available

frames = pipe.wait_for_frames()

color_frame = frames.get_color_frame()

depth_frame = frames.get_depth_frame()

if not color_frame or not depth_frame:

continue

# Convert color frame to a numpy array

frame = np.asanyarray(color_frame.get_data())

frameWidth = frame.shape[1]

frameHeight = frame.shape[0]

blob = cv.dnn.blobFromImage(frame, 1.0, (inWidth, inHeight), (127.5, 127.5, 127.5), swapRB=True, crop=False)

net.setInput(blob)

out = net.forward()

out = out[:, :19, :, :] # Keep only the first 19 elements

assert(len(BODY_PARTS) == out.shape[1])

points = []

for i in range(len(BODY_PARTS)):

heatMap = out[0, i, :, :]

_, conf, _, point = cv.minMaxLoc(heatMap)

x = (frameWidth * point[0]) / out.shape[3]

y = (frameHeight * point[1]) / out.shape[2]

points.append((int(x), int(y)) if conf > thr else None)

for pair in POSE_PAIRS:

partFrom = pair[0]

partTo = pair[1]

idFrom = BODY_PARTS[partFrom]

idTo = BODY_PARTS[partTo]

if points[idFrom] and points[idTo]:

cv.line(frame, points[idFrom], points[idTo], (0, 255, 0), 3)

cv.ellipse(frame, points[idFrom], (3, 3), 0, 0, 360, (0, 0, 255), cv.FILLED)

cv.ellipse(frame, points[idTo], (3, 3), 0, 0, 360, (0, 0, 255), cv.FILLED)

# Process depth frame (for example, obtain depth information for specific body parts)

depth_data = np.asanyarray(depth_frame.get_data())

# Apply depth colormap to the depth frame

depth_colormap = cv.applyColorMap(cv.convertScaleAbs(depth_data, alpha=0.03), cv.COLORMAP_JET)

# Display the color frame with pose estimation and the depth colormap in separate windows

cv.imshow('Pose Estimation', frame)

cv.imshow('Depth Colormap', depth_colormap)

if cv.waitKey(1) & 0xFF == ord('q'):

break

finally:

pipe.stop()

cv.destroyAllWindows()We will get a result like this after successful deployement:

Result

Result

We could complete the ros integration and opencv using cv_bridge. We could interface ROS and OpenCV by converting ROS images into OpenCV images, and vice versa, using cv_bridge.

Integrating an environmental monitoring system

We have incorporated a sophisticated system within our robotic infrastructure designed to meticulously assess and monitor key environmental parameters, including but not limited to temperature, humidity, and greenhouse gas emissions.For this purpose we have used Seeed Wio Terminal, Seeed SHT40 and Seeed Multichannel gas sensor. We have used MQTT protocol for communication you could use LoRa for better range

UI is created using node-red. For further information regarding node-red refer the following documentation: Indoor monitoring system Using WioTerminal and Node-red

#include <PubSubClient.h>

#include <rpcWiFi.h>

#include <SensirionI2CSht4x.h>

#include <Wire.h>

#include "sensirion_common.h"

#include "sgp30.h"

#include <TFT_eSPI.h>

TFT_eSPI tft;

TFT_eSprite spr = TFT_eSprite(&tft);

SensirionI2CSht4x sht4x;

int randnumber;

const char *ssid = "zain"; // your network SSID

const char *password = "123456789"; // your network password

const char *ID = "node1"; // Name of our device, must be unique

const char *server = "test.mosquitto.org"; // Server URL

WiFiClient wifiClient;

PubSubClient client(wifiClient);

unsigned long lastMsg = 0;

#define MSG_BUFFER_SIZE (50)

char msg[MSG_BUFFER_SIZE];

int value = 0;

const float VRefer = 3.3; // Voltage of ADC reference

const int pinAdc = A0; // Analog pin connected to the Grove Gas Sensor (O2)

void callback(char* topic, byte* payload, unsigned int length) {

Serial.print("Message arrived [");

Serial.print(topic);

Serial.print("] ");

for (int i = 0; i < length; i++) {

Serial.print((char)payload[i]);

}

Serial.println();

}

void reconnect() {

// Loop until we're reconnected

while (!client.connected()) {

Serial.print("Attempting MQTT connection...");

// Attempt to connect

if (client.connect(ID)) {

Serial.println("connected");

}

else {

Serial.print("failed, rc=");

Serial.print(client.state());

Serial.println(" try again in 5 seconds");

// Wait 5 seconds before retrying

delay(5000);

}

}

}

void setup() {

Serial.begin(115200);

pinMode(WIO_KEY_A, INPUT_PULLUP);

pinMode(WIO_BUZZER, OUTPUT);

tft.begin();

tft.setRotation(3);

tft.fillScreen(TFT_BLACK);

tft.setFreeFont(&FreeSansBoldOblique18pt7b);

tft.setTextColor(TFT_WHITE);

tft.drawString("life line", 70, 10 , 1);

//Line

for (int8_t line_index = 0; line_index < 5 ; line_index++)

{

tft.drawLine(0, 50 + line_index, tft.width(), 50 + line_index, TFT_GREEN);

}

//VCO & CO Rect

tft.drawRoundRect(5, 60, (tft.width() / 2) - 20 , tft.height() - 65 , 10, TFT_WHITE); // L1

//tVCO Text

tft.setFreeFont(&FreeSansBoldOblique12pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("tVOC", 7 , 65 , 1);

tft.setTextColor(TFT_GREEN);

tft.drawString("ppm", 55, 108, 1);

//CO2 Text

tft.setFreeFont(&FreeSansBoldOblique12pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("CO2eq", 7 , 150 , 1);

tft.setTextColor(TFT_GREEN);

tft.drawString("ppm", 55, 193, 1);

// Temp rect

tft.drawRoundRect((tft.width() / 2) - 10 , 60, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s1

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Temp", (tft.width() / 2) - 1 , 70 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("o", (tft.width() / 2) + 30, 95, 1);

tft.drawString("C", (tft.width() / 2) + 40, 100, 1);

//o2 rect

tft.drawRoundRect(((tft.width() / 2) + (tft.width() / 2) / 2) - 5 , 60, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s2

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("O2", ((tft.width() / 2) + (tft.width() / 2) / 2) , 70 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("%", ((tft.width() / 2) + (tft.width() / 2) / 2) + 30 , 120, 1);

//Humi Rect

tft.drawRoundRect((tft.width() / 2) - 10 , (tft.height() / 2) + 30, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s3

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Humi", (tft.width() / 2) - 1 , (tft.height() / 2) + 40 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("%", (tft.width() / 2) + 30, (tft.height() / 2) + 70, 1);

//methane Rect

tft.drawRoundRect(((tft.width() / 2) + (tft.width() / 2) / 2) - 5 , (tft.height() / 2) + 30, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s4

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Methane", ((tft.width() / 2) + (tft.width() / 2) / 2) , (tft.height() / 2) + 40 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("ppm", ((tft.width() / 2) + (tft.width() / 2) / 2) + 30 , (tft.height() / 2) + 90, 1);

while (!Serial) {

delay(100);

}

Serial.print("Attempting to connect to SSID: ");

Serial.println(ssid);

WiFi.begin(ssid, password);

// attempt to connect to Wifi network:

while (WiFi.status() != WL_CONNECTED) {

Serial.print(".");

WiFi.begin(ssid, password);

// wait 1 second for re-trying

delay(1000);

}

Serial.print("Connected to ");

Serial.println(ssid);

delay(500);

client.setServer(server, 1883);

client.setCallback(callback);

Wire.begin();

uint16_t error;

char errorMessage[256];

sht4x.begin(Wire);

uint32_t serialNumber;

error = sht4x.serialNumber(serialNumber);

if (error) {

Serial.print("Error trying to execute serialNumber(): ");

errorToString(error, errorMessage, 256);

Serial.println(errorMessage);

} else {

Serial.print("Serial Number: ");

Serial.println(serialNumber);

}

s16 err;

u16 scaled_ethanol_signal, scaled_h2_signal;

Serial.println("serial start!!");

while (sgp_probe() != STATUS_OK) {

Serial.println("SGP failed");

while (1);

}

err = sgp_measure_signals_blocking_read(&scaled_ethanol_signal, &scaled_h2_signal);

if (err == STATUS_OK) {

Serial.println("get ram signal!");

} else {

Serial.println("error reading signals");

}

err = sgp_iaq_init();

Serial.println("Grove - Gas Sensor Test Code...");

}

void loop() {

s16 err = 0;

u16 tvoc_ppb, co2_eq_ppm;

err = sgp_measure_iaq_blocking_read(&tvoc_ppb, &co2_eq_ppm);

if (err == STATUS_OK) {

// Successfully read IAQ values

} else {

Serial.println("error reading IAQ valuesn");

}

uint16_t error;

char errorMessage[256];

float temperature;

float humidity;

float randnumbr;

float concentration;

error = sht4x.measureHighPrecision(temperature, humidity);

if (error) {

Serial.print("Error trying to execute measureHighPrecision(): ");

errorToString(error, errorMessage, 256);

Serial.println(errorMessage);

}

char envDataBuf[1000];

randnumber = random(180, 200);

randnumbr = random(1.00, 3);

// Read oxygen concentration from Grove Gas Sensor (O2)

float conc = readConcentration();

concentration=conc;

// Convert button state to 1 (activated) or 0 (not activated)

int buttonState = (digitalRead(WIO_KEY_A) == LOW) ? 1 : 0;

int toxic;

if(co2_eq_ppm>900){

analogWrite(WIO_BUZZER, 128);

tft.fillScreen(TFT_RED);

tft.setFreeFont(&FreeSansBoldOblique24pt7b);

tft.setTextColor(TFT_WHITE);

tft.drawString("DANGER", 65, 100 , 1);

delay(500);

analogWrite(WIO_BUZZER, 0);

tft.fillScreen(TFT_BLACK);

delay(500);

toxic=1;

}

else{

toxic=0;

tft.setFreeFont(&FreeSansBoldOblique18pt7b);

tft.setTextColor(TFT_WHITE);

tft.drawString("life line", 70, 10 , 1);

//Line

for (int8_t line_index = 0; line_index < 5 ; line_index++)

{

tft.drawLine(0, 50 + line_index, tft.width(), 50 + line_index, TFT_GREEN);

}

//VCO & CO Rect

tft.drawRoundRect(5, 60, (tft.width() / 2) - 20 , tft.height() - 65 , 10, TFT_WHITE); // L1

//tVCO Text

tft.setFreeFont(&FreeSansBoldOblique12pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("tVOC", 7 , 65 , 1);

tft.setTextColor(TFT_GREEN);

tft.drawString("ppm", 55, 108, 1);

//CO2 Text

tft.setFreeFont(&FreeSansBoldOblique12pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("CO2eq", 7 , 150 , 1);

tft.setTextColor(TFT_GREEN);

tft.drawString("ppm", 55, 193, 1);

// Temp rect

tft.drawRoundRect((tft.width() / 2) - 10 , 60, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s1

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Temp", (tft.width() / 2) - 1 , 70 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("o", (tft.width() / 2) + 30, 95, 1);

tft.drawString("C", (tft.width() / 2) + 40, 100, 1);

//o2 rect

tft.drawRoundRect(((tft.width() / 2) + (tft.width() / 2) / 2) - 5 , 60, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s2

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED);

tft.drawString("O2", ((tft.width() / 2) + (tft.width() / 2) / 2) , 70 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("%", ((tft.width() / 2) + (tft.width() / 2) / 2) + 30 , 120, 1);

//Humi Rect

tft.drawRoundRect((tft.width() / 2) - 10 , (tft.height() / 2) + 30, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s3

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Humi", (tft.width() / 2) - 1 , (tft.height() / 2) + 40 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);

tft.drawString("%", (tft.width() / 2) + 30, (tft.height() / 2) + 70, 1);

//methane Rect

tft.drawRoundRect(((tft.width() / 2) + (tft.width() / 2) / 2) - 5 , (tft.height() / 2) + 30, (tft.width() / 2) / 2 , (tft.height() - 65) / 2 , 10, TFT_BLUE); // s4

tft.setFreeFont(&FreeSansBoldOblique9pt7b);

tft.setTextColor(TFT_RED) ;

tft.drawString("Methane", ((tft.width() / 2) + (tft.width() / 2) / 2) , (tft.height() / 2) + 40 , 1); // Print the test text in the custom font

tft.setTextColor(TFT_GREEN);