Exploring the Future of Embedded Machine Learning

Machine learning has become an effective tool for tackling data-intensive and highly complex problems across virtually every market. Its impact has been enormous, from autonomous electric vehicles to healthcare and smart cities. Over the last decade, the need to process data at the edge, where results can be obtained quickly and reliably, has grown. Advances in microprocessor architecture have ushered in an era in which machine learning applications run on even the smallest microcontrollers.

Running sophisticated machine learning algorithms on microcontrollers improves efficiency, because the model can be tailored precisely to the application. The growing number of users in the field of embedded machine learning promises new levels of insight and new data sets for training specialized models. In simple terms, embedded machine learning means executing machine learning models on embedded devices, ranging from smartphones to small microcontrollers.

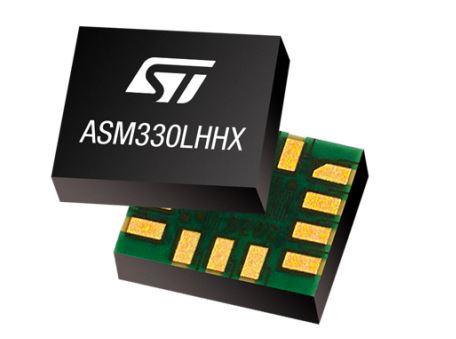

Although the advantages of machine learning on embedded devices are exciting, the user needs to look carefully at the requirements and choose appropriate hardware. ML-powered sensors integrated into the application can improve efficiency and performance. Growing community support for embedded machine learning makes it possible to run increasingly complex deep learning models directly on microcontrollers. TinyML has brought together two previously independent communities: one working on ultra-low-power microcontrollers and one working on machine learning algorithms. In this article, we take a deep dive into edge computing devices.

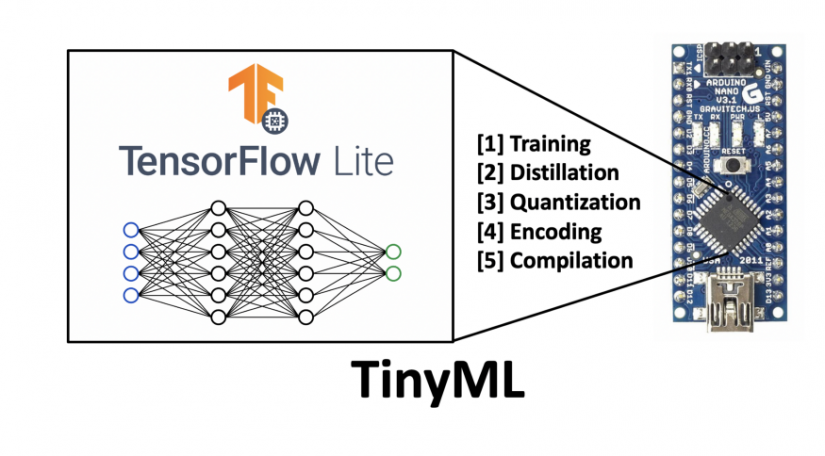

What’s the big thing with TinyML?

TinyML has brought some key advantages to the field of embedded systems. With improved latency and reliability, the user can implement high-end AI applications directly on the hardware. The capability to produce real-time analytics using machine learning models has enabled applications like autonomous vehicular systems. Data that was previously inaccessible because of bandwidth constraints can now yield information, because the AI processing happens on the incoming data at the device.

(Image Credit: Towards Data Science)

Before machine learning was brought onto embedded devices, incoming data was sent to the cloud over the network. Embedded machine learning saves the cost of that data transmission, because the data can now be processed directly at the edge. And because the data no longer has to leave the device, its privacy and security are easier to maintain; transmission was a risk factor in cloud processing.
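To make the transmission saving concrete, here is a rough back-of-the-envelope sketch. Every number in it (sample rate, sample size, result size, inference rate) is an assumption chosen for illustration and does not come from any particular device; the function names are hypothetical too. It compares streaming raw audio to the cloud with sending only the on-device inference results.

```python
# Hypothetical numbers for illustration only; they are not measurements.
SAMPLE_RATE_HZ = 16_000      # assumed microphone sample rate
BYTES_PER_SAMPLE = 2         # assumed 16-bit audio samples
RESULT_BYTES = 4             # assumed size of one inference result
RESULTS_PER_SECOND = 1       # assumed: one classification per second

SECONDS_PER_DAY = 24 * 60 * 60


def bytes_per_day_raw() -> int:
    """Bytes sent per day when every raw sample is streamed to the cloud."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * SECONDS_PER_DAY


def bytes_per_day_edge() -> int:
    """Bytes sent per day when only inference results leave the device."""
    return RESULT_BYTES * RESULTS_PER_SECOND * SECONDS_PER_DAY


if __name__ == "__main__":
    raw = bytes_per_day_raw()
    edge = bytes_per_day_edge()
    print(f"raw streaming : {raw / 1e9:.2f} GB/day")
    print(f"edge inference: {edge / 1e6:.2f} MB/day")
    print(f"reduction     : {raw // edge}x")
```

With different assumptions the ratio changes, but the shape of the result stays the same: the traffic depends on how often a result is produced, not on how fast the sensor samples.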

The importance of running machine learning models on microcontrollers has increased because of the factors mentioned above: cost, bandwidth, and power constraints. Another reason to deploy ML algorithms on microcontrollers is that they consume very little energy. For example, a face recognition model implemented on an ultra-low-power microcontroller can run continuously for a long time without a battery replacement.
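How long "a long time" is depends on the battery and on how often the model actually runs. The sketch below is a simple estimate under stated assumptions: the current draws, duty cycle, and battery capacity are hypothetical placeholders rather than datasheet values, and the model ignores battery self-discharge and voltage effects.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Average current when the device is active for `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life: capacity divided by average current."""
    if avg_current_ma <= 0:
        raise ValueError("avg_current_ma must be positive")
    return capacity_mah / avg_current_ma


if __name__ == "__main__":
    # Hypothetical values: 5 mA while running inference, 5 uA asleep,
    # inference active 2% of the time, 220 mAh battery.
    avg = average_current_ma(active_ma=5.0, sleep_ma=0.005, duty_cycle=0.02)
    hours = battery_life_hours(capacity_mah=220.0, avg_current_ma=avg)
    print(f"average current: {avg:.4f} mA")
    print(f"estimated life : {hours / 24:.0f} days")
```

Setting `duty_cycle=1.0` models the continuously running case from the example above; lowering it shows how much sleeping between inferences extends the estimate.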

Explore Embedded Machine Learning with Edge Impulse

The next important question is how to get started with embedded machine learning once you have an embedded device capable of running AI algorithms. Most embedded devices and development boards on the market can be programmed and interfaced with Edge Impulse. The Edge Impulse platform lets developers build next-generation solutions with embedded machine learning. With a dataset and a development board, you can train a model and run it on the device.

(Image Credit: Edge Impulse)

The team collaboration feature on Edge Impulse lets a user and their team build an AI solution together. If you plan to open source the project, the work can also be published as a public version. There are, however, some minimum hardware requirements for working with Edge Impulse. According to the website, the essential requirement ranges from a Cortex-M0+ for vibration analysis, to a Cortex-M4F for audio, a Cortex-M7 for image classification, and a Cortex-A for object detection in video. To train machine learning models, Edge Impulse employs several frameworks: TensorFlow and Keras for neural networks, TensorFlow with Google's Object Detection API for object detection models, and sklearn for 'classic' non-neural-network machine learning algorithms.

However, if you already have a pre-trained model, you cannot import it directly; instead, you upload the model architecture and then retrain. With Edge Impulse, you can also integrate the project with other cloud services to access and work with the data. There is also a way to calculate the power consumption of the Edge Impulse machine learning process on the device, but it is not effortless. For more details on the Edge Impulse platform and supported development boards, visit the official page.
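The hardware guidance quoted above can be kept at hand as a small lookup table when shortlisting boards. This is only a sketch that restates the workload-to-core pairs listed in this article; the names `MIN_CORE_BY_WORKLOAD` and `minimum_core` are hypothetical helpers and are not part of any Edge Impulse API.

```python
from typing import Optional

# Workload -> minimum core class, exactly as listed in the article.
MIN_CORE_BY_WORKLOAD = {
    "vibration analysis": "Cortex-M0+",
    "audio": "Cortex-M4F",
    "image classification": "Cortex-M7",
    "object detection in video": "Cortex-A",
}


def minimum_core(workload: str) -> Optional[str]:
    """Return the minimum core class for a workload, or None if not listed."""
    return MIN_CORE_BY_WORKLOAD.get(workload.strip().lower())


if __name__ == "__main__":
    for name in ("Audio", "Image classification", "speech synthesis"):
        print(f"{name}: {minimum_core(name) or 'not listed'}")
```

Returning `None` for an unlisted workload, instead of guessing, keeps the table honest about what the guidance actually covers.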

You can view the Edge Impulse projects submitted from the Electromaker community here.

Predicting the scope of Embedded Machine Learning

The potential of embedded machine learning is so far-reaching that it is almost impossible to imagine a sector that won’t be affected. There is already a rich set of embedded devices and development boards that are capable of executing machine learning and deep learning applications.

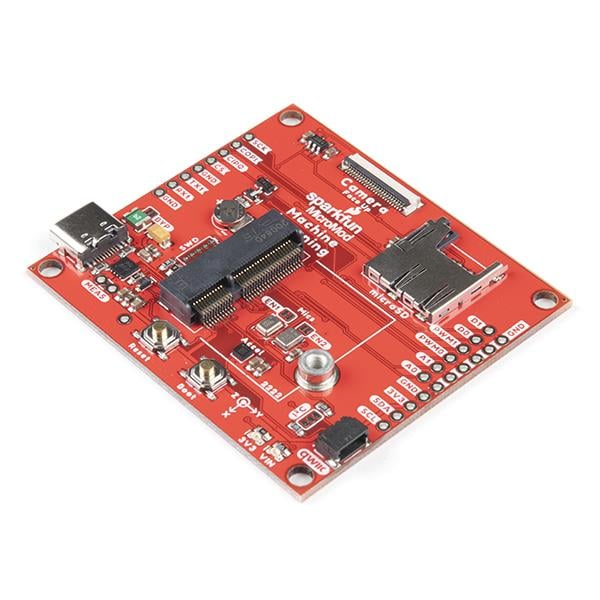

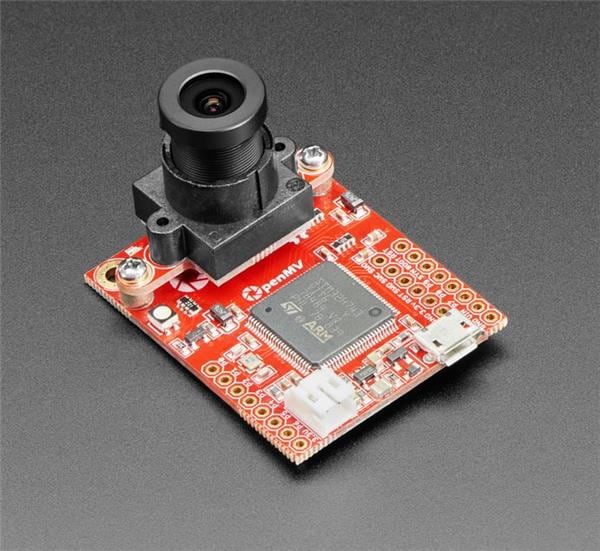

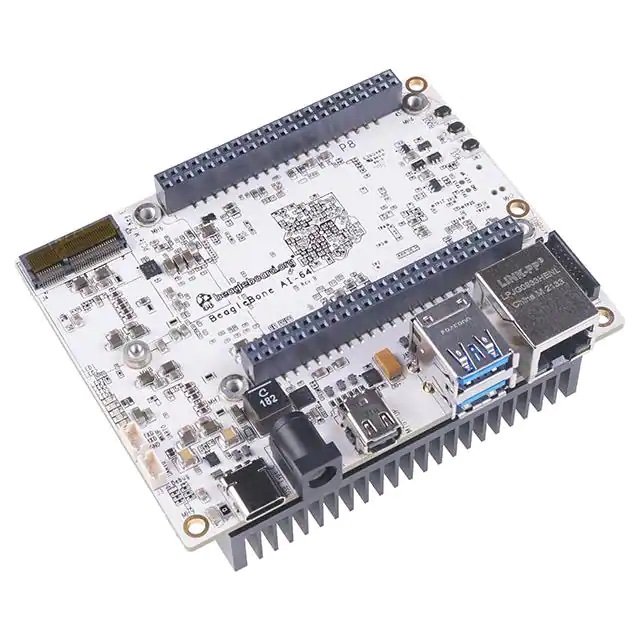

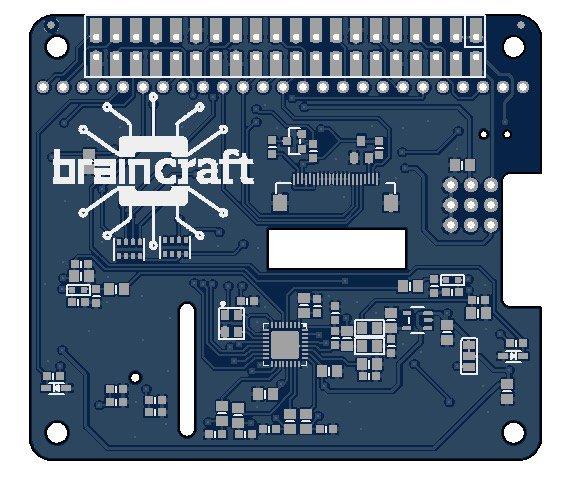

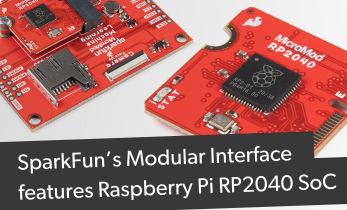

You can find many boards on the Electromaker store, like SparkFun's Edge Development Board that supports deep learning applications like speech transcription and gesture recognition. Another example is the Arduino Nano 33 BLE Sense board based on a Nordic Semiconductor SoC to develop TinyML applications. There has also been new research in the platform-based design of systems-on-chip for embedded machine learning.

Last year, researchers from Columbia University published research on ESP4ML, an open-source system-level design flow for building and programming SoC architectures for embedded applications that require hardware acceleration of machine learning and signal processing algorithms. The outlook for machine learning on embedded devices is bright, with hardly a sector likely to be left untouched by TinyML.

Final thoughts on Embedded Machine Learning

Because the amount of data at the edge keeps increasing, it is becoming necessary to run machine learning algorithms at the end-point, on the embedded device itself. This opportunity is set to boom over the next decade, with a foothold in sectors such as retail, agriculture, smart cities, and healthcare. We can expect a lot of development in this space as it grows to meet ever-increasing demand.
