Train AI Models with an ESP32 Camera and Edge Impulse

AI-powered edge computing is rapidly gaining traction, enabling real-time data processing directly on devices without reliance on cloud services. One standout project in this field is the ESP32 Edge AI Camera, developed by Mukes Sankla and featured on the Electromaker Project Hub. It turns an ESP32-based development board into a low-cost, AI-ready camera built for collecting model training data and running real-time inference.

Watch Ian talk about the Edge AI ESP32 camera in this episode of The Electromaker Show

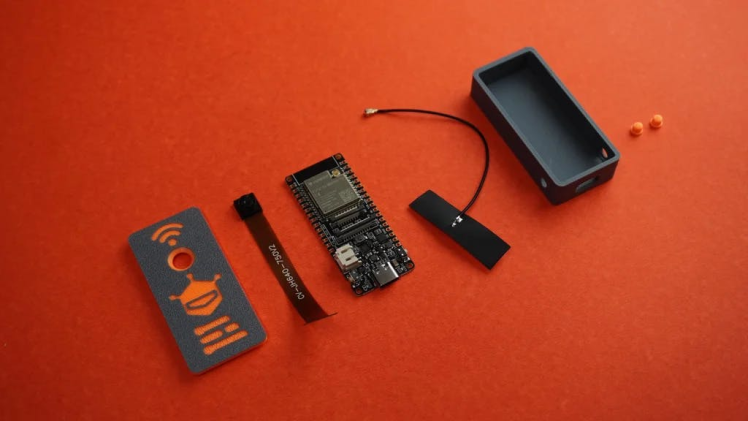

Using a combination of a FireBeetle 2 ESP32 board, a camera module, and a 3D-printed enclosure, the project simplifies capturing and labelling image data for AI training. A custom Python Flask app removes the need to copy images off an SD card by hand and sends them straight to Edge Impulse for dataset collection and training.

In this article, we explore how this project works, its potential real-world applications, and how it could be expanded for even more robust AI-driven data collection.

The Hardware Setup – ESP32 and Custom 3D-Printed Case

The ESP32 Edge AI Camera project is built around the FireBeetle 2 ESP32 board, but it can be adapted to other ESP32-based boards with camera support. The ESP32 is well suited to edge AI applications thanks to its low power consumption, built-in Wi-Fi, and support for camera modules.

A key aspect of this project is its custom 3D-printed enclosure. Designed for easy mounting and protection, the enclosure ensures the camera is securely housed for continuous data collection. While the current design is primarily for indoor use, it could be upgraded with an IP68-rated case for outdoor applications, making it resistant to dust and water.

Unlike traditional AI data collection setups, which often rely on expensive industrial cameras, this project keeps costs low by pairing an affordable microcontroller with an inexpensive camera module. It also needs no separate power supply, since the ESP32 is powered directly by the host computer.

By integrating the ESP32 with a compact, protective enclosure, this project bridges the gap between DIY prototyping and real-world AI implementation.

Software and AI Integration with Edge Impulse

The ESP32 Edge AI Camera is designed to streamline collecting and labelling image data and training AI models on it. Rather than manually transferring images via SD cards, as traditional workflows require, this project uses a Python Flask-based web application to automate data handling.

Through the web interface, users can trigger image captures on the ESP32 camera remotely. Captured images are uploaded directly to Edge Impulse, a platform specializing in machine learning for embedded systems. Once users have entered their Edge Impulse credentials into the app, each new image is stored in the project's dataset straight away, eliminating the tedious manual transfer step.
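The capture-and-upload step can be sketched with the Python standard library alone. This is a minimal illustration, not the project's actual Flask code: it assumes the ESP32 firmware serves one JPEG at a hypothetical `/capture` path, and it targets Edge Impulse's ingestion service (a multipart upload to `/api/training/files` with `x-api-key` and `x-label` headers). The helper names `fetch_frame`, `build_upload_request`, and `capture_and_upload` are hypothetical.

```python
"""Sketch: fetch one JPEG from the ESP32 and push it to Edge Impulse.

Assumptions (hypothetical, not taken from the project's source code):
  * the ESP32 firmware serves a single JPEG frame at http://<board-ip>/capture
  * the caller supplies the Edge Impulse project API key and the label
"""
import urllib.request
import uuid

# Edge Impulse ingestion service; category is e.g. "training" or "testing".
INGESTION_URL = "https://ingestion.edgeimpulse.com/api/{category}/files"


def is_jpeg(data: bytes) -> bool:
    # A complete JPEG starts with the SOI marker FF D8 and ends with EOI FF D9.
    return len(data) >= 4 and data[:2] == b"\xff\xd8" and data[-2:] == b"\xff\xd9"


def fetch_frame(board_ip: str, timeout: float = 5.0) -> bytes:
    # Hypothetical capture endpoint: change the path to match your firmware.
    with urllib.request.urlopen(f"http://{board_ip}/capture", timeout=timeout) as resp:
        data = resp.read()
    if not is_jpeg(data):
        raise ValueError("board did not return a complete JPEG frame")
    return data


def build_upload_request(jpeg: bytes, filename: str, label: str, api_key: str,
                         category: str = "training") -> urllib.request.Request:
    # Hand-built multipart/form-data body with one file field named "data".
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="data"; filename="{filename}"\r\n'
        "Content-Type: image/jpeg\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    headers = {
        "x-api-key": api_key,  # project API key from the Edge Impulse dashboard
        "x-label": label,      # label applied to the uploaded sample
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return urllib.request.Request(
        INGESTION_URL.format(category=category),
        data=head + jpeg + tail,
        headers=headers,
        method="POST",
    )


def capture_and_upload(board_ip: str, label: str, api_key: str) -> int:
    # What a Flask route might call when the user presses "capture".
    jpeg = fetch_frame(board_ip)
    name = f"{label}.{uuid.uuid4().hex[:8]}.jpg"
    req = build_upload_request(jpeg, name, label, api_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status
```

Nothing goes over the network until `capture_and_upload` calls `urlopen`, so the request-building half can be checked offline. The header and field names should be confirmed against Edge Impulse's current ingestion documentation before relying on them.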

Once the model is trained, it can be deployed back onto the ESP32 board, enabling real-time AI inference at the edge. This means the device can process and recognize objects, motion, or patterns without needing constant cloud connectivity.
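Once a model is running on the board, the firmware still has to turn the classifier's per-label scores into a decision. On the ESP32 this logic would live in C/C++ alongside the library Edge Impulse exports for deployment; the sketch below shows only the decision step, written in Python for readability. The 0.7 confidence threshold, the three-frame agreement window, and the `background` class name are illustrative assumptions, and `top_label` and `StableDetector` are hypothetical names.

```python
from collections import deque
from typing import Deque, Dict, Optional


def top_label(scores: Dict[str, float], threshold: float = 0.7,
              ignore: str = "background") -> Optional[str]:
    """Return the highest-scoring label, or None if no label is confident enough.

    `scores` maps each class label to the probability the classifier gave it.
    `threshold` and the `ignore` class name are illustrative assumptions.
    """
    if not scores:
        return None
    label, score = max(scores.items(), key=lambda item: item[1])
    if score < threshold or label == ignore:
        return None
    return label


class StableDetector:
    """Report a label only after it wins `frames` consecutive inferences."""

    def __init__(self, frames: int = 3, threshold: float = 0.7) -> None:
        self.history: Deque[Optional[str]] = deque(maxlen=frames)
        self.threshold = threshold

    def update(self, scores: Dict[str, float]) -> Optional[str]:
        self.history.append(top_label(scores, self.threshold))
        # Only a full window in which every frame agrees counts as a detection.
        if len(self.history) == self.history.maxlen and len(set(self.history)) == 1:
            return self.history[0]
        return None
```

Requiring agreement across several frames trades a little latency for fewer one-frame false alarms, which matters when the camera runs unattended.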

This workflow makes the ESP32 Edge AI Camera an efficient solution for AI development, allowing users to iterate quickly and refine models with minimal effort.

Real-World Applications and Future Potential

The ESP32 Edge AI Camera offers a practical and cost-effective way to collect and process visual data for AI applications. Because it makes both gathering training images and running trained models straightforward, it could be used in a wide range of real-world scenarios.

Model Training for Object Recognition: This setup allows users to build datasets for custom AI models, making it useful for applications such as security surveillance, automated quality control, and environmental monitoring. Because images flow into the dataset as soon as they are captured, models can be refined continuously without manual data transfers.
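When building a dataset, it helps to hold some images back from training so the model can be evaluated on pictures it has never seen; Edge Impulse keeps separate training and testing sets for this purpose. A minimal sketch of one way to decide where each capture goes, assuming an 80/20 split (a common default, not a setting from the project) and a hypothetical `choose_category` helper:

```python
import hashlib


def choose_category(filename: str, test_fraction: float = 0.2) -> str:
    """Deterministically route a capture to the "training" or "testing" set.

    Hashing the filename instead of calling random() means re-uploading the
    same file always puts it in the same set, so a test image never ends up
    in training on a second upload.
    """
    digest = hashlib.sha256(filename.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "testing" if bucket < test_fraction else "training"
```

Over many captures, roughly `test_fraction` of the images land in the testing set. The hash only needs to be stable, not secure, so SHA-256 is simply a convenient standard-library choice.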

Deploying AI Models on ESP32: Once trained, models can be deployed back onto the ESP32 to perform real-time inference. This makes it possible to develop AI-powered cameras for detecting motion, identifying objects, or even recognizing specific environmental conditions without the need for cloud computing.

Expanding the Project: The project could be enhanced with a more rugged case for outdoor use or with support for motion-triggered image capture. Wireless power options could also make it more versatile for long-term deployments in remote areas.
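Motion-triggered capture is not part of the current project. One simple way to add it is frame differencing: compare each new frame with the previous one and save an image only when enough pixels have changed. The sketch below assumes both frames arrive as same-sized 8-bit grayscale buffers; `motion_detected` and its two thresholds are hypothetical and would need tuning for a real scene.

```python
from typing import Sequence


def motion_detected(prev: Sequence[int], curr: Sequence[int],
                    pixel_delta: int = 25, min_changed: float = 0.02) -> bool:
    """Simple frame differencing on two equally sized 8-bit grayscale frames.

    A pixel counts as changed if its brightness moved by more than `pixel_delta`;
    motion is reported when more than `min_changed` of all pixels changed.
    Both thresholds are illustrative defaults, not values from the project.
    """
    if len(prev) != len(curr) or not curr:
        raise ValueError("frames must be non-empty and the same size")
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_delta)
    return changed / len(curr) > min_changed
```

The per-pixel threshold ignores small sensor noise, while the changed-fraction threshold ignores tiny local changes. A sudden lighting change can still trip a pure difference test, which is one reason the thresholds would need tuning on site.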

With these features, the ESP32 Edge AI Camera is more than a prototype: it is a practical foundation that could scale to real-world AI-powered applications.

