The AI Camera That Dreams What You See

Most photographs taken these days involve some form of AI. Most images and videos are recorded on smartphones, whose escalating arms race of hardware and software is aimed at producing the perfect photo, so AI photography is not a new concept. Smartphones still, however, rely on traditional means: light hits the sensor, and the captured image is processed by AI to look as good as possible with minimal work.

What if you could do away with the light entirely?

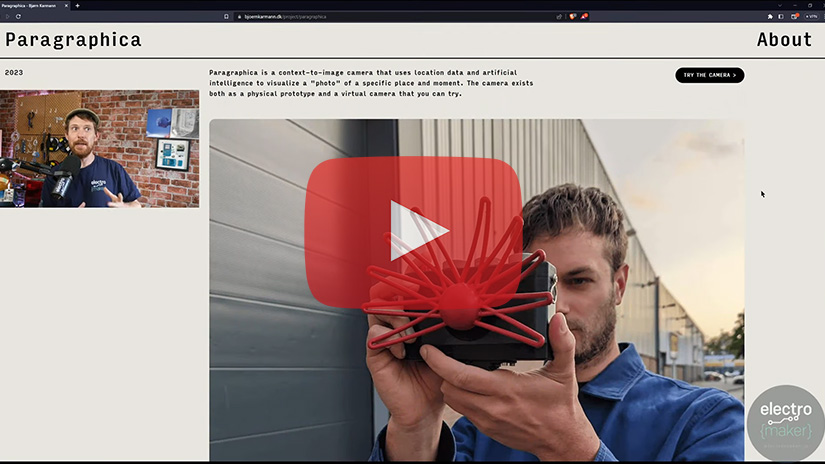

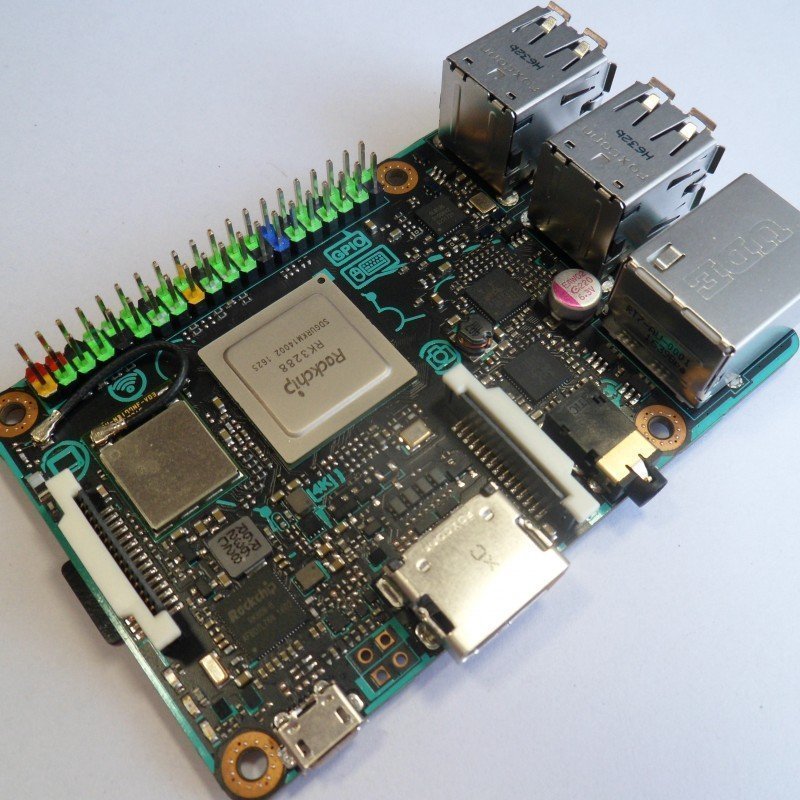

That, in essence, is the idea behind Bjørn Karmann's Paragraphica - a "context-to-image" camera that generates images using Stable Diffusion, a text-to-image AI tool. Instead of capturing light through a lens, the Raspberry Pi 4 at the core of Paragraphica uses data like location, weather, and time of day to generate an image of what it predicts you can see. As with most modern AI tools, the results are striking, and at times eerily close to the real scene.

The striking front panel is designed to mimic the star-nosed mole's namesake organ - a wide red nose capable of sensing over 10 local positions at once in the search for tasty prey. It's a fittingly sci-fi addition to what is otherwise a fairly faithful take on a retro camera body, with three chunky rotary encoders on top, designed and made from scratch by Karmann.

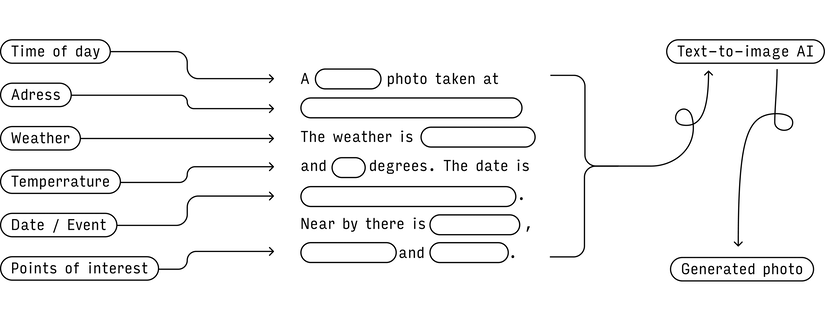

From a software perspective, we can only go on what Bjørn has told us, as it's not an open-source project at this time. From his description, the linchpin of the project seems to be Python code that queries various APIs for location, weather, and a few other details, then adds them to a pre-designed prompt for the Stable Diffusion API.
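Since the real code isn't public, the following is only a rough sketch of what "adding details to a pre-designed prompt" could look like. Everything in it is an assumption for illustration: the `Context` fields, the `PROMPT_TEMPLATE` wording, and the `build_prompt` helper are hypothetical names, not Paragraphica's own, and the API lookups are left out so the values are simply passed in.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Context:
    """Hypothetical bundle of the details the camera is said to look up."""
    address: str
    weather: str
    temperature_c: float
    taken_at: datetime
    nearby_places: list[str]


# Assumed template wording; the real pre-designed prompt is not published.
PROMPT_TEMPLATE = (
    "A photo taken at {address} in the {time_of_day}. "
    "The weather is {weather} at {temperature:.0f} degrees Celsius. "
    "Nearby there is {places}."
)


def time_of_day(moment: datetime) -> str:
    """Turn a timestamp into a coarse label a text prompt can use."""
    hour = moment.hour
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 17:
        return "afternoon"
    if 17 <= hour < 21:
        return "evening"
    return "night"


def build_prompt(ctx: Context) -> str:
    """Fill the fixed template with the looked-up context."""
    places = ", ".join(ctx.nearby_places) if ctx.nearby_places else "nothing of note"
    return PROMPT_TEMPLATE.format(
        address=ctx.address,
        time_of_day=time_of_day(ctx.taken_at),
        weather=ctx.weather,
        temperature=ctx.temperature_c,
        places=places,
    )


if __name__ == "__main__":
    # Placeholder values, not real lookup results.
    example = Context(
        address="1 Example Street",
        weather="cloudy",
        temperature_c=14.2,
        taken_at=datetime(2023, 5, 31, 9, 30),
        nearby_places=["a bakery", "a park"],
    )
    print(build_prompt(example))
```

The resulting string is what would then be sent to a text-to-image service; that call is omitted here because the article doesn't say which endpoint or client the project uses.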

The three encoders control parameters that influence the AI output: a radius, which sets how far in a circle around the user the camera looks for points of interest; a noise seed from 0.1 to 1 that is passed to Stable Diffusion; and a 'sharpness' dial, which controls how closely the AI tool sticks to the description given.
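To make the three dials a little more concrete, here is a minimal sketch of how dial positions might be turned into generation settings. Only the 0.1 to 1 noise range comes from the description above; the radius and sharpness ranges, the normalised 0 to 1 dial positions, and the names `scale`, `DialSettings`, and `read_dials` are all assumptions made up for this example.

```python
from dataclasses import dataclass


def scale(position: float, low: float, high: float) -> float:
    """Map a dial position in [0, 1] linearly onto [low, high], clamping out-of-range input."""
    position = min(max(position, 0.0), 1.0)
    return low + position * (high - low)


@dataclass
class DialSettings:
    radius_m: float   # how far around the user to search for points of interest
    noise: float      # value used to derive the noise seed
    sharpness: float  # how closely the image should follow the description


def read_dials(radius_pos: float, noise_pos: float, sharpness_pos: float) -> DialSettings:
    """Convert three normalised dial positions into settings."""
    return DialSettings(
        radius_m=scale(radius_pos, 10.0, 1000.0),   # assumed range in metres
        noise=scale(noise_pos, 0.1, 1.0),           # range quoted in the article
        sharpness=scale(sharpness_pos, 1.0, 20.0),  # assumed range
    )


if __name__ == "__main__":
    print(read_dials(0.5, 0.5, 0.5))
```

In Stable Diffusion terms, the 'sharpness' dial sounds a lot like a guidance scale, but that is a guess on my part rather than something the artist's description confirms.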

One aspect that wasn't covered in the video is the online version of Paragraphica, available via Bjørn's website. It's a paid tool, as the artist has to pay for the API access, but I decided to pay the €2 to get a 'roll of film' and give it a go. Here's a side-by-side result:

Now, I did choose the vintage effect, which may skew things a little, but Paragraphica's online version produced the left image, and the right is Google Street View for the location Paragraphica detected. Bearing in mind that the camera never 'saw' the area, it's pretty compelling!

Ultimately, this isn't meant to be an alternative to photography, and the artist is clear about this in the Twitter thread introducing the project:

"Thanks for all the amazing feedback ❤️❤️❤️ There are a lot of questions in the threads, so I like to clarify that this is a passion art project, with no intention of making a product or challenging photography. Rather it's questioning the role of AI in a time of creative tension"

Bjørn Karmann (@BjoernKarmann), May 31, 2023

It's a valid area of investigation that will only grow as AI influences more and more media creation. After all, this isn't meant to replace a photograph, but is an AI-generated photograph based on location data any less special than a photo taken by someone in a place you are not?

We used to gather around the fire and listen to folk tales about faraway places. Soon, whether we like it or not, AIs more powerful than we can ever understand will be showing us places that have never existed. I think I'm OK with that.
