Relic Cyberware

About the project

Bring some Cyberpunk to life and some Keanu to your ears. Arasaka would be proud.

Project info

Difficulty: Moderate

Platforms: Pi Supply, Raspberry Pi, TensorFlow, Pimoroni

Estimated time: 4 hours

License: GNU General Public License, version 3 or later (GPL3+)

Items used in this project

Hardware components


Software apps and online services

Plier, Cutting

Plier, Needle Nose

Multitool, Screwdriver

Solder Wire, Lead Free

fakeyou

Lakul

OpenAI Whisper

TensorFlow

Bot Engine

Chatbot 2022 Edition

PostgreSQL

Docker

Story

Your Body Can Be Chrome...

Warning: Some minor spoilers for Cyberpunk 2077 may lie ahead...

The goal of this project was to create wearable, Cyberpunk 2077-inspired Cyberware that puts an interactive AI voice in the wearer's head via earphones, powered by ChatGPT.

It is inspired by the Relic - a piece of technology within the game that stores the consciousness of someone and allows them to integrate and interact with someone who has the chip installed in their Cyberware.

In the game, a character called Johnny Silverhand (played by Keanu Reeves) inhabits the Relic and uses the tech to talk directly into your character's mind. He can also be seen, although only by the user, as he's essentially being projected in their mind.

I started work on it a couple of years back but it's taken quite some time and trial and error to get right in terms of hardware and software - it was originally using my Bot_Engine and Chatbot, until ChatGPT got released and I realised the obvious solution was to integrate that instead.

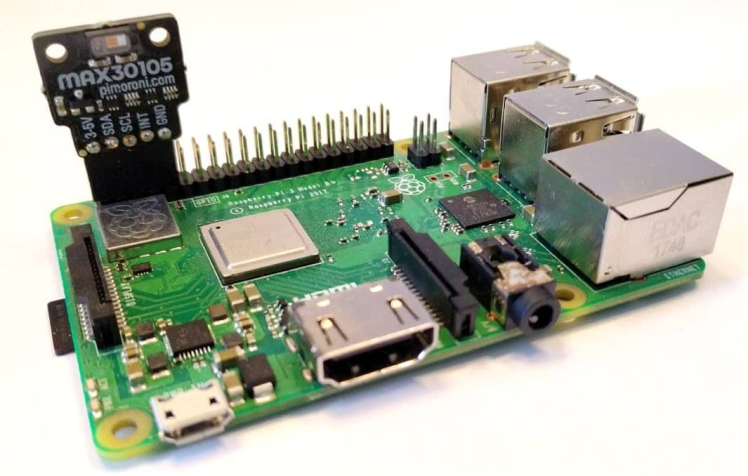

The goal was to have a MAX30105 sensor act as a heartbeat detector, sitting on the temple of the wearer's head. It will also be handy for additional responses if the user's heart rate goes higher or lower.

Quick Warning:

This code AND hardware should not be used for medical diagnosis. It's for fun/novelty use only, so bear that in mind while using it.

Also bear in mind that ChatGPT will respond with the style of whatever context you have set within config/chatgpt_config.py, so I can't be sure what it will say. And I can't take any responsibility for any other context that is set or what it says.

You’ll Follow This Breadcrumb Trail

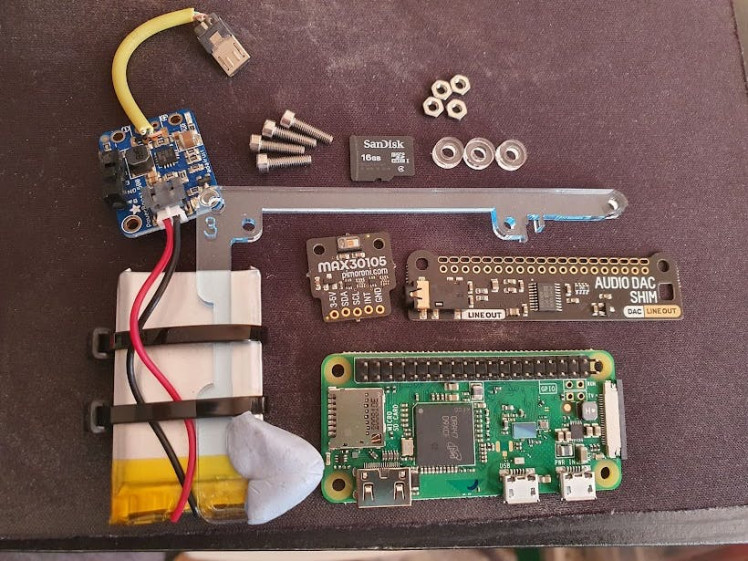

The key part of this was the Raspberry Pi Zero 2, which provides enough CPU power and RAM to run speech inference and some other things that the original Pi Zero couldn't manage, all in the same form factor.

I was originally trying to use a slice of a PiBow to hold it to my ear - which was fine if it was just the Pi Zero on its own but when you start adding in sensors, the case and especially battery tech it gets too heavy to stay on the head.

Where it all began.

So I had to find another solution; and that solution was a nice big metal hairband I got from Amazon.

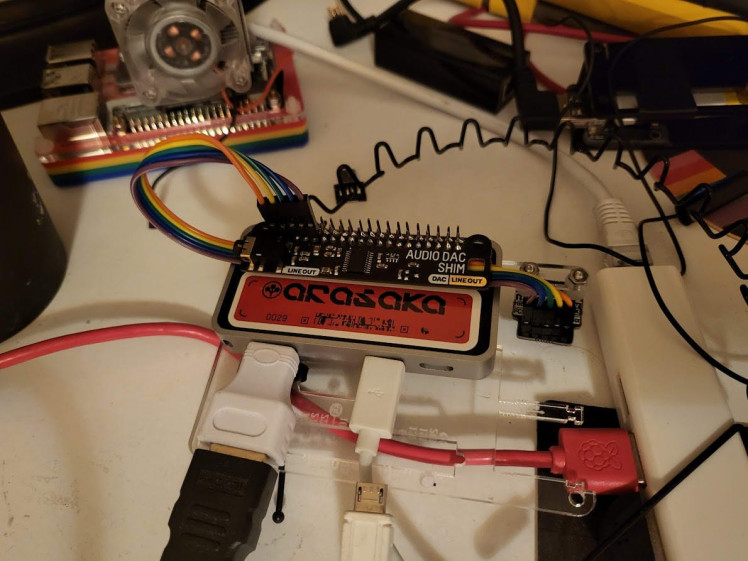

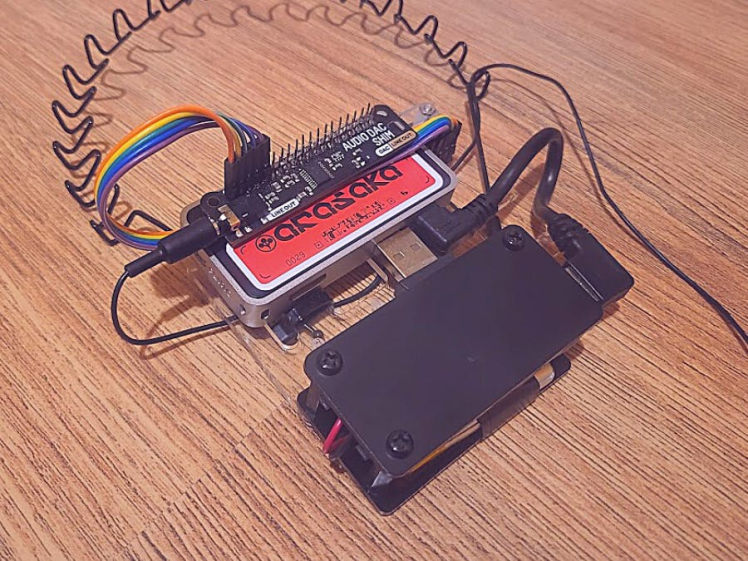

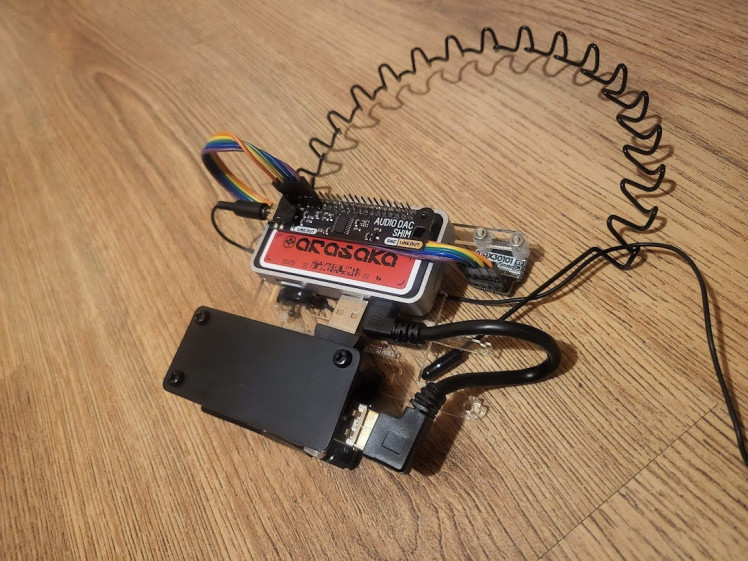

You can see I got a nice metal case, mounted the whole thing on a more substantial slice of a PiBow and added some extra stuff from a Pi Zero case in order to hold the battery and the LiPo Powerboost 500.

The MAX30105 sensor is hooked up at the front, facing inwards towards the wearer's head:

This is hooked up to the Pi relatively easily, as seen here:

I've just extended it out with some extra wiring.

The Pi and case mounted to the PiBow slice:

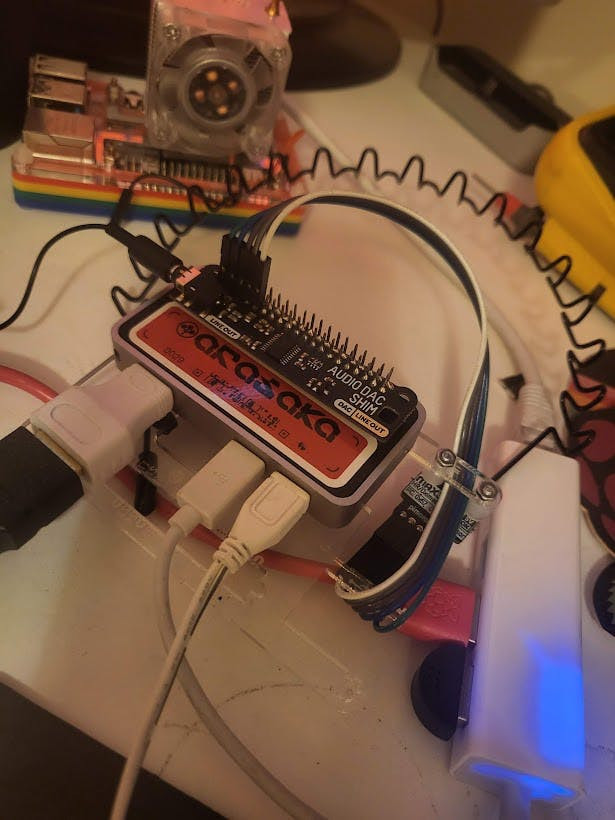

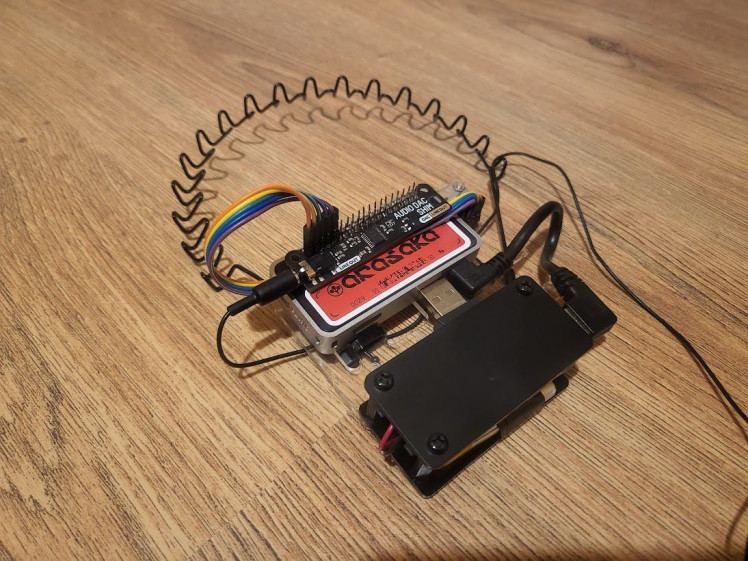

Here's the Audio DAC SHIM on the original headset. It would later have to be moved onto an extended header so that the sensor could be connected to the pins via jumper cables:

How the battery and main pieces hook up with an angled USB A to USB Micro cable:

The headpiece in its final form, also with an Arasaka sticker on it:

And a final bit of tidying up for the sensor & wires:

And the final final hardware with the USB mic plugged in with a USB Micro shim, the headband and the Headphones:

Lemme Pretend I Exist Sometimes, Ok?

The code can be found at my GitHub.

Check the code out with:

git clone --recursive https://github.com/LordofBone/Relic_Cyberwear.git

And you can ensure all submodules are updated with:

git submodule update --remote --recursive

It contains the new-ish OpenAI Whisper speech to text system, which is reasonably accurate while also working completely offline.

I have built my own integration for this, called Lakul, which enables some really nice and easy integration with my existing projects. I may do a full write-up here one day on this.

A thing to be aware of here is that Whisper sometimes gets things wrong, so what you say may not be what it infers. Whatever it transcribes will go to ChatGPT, so be careful - I of course take no responsibility for what you say to ChatGPT via this program.

I was also getting a bit tired of the classic e-speak, War Games style TTS I was using; as much as I love it - something a bit smoother was needed, so I am now using Nix-TTS; which gives a much more natural sounding voice with only a few glitches and weird pronunciations.

You can also use FakeYou for generating voices with this. It lives on a separate branch of the code; grab it with:

git clone --recursive --branch enhanced_tts https://github.com/LordofBone/Relic_Cyberwear.git

With this, the API can be used with FakeYou to generate a voice, for instance Keanu Reeves, and make a much more authentic Relic experience.

However, I've not included this in the main branch, and in the video I only use testing samples, as I am not quite sure of the implications of cranking ChatGPT responses pretending to be a Cyberpunk 2077 character into a TTS system that mimics a real person's voice.

If someone can give me some details on this, that would be great. I have seen people's Twitch streams using these voices for donations and stuff, so I guess it's fine?

Either way, make sure to read the T&Cs at FakeYou before signing up and don't do anything silly with it.

I take no responsibility for what anybody does with FakeYou via modifying this program, also be warned that ChatGPT may generate odd things to be passed into FakeYou, which also depends on what is said to ChatGPT.

Copy/rename the file config/fakeyou_config_template.py to config/fakeyou_config.py and add your own username/password and voice ID - you can find TTS IDs on the site here.

Also ensure this var under config/launch_config.py is set to True:

fakeyou_mode = True

For the ChatGPT integration, there was no official API while this was being made, so I am using the ChatGPT wrapper by mmabrouk. Although hilariously, literally while I was filming the video for this I got an email saying the API for ChatGPT and also Whisper was now available. Typical.

The link above has installation instructions but also links out to a video on the install here that goes over getting it working with Unity and on Windows and a write up here.

Essentially it uses web browser automation wrapped up in a python script to interact with ChatGPT.

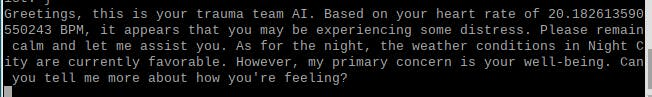

Here's the config for ChatGPT to give it a role and information from the heart beat sensor:

request_context = (
    "you are a trauma team ai from the game cyberpunk 2077 integrated into a cyberware chip in my head; "
    "reply to this message in the same tone and style as the character"
)

post_context = "also taking into account my heart rate bpm of ${heart_rate}, keeping the response under 500 characters"

So it essentially wraps any user input from the speech to text in this context, which gives it a certain character to act as for its responses. This can be configured to change the nature of the AI, as well as any extra variables for it to take into account.
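A minimal sketch of how that wrapping might look, assuming Python's `string.Template` is what fills the `${heart_rate}` placeholder. The helper name `build_prompt` and the way the pieces are joined are hypothetical and not taken from the repo:

```python
from string import Template

# Context strings as shown in the config above.
request_context = (
    "you are a trauma team ai from the game cyberpunk 2077 integrated into a cyberware chip in my head; "
    "reply to this message in the same tone and style as the character"
)
post_context = "also taking into account my heart rate bpm of ${heart_rate}, keeping the response under 500 characters"


def build_prompt(user_text: str, heart_rate: int) -> str:
    """Wrap the transcribed speech in the role context (hypothetical helper)."""
    # Assumption: the placeholder is filled with string.Template substitution.
    filled_post = Template(post_context).substitute(heart_rate=heart_rate)
    # Assumption: the real code may join these parts differently.
    return f"{request_context}: {user_text}; {filled_post}"


if __name__ == "__main__":
    print(build_prompt("how am I doing?", 72))
```

Swapping `request_context` for a different role changes the character without touching the rest of the flow.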

So set up a venv and install the requirements file (although I ended up having to install things as root because the ChatGPT wrapper required a root pip install. That's not an issue if you have an OS install specifically for this, but if you want to keep your root Python stuff clean, be aware of this):

sudo apt install virtualenv

virtualenv venv

source venv/bin/activate

pip install -r requirements.txt

And then set up the DAC SHIM per the instructions here:

git clone https://github.com/pimoroni/pirate-audio

cd pirate-audio/mopidy

sudo ./install.sh

To download all the required models for the TTS system, run the setup_models.py script:

python setup_models.py

The main code can be run with simply:

python main.py

And this of course can be set to launch on boot of the RPi.

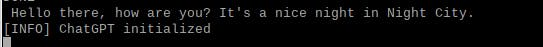

Essentially the code runs a loop where it calls on Whisper to listen, takes the user's voice input, wraps it in the context and sends it off to ChatGPT via the wrapper. It then waits for the response and, when it has it, passes it into the TTS system, then loops back around to listening.
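The shape of that loop can be sketched roughly as below. This is not the code from the repo: the four stages are passed in as plain callables with hypothetical names, so the sketch runs without a microphone, network connection or TTS model.

```python
from typing import Callable, Optional


def run_once(
    listen: Callable[[], str],
    wrap: Callable[[str], str],
    ask: Callable[[str], str],
    speak: Callable[[str], None],
) -> str:
    """One listen -> wrap -> ask -> speak pass; returns the reply text."""
    heard = listen()      # speech to text (Whisper in the project)
    prompt = wrap(heard)  # add the role context around the input
    reply = ask(prompt)   # blocks until the chatbot answers
    speak(reply)          # text to speech
    return reply


def run_loop(listen, wrap, ask, speak, max_turns: Optional[int] = None) -> int:
    """Repeat run_once; max_turns=None loops forever, 1 gives a single pass."""
    turns = 0
    while max_turns is None or turns < max_turns:
        run_once(listen, wrap, ask, speak)
        turns += 1
    return turns


if __name__ == "__main__":
    # Stand-in stubs in place of the real Whisper, ChatGPT and TTS calls.
    spoken = []
    run_loop(
        listen=lambda: "status report",
        wrap=lambda text: f"[context] {text}",
        ask=lambda prompt: f"echo: {prompt}",
        speak=spoken.append,
        max_turns=2,
    )
    print(spoken)
```

Keeping each stage behind a callable would also make it simple to swap the local speech to text or TTS for a hosted service later.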

And for shutting down and rebooting etc. there is a command checker, so if you say "shutdown" or "reboot" to it, it will follow the instruction, removing the need for a physical off button.
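A command checker along these lines could be as small as a dictionary lookup on the cleaned-up transcript. The sketch below is an illustration only: the `COMMANDS` mapping, the function name `check_command` and the exact system commands are assumptions, not what the repo uses.

```python
import string
from typing import List, Optional

# Hypothetical mapping of spoken keywords to system commands.
COMMANDS = {
    "shutdown": ["sudo", "shutdown", "-h", "now"],
    "reboot": ["sudo", "reboot"],
}


def check_command(heard: str) -> Optional[List[str]]:
    """Return the command for a spoken keyword, or None for normal speech."""
    # Transcripts may come back capitalised or with punctuation ("Shutdown.").
    cleaned = heard.strip().lower().strip(string.punctuation + " ")
    return COMMANDS.get(cleaned)


if __name__ == "__main__":
    for phrase in ("Shutdown.", "reboot", "what is my heart rate?"):
        print(repr(phrase), "->", check_command(phrase))
    # To act on a match for real: subprocess.run(command, check=False)
```

Matching only when the whole utterance is the keyword avoids shutting down mid-conversation just because the word "reboot" appears in a longer sentence.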

There is also a test mode that will just run the above once, rather than in a loop.

Also there is configuration for changing what the system says on boot.

Swap meat for chrome

So that video was a lot of fun to make (outside of finding bugs in real time during).

I found that responses over a certain number of characters would totally wreck Nix-TTS and cause it to crash out, but even shorter responses could sometimes result in it breaking. Some of the responses from the Keanu voice broke up a bit too, though, so it may be an issue with playsound rather than the TTS.

Asking ChatGPT to keep the responses under 500 characters seemed to help this although it still took a long time to generate the TTS locally. The Keanu voice is really good too, although it is a bit robotic at times, it still sounds really good.
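Since the 500 character limit is only a request in the prompt, a local guard could enforce it before the text reaches the TTS. The function below is a hypothetical sketch, not part of the repo: it trims at the last sentence end inside the limit and falls back to the last whole word.

```python
def cap_for_tts(text: str, limit: int = 500) -> str:
    """Trim text to at most `limit` characters, preferring a sentence end."""
    text = text.strip()
    if len(text) <= limit:
        return text
    head = text[:limit]
    # Look for the last sentence-ending mark inside the allowed window.
    cut = max(head.rfind(mark) for mark in ".!?")
    if cut > 0:
        return head[: cut + 1]
    # No sentence end found: fall back to the last whole word.
    space = head.rfind(" ")
    return head[:space] if space > 0 else head


if __name__ == "__main__":
    reply = "Vitals are stable. Threat level is low. " * 20
    print(len(reply), "->", len(cap_for_tts(reply)))
```

This would not fix crashes on shorter responses, but it would stop an over-long reply from reaching the TTS in the first place.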

As heard in the video, the responses that ChatGPT makes when it's in the role are really cool. It's able to fully act out responses without any of the usual polite stuff it applies. Very cool, and it actually feels like you are getting responses from the character.

So the main issue here is that the Pi Zero 2, while relatively powerful with a quad-core Arm SoC and 512 MB of RAM, still takes its time to process certain things. The speech to text can take a couple of minutes to process, the TTS can take a while and even the ChatGPT wrapper can take a bit of processing.

So the interaction isn't exactly real-time, but I am hoping for a Pi Zero 3 in future with some beefier h/w, or maybe even another small form factor device that I can use for this. A Pi 4 would be significantly faster, but it would be much more difficult to implement as headwear.

Here are some shots of it on my head along with a Cyberpunk jacket:

And some extra close ups:

Even just wearing it around with the Cyberpunk jacket on is so much fun, as you can see in the video. Great stuff.

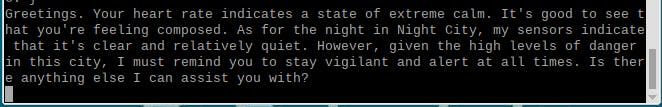

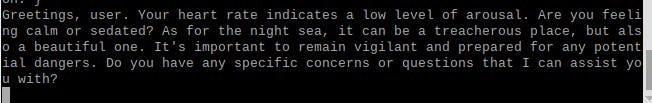

And here are some other responses outside of the video while I was wearing the Cyberware on my head...

Speech to Text input:

Text to Speech output from ChatGPT:

Sometimes the Speech to Text gets things a little wrong:

And the ChatGPT output runs with it:

And another output which shows the potential inaccuracy of the heart rate sensor:

I Just Want The World To Know I Was Here.

So overall, this works; but it is quite slow on the STT side of things - even when the STT is running on its own, so hopefully, in future a new RPi Zero 3 or a Zero 2 with a Gig of RAM comes out to help speed things up. I think a lot of the speed issues are caused by the lack of RAM causing paging but I also noticed this same issue with the Terminator Skull which ran on the Pi 4 8GB - while it was also threading and running other things.

Luckily the platform I have built this on should allow for easy upgrades, hopefully to the point where I can swap the Pi while keeping the same case that attaches to the main Cyberware. So in future this may be able to run conversations in real time, and the Cyberware can be used to create all kinds of AI characters and assistants to interact with.

The other issue is with the ChatGPT wrapper I was using, in that every response given will start a new conversation with ChatGPT, rather than continuing the same thread - but I think there is code within that wrapper that allows for this; also with the official API out now it doesn't matter too much.

Also, I could greatly reduce the speech to text processing time by using the official API for that as well. So by using FakeYou, OpenAI's new Whisper API and the ChatGPT API I can greatly speed this up. Keep an eye out for an enhancement on this in future.

It would be cool to also add a visual element somehow - if I could crack how to get a properly focused screen to work nicely in the wearer's eye. I've managed this before with the EDITH glasses and the Iron Man helmet, but they weren't big or detailed enough to show complex things beyond text, so this is an area that requires further R&D.

Eventually the updates and bug fixes added to this will get it to a better state and also be put into the eventual Relic Cyberware 2.0.

I think it is quite fitting, though, that this took ages to build and is quite buggy and slow and needs loads of updating - given that's how Cyberpunk itself was released back in 2020.

Let me know what you think and stick around for future projects! I am going to be looking into hooking this context/role based ChatGPT system into other projects such as my Terminator Skull.

Give yourself time. Ideas'll come. Life'll shake you, roll you, maybe embrace you.
