Presentation Controller for PowerPoint with the Tactigon Skin

About the project

We made this project to show how you can integrate the T-Skin into everyday tasks, making them more interesting and intuitive.

Project info

Difficulty: Moderate

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

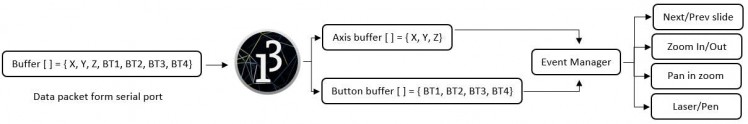


Story

Introduction

Ever wondered how to control your PowerPoint presentation in a more natural way than with those little TV-remote-like controllers? Well, we’ve got you covered! Here’s how you can use the T-Skin as a PPT controller with gesture control and support for presentation tools. You can move through slides, zoom in and out, pan across zoomed slides, and use presentation tools such as the laser pointer and the pen.

Software Architecture

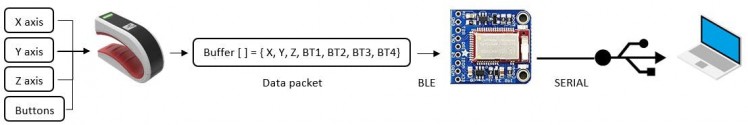

Here is a small diagram showing the data flow in this project:

Reading and sending of gyroscope and button state


T-Skin

The T-Skin gathers its gyroscope readings and button states and packs them into a data packet, which is sent over Bluetooth to a BLE-to-USB serial converter connected to a PC. This happens 30 times per second to keep the gesture tracking smooth.

Here you can see how the data is packed up for sending:

const uint8_t BUFF_SIZE = 20;

char buffData[BUFF_SIZE];

snprintf(buffData, BUFF_SIZE, "%04d %04d %04d %01d%01d%01d\n", (int)(rollTmp * 10), (int)(pitchTmp * 10), (int)((yaw + 180) * 10), gpp1.read(), gpp2.read(), gpp3.read());

Serial.println(buffData);

bleManager.writeToPeripheral((unsigned char *)buffData, BUFF_SIZE);

First we declare a char array named buffData with a size of 20 and use snprintf to fill it with the three axis values (roll, pitch and yaw) and the button states. Then we send the buffer over BLE with bleManager.writeToPeripheral.
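To make the 20-byte budget concrete, the same format string can be reproduced off-device. The following Java sketch is only an illustration: it assumes each angle field is a non-negative value in tenths of a degree with at most four digits, and that each button reads as 0 or 1. The class and method names are hypothetical.

```java
import java.util.Locale;

// Hypothetical helper that mirrors the firmware's snprintf format in Java.
class PacketLayout {
    // Same layout as the firmware: three 4-digit fields, three button digits, newline.
    static String format(int roll10, int pitch10, int yaw10, int b1, int b2, int b3) {
        return String.format(Locale.ROOT, "%04d %04d %04d %01d%01d%01d\n",
                roll10, pitch10, yaw10, b1, b2, b3);
    }

    public static void main(String[] args) {
        String packet = format(1800, 1795, 3599, 0, 1, 0);
        // 4+1+4+1+4+1+3+1 = 19 characters, which leaves one byte
        // of the 20-byte C buffer for the terminating '\0'.
        System.out.println(packet.length());
        System.out.print(packet);
    }
}
```

Under these assumptions the packet is always 19 characters long. A negative value or one above 9999 would widen its field, and snprintf would then truncate the end of the packet to fit the buffer.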

Processing

Data parsing


Once a data packet is delivered to the PC, a Processing application collects it and parses it, separating the axis data from the button state data for easier manipulation later on. The process is repeated as soon as a new data packet is received.

Here you can see the data parsing operation:

roll = getNormalized(((Float.parseFloat(input.split(" ")[0]) / 10) - 180) - rollZero);

pitch = getNormalized(((Float.parseFloat(input.split(" ")[1]) / 10) - 180) - pitchZero);

yaw = getNormalized(((Float.parseFloat(input.split(" ")[2]) / 10) - 180) - yawZero);

buttonsString = input.split(" ")[3];

We store each angle and the button state in its own variable. To do so we use Float.parseFloat, which takes a string such as "1234" and returns the corresponding float (in this example 1234). The value is then divided by 10, shifted by 180 and corrected by the stored zero position before it goes through a normalization function called getNormalized, which adjusts it so that the data is interpreted correctly.
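The parsing step can also be sketched as a standalone Java class that splits the packet only once. The body of getNormalized is not shown in this article, so the version below is an assumption: it wraps an angle into the range [-180, 180). The class name TSkinPacket, its fields and the helper toAngle are hypothetical.

```java
// Sketch of the parsing step; names other than getNormalized are hypothetical.
class TSkinPacket {
    final float roll, pitch, yaw;
    final String buttons;

    TSkinPacket(float roll, float pitch, float yaw, String buttons) {
        this.roll = roll;
        this.pitch = pitch;
        this.yaw = yaw;
        this.buttons = buttons;
    }

    // ASSUMPTION: the article does not show getNormalized; here it wraps an
    // angle in degrees into the range [-180, 180).
    static float getNormalized(float angle) {
        float wrapped = (angle + 180f) % 360f;
        if (wrapped < 0f) {
            wrapped += 360f;
        }
        return wrapped - 180f;
    }

    // Converts one raw field (tenths of a degree, offset by 180) into degrees
    // relative to the stored zero position.
    static float toAngle(String field, float zero) {
        return getNormalized(Float.parseFloat(field) / 10f - 180f - zero);
    }

    static TSkinPacket parse(String input, float rollZero, float pitchZero, float yawZero) {
        // trim() drops the trailing newline before splitting on spaces.
        String[] fields = input.trim().split(" ");
        if (fields.length != 4) {
            throw new IllegalArgumentException("malformed packet: " + input);
        }
        return new TSkinPacket(
                toAngle(fields[0], rollZero),
                toAngle(fields[1], pitchZero),
                toAngle(fields[2], yawZero),
                fields[3]);
    }

    public static void main(String[] args) {
        TSkinPacket p = parse("1800 2700 0900 010\n", 0f, 0f, 0f);
        System.out.println(p.roll + " " + p.pitch + " " + p.yaw + " " + p.buttons);
    }
}
```

Checking the field count before indexing means a truncated packet raises a clear error instead of an ArrayIndexOutOfBoundsException.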

Once the data is separated, an event manager checks for changes in the axis values and, if a certain threshold is exceeded, triggers the corresponding command: next/previous slide, zoom in/out, laser pointer activation and so on.

Here is an example of one of those functions:

private Robot r;

int event = ppModeGestureStateMachine(pitch);

if(event == 2){

println("zoom in");

r.keyPress(KeyEvent.VK_CONTROL);

r.keyPress(KeyEvent.VK_PLUS);

r.keyRelease(KeyEvent.VK_PLUS);

r.keyPress(KeyEvent.VK_PLUS);

r.keyRelease(KeyEvent.VK_PLUS);

r.keyPress(KeyEvent.VK_PLUS);

r.keyRelease(KeyEvent.VK_PLUS);

r.keyRelease(KeyEvent.VK_CONTROL);

delay(500);

}

In this snippet the zoom-in command is executed after a quick check on how the user has moved the T-Skin. The check is done by the ppModeGestureStateMachine() function which, given one of the principal axes, returns an integer corresponding to the rotation direction. The zoom itself is performed by simulating keystrokes (here we zoom into the slide three times).
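The body of ppModeGestureStateMachine() is not listed here, so the following Java sketch only illustrates how a threshold-based detector of this kind could work. Everything in it is an assumption: the class name, the trigger and release thresholds, the re-arm behaviour, and the meaning of return value 1 (the article only shows that 2 triggers a zoom in).

```java
// Hypothetical threshold-based gesture detector; not the project's actual code.
class GestureStateMachine {
    static final int NONE = 0;      // no event on this update
    static final int NEGATIVE = 1;  // ASSUMPTION: rotation past -trigger
    static final int POSITIVE = 2;  // rotation past +trigger (zoom in above)

    private final float trigger;  // degrees needed to fire an event
    private final float release;  // degrees below which the detector re-arms
    private boolean armed = true;

    GestureStateMachine(float trigger, float release) {
        if (release >= trigger) {
            throw new IllegalArgumentException("release must be below trigger");
        }
        this.trigger = trigger;
        this.release = release;
    }

    // Feed one normalized axis value; returns an event at most once per gesture.
    int update(float angle) {
        if (armed) {
            if (angle > trigger) {
                armed = false;
                return POSITIVE;
            }
            if (angle < -trigger) {
                armed = false;
                return NEGATIVE;
            }
        } else if (Math.abs(angle) < release) {
            // Hand is back near the neutral position: allow the next gesture.
            armed = true;
        }
        return NONE;
    }

    public static void main(String[] args) {
        GestureStateMachine sm = new GestureStateMachine(30f, 10f);
        float[] samples = {5f, 35f, 40f, 5f, -35f};
        for (float s : samples) {
            System.out.println(s + " -> " + sm.update(s));
        }
    }
}
```

Requiring the hand to return near neutral before firing again serves a purpose similar to the delay(500) call in the snippet above: one tilt should not produce a burst of repeated commands.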

The following shows how a circle can be "drawn" on screen with the laser pointer tool:

switch(i)

{

case BUTTON_3:

mouse = MouseInfo.getPointerInfo().getLocation();

r.mouseMove(startcircleX, startcircleY);

r.keyPress(KeyEvent.VK_CONTROL);

r.keyPress(KeyEvent.VK_L);

r.keyRelease(KeyEvent.VK_L);

r.keyRelease(KeyEvent.VK_CONTROL);

for (step = 0; step < maxstep; step++) {

float t = 2 * PI * step / maxstep;

int X = (int)(circleX + radius * cos(t));

int Y = (int)(circleY + radius * sin(t));

r.mouseMove(X, Y);

delay(35);

}

r.keyPress(KeyEvent.VK_CONTROL);

r.keyPress(KeyEvent.VK_L);

r.keyRelease(KeyEvent.VK_L);

r.keyRelease(KeyEvent.VK_CONTROL);

delay(500);

break;

default:

break;

}

In this snippet the drawing starts when the button labeled BUTTON_3 is pressed. First we move the mouse pointer to the start point of the circle, then we activate the laser tool with the corresponding keystroke (Ctrl+L). Inside the for loop, the circle coordinates are calculated and used to move the pointer on screen. When the circle is complete, we deactivate the laser tool with the same keystroke combination.
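The coordinate calculation in the loop can be isolated into a pure function, which makes it easy to check without moving the real pointer. This is a minimal sketch under assumptions: the class CirclePath and its method are hypothetical, and it rounds to the nearest pixel instead of using the truncating cast from the snippet above.

```java
// Hypothetical pure helper that precomputes the pointer path for the circle.
class CirclePath {
    // Returns maxStep points as {x, y} pairs, evenly spaced around the circle.
    static int[][] points(int centerX, int centerY, int radius, int maxStep) {
        int[][] pts = new int[maxStep][2];
        for (int step = 0; step < maxStep; step++) {
            double t = 2 * Math.PI * step / maxStep;
            // Rounding avoids losing a pixel when floating-point error puts
            // a coordinate just below a whole number.
            pts[step][0] = (int) Math.round(centerX + radius * Math.cos(t));
            pts[step][1] = (int) Math.round(centerY + radius * Math.sin(t));
        }
        return pts;
    }

    public static void main(String[] args) {
        for (int[] p : points(100, 200, 50, 4)) {
            System.out.println(p[0] + ", " + p[1]);
        }
    }
}
```

Each returned pair could then be passed to r.mouseMove(p[0], p[1]) with a short delay between steps, as in the loop above.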

To avoid unintentional movements, one of the four buttons on the T-Skin is dedicated to activating the gesture control function.
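On the PC side, this gating could be expressed as a check on the button field of the packet before any gesture is evaluated. The sketch below is hypothetical throughout: the article does not say which button enables gestures or whether a pressed button reads as 1, so both are assumptions, as are the class and method names.

```java
// Hypothetical decoding of the button field from the packet, e.g. "010".
class ButtonState {
    // ASSUMPTION: index 0 is the gesture-enable button.
    static final int GESTURE_ENABLE = 0;

    // ASSUMPTION: '1' means pressed; the real polarity depends on the hardware.
    static boolean isPressed(String buttons, int index) {
        return index >= 0 && index < buttons.length() && buttons.charAt(index) == '1';
    }

    // Gestures are only evaluated while the enable button is held down.
    static boolean gesturesEnabled(String buttons) {
        return isPressed(buttons, GESTURE_ENABLE);
    }

    public static void main(String[] args) {
        System.out.println(gesturesEnabled("100"));
        System.out.println(gesturesEnabled("011"));
    }
}
```

With a check like this in place, axis values would simply be ignored while the enable button is released, so ordinary hand movements do not change slides.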

Conclusions

With the T-Skin you can now really captivate your audience during your next presentation. If you want, you could integrate the range extender shown in the previous article so you can navigate through slides even in a big, crowded presentation room!


Credits

The Tactigon

The Tactigon is a brand of Next Industries Milano. Next Industries was born of a passion for developing devices and sensors suited to IoT technology. Our team is made up of experts in movement detection for large structural monitoring systems, software development and wearable devices.
