IoT AI-assisted Deep Algae Bloom Detector w/ Blues Wireless

About the project

Take deep algae images w/ a borescope, collect water quality data, train a model, and get informed of the results over WhatsApp via Notecard

Project info

Difficulty: Expert

Platforms: Adafruit, Autodesk, DFRobot, Raspberry Pi, SparkFun, Twilio, Edge Impulse, Blues Wireless

Estimated time: 1 week

License: Creative Commons Attribution CC BY version 4.0 or later (CC BY 4+)

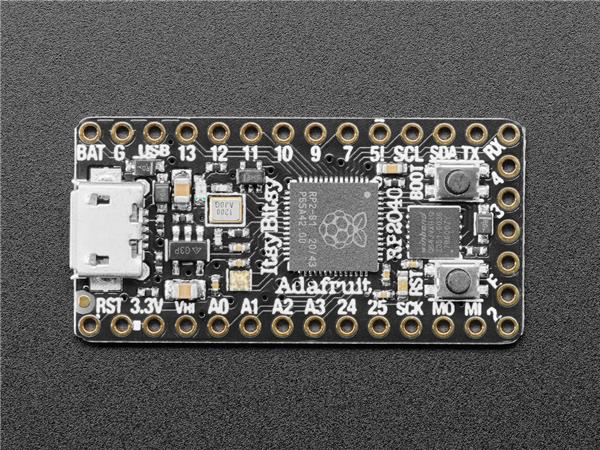

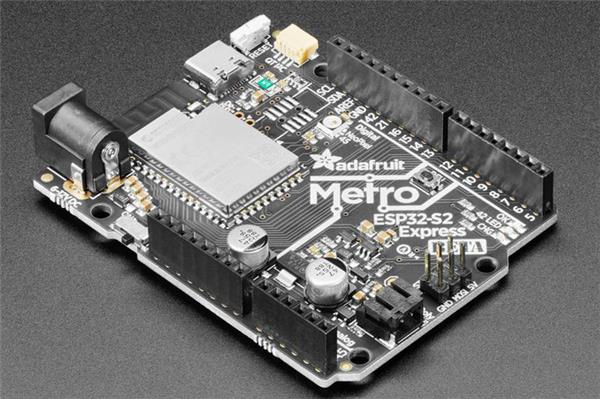

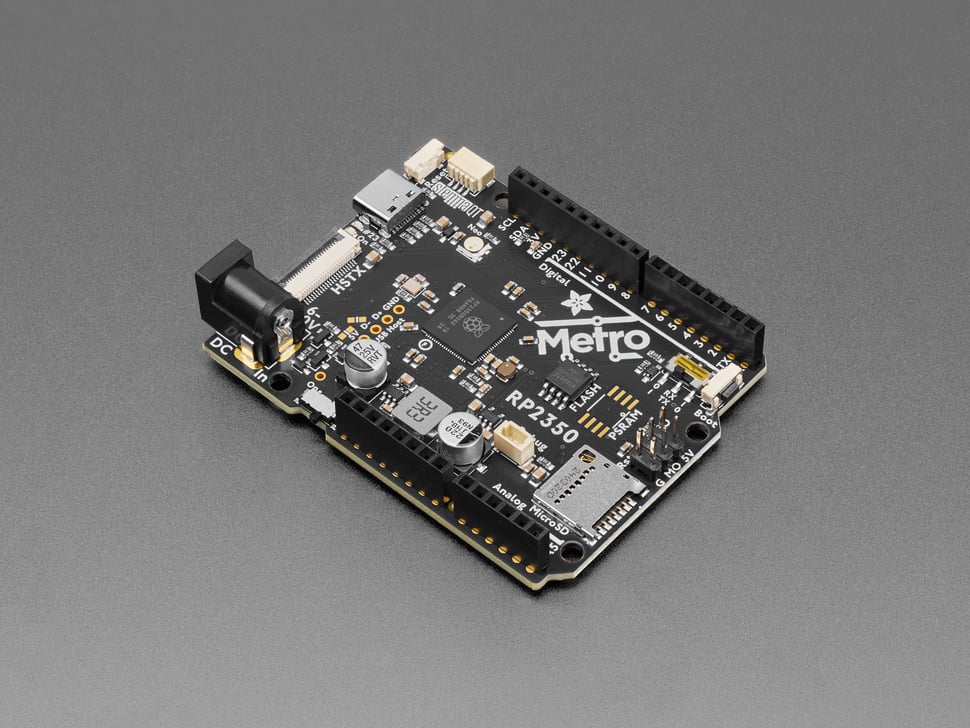

Items used in this project

Hardware components


Software apps and online services

Story

In technical terms, algae can refer to different types of aquatic photosynthetic organisms, both macroscopic multicellular organisms like seaweed and microscopic unicellular organisms like cyanobacteria[1]. However, algae (or algal) bloom commonly refers to the rapid growth of microscopic unicellular algae. Although unicellular algae are mostly innocuous and naturally found in bodies of water like oceans, lakes, and rivers, some algae types can produce hazardous toxins when stimulated by environmental factors such as light, temperature, and increasing nutrient levels[2]. Since algal toxins can harm marine life and the wider ecosystem, algal bloom threatens both people and endangered species, especially given widespread water pollution.

A harmful algal bloom (HAB) occurs when toxin-secreting algae grow exponentially due to ample nutrients from fertilizers, industrial effluent, or sewage waste brought by runoff in the body of water. Most of the time, the excessive algae growth becomes discernible in several weeks and can be green, blue-green, red, or brown, depending on the type of algae. Therefore, an algae bloom can only be detected visually in several weeks if a prewarning system is not available. Even though there is a prewarning algae bloom system for the surface of a body of water, algal bloom can occur beneath the surface due to discharged industrial sediment and dregs.

Since there are no early visual signs, it is harder to detect deep algal bloom before it becomes entrenched in the body of water. Until deep algal bloom is detected, potentially frequent and low-level exposure to HAB toxins can have severe effects on marine life and pose health risks for people. When deep algae bloom is left unattended, its excessive growth may block sunlight from reaching other organisms and cause oxygen depletion. Unfortunately, uncontrolled algae accumulation can lead to mass extinctions caused by eutrophication.

After scrutinizing recent research papers on deep algae bloom, I noticed there are very few devices focusing on detecting deep algal bloom. Therefore, I decided to build a budget-friendly and easy-to-use prewarning system to predict potential deep algal bloom with object detection in the hope of preventing the hazardous effects on marine life and the ecosystem.

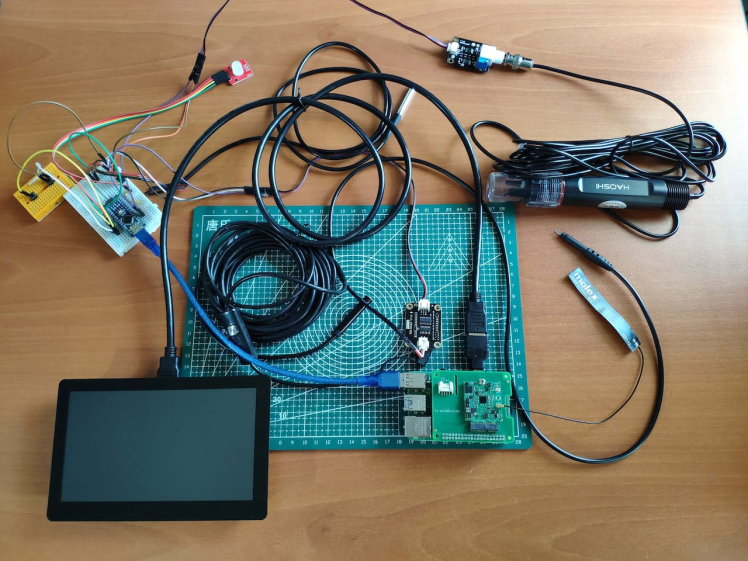

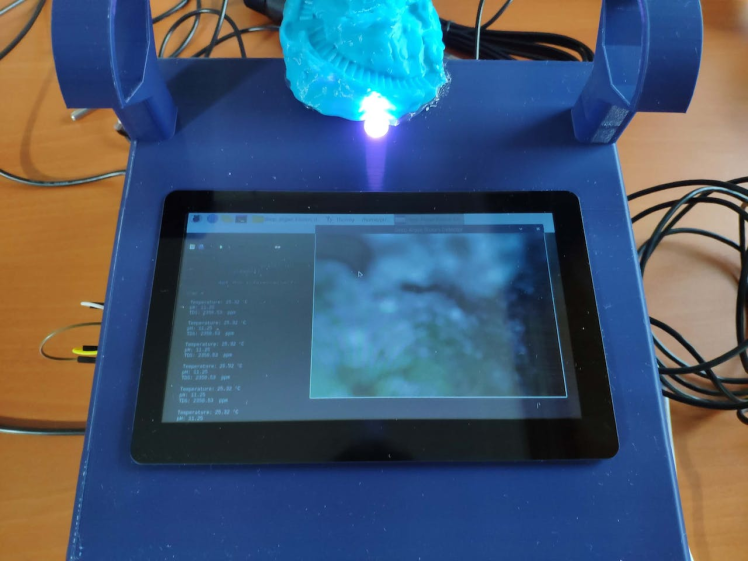

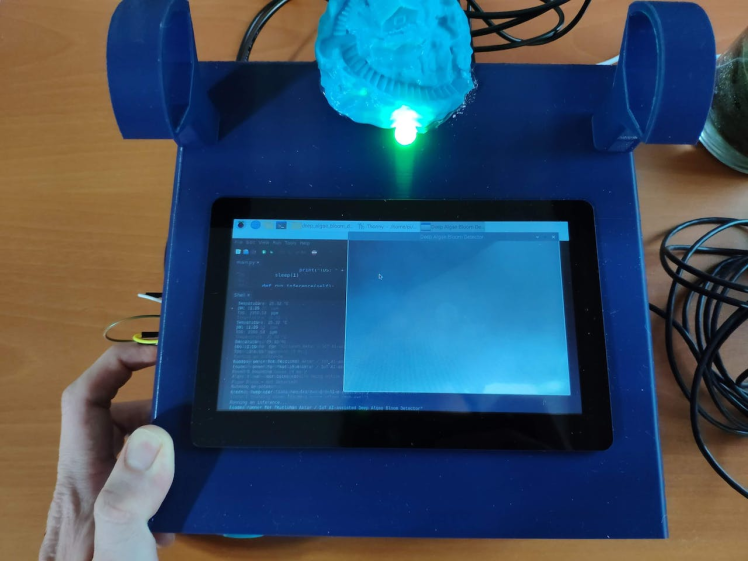

To detect deep algae bloom, I needed to collect data from the depth of water bodies in order to train my object detection model with notable validity. Therefore, I decided to utilize a borescope camera connected to my Raspberry Pi 4 to capture images of underwater algal bloom. Since Notecard provides cellular connectivity and a secure cloud service (Notehub.io) for storing or redirecting incoming information, I decided to employ Notecard with the Notecarrier Pi Hat to make the device able to collect data, run the object detection model outdoors, and inform the user of the detection results.

After completing my data set by taking pictures of existing deep algae bloom, I built my object detection model with Edge Impulse to predict potential algal bloom. I trained my model with Edge Impulse FOMO (Faster Objects, More Objects), a novel machine learning algorithm that brings object detection to highly constrained devices. Since Edge Impulse is compatible with nearly all microcontrollers and development boards, I did not encounter any issues while uploading and running my model on Raspberry Pi.

After training and testing my object detection (FOMO) model, I deployed and uploaded the model on Raspberry Pi as a Linux (ARMv7) application (.eim). Therefore, the device is capable of detecting deep algal bloom by running the model independently without any additional procedures or latency.

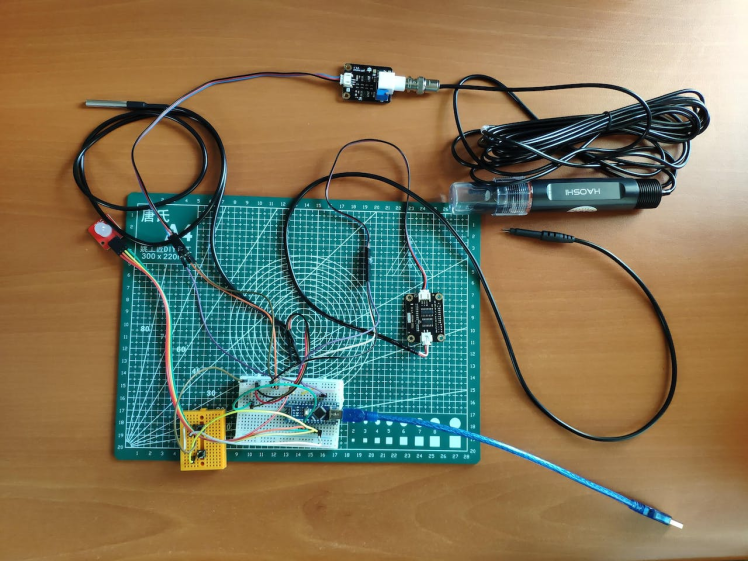

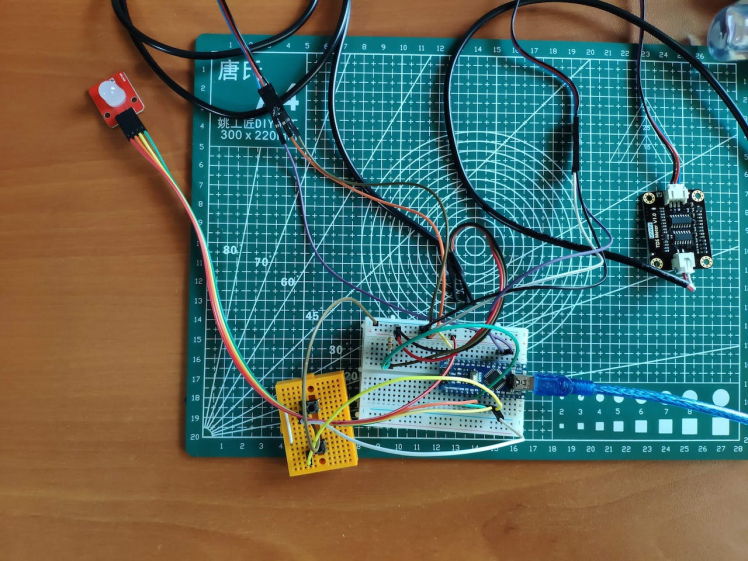

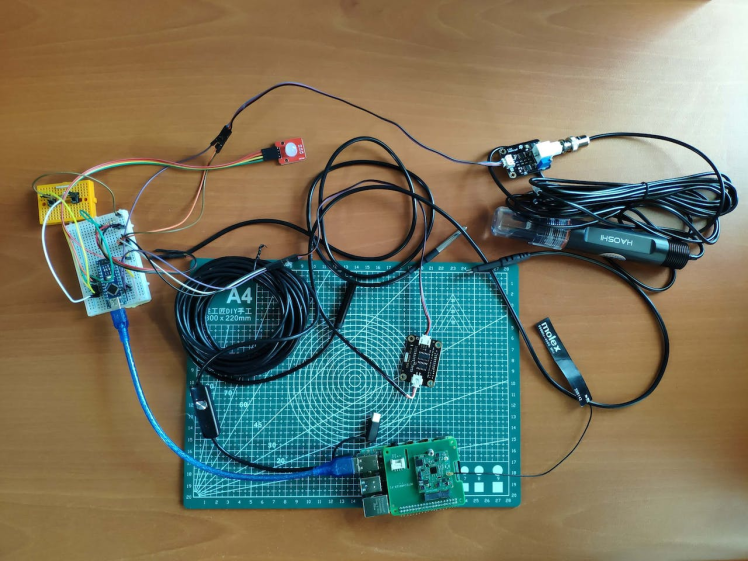

As mentioned earlier, warmer water temperatures and excessive nutrients stimulate hazardous algae bloom. Therefore, I decided to collect water quality data and add the collected information to the model detection result as a prescient warning for a potential algal bloom. To obtain pH, TDS (total dissolved solids), and water temperature measurements, I connected DFRobot water quality sensors and a DS18B20 waterproof temperature sensor to Arduino Nano since Raspberry Pi pins are occupied by the Notecarrier. Then, I utilized Arduino Nano to transfer the collected water quality data to Raspberry Pi via serial communication. Also, I connected two control buttons to Arduino Nano to send commands to Raspberry Pi via serial communication.

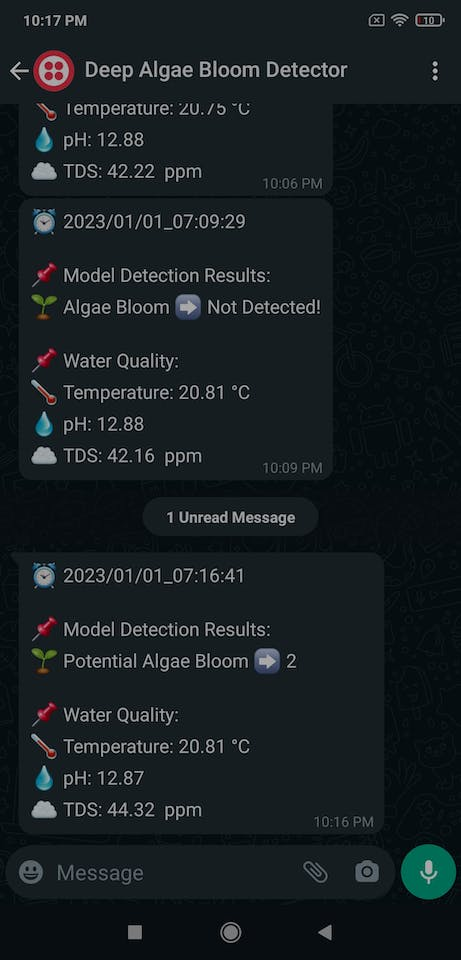

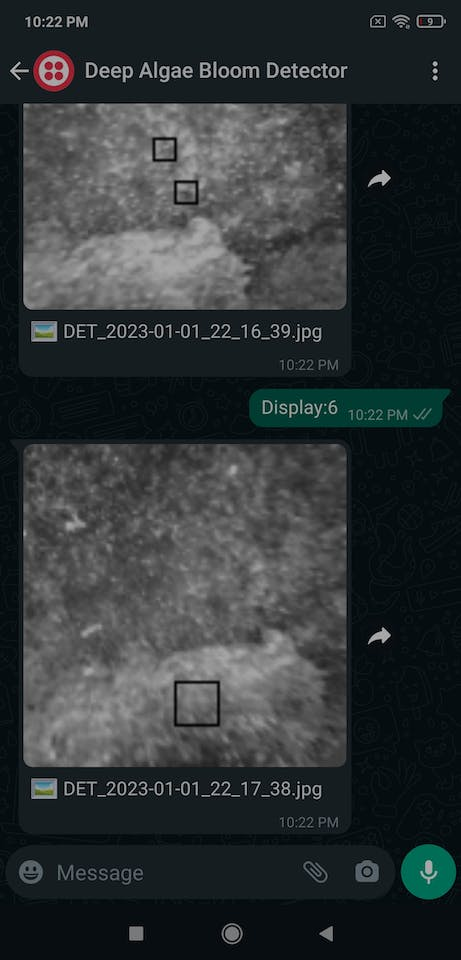

Since I focused on building a full-fledged AIoT device detecting deep algal bloom and providing WhatsApp communication via cellular connectivity, I decided to develop a webhook from scratch to inform the user of the model detection results with the collected water quality data and obtain given commands regarding the captured model detection images via WhatsApp.

This complementing webhook utilizes Twilio's WhatsApp API to send the incoming information transferred by Notecard over Notehub.io to the verified phone and obtain the given commands from the verified phone regarding the model detection images saved on the server. Also, the webhook processes the model detection images transferred by Raspberry Pi simultaneously via HTTP POST requests.

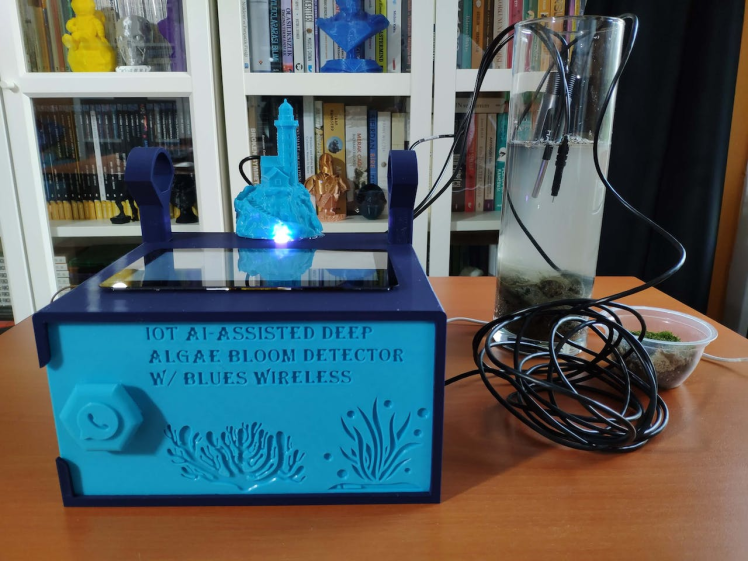

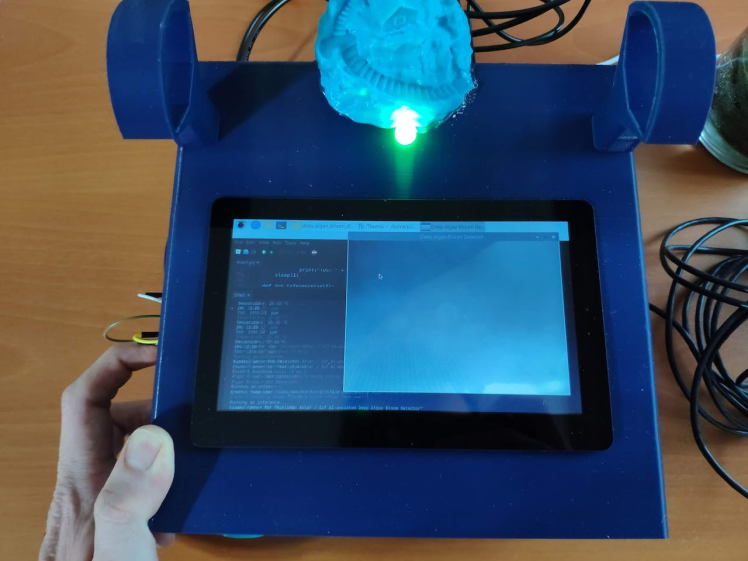

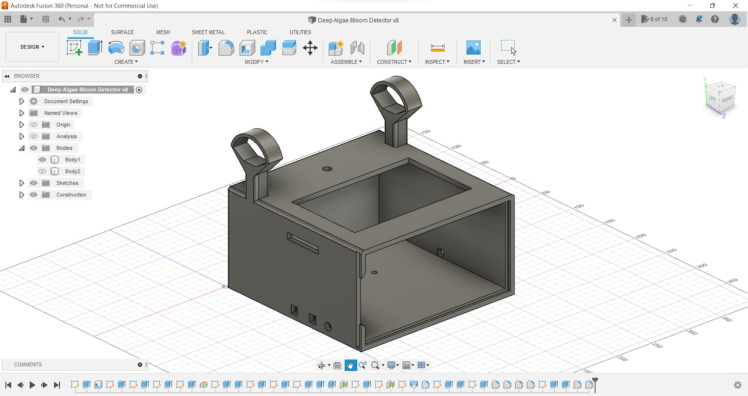

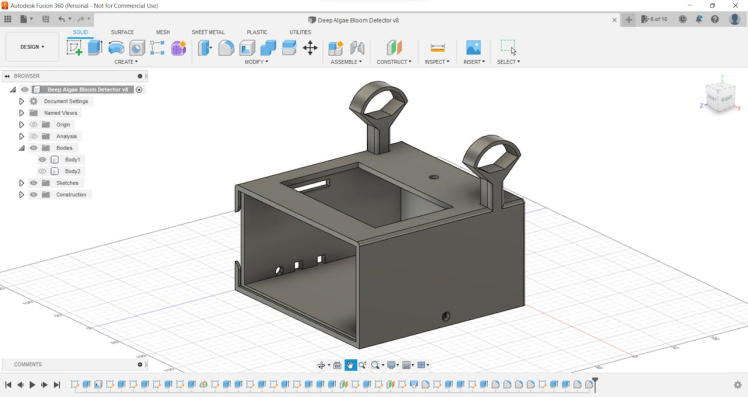

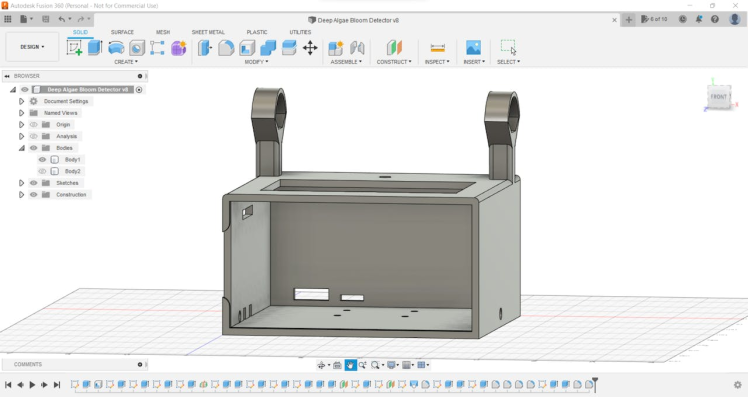

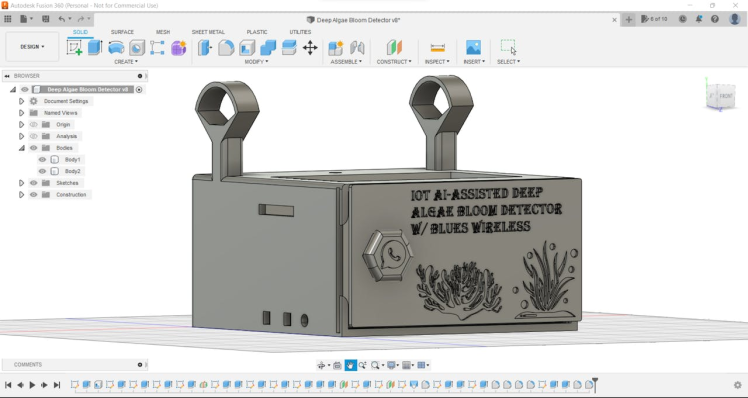

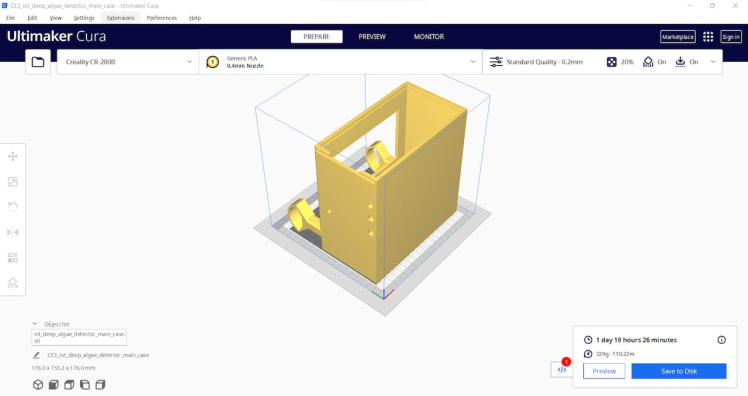

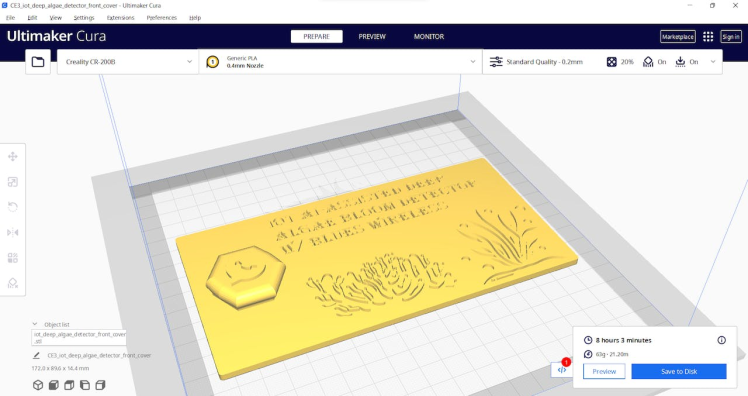

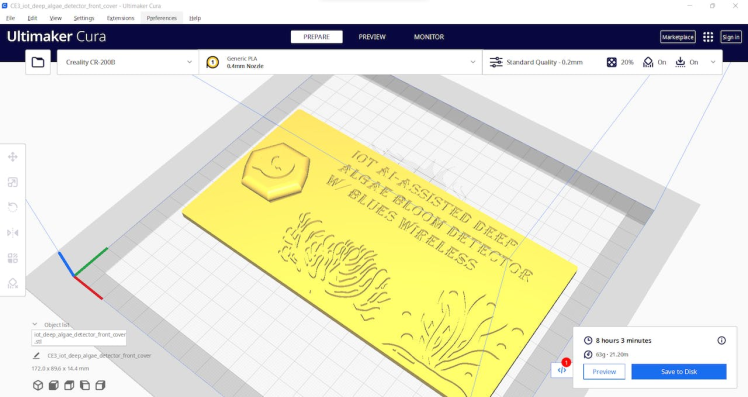

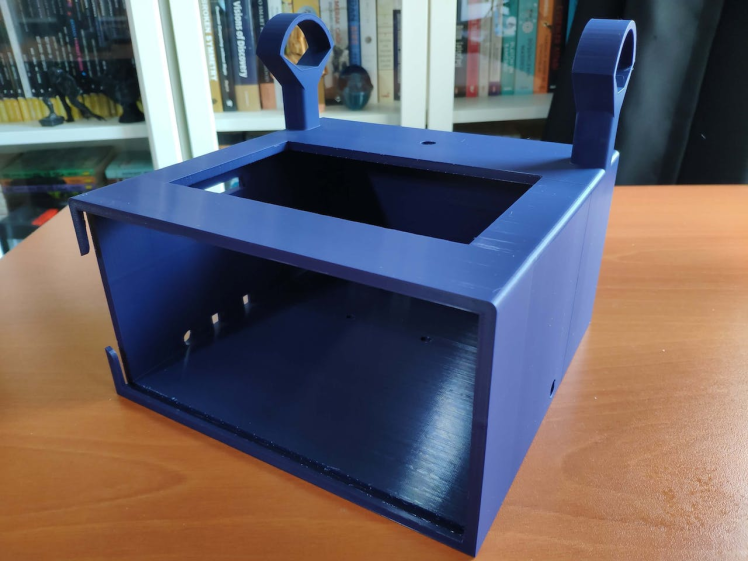

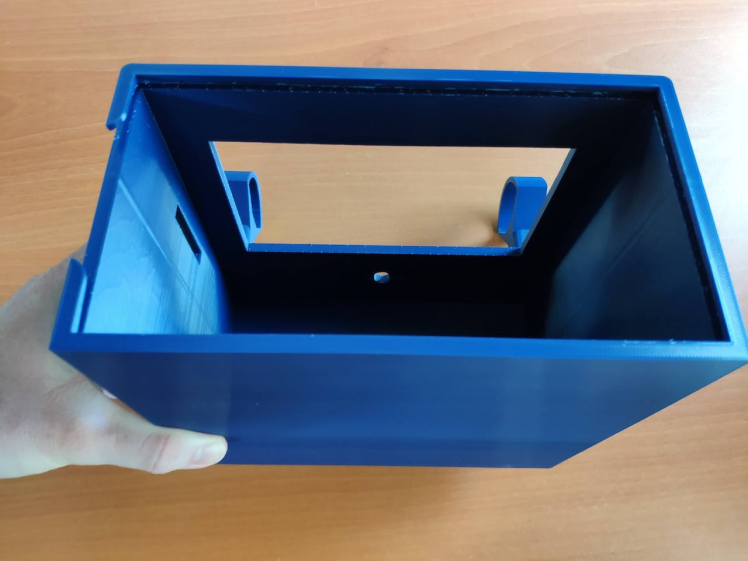

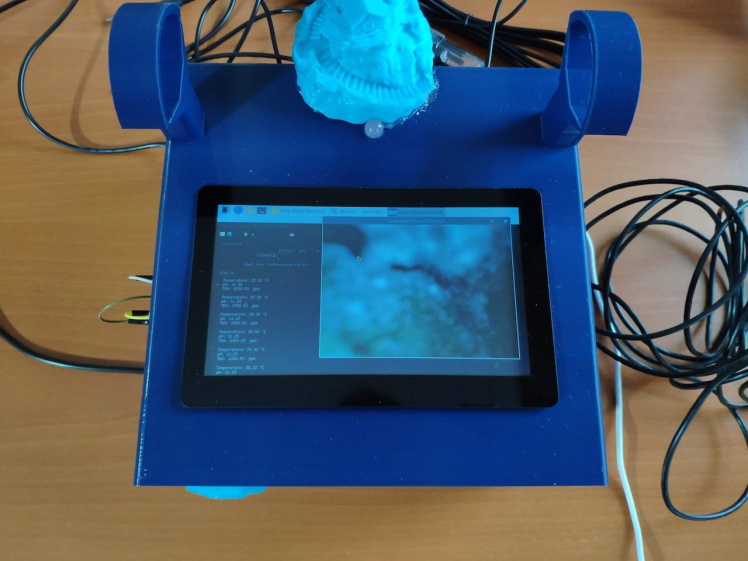

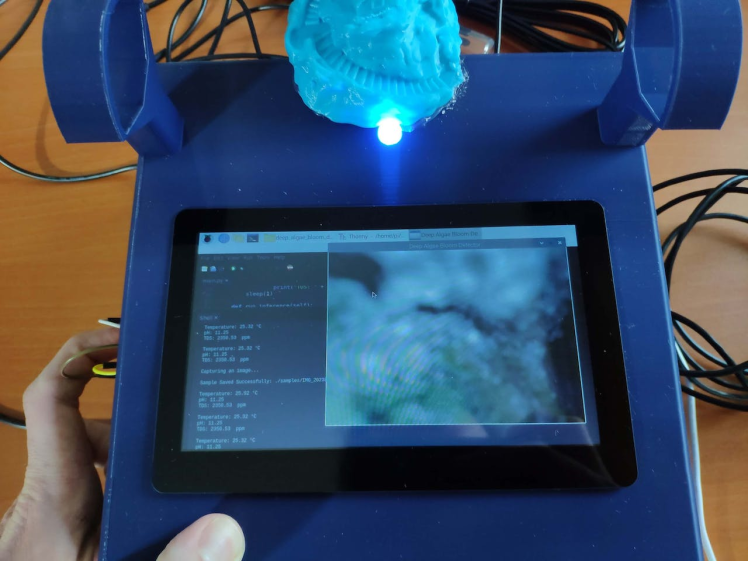

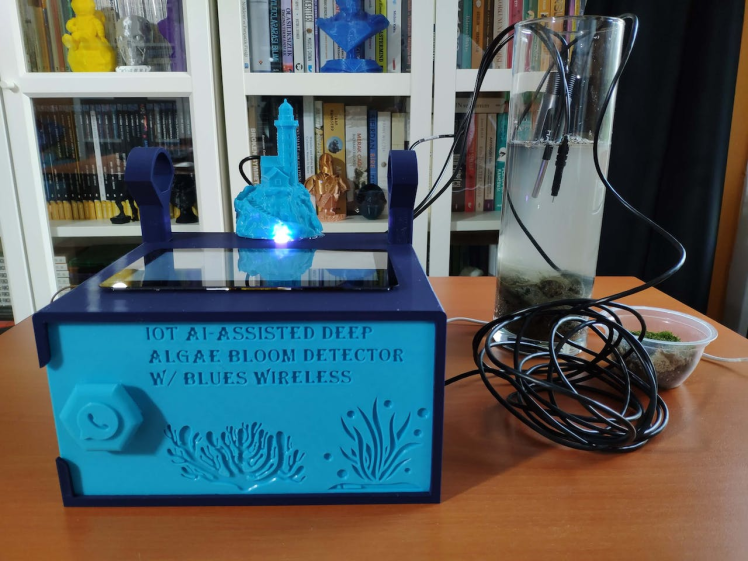

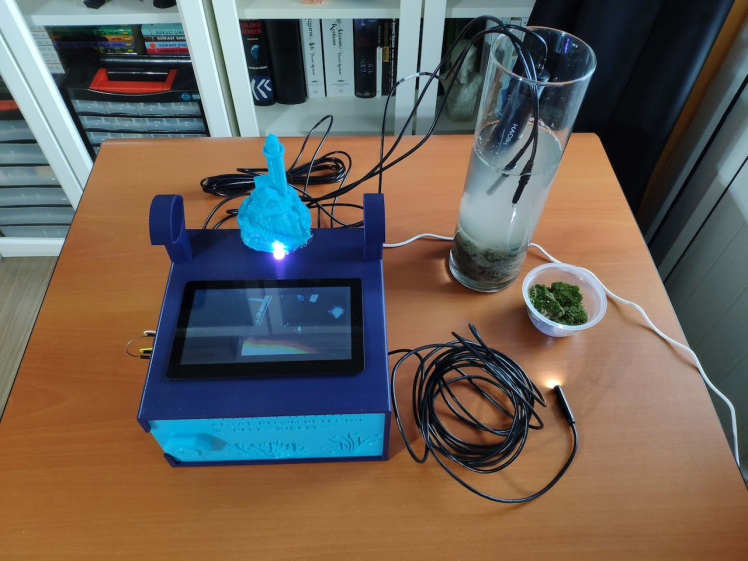

Lastly, to make the device as robust and sturdy as possible while operating outdoors, I designed an ocean-themed case with a sliding front cover and separate supporting mounts for water quality sensors and the borescope camera (3D printable).

So, this is my project in a nutshell.

In the following steps, you can find more detailed information on coding, capturing algae images with a borescope camera, transferring data from Notecard to Notehub.io via cellular connectivity, building an object detection (FOMO) model with Edge Impulse, running the model on Raspberry Pi, and developing a full-fledged webhook to communicate with WhatsApp.

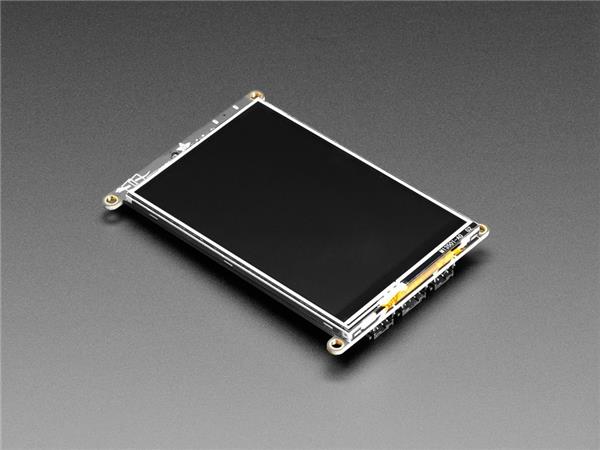

Huge thanks to DFRobot for sponsoring a 7'' HDMI Display with Capacitive Touchscreen.

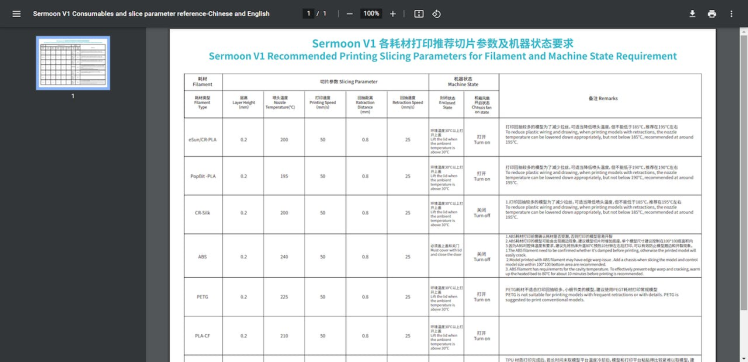

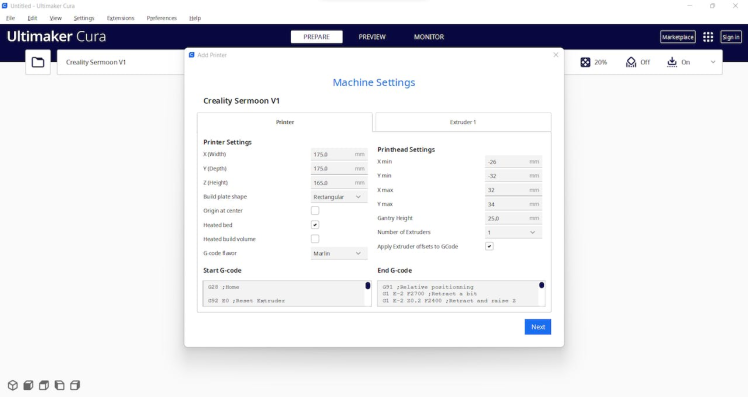

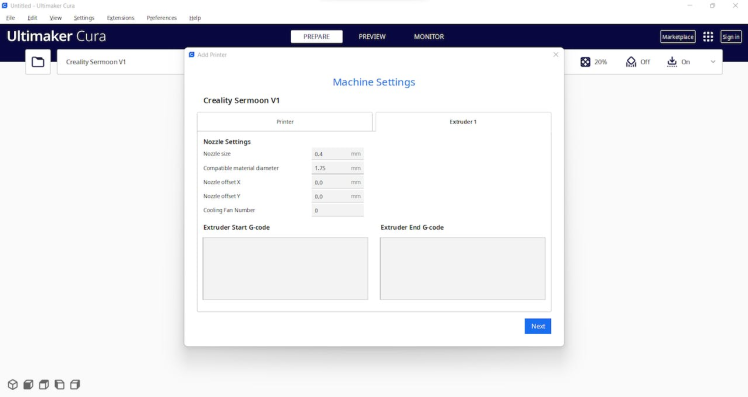

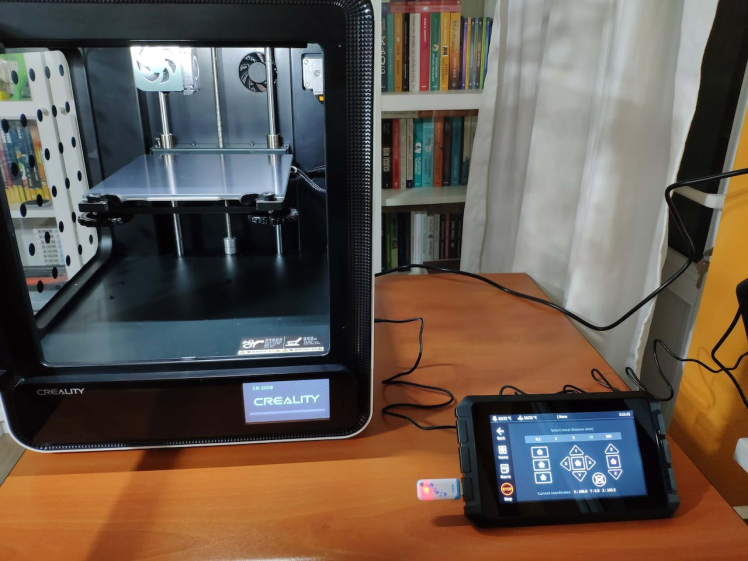

Also, huge thanks to Creality for sending me a Creality Sonic Pad, a Creality Sermoon V1 3D Printer, and a Creality CR-200B 3D Printer.

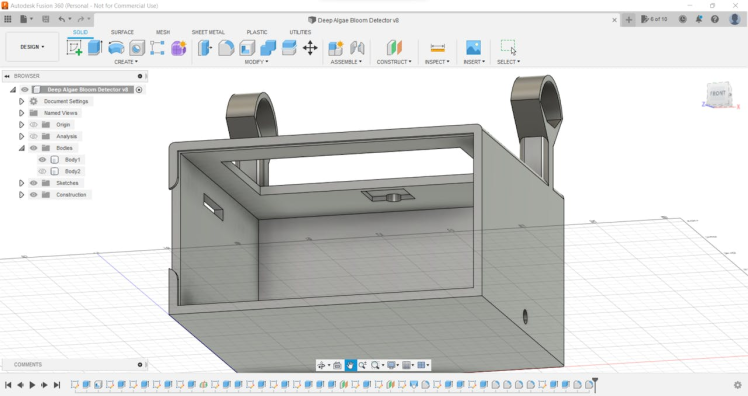

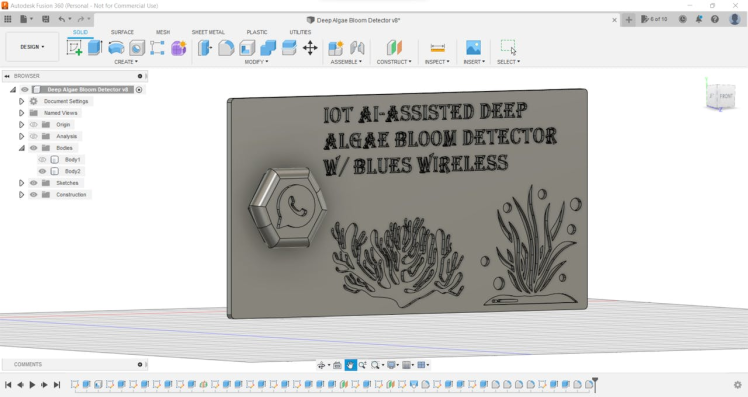

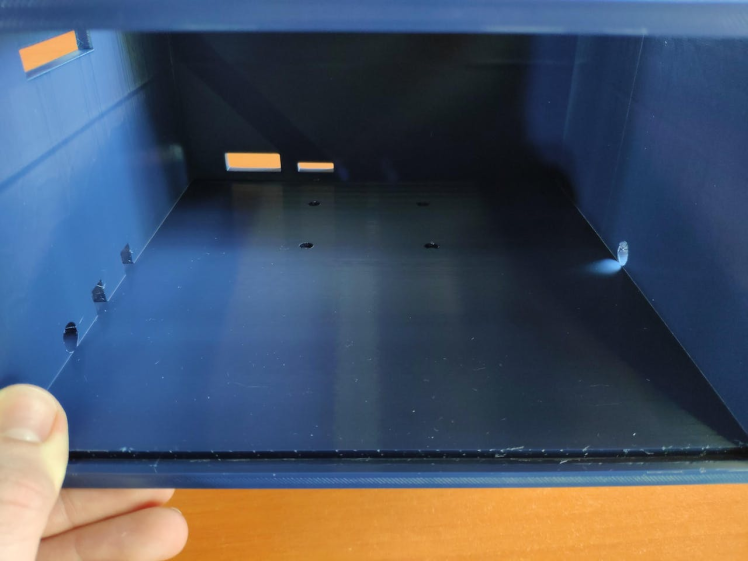

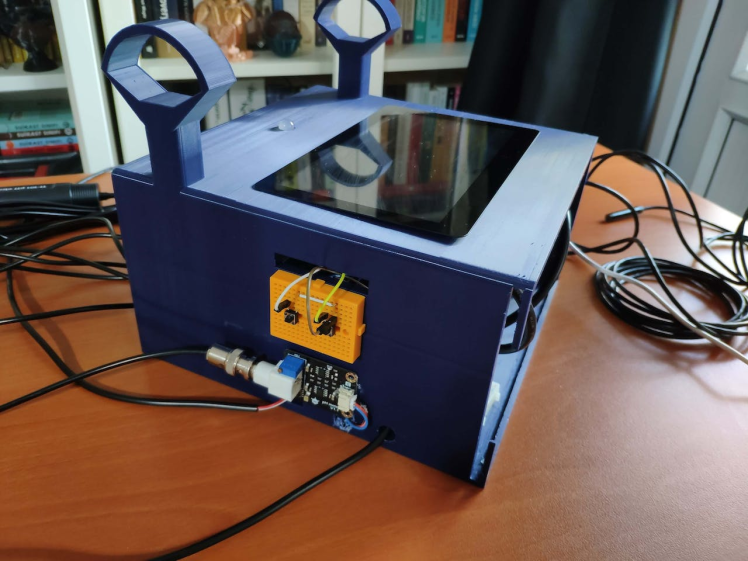

Since I focused on building a budget-friendly and accessible device that collects data from water bodies and informs the user of detected deep algae bloom via WhatsApp, I decided to design a robust and compact case allowing the user to utilize water quality sensors and the borescope camera effortlessly. To avoid overexposure to dust and prevent loose wire connections, I added a sliding front cover with a handle to the case. Then, I designed two separate supporting mounts on the top of the case so as to hang water quality sensors and the borescope camera. Also, I decided to emboss algae icons on the sliding front cover to highlight the algal bloom theme.

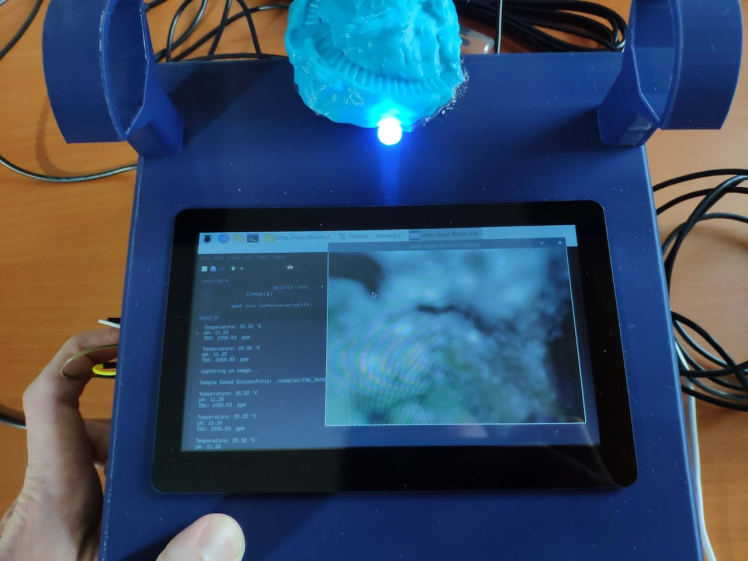

Since I needed to connect an HDMI screen to Raspberry Pi to observe the running operations, model detection results, and the video stream generated by the borescope camera, I added a slot on the top of the case to attach the HDMI screen seamlessly.

I designed the main case and its sliding front cover in Autodesk Fusion 360. You can download their STL files below.

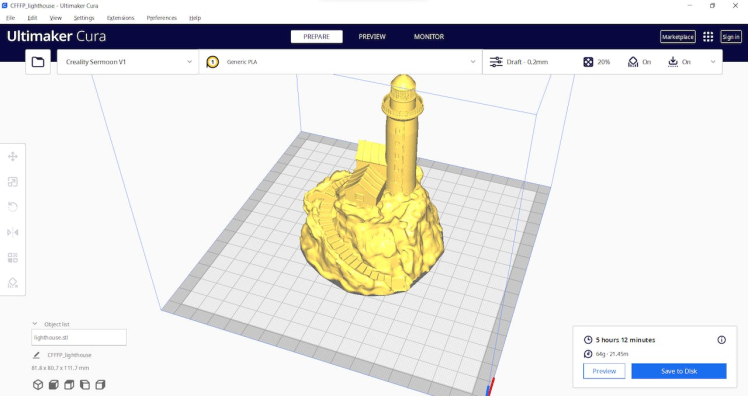

For the lighthouse figure affixed to the top of the main case, I utilized this model from Thingiverse:

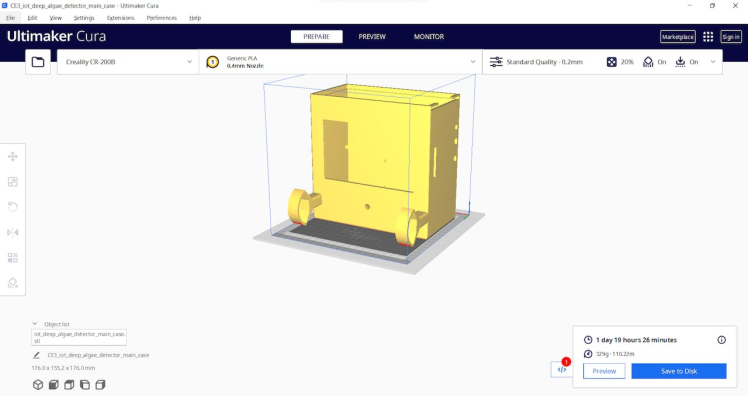

Then, I sliced all 3D models (STL files) in Ultimaker Cura.

Since I wanted to create a solid structure for the case with the sliding cover and apply a stylish ocean theme to the device, I utilized these PLA filaments:

- Light Blue

- Dark Blue

Finally, I printed all parts (models) with my Creality Sermoon V1 3D Printer and Creality CR-200B 3D Printer in combination with the Creality Sonic Pad. You can find more detailed information regarding the Sonic Pad in Step 2.1.

If you are a maker or hobbyist planning to print your 3D models to create more complex and detailed projects, I highly recommend the Sermoon V1. Since the Sermoon V1 is fully-enclosed, you can print high-resolution 3D models with PLA and ABS filaments. Also, it has a smart filament runout sensor and the resume printing option for power failures.

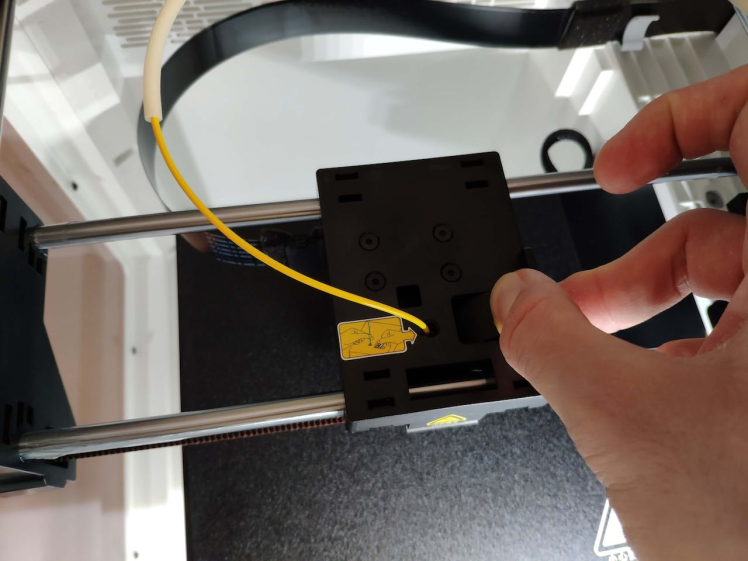

Furthermore, the Sermoon V1 provides a flexible metal magnetic suction platform on the heated bed. So, you can remove your prints without any struggle. Also, you can feed and remove filaments automatically (one-touch) due to its unique sprite extruder (hot end) design supporting dual-gear feeding. Most importantly, you can level the bed automatically due to its user-friendly and assisted bed leveling function.

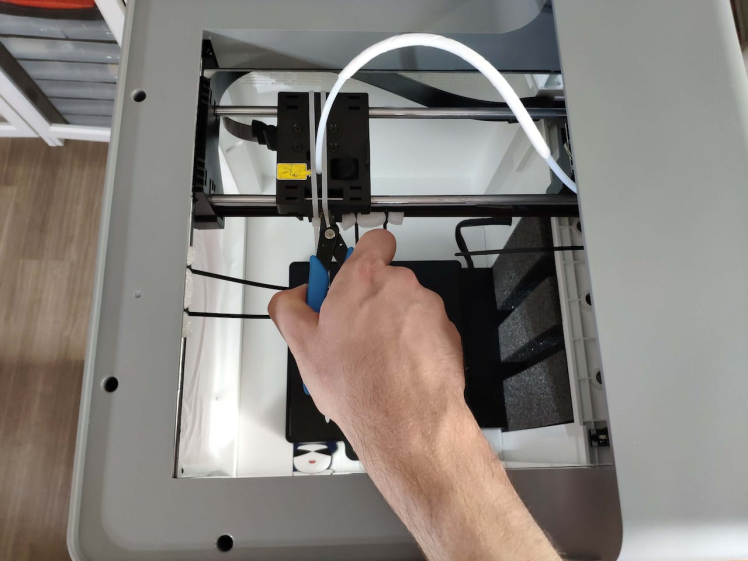

#️⃣ Before the first use, remove unnecessary cable ties and apply grease to the rails.

#️⃣ Test the nozzle and hot bed temperatures.

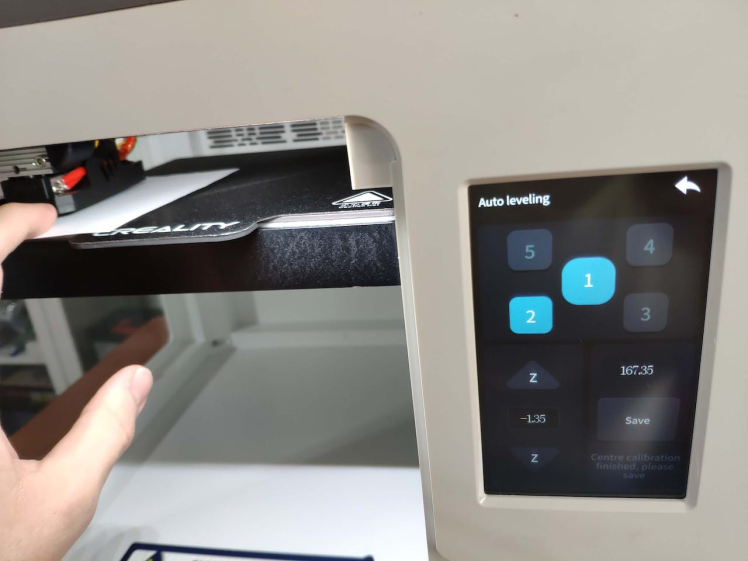

#️⃣ Go to Print Setup ➡ Auto leveling and adjust five predefined points automatically with the assisted leveling function.

#️⃣ Finally, place the filament into the integrated spool holder and feed the extruder with the filament.

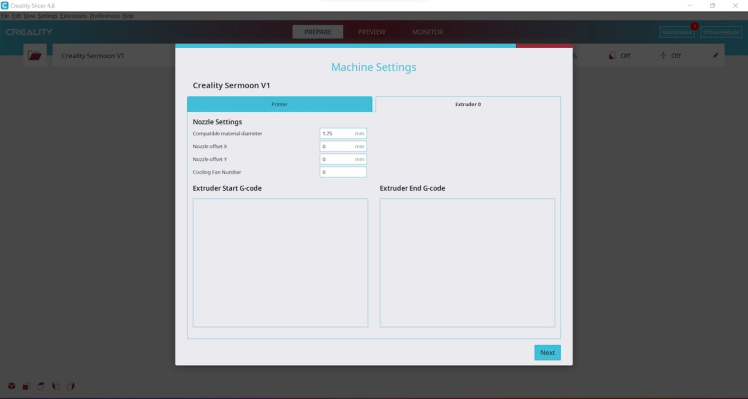

#️⃣ Since the Sermoon V1 is not officially supported by Cura, download the latest Creality Slicer version and copy the official printer settings provided by Creality, including Start G-code and End G-code, to a custom printer profile on Cura.

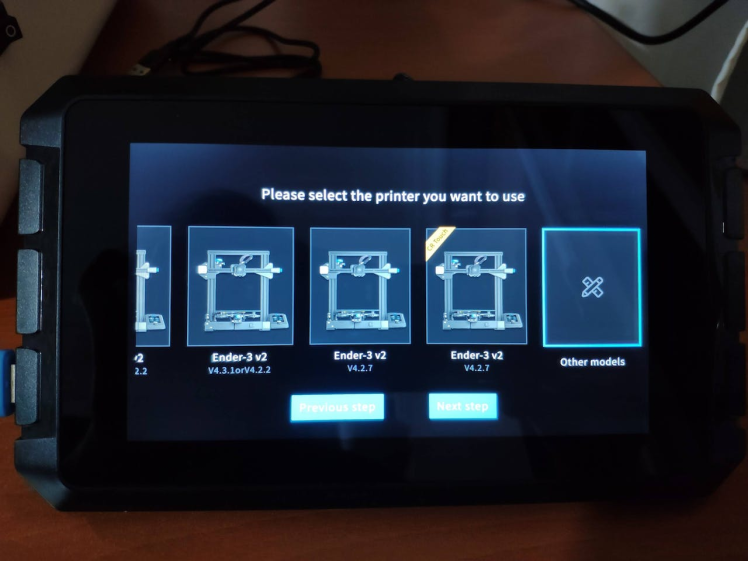

Since I wanted to improve my print quality and speed with Klipper, I decided to upgrade my Creality CR-200B 3D Printer with the Creality Sonic Pad.

Creality Sonic Pad is a beginner-friendly device to control almost any FDM 3D printer on the market with the Klipper firmware. Since the Sonic Pad uses precision-oriented algorithms, it provides remarkable results with higher printing speeds. The built-in input shaper function mitigates oscillation during high-speed printing and smooths ringing to maintain high model quality. Also, it supports G-code model preview.

Although the Sonic Pad is pre-configured for some Creality printers, it does not support the CR-200B officially yet. Therefore, I needed to add the CR-200B as a user-defined printer to the Sonic Pad. Since the Sonic Pad needs unsupported printers to be flashed with the self-compiled Klipper firmware before connection, I flashed my CR-200B with the required Klipper firmware settings via FluiddPI by following this YouTube tutorial.

If you do not know how to write a printer configuration file for Klipper, you can download the stock CR-200B configuration file from here.

#️⃣ After flashing the CR-200B with the Klipper firmware, copy the configuration file (printer.cfg) to a USB drive and connect the drive to the Sonic Pad.

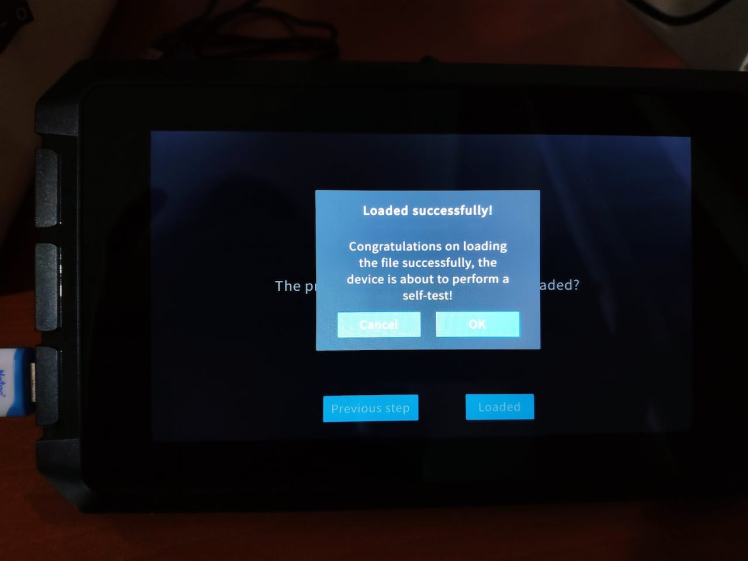

#️⃣ After setting up the Sonic Pad, select Other models. Then, load the printer.cfg file.

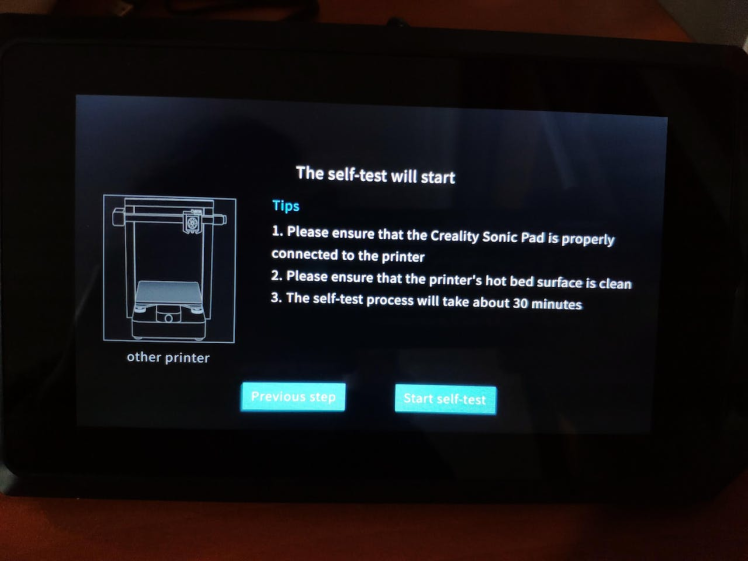

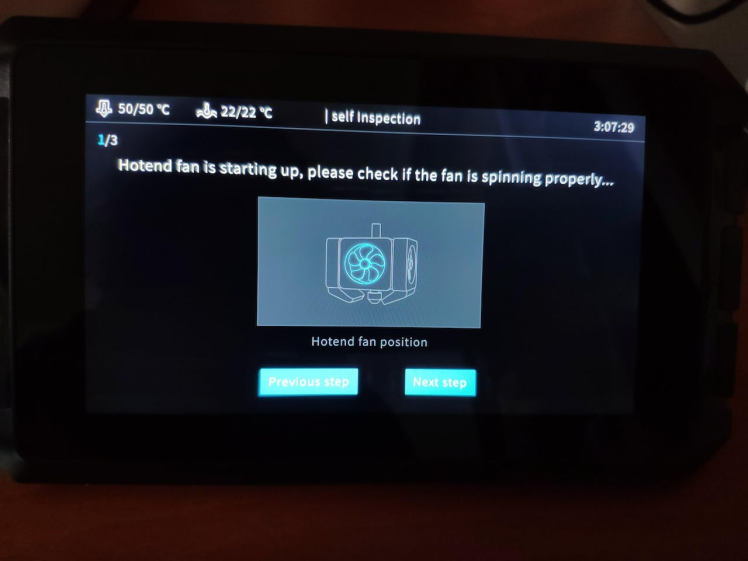

#️⃣ After connecting the Sonic Pad to the CR-200B successfully via a USB cable, the Sonic Pad starts the self-testing procedure, which allows the user to test printer functions and level the bed.

#️⃣ After completing the printer setup, the Sonic Pad lets the user control all functions provided by the Klipper firmware.

#️⃣ In Cura, export the sliced model in the ufp format. After uploading .ufp files to the Sonic Pad via the USB drive, it converts them to sliced G-code files automatically.

#️⃣ Also, the Sonic Pad can display model preview pictures generated by Cura with the Create Thumbnail script.

Step 1.2: Assembling the case and making connections & adjustments

// Connections

// Arduino Nano :

// DFRobot Analog pH Sensor Pro Kit

// A0 --------------------------- Signal

// DFRobot Analog TDS Sensor

// A1 --------------------------- Signal

// DS18B20 Waterproof Temperature Sensor

// D2 --------------------------- Data

// Keyes 10mm RGB LED Module (140C05)

// D3 --------------------------- R

// D5 --------------------------- G

// D6 --------------------------- B

// Control Button (R)

// D7 --------------------------- +

// Control Button (C)

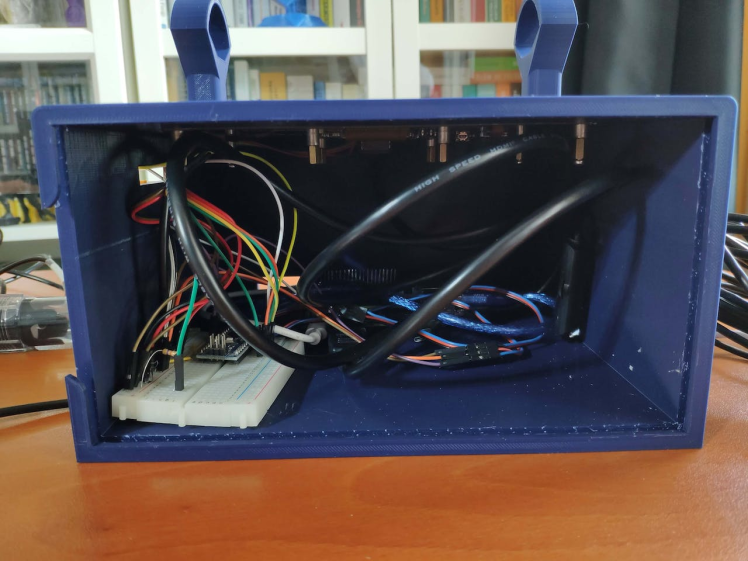

// D8 --------------------------- +To collect water quality data from water bodies in the field, I connected the analog pH sensor, the analog TDS sensor, and the DS18B20 waterproof temperature sensor to Arduino Nano.

To send commands to Raspberry Pi via serial communication and indicate the outcomes of operating functions, I added two control buttons (6x6) and a 10mm common anode RGB LED module (Keyes).

#️⃣ To calibrate the analog pH sensor so as to obtain accurate measurements, put the pH electrode into the standard solution whose pH value is 7.00. Then, record the generated pH value printed on the serial monitor. Finally, adjust the offset (pH_offset) variable according to the difference between the generated and actual pH values, for instance, 0.12 (7.00 - 6.88). The discrepancy should not exceed 0.3. For the acidic calibration, you can inspect the product wiki.

#️⃣ Since the analog TDS sensor needs to be calibrated for compensating water temperature to generate reliable measurements, I utilized a DS18B20 waterproof temperature sensor. As shown in the schematic below, before connecting the DS18B20 waterproof temperature sensor to Arduino Nano, I attached a 4.7K resistor as a pull-up from the DATA line to the VCC line of the sensor to generate accurate temperature measurements.
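For the pH calibration step above, the offset arithmetic can be sketched in a few lines of Python. This is only an illustration of the calculation, not part of the Arduino sketch; the function name `ph_offset` and the constants are assumptions made for this example, while the 7.00 buffer value and the 0.3 limit come from the calibration step.

```python
NEUTRAL_BUFFER_PH = 7.00  # pH of the standard calibration solution
MAX_DISCREPANCY = 0.3     # largest acceptable difference mentioned in the calibration step


def ph_offset(measured_ph: float) -> float:
    """Return the offset to add so that the reading matches the 7.00 buffer (hypothetical helper)."""
    offset = round(NEUTRAL_BUFFER_PH - measured_ph, 2)
    if abs(offset) > MAX_DISCREPANCY:
        # A larger gap suggests a probe or wiring problem rather than a simple offset.
        raise ValueError(f"discrepancy {offset} exceeds {MAX_DISCREPANCY}")
    return offset


print(ph_offset(6.88))  # 0.12
```

The returned value is what would be assigned to the pH_offset variable in the Arduino code.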

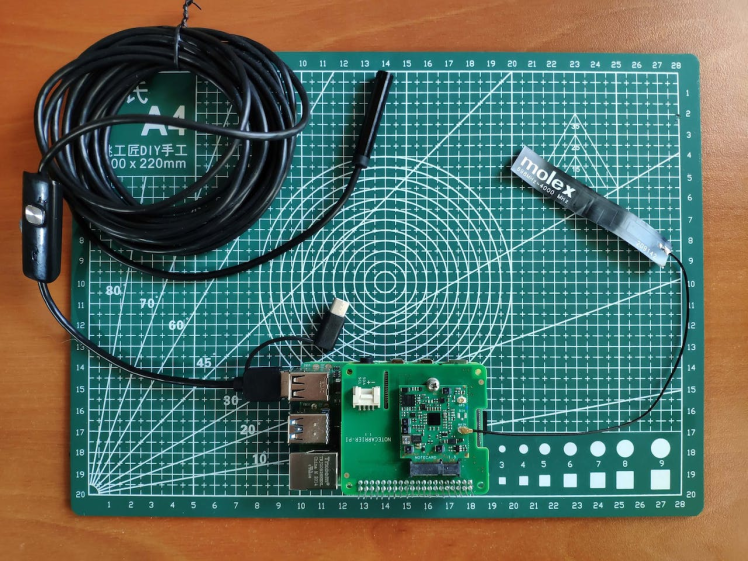

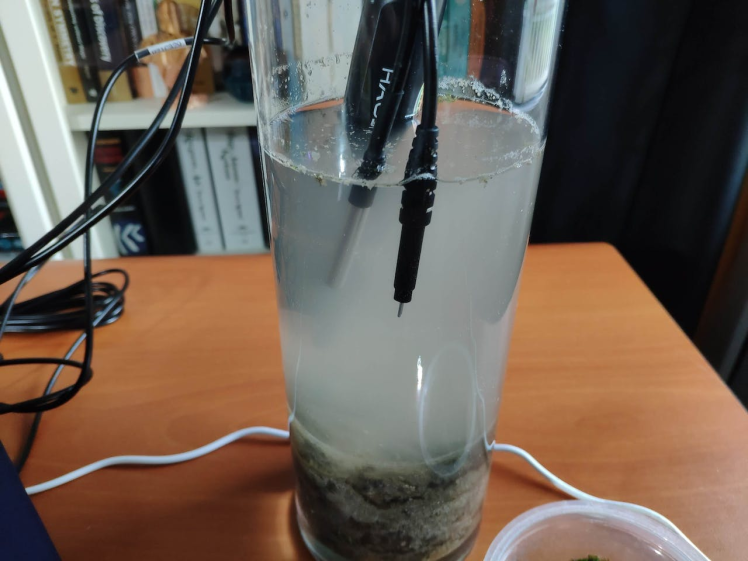

I connected the borescope camera and Notecard mounted on the Notecarrier Pi Hat (w/ Molex cellular antenna) to my Raspberry Pi 4. Since the embedded SIM card cellular service does not cover my country, I inserted an external SIM card into the Notecarrier Pi Hat.

Via a USB cable, I connected Arduino Nano to Raspberry Pi.

To observe running processes, I attached the 7'' HDMI display to Raspberry Pi via a Micro HDMI to HDMI cable.

After printing all parts (models), I fastened all components to their corresponding slots on the main case via a hot glue gun.

Then, I placed the sliding front cover via the dents on the main case.

Finally, I affixed the lighthouse figure to the top of the main case via the hot glue gun.

As mentioned earlier, the supporting mounts can be utilized to hang the borescope camera and water quality sensors while the device is dormant.

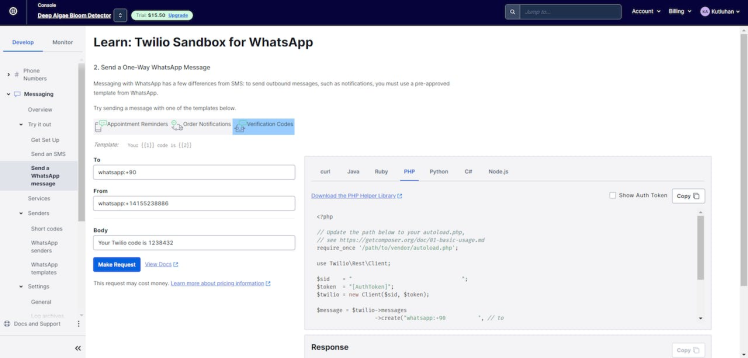

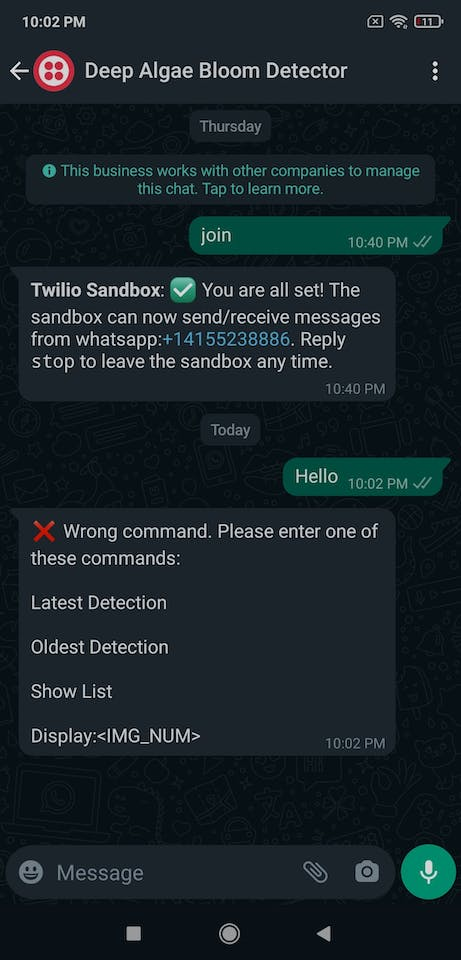

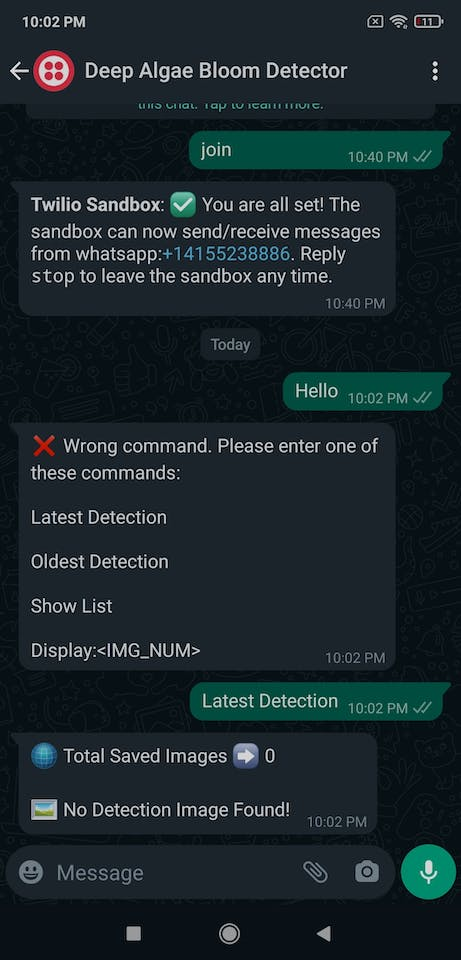

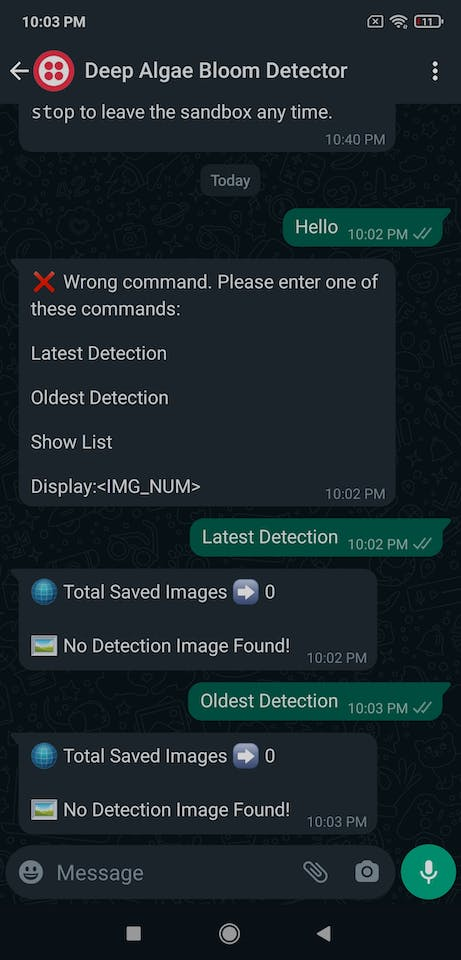

To get the model detection results with the collected water quality data and send commands regarding the model detection images saved on the server over WhatsApp, I utilized Twilio's WhatsApp API. Twilio gives the user a simple and reliable way to communicate with a Twilio-verified phone over WhatsApp via a webhook free of charge for trial accounts. Also, Twilio provides official helper libraries for different programming languages, including PHP.

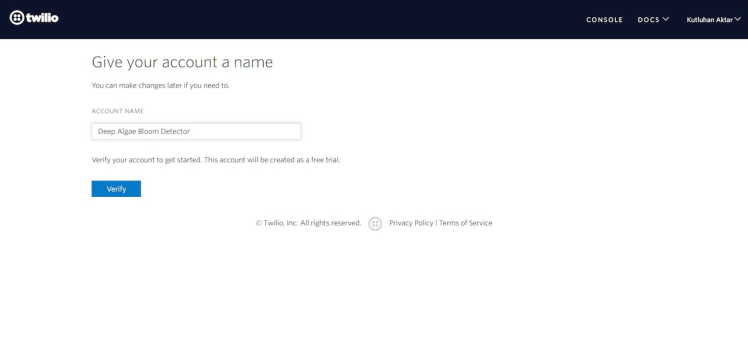

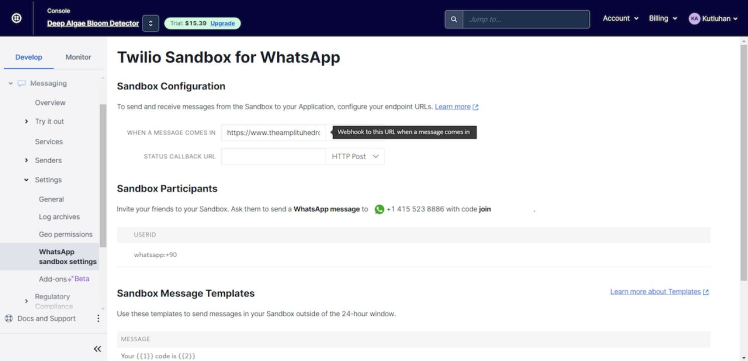

#️⃣ First of all, sign up for Twilio and create a new free trial account (project).

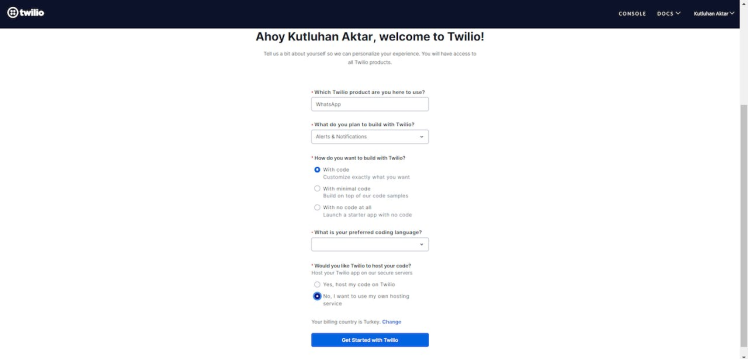

#️⃣ Then, verify a phone number for the account (project) and set the account settings for WhatsApp in PHP.

#️⃣ Go to Twilio Sandbox for WhatsApp and verify your device by sending the given code over WhatsApp, which activates a WhatsApp session.

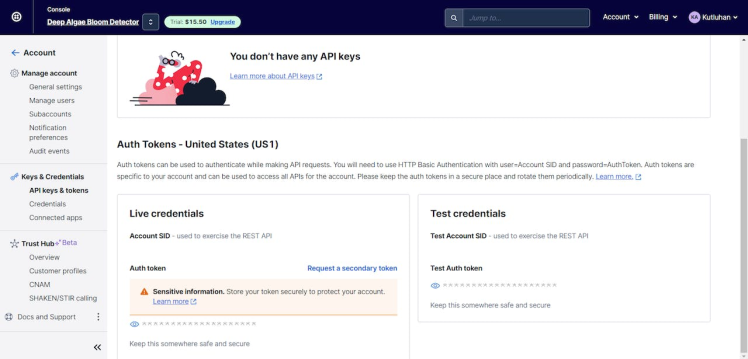

#️⃣ After verifying your device, download the Twilio PHP Helper Library and go to Account ➡ API keys & tokens to get the account SID and the auth token under Live credentials so as to communicate with the verified phone over WhatsApp.

#️⃣ Finally, go to WhatsApp sandbox settings and change the receiving endpoint URL under WHEN A MESSAGE COMES IN to the webhook URL.

https://www.theamplituhedron.com/twilio_whatsapp_sender/

You can get more information regarding the webhook in Step 3.

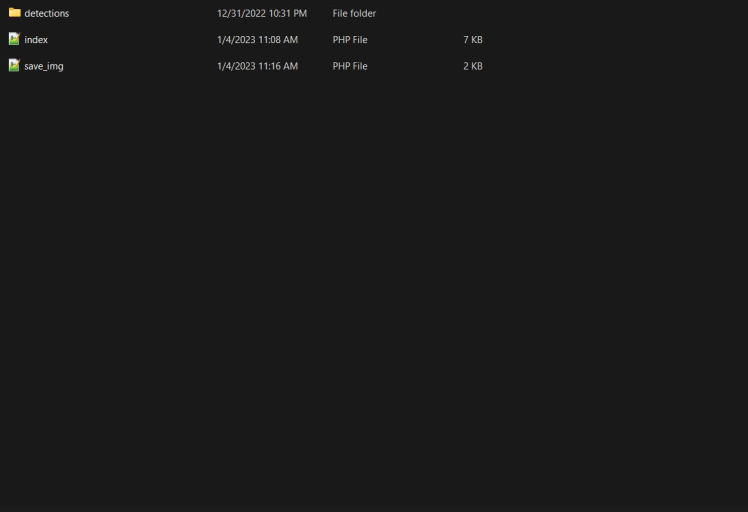

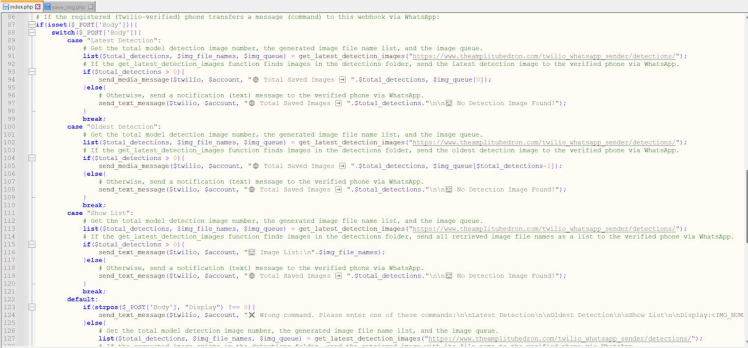

I developed a webhook in PHP, named twilio_whatsapp_sender, to obtain the model detection results with water quality data from Notecard via Notehub.io, inform the user of the received information via WhatsApp, save the model detection images transferred by Raspberry Pi, and get the commands regarding the model detection images saved on the server over WhatsApp via the Twilio PHP Helper Library.

Since Twilio requires a publicly available URL to redirect the incoming WhatsApp messages to a given webhook, I utilized my SSL-enabled server to host the webhook. However, you can employ an HTTP tunneling tool like ngrok to set up a public URL for the webhook.

As shown below, the webhook consists of one folder and two files:

- /detections

- index.php

- save_img.php

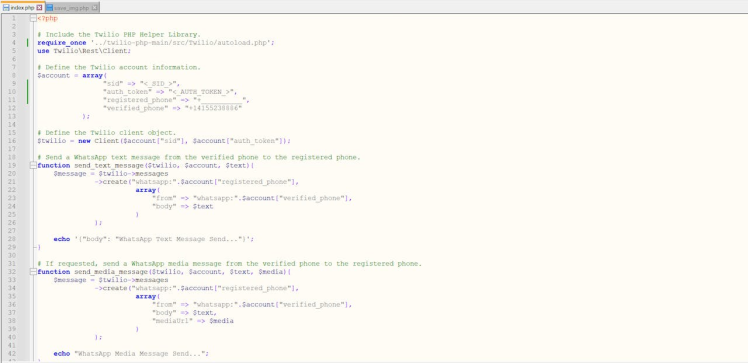

index.php

⭐ Include the Twilio PHP Helper Library.

require_once '../twilio-php-main/src/Twilio/autoload.php';

use Twilio\Rest\Client;

⭐ Define the Twilio account information (account SID, auth token, verified and registered phone numbers) and the Twilio client object.

$account = array(

"sid" => "<_SID_>",

"auth_token" => "<_AUTH_TOKEN_>",

"registered_phone" => "+__________",

"verified_phone" => "+14155238886"

);

# Define the Twilio client object.

$twilio = new Client($account["sid"], $account["auth_token"]);

⭐ In the send_text_message function, send a WhatsApp text message from the verified (Twilio-sandbox) phone to the registered (Twilio-verified) phone.

function send_text_message($twilio, $account, $text){

$message = $twilio->messages

->create("whatsapp:".$account["registered_phone"],

array(

"from" => "whatsapp:".$account["verified_phone"],

"body" => $text

)

);

echo '{"body": "WhatsApp Text Message Send..."}';

}

⭐ In the send_media_message function, send a WhatsApp media message from the verified phone to the registered phone if requested.

function send_media_message($twilio, $account, $text, $media){

$message = $twilio->messages

->create("whatsapp:".$account["registered_phone"],

array(

"from" => "whatsapp:".$account["verified_phone"],

"body" => $text,

"mediaUrl" => $media

)

);

echo "WhatsApp Media Message Send...";

}

⭐ Obtain the model detection results and water quality data transferred by Notecard via Notehub.io through an HTTP GET request.

/twilio_whatsapp_sender/?results=<detection_results>&temp=<water_temperature>&pH=<pH>&TDS=<TDS>

⭐ Then, notify the user of the received information by adding the current date & time via WhatsApp.

if(isset($_GET["results"]) && isset($_GET["temp"]) && isset($_GET["pH"]) && isset($_GET["TDS"])){

$date = date("Y/m/d_h:i:s");

// Send the received information via WhatsApp to the registered phone so as to notify the user.

send_text_message($twilio, $account, "⏰ $date\n\n"

."Model Detection Results:\n"

.$_GET["results"]

."\n\nWater Quality:"

."\nTemperature: ".$_GET["temp"]

."\npH: ". $_GET["pH"]

."\n☁️ TDS: ".$_GET["TDS"]

);

}else{

echo('{"body": "Waiting Data..."}');

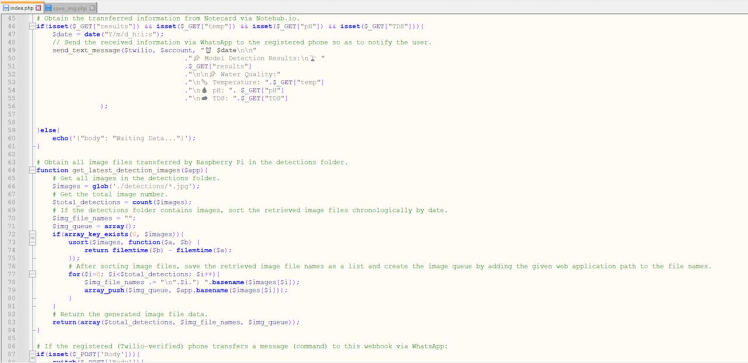

}⭐ In the get_latest_detection_images function:

⭐ Obtain all image files transferred by Raspberry Pi in the detections folder.

⭐ Calculate the total saved image number.

⭐ If the detections folder contains images, sort the retrieved image files chronologically by date.

⭐ After sorting image files, save the retrieved image file names as a list and create the image queue by adding the given webhook path to the file names.

⭐ Return all generated image file variables.
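The listing logic described above can be sketched in Python for illustration (the webhook itself is written in PHP). This is a minimal sketch, assuming the same newest-first ordering by modification time; the function name `latest_detection_images` and the `example.com` URL are placeholders invented for this example.

```python
from pathlib import Path


def latest_detection_images(folder, base_url):
    """Return (total, numbered file name list, image URL queue), newest image first."""
    # Collect the .jpg files and sort them by modification time, newest first.
    images = sorted(
        Path(folder).glob("*.jpg"),
        key=lambda path: path.stat().st_mtime,
        reverse=True,
    )
    # Build the numbered name list and the queue of public URLs.
    names = "".join(f"\n{i}) {path.name}" for i, path in enumerate(images))
    queue = [base_url + path.name for path in images]
    return len(images), names, queue


if __name__ == "__main__":
    print(latest_detection_images("./detections", "https://example.com/detections/"))
```

Because the queue is ordered newest first, index 0 is the latest detection and the last index is the oldest one, which is how the commands below pick their images.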

function get_latest_detection_images($app){

# Get all images in the detections folder.

$images = glob('./detections/*.jpg');

# Get the total image number.

$total_detections = count($images);

# If the detections folder contains images, sort the retrieved image files chronologically by date.

$img_file_names = "";

$img_queue = array();

if(array_key_exists(0, $images)){

usort($images, function($a, $b) {

return filemtime($b) - filemtime($a);

});

# After sorting image files, save the retrieved image file names as a list and create the image queue by adding the given web application path to the file names.

for($i=0; $i<$total_detections; $i++){

$img_file_names .= "\n".$i.") ".basename($images[$i]);

array_push($img_queue, $app.basename($images[$i]));

}

}

# Return the generated image file data.

return(array($total_detections, $img_file_names, $img_queue));

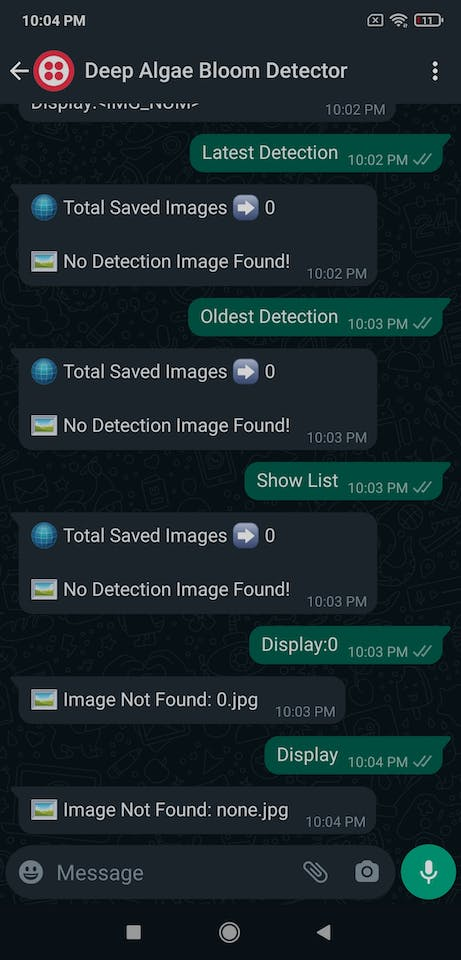

}⭐ If the user sends one of the registered commands to the webhook over WhatsApp via Twilio:

- Latest Detection

- Oldest Detection

- Show List

- Display:<IMG_NUM>

⭐ If the Latest Detection command is received, get the total model detection image number, the generated image file name list, and the image queue by running the get_latest_detection_images function.

⭐ Then, send the latest model detection image in the detections folder with the total image number to the Twilio-verified phone number as the response.

⭐ If the Oldest Detection command is received, get the total model detection image number, the generated image file name list, and the image queue by running the get_latest_detection_images function.

⭐ Then, send the oldest model detection image in the detections folder with the total image number to the Twilio-verified phone number as the response.

⭐ If the Show List command is received, get the total model detection image number, the generated image file name list, and the image queue by running the get_latest_detection_images function.

⭐ Then, send the retrieved image file name list as the response.

⭐ If there is no image in the detections folder, send a notification (text) message.

⭐ If the Display:<IMG_NUM> command is received, send the requested image with its file name if it exists in the given image file name list as the response. Otherwise, send a notification (text) message.

if(isset($_POST['Body'])){

switch($_POST['Body']){

case "Latest Detection":

# Get the total model detection image number, the generated image file name list, and the image queue.

list($total_detections, $img_file_names, $img_queue) = get_latest_detection_images("https://www.theamplituhedron.com/twilio_whatsapp_sender/detections/");

# If the get_latest_detection_images function finds images in the detections folder, send the latest detection image to the verified phone via WhatsApp.

if($total_detections > 0){

send_media_message($twilio, $account, "Total Saved Images ➡️ ".$total_detections, $img_queue[0]);

}else{

# Otherwise, send a notification (text) message to the verified phone via WhatsApp.

send_text_message($twilio, $account, "Total Saved Images ➡️ ".$total_detections."\n\nNo Detection Image Found!");

}

break;

case "Oldest Detection":

# Get the total model detection image number, the generated image file name list, and the image queue.

list($total_detections, $img_file_names, $img_queue) = get_latest_detection_images("https://www.theamplituhedron.com/twilio_whatsapp_sender/detections/");

# If the get_latest_detection_images function finds images in the detections folder, send the oldest detection image to the verified phone via WhatsApp.

if($total_detections > 0){

send_media_message($twilio, $account, "Total Saved Images ➡️ ".$total_detections, $img_queue[$total_detections-1]);

}else{

# Otherwise, send a notification (text) message to the verified phone via WhatsApp.

send_text_message($twilio, $account, "Total Saved Images ➡️ ".$total_detections."\n\nNo Detection Image Found!");

}

break;

case "Show List":

# Get the total model detection image number, the generated image file name list, and the image queue.

list($total_detections, $img_file_names, $img_queue) = get_latest_detection_images("https://www.theamplituhedron.com/twilio_whatsapp_sender/detections/");

# If the get_latest_detection_images function finds images in the detections folder, send all retrieved image file names as a list to the verified phone via WhatsApp.

if($total_detections > 0){

send_text_message($twilio, $account, "Image List:\n".$img_file_names);

}else{

# Otherwise, send a notification (text) message to the verified phone via WhatsApp.

send_text_message($twilio, $account, "Total Saved Images ➡️ ".$total_detections."\n\nNo Detection Image Found!");

}

break;

default:

if(strpos($_POST['Body'], "Display") !== 0){

send_text_message($twilio, $account, "❌ Wrong command. Please enter one of these commands:\n\nLatest Detection\n\nOldest Detection\n\nShow List\n\nDisplay:<IMG_NUM>");

}else{

# Get the total model detection image number, the generated image file name list, and the image queue.

list($total_detections, $img_file_names, $img_queue) = get_latest_detection_images("https://www.theamplituhedron.com/twilio_whatsapp_sender/detections/");

# If the requested image exists in the detections folder, send the retrieved image with its file name to the verified phone via WhatsApp.

$key = explode(":", $_POST['Body'].":none")[1];

if(array_key_exists($key, $img_queue)){

send_media_message($twilio, $account, explode("/", $img_queue[$key])[5], $img_queue[$key]);

}else{

send_text_message($twilio, $account, "Image Not Found: ".$key.".jpg");

}

}

break;

}

}

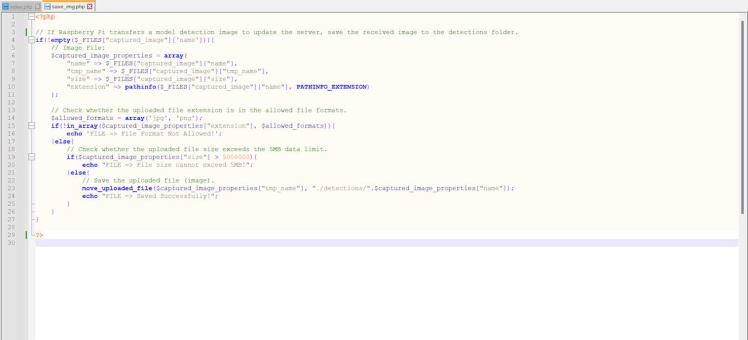
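To make the command handling above easier to follow, here is a minimal Python sketch of the same decision logic. It is an illustration only, not the webhook code: the function `resolve_command`, the tuple return format, and the shortened reply texts are assumptions made for this example. The `queue` argument is assumed to be the newest-first list of image URLs.

```python
NOT_FOUND = "No Detection Image Found!"


def resolve_command(body, queue):
    """Return ("media", url) or ("text", message) for a WhatsApp message body."""
    if body in ("Latest Detection", "Oldest Detection"):
        if not queue:
            return ("text", NOT_FOUND)
        # The queue is newest first, so the oldest image is the last entry.
        return ("media", queue[0] if body == "Latest Detection" else queue[-1])
    if body == "Show List":
        if not queue:
            return ("text", NOT_FOUND)
        names = [url.rsplit("/", 1)[-1] for url in queue]
        listing = "".join(f"\n{i}) {name}" for i, name in enumerate(names))
        return ("text", "Image List:" + listing)
    if body.startswith("Display"):
        # Everything after the first colon is treated as the image index.
        key = body.partition(":")[2]
        if key.isascii() and key.isdigit() and int(key) < len(queue):
            return ("media", queue[int(key)])
        return ("text", f"Image Not Found: {key}.jpg")
    return ("text", "Wrong command.")


queue = ["https://example.com/detections/b.jpg", "https://example.com/detections/a.jpg"]
print(resolve_command("Display:1", queue))
```

The index accepted by Display corresponds to the numbers shown by Show List, since both are derived from the same sorted queue.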

save_img.php

/twilio_whatsapp_sender/save_img.php

⭐ After collecting model detection images, if Raspberry Pi transfers an image file via an HTTP POST request to update the detections folder on the server:

⭐ Check whether the uploaded file extension is in the allowed file formats — PNG or JPG.

⭐ Then, check whether the uploaded file size exceeds the 5 MB data limit.

⭐ Finally, save the uploaded image file to the detections folder.

if(!empty($_FILES["captured_image"]['name'])){

// Image File:

$captured_image_properties = array(

"name" => $_FILES["captured_image"]["name"],

"tmp_name" => $_FILES["captured_image"]["tmp_name"],

"size" => $_FILES["captured_image"]["size"],

"extension" => pathinfo($_FILES["captured_image"]["name"], PATHINFO_EXTENSION)

);

// Check whether the uploaded file extension is in the allowed file formats.

$allowed_formats = array('jpg', 'png');

if(!in_array($captured_image_properties["extension"], $allowed_formats)){

echo 'FILE => File Format Not Allowed!';

}else{

// Check whether the uploaded file size exceeds the 5MB data limit.

if($captured_image_properties["size"] > 5000000){

echo "FILE => File size cannot exceed 5MB!";

}else{

// Save the uploaded file (image).

move_uploaded_file($captured_image_properties["tmp_name"], "./detections/".$captured_image_properties["name"]);

echo "FILE => Saved Successfully!";

}

}

}
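The two upload checks above can be mirrored in Python to see how they behave for different files. This is a sketch under the same limits (jpg or png, at most 5,000,000 bytes); the function name `check_upload` is an assumption for this example and no file is actually saved.

```python
import os

ALLOWED_FORMATS = {"jpg", "png"}
MAX_SIZE_BYTES = 5_000_000


def check_upload(file_name, size_bytes):
    """Return the response text the upload checks would produce (hypothetical helper)."""
    # Compare the extension exactly as given, without lowercasing it.
    extension = os.path.splitext(file_name)[1].lstrip(".")
    if extension not in ALLOWED_FORMATS:
        return "FILE => File Format Not Allowed!"
    if size_bytes > MAX_SIZE_BYTES:
        return "FILE => File size cannot exceed 5MB!"
    return "FILE => Saved Successfully!"


print(check_upload("detection_1.jpg", 120_000))
```

Two details are worth keeping in mind. The extension comparison is case-sensitive, so a file named IMG.JPG would be rejected. Also, index.php only lists *.jpg files, so a PNG accepted here would be stored but would not appear in the WhatsApp image list.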

Via the Blues Wireless ecosystem, Notecard provides a secure cloud service for storing or redirecting incoming information and reliable cellular connectivity via the embedded SIM card or an external SIM card, depending on the region's coverage. Also, Blues Wireless provides various Notecarriers for different development boards, such as the Notecarrier Pi Hat. Therefore, I did not encounter any issues while connecting Notecard to my Raspberry Pi 4.

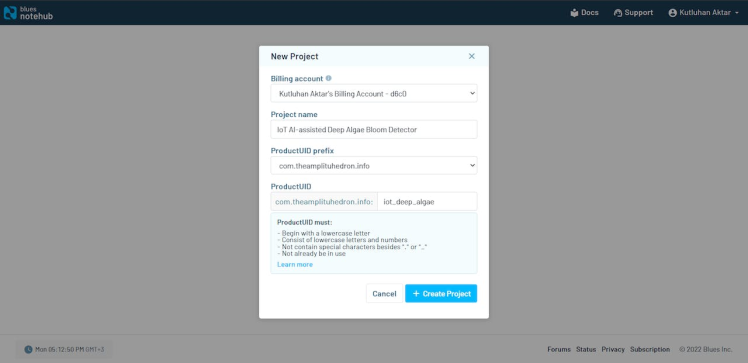

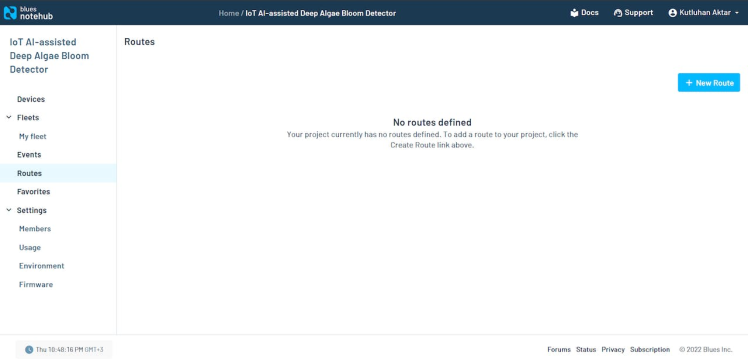

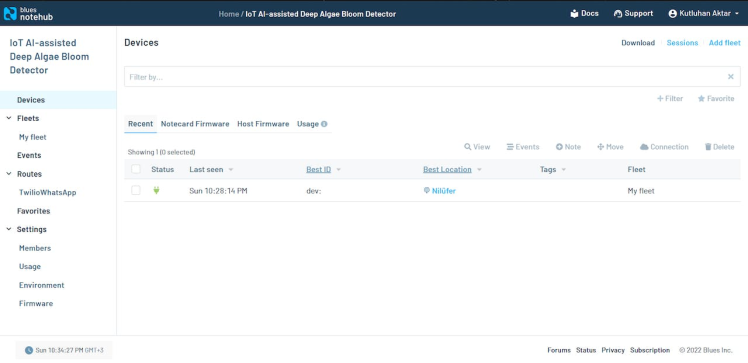

However, before proceeding with the following steps, I needed to create a Notehub.io project to utilize Notecard features with the secure cloud service.

#️⃣ First of all, create an account on Notehub.io.

#️⃣ Click the Create Project button. Then, enter the project name and the product UID.

iot_deep_algae

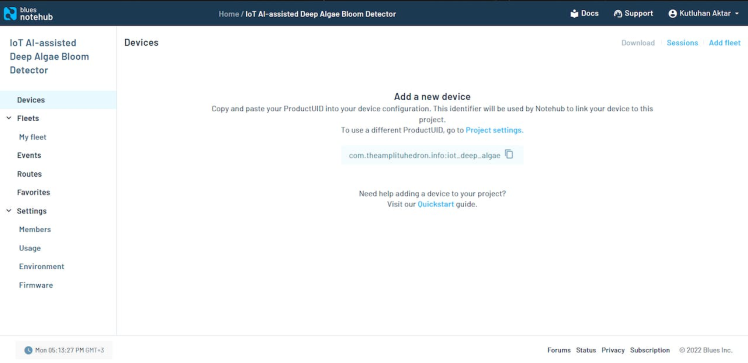

#️⃣ Before adding a new device, copy the product UID for the device configuration on Raspberry Pi.

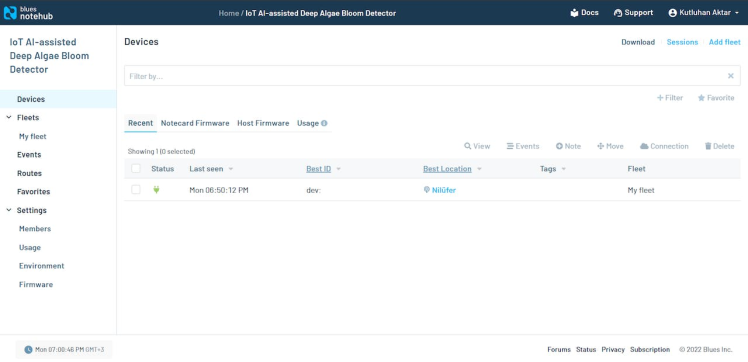

#️⃣ After connecting to the project successfully, Notehub.io shows the Notecard status.

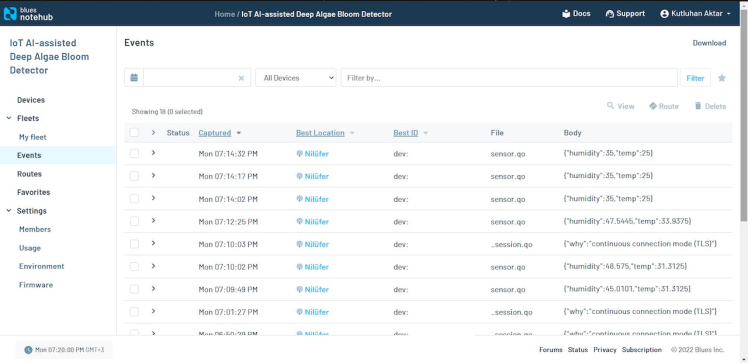

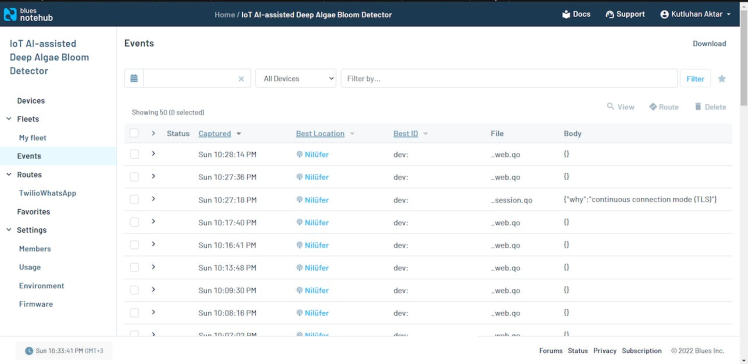

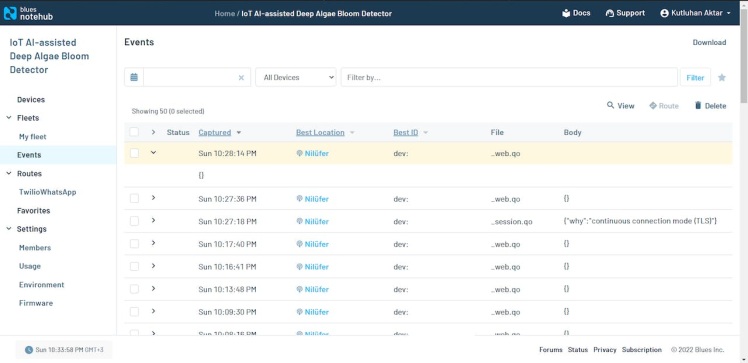

#️⃣ On the Events page, Notehub.io displays all Notes (JSON objects) in a Notefile with the .qo extension (outbound queue).

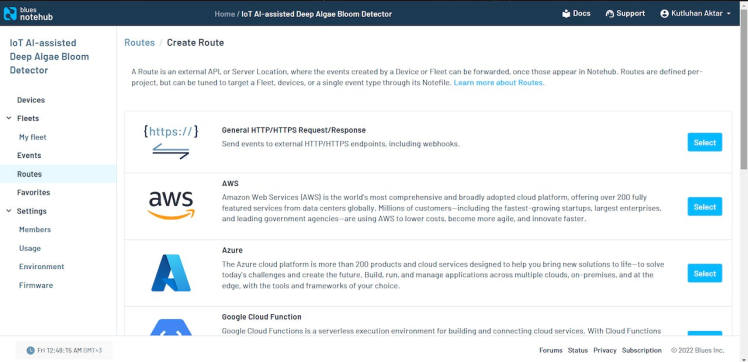

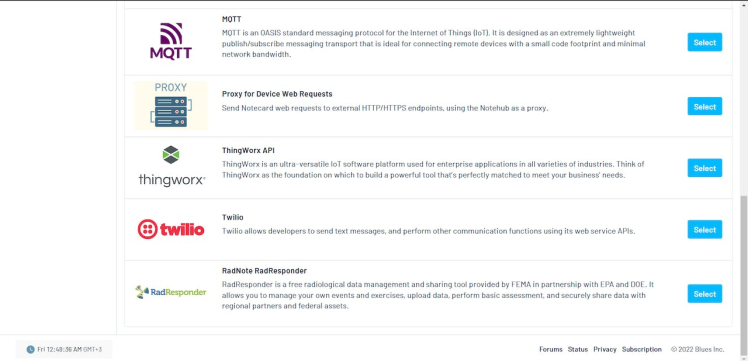

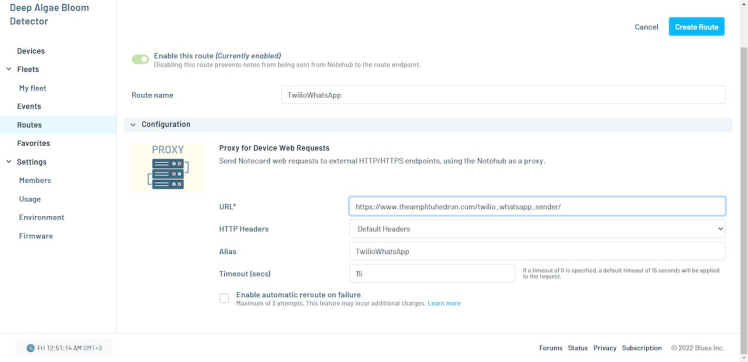

#️⃣ To send the information transferred by Notecard to a webhook via HTTP GET requests, go to the Routes page and click the New Route button.

#️⃣ Then, select Proxy for Device Web Requests from the given options.

#️⃣ Finally, enter the route name and the URL of the webhook.

TwilioWhatsApp

https://www.theamplituhedron.com/twilio_whatsapp_sender/
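As an illustration of how the device side could address this route, the sketch below composes a Notecard web.get request whose query string matches the parameters the webhook expects (results, temp, pH, TDS). It only builds the JSON object and does not talk to a Notecard. The `route` and `name` fields reflect my understanding of the Notecard web.get request and should be checked against the Blues documentation; the function name `build_web_get_request` and the sample values are assumptions.

```python
import json
from urllib.parse import quote, urlencode


def build_web_get_request(results, temp, ph, tds, route="TwilioWhatsApp"):
    """Build a web.get request dict for the proxy route (hypothetical helper)."""
    # Percent-encode the values so spaces and symbols survive the HTTP GET request.
    query = urlencode(
        {"results": results, "temp": temp, "pH": ph, "TDS": tds},
        quote_via=quote,
    )
    # "name" is assumed to be appended to the URL configured for the route.
    return {"req": "web.get", "route": route, "name": "?" + query}


print(json.dumps(build_web_get_request("Algae Bloom", "22.25", "8.36", "121.57")))
```

Encoding the values matters because the detection results and units can contain spaces or symbols that are not valid in a raw query string.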

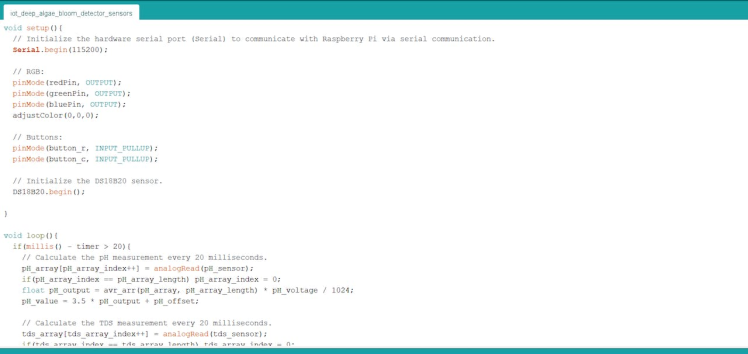

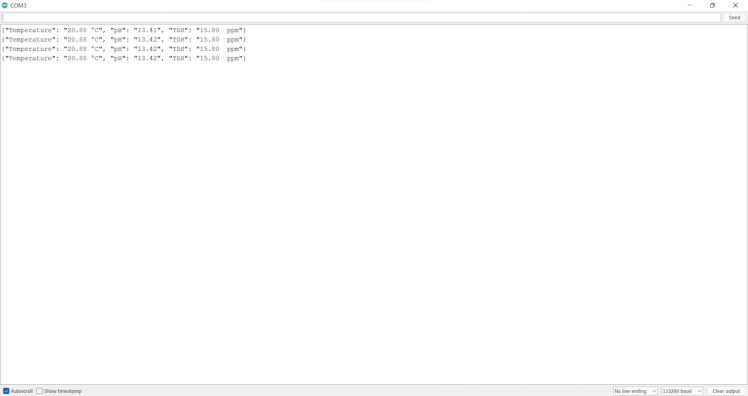

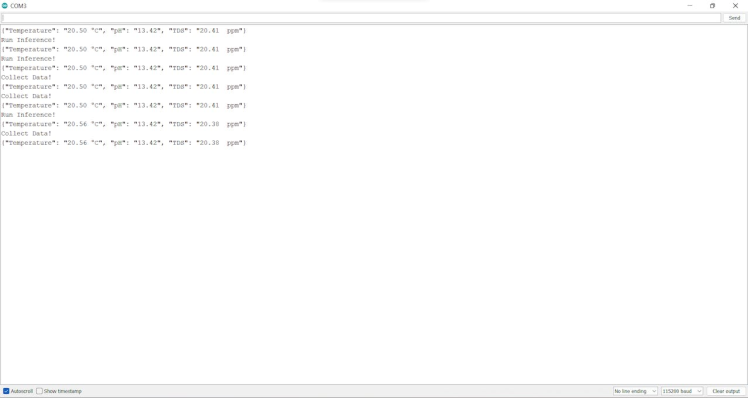

To gain a better perspective regarding potential deep algal bloom, I programmed Arduino Nano to collect water quality information and transfer the collected water quality sensor measurements in the JSON format to Raspberry Pi via serial communication every three seconds:

- Water Temperature (°C)

- pH

- TDS (ppm)
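On the receiving side, each serial line is a small JSON object whose values are strings with units, as in the sample shown later in this step. A minimal sketch of parsing one such line in Python follows; the function name `parse_sensor_line` and the output keys are assumptions for this example, and reading from the serial port itself is left out.

```python
import json


def parse_sensor_line(line):
    """Convert one JSON line from the Arduino into numeric readings (hypothetical helper)."""
    data = json.loads(line)
    return {
        # "22.25 °C" -> 22.25: keep only the number before the unit.
        "temperature_c": float(data["Temperature"].split()[0]),
        "ph": float(data["pH"]),
        # "121.57 ppm" -> 121.57
        "tds_ppm": float(data["TDS"].split()[0]),
    }


sample = '{"Temperature": "22.25 °C", "pH": "8.36", "TDS": "121.57 ppm"}'
print(parse_sensor_line(sample))
```

Stripping the units early makes it easier to compare readings against thresholds or to format them differently for the WhatsApp message.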

Since I needed to send commands to Raspberry Pi to capture deep algae images and run the object detection model, I utilized the control buttons attached to Arduino Nano to choose among commands. After selecting a command, Arduino Nano sends the selected command to Raspberry Pi via serial communication.

- Button (R) ➡ Run Inference!

- Button (C) ➡ Collect Data!

#️⃣ Download the required libraries for the DS18B20 waterproof temperature sensor:

OneWire | Download

DallasTemperature | Download

You can download the iot_deep_algae_bloom_detector_sensors.ino file to try and inspect the code for collecting water quality data and transferring commands via serial communication.

⭐ Include the required libraries.

#include <OneWire.h>

#include <DallasTemperature.h>

⭐ Define the water quality sensor (pH and TDS, total dissolved solids) settings.

#define pH_sensor A0

#define tds_sensor A1

// Define the pH sensor settings:

#define pH_offset 0.21

#define pH_voltage 5

#define pH_voltage_calibration 0.96

#define pH_array_length 40

int pH_array_index = 0, pH_array[pH_array_length];

// Define the TDS sensor settings:

#define tds_voltage 5

#define tds_array_length 30

int tds_array[tds_array_length], tds_array_temp[tds_array_length];

int tds_array_index = 0;

⭐ Define the DS18B20 waterproof temperature sensor settings.

#define ONE_WIRE_BUS 2

OneWire oneWire(ONE_WIRE_BUS);

DallasTemperature DS18B20(&oneWire);

⭐ Initialize the hardware serial port (Serial) to communicate with Raspberry Pi via serial communication.

Serial.begin(115200);

⭐ Initialize the DS18B20 temperature sensor.

DS18B20.begin();

⭐ Every 20 milliseconds:

⭐ Calculate the pH measurement.

⭐ Calculate the TDS (total dissolved solids) measurement.

⭐ Update the timer — timer.
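The pH conversion in this step can be checked by hand with a short Python sketch. It assumes a plain arithmetic mean of the buffered ADC samples; the avr_arr helper used by the Arduino code is not shown in this section and may average differently. The function name `ph_from_samples` is an assumption for this example, while the 3.5 slope, the 5 V reference, the 1024 ADC steps, and the 0.21 offset come from the Arduino code.

```python
def ph_from_samples(samples, offset=0.21, vref=5.0, adc_steps=1024):
    """Convert buffered 10-bit ADC samples to a pH value (hypothetical helper)."""
    mean_adc = sum(samples) / len(samples)   # assumed plain mean of the buffer
    voltage = mean_adc * vref / adc_steps    # ADC counts -> volts
    return 3.5 * voltage + offset            # linear conversion used in the sketch


# Forty identical samples of 410 counts correspond to roughly 2.0 V.
print(ph_from_samples([410] * 40))
```

Note that the Arduino code later adds the pH_voltage_calibration value (0.96) to this result before reporting it.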

if(millis() - timer > 20){

// Calculate the pH measurement every 20 milliseconds.

pH_array[pH_array_index++] = analogRead(pH_sensor);

if(pH_array_index == pH_array_length) pH_array_index = 0;

float pH_output = avr_arr(pH_array, pH_array_length) * pH_voltage / 1024;

pH_value = 3.5 * pH_output + pH_offset;

// Calculate the TDS measurement every 20 milliseconds.

tds_array[tds_array_index++] = analogRead(tds_sensor);

if(tds_array_index == tds_array_length) tds_array_index = 0;

// Update the timer.

timer = millis();

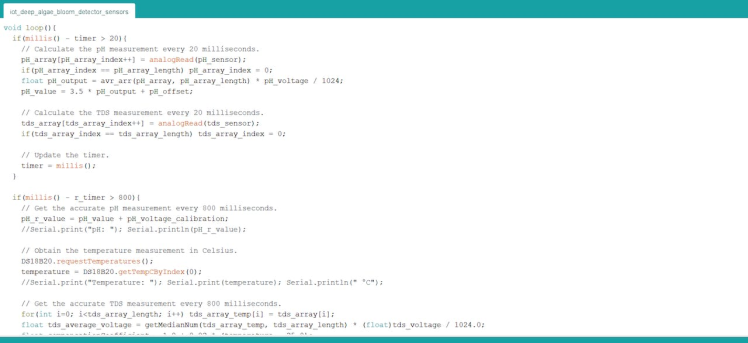

}⭐ Every 800 milliseconds:

⭐ Obtain the accurate (calibrated) pH measurement.

⭐ Obtain the water temperature measurement in Celsius, generated by the DS18B20 sensor.

⭐ Obtain the accurate (calibrated) TDS measurement by compensating water temperature.

⭐ Update the timer — r_timer.

if(millis() - r_timer > 800){

// Get the accurate pH measurement every 800 milliseconds.

pH_r_value = pH_value + pH_voltage_calibration;

//Serial.print("pH: "); Serial.println(pH_r_value);

// Obtain the temperature measurement in Celsius.

DS18B20.requestTemperatures();

temperature = DS18B20.getTempCByIndex(0);

//Serial.print("Temperature: "); Serial.print(temperature); Serial.println(" °C");

// Get the accurate TDS measurement every 800 milliseconds.

for(int i=0; i<tds_array_length; i++) tds_array_temp[i] = tds_array[i];

float tds_average_voltage = getMedianNum(tds_array_temp, tds_array_length) * (float)tds_voltage / 1024.0;

float compensationCoefficient = 1.0 + 0.02 * (temperature - 25.0);

float compensatedVoltage = tds_average_voltage / compensationCoefficient;

tds_value = (133.42*compensatedVoltage*compensatedVoltage*compensatedVoltage - 255.86*compensatedVoltage*compensatedVoltage + 857.39*compensatedVoltage)*0.5;

//Serial.print("TDS: "); Serial.print(tds_value); Serial.println(" ppm\n\n");

// Update the timer.

r_timer = millis();

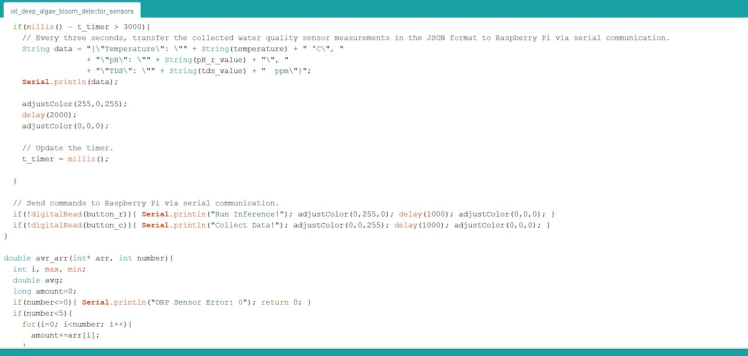

}⭐ Every three seconds, transfer the collected water quality sensor measurements in the JSON format to Raspberry Pi via serial communication.

{"Temperature": "22.25 °C", "pH": "8.36", "TDS": "121.57 ppm"}

⭐ Then, blink the RGB LED as magenta.

⭐ Update the timer — t_timer.

if(millis() - t_timer > 3000){

// Every three seconds, transfer the collected water quality sensor measurements in the JSON format to Raspberry Pi via serial communication.

String data = "{\"Temperature\": \"" + String(temperature) + " °C\", "

+ "\"pH\": \"" + String(pH_r_value) + "\", "

+ "\"TDS\": \"" + String(tds_value) + " ppm\"}";

Serial.println(data);

adjustColor(255,0,255);

delay(2000);

adjustColor(0,0,0);

// Update the timer.

t_timer = millis();

}

⭐ If the control button (R) is pressed, send the Run Inference! command to Raspberry Pi via serial communication. Then, blink the RGB LED as green.

⭐ If the control button (C) is pressed, send the Collect Data! command to Raspberry Pi via serial communication. Then, blink the RGB LED as blue.

if(!digitalRead(button_r)){ Serial.println("Run Inference!"); adjustColor(0,255,0); delay(1000); adjustColor(0,0,0); }

if(!digitalRead(button_c)){ Serial.println("Collect Data!"); adjustColor(0,0,255); delay(1000); adjustColor(0,0,0); }

- Also, the device prints notifications and sensor measurements on the Arduino IDE serial monitor for debugging.

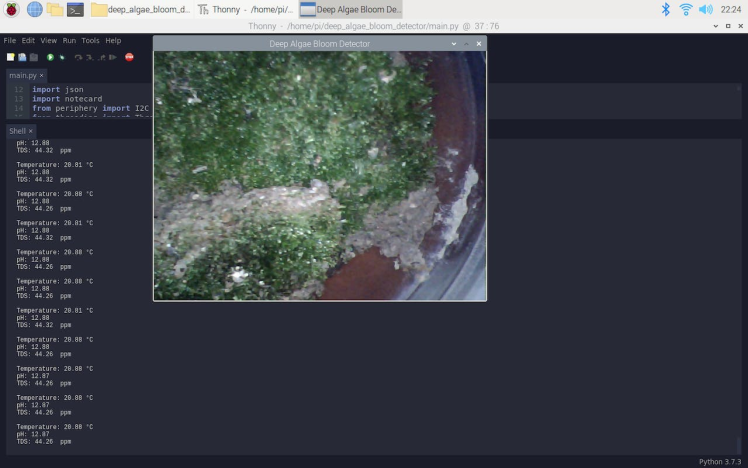
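To make the arithmetic in the Arduino sketch easier to check by hand, the same pH and TDS conversions can be mirrored in Python. This is only a rough sketch: the function names `ph_from_adc` and `tds_from_adc` are hypothetical, the constants are copied from the definitions above, and `statistics.mean` and `statistics.median` merely stand in for the `avr_arr` and `getMedianNum` helpers, whose implementations are not shown in this section.

```python
from statistics import mean, median

ADC_STEPS = 1024        # divisor used in the Arduino sketch (10-bit ADC)
PH_VOLTAGE = 5.0        # pH_voltage
PH_OFFSET = 0.21        # pH_offset
PH_CALIBRATION = 0.96   # pH_voltage_calibration
TDS_VOLTAGE = 5.0       # tds_voltage


def ph_from_adc(samples):
    """Convert raw pH readings (0-1023) to the calibrated pH value."""
    # Assumption: a plain mean stands in for avr_arr().
    voltage = mean(samples) * PH_VOLTAGE / ADC_STEPS
    ph_value = 3.5 * voltage + PH_OFFSET
    return ph_value + PH_CALIBRATION


def tds_from_adc(samples, temperature_c):
    """Convert raw TDS readings (0-1023) to ppm, compensated for temperature."""
    # Assumption: statistics.median stands in for getMedianNum().
    voltage = median(samples) * TDS_VOLTAGE / ADC_STEPS
    coefficient = 1.0 + 0.02 * (temperature_c - 25.0)
    v = voltage / coefficient
    return (133.42 * v ** 3 - 255.86 * v ** 2 + 857.39 * v) * 0.5


if __name__ == "__main__":
    print(round(ph_from_adc([512] * 40), 2))
    print(round(tds_from_adc([100] * 30, 22.25), 2))
```

Running the two functions on a handful of logged readings is a quick way to confirm that the values printed on the serial monitor match the formulas.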

After managing to transfer information from Arduino Nano to Raspberry Pi via serial communication, I programmed Raspberry Pi to capture images with the borescope camera if it receives the Collect Data! command.

Since I utilized a single code file (main.py) to run all functions, you can find more detailed information regarding the code and Raspberry Pi settings in Step 8.
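Because each measurement in the JSON packet arrives as a string with its unit attached, it can help to convert the packet into plain numbers before logging or comparing values. The sketch below assumes the packet format shown earlier; the function name `parse_sensor_packet` and the output key names are hypothetical and are not part of main.py.

```python
import json


def parse_sensor_packet(line):
    """Parse one JSON line from Arduino Nano into numeric readings."""
    packet = json.loads(line)
    return {
        # Values look like "22.25 °C"; keep only the leading number.
        "temperature_c": float(packet["Temperature"].split()[0]),
        "ph": float(packet["pH"]),
        "tds_ppm": float(packet["TDS"].split()[0]),
    }


if __name__ == "__main__":
    sample = '{"Temperature": "22.25 °C", "pH": "8.36", "TDS": "121.57 ppm"}'
    print(parse_sensor_packet(sample))
```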

⭐ Initialize serial communication with Arduino Nano to obtain water quality sensor measurements and the given commands.

self.arduino_nano = serial.Serial("/dev/ttyUSB0", 115200, timeout=1000)

⭐ Obtain the real-time video stream generated by the borescope camera.

self.camera = cv2.VideoCapture(0)

⭐ In the display_camera_feed function:

⭐ Display the real-time video stream generated by the borescope camera.

⭐ Stop the video stream if requested.

⭐ Store the latest frame captured by the borescope camera.

def display_camera_feed(self):

    # Display the real-time video stream generated by the borescope camera.

    ret, img = self.camera.read()

    cv2.imshow("Deep Algae Bloom Detector", img)

    # Stop the video stream if requested.

    if cv2.waitKey(1) != -1:

        self.camera.release()

        cv2.destroyAllWindows()

        print("\nCamera Feed Stopped!")

    # Store the latest frame captured by the borescope camera.

    self.latest_frame = img

⭐ In the save_img_sample function:

⭐ Obtain the current date & time.

⭐ Then, save the recently captured image (latest frame) under the samples folder by appending the current date & time to its file name:

IMG_20221230_172403.jpg
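The body of save_img_sample is not reproduced in this section, so the following is only a sketch of how such a file name could be built. The helper name `build_sample_path` is hypothetical, and the `strftime` pattern is inferred from the example file name above.

```python
from datetime import datetime


def build_sample_path(now=None, folder="samples"):
    """Return a path such as samples/IMG_20221230_172403.jpg."""
    now = now or datetime.now()
    return "{}/IMG_{}.jpg".format(folder, now.strftime("%Y%m%d_%H%M%S"))


if __name__ == "__main__":
    print(build_sample_path(datetime(2022, 12, 30, 17, 24, 3)))
    # Assumed usage in main.py: write the stored frame with OpenCV, e.g.
    # cv2.imwrite(build_sample_path(), self.latest_frame)
```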

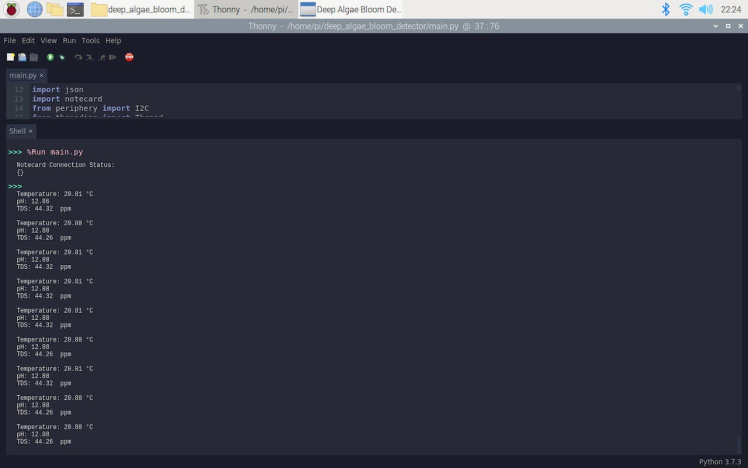

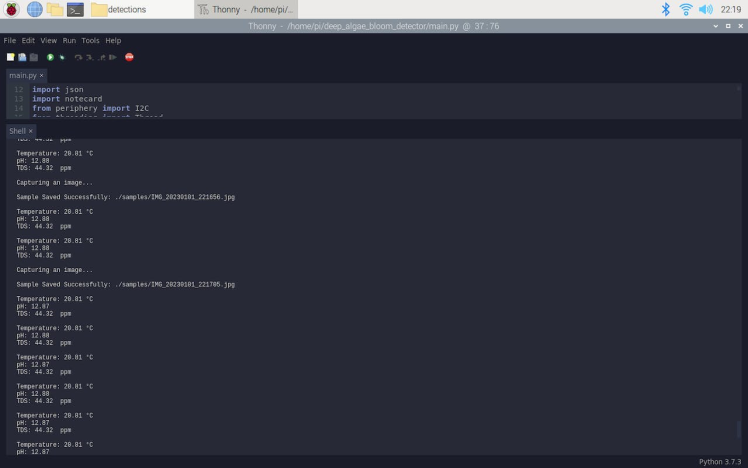

After running the main.py file on Raspberry Pi:

- When Arduino Nano transfers the collected water quality data via serial communication, the device blinks the RGB LED as magenta.

- Then, the device prints the received water quality information on the shell.

- If the user presses the control button (C) to send the Collect Data! command, the device saves the latest frame captured by the borescope camera under the samples folder by appending the current date & time to its file name.

- Then, the device blinks the RGB LED as blue and prints the image file path on the shell.

- Also, the device shows the real-time video stream generated by the borescope camera on the screen.

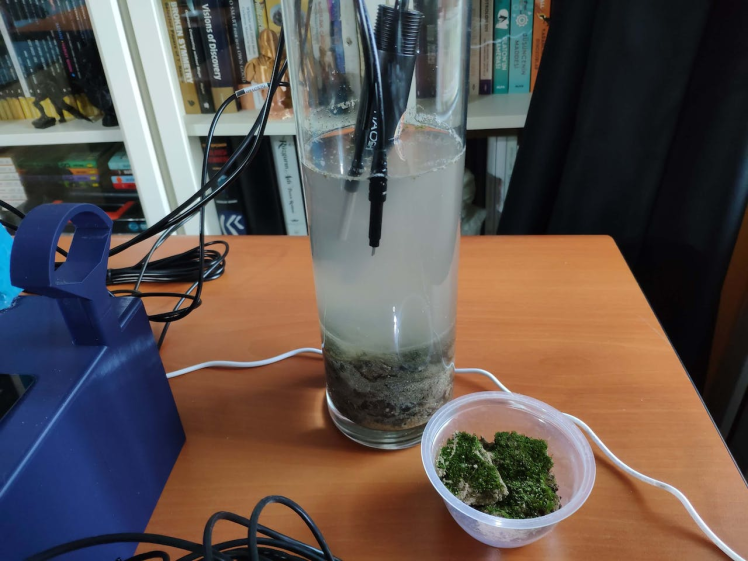

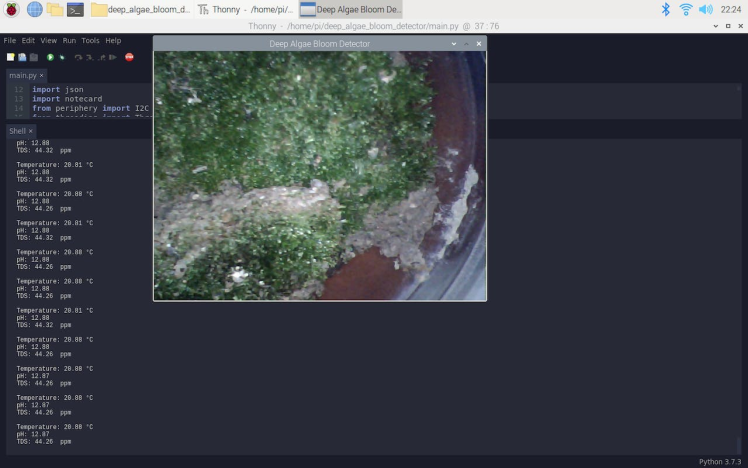

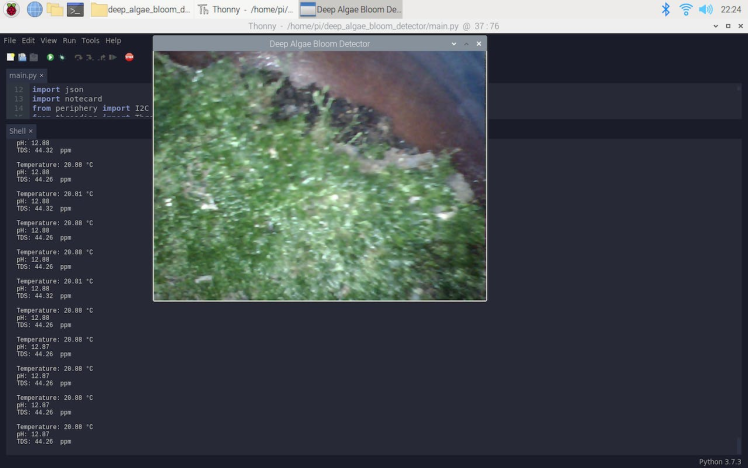

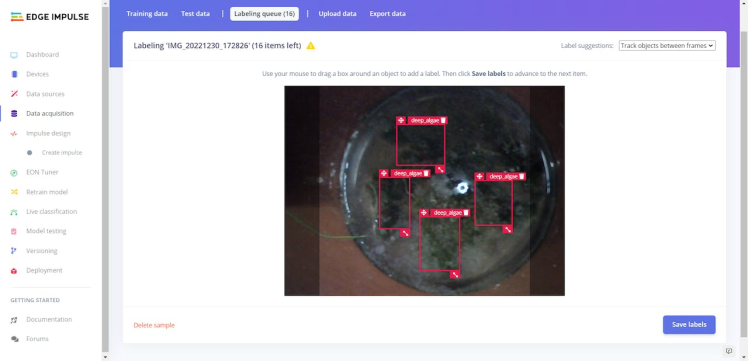

Since I needed to collect numerous deep algae bloom images to create a data set with notable validity, I decided to reproduce an algal bloom by enriching soil from a river near my hometown with fertilizer.

After leaving the enriched soil in a glass vase for a week, the algal bloom entrenched itself under the surface.

To test the borescope camera, I added more water to the glass vase. Then, I started to capture deep algae images and collect water quality data from the glass vase.

As far as my experiments go, the device operates faultlessly while collecting water quality data, capturing deep algal bloom images, and saving them on Raspberry Pi :)

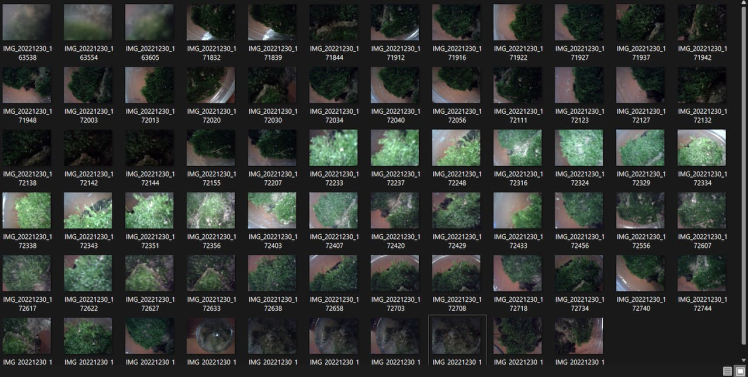

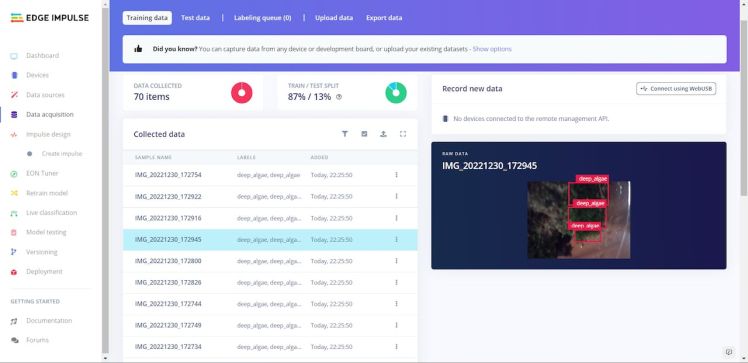

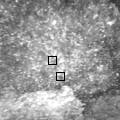

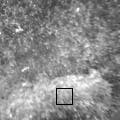

After capturing numerous deep algal bloom images from the glass vase for nearly two weeks, I assembled my relatively modest data set under the samples folder on Raspberry Pi, including training and testing samples for my object detection (FOMO) model.

When I completed capturing deep algae images and storing them on Raspberry Pi, I started to work on my object detection (FOMO) model to detect potential algal blooms so as to prevent their hazardous effects on marine life and the ecosystem.

Since Edge Impulse supports almost every microcontroller and development board due to its model deployment options, I decided to utilize Edge Impulse to build my object detection model. Also, Edge Impulse provides an elaborate machine learning algorithm (FOMO) for running more accessible and faster object detection models on Linux devices such as Raspberry Pi.

Edge Impulse FOMO (Faster Objects, More Objects) is a novel machine learning algorithm that brings object detection to highly constrained devices. FOMO models can count objects, find the location of the detected objects in an image, and track multiple objects in real time, requiring up to 30x less processing power and memory than MobileNet SSD or YOLOv5.

Even though Edge Impulse supports JPG or PNG files to upload as samples directly, each training or testing sample needs to be labeled manually. Therefore, I needed to follow the steps below to format my data set so as to train my object detection model accurately:

- Data Scaling (Resizing)

- Data Labeling

Since I focused on detecting deep algae bloom, I preprocessed my data set effortlessly to label each image sample on Edge Impulse by utilizing one class (label):

- deep_algae

Conveniently, Edge Impulse allows building predictive models optimized in size and accuracy automatically and deploying the trained model as a Linux ARMv7 application. Therefore, after scaling (resizing) and preprocessing my data set to label samples, I was able to build an object detection model to detect potential deep algal bloom, which runs on Raspberry Pi without any additional requirements.

You can inspect my object detection (FOMO) model on Edge Impulse as a public project.

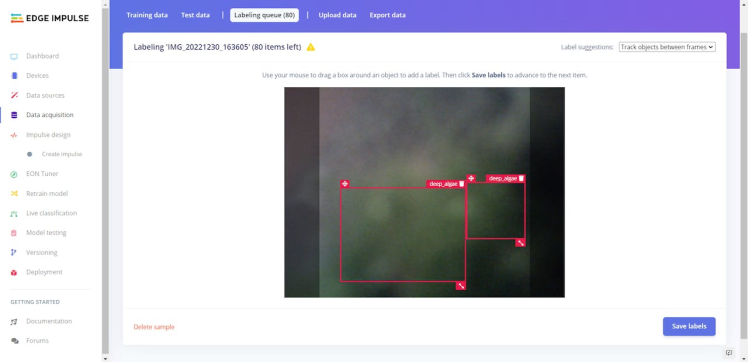

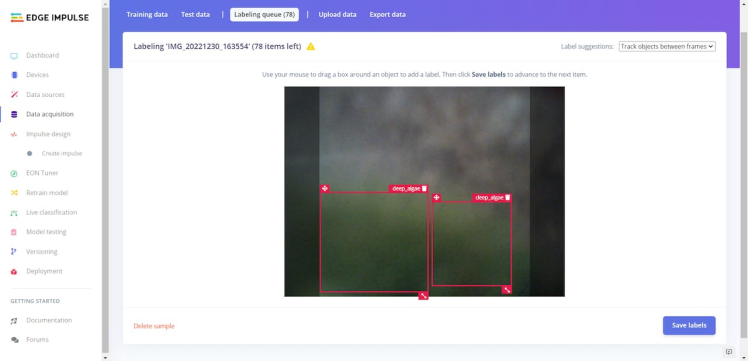

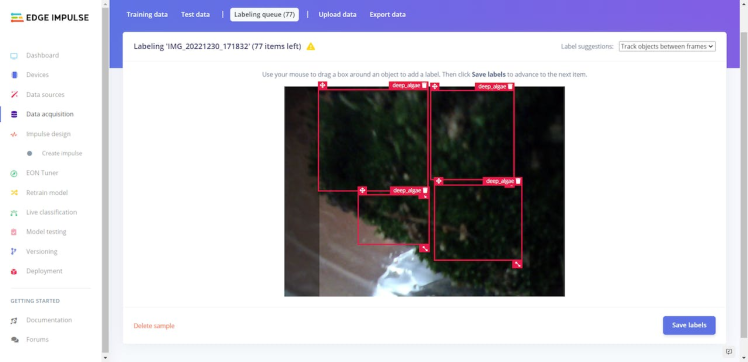

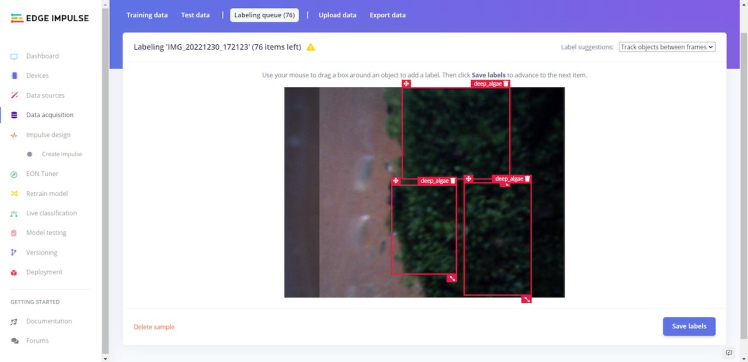

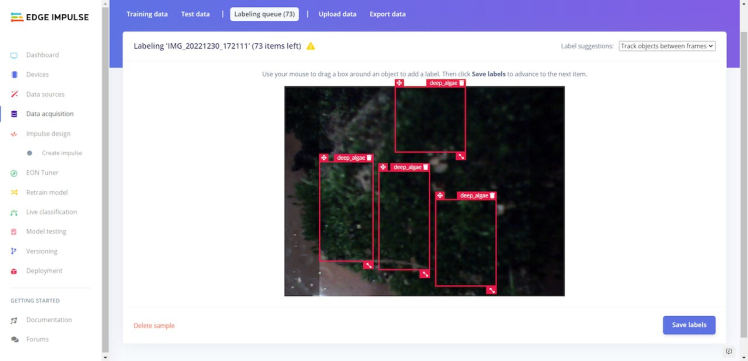

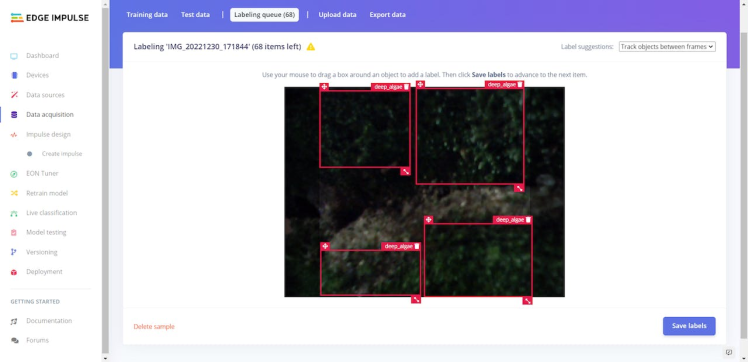

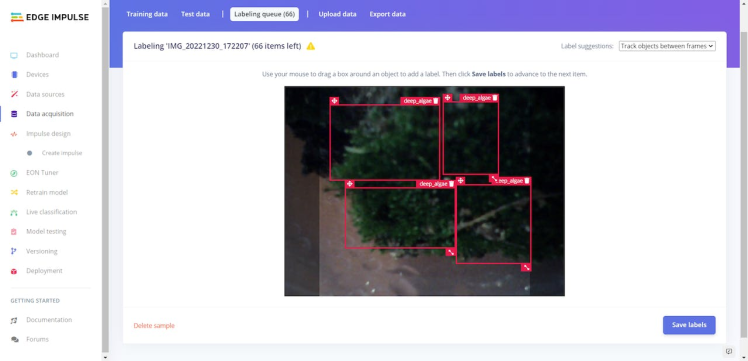

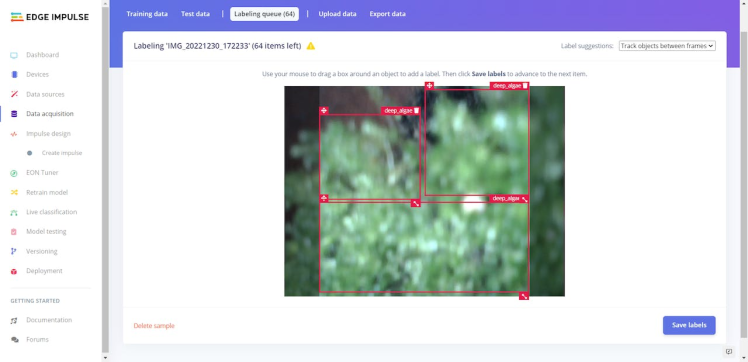

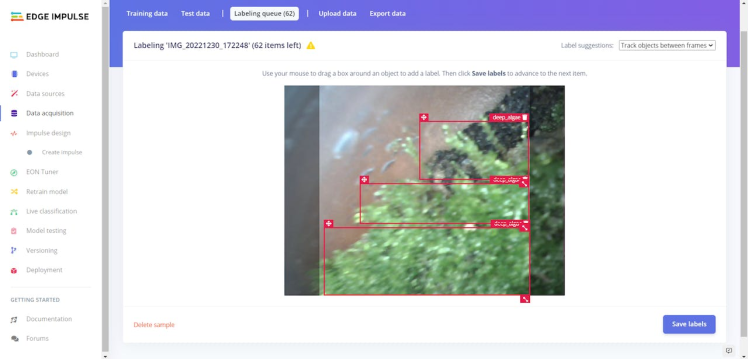

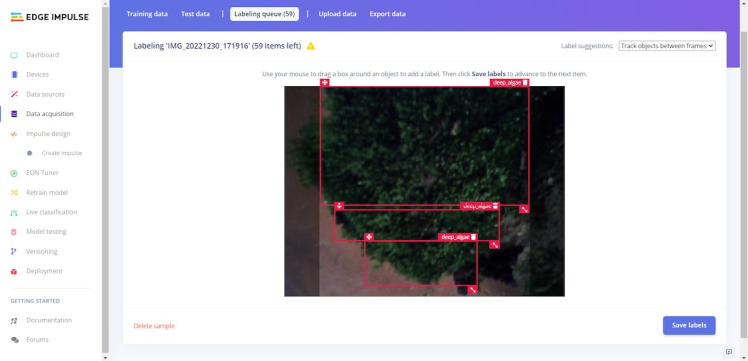

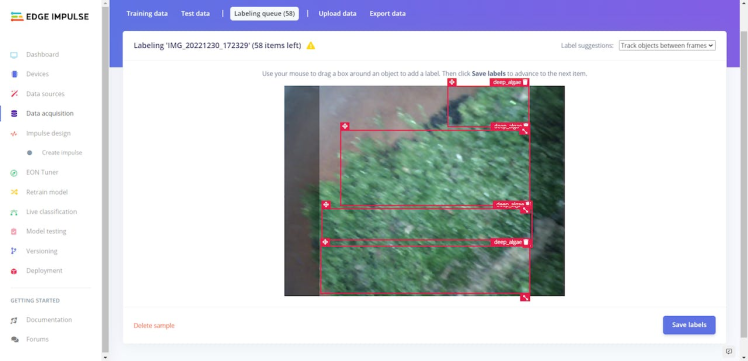

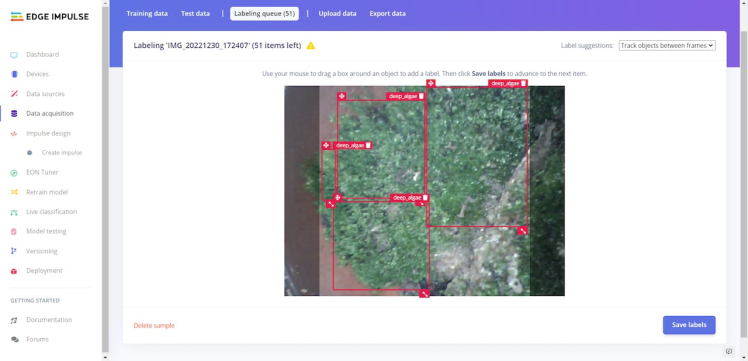

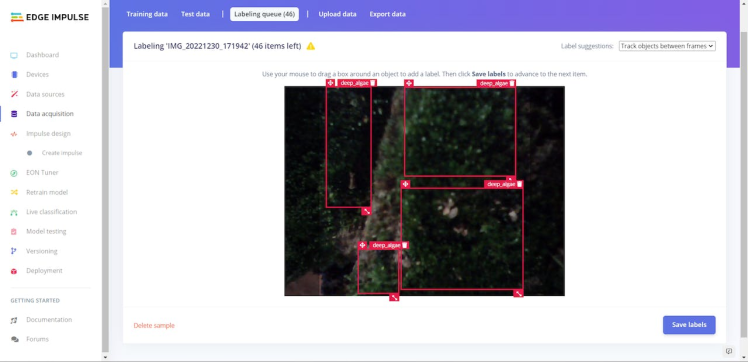

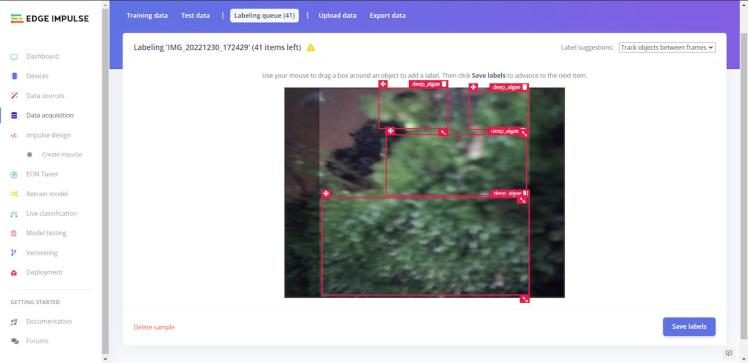

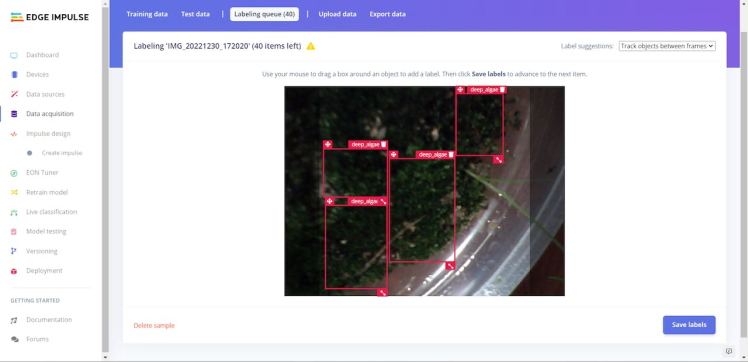

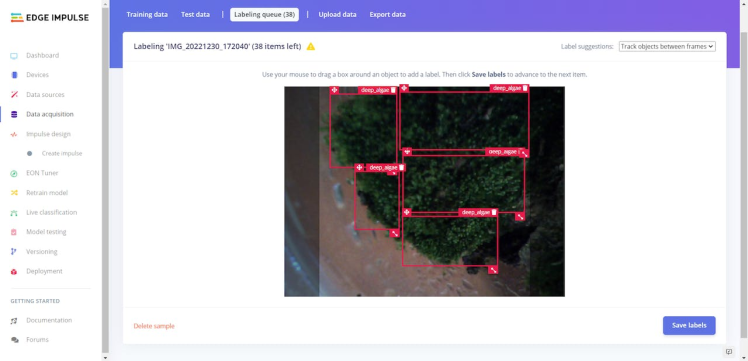

Step 7.1: Uploading images (samples) to Edge Impulse and labeling samples

After collecting training and testing image samples, I uploaded them to my project on Edge Impulse. Then, I labeled each sample.

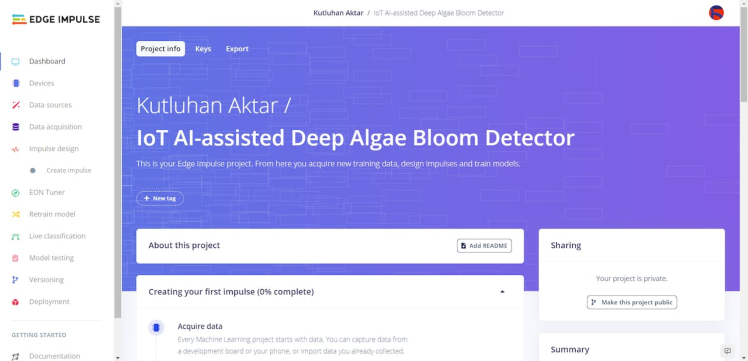

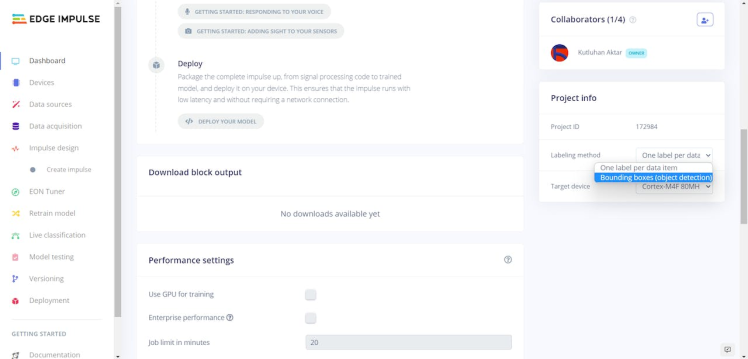

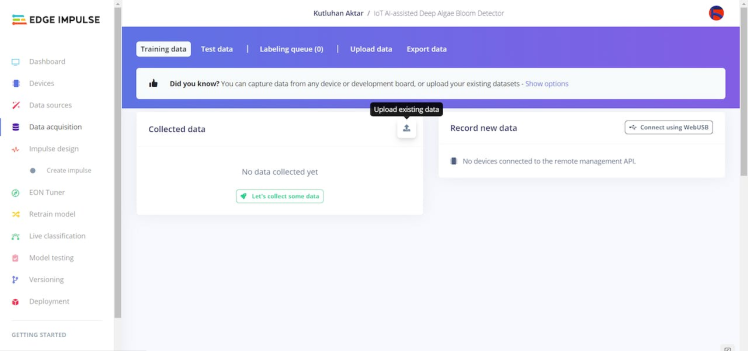

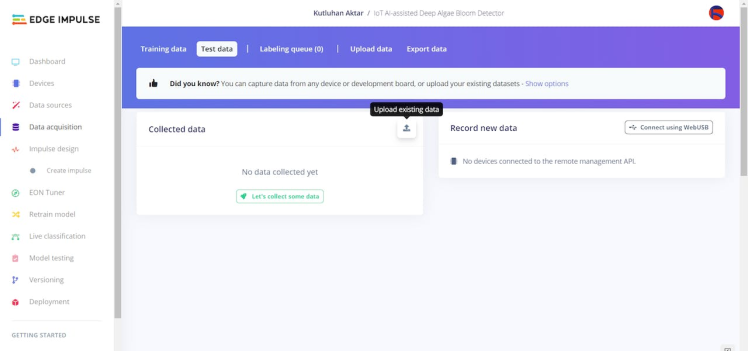

#️⃣ First of all, sign up for Edge Impulse and create a new project.

#️⃣ To be able to label image samples manually on Edge Impulse for object detection models, go to Dashboard ➡ Project info ➡ Labeling method and select Bounding boxes (object detection).

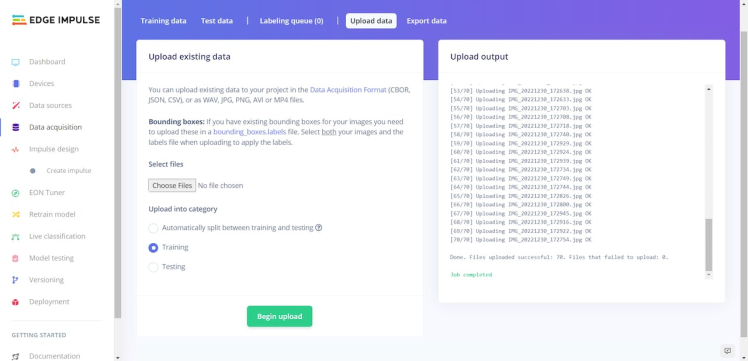

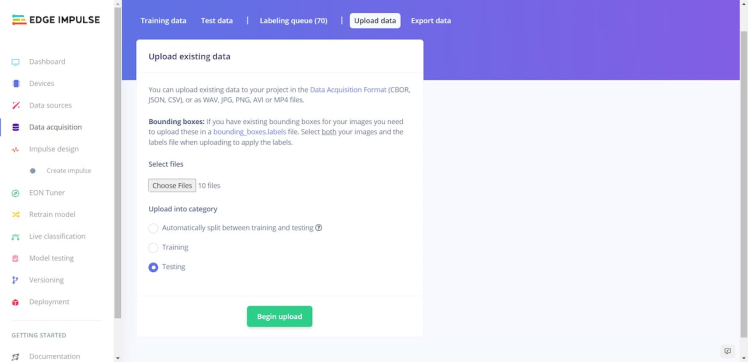

#️⃣ Navigate to the Data acquisition page and click the Upload existing data button.

#️⃣ Then, choose the data category (training or testing), select image files, and click the Begin upload button.

After uploading my data set successfully, I labeled each sample by utilizing the deep_algae class (label). In Edge Impulse, labeling an object is as easy as dragging a box around it and entering a label. Also, Edge Impulse runs a tracking algorithm in the background while labeling objects, so it moves bounding boxes automatically for the same objects in different images.

#️⃣ Go to Data acquisition ➡ Labeling queue (Object detection labeling). It shows all the unlabeled images (training and testing) remaining in the given data set.

#️⃣ Finally, select an unlabeled image, drag bounding boxes around objects, click the Save labels button, and repeat this process until the whole data set is labeled.

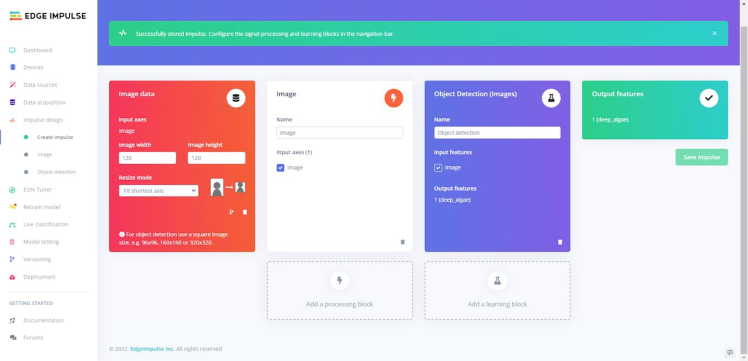

After labeling my training and testing samples successfully, I designed an impulse and trained it on detecting potential deep algal bloom.

An impulse is a custom neural network model in Edge Impulse. I created my impulse by employing the Image preprocessing block and the Object Detection (Images) learning block.

The Image preprocessing block optionally turns the input image format to grayscale and generates a features array from the raw image.

The Object Detection (Images) learning block represents a machine learning algorithm that detects objects on the given image, distinguished between model labels.

#️⃣ Go to the Create impulse page and set image width and height parameters to 120. Then, select the resize mode parameter as Fit shortest axis so as to scale (resize) given training and testing image samples.

#️⃣ Select the Image preprocessing block and the Object Detection (Images) learning block. Finally, click Save Impulse.

#️⃣ Before generating features for the object detection model, go to the Image page and set the Color depth parameter as Grayscale. Then, click Save parameters.

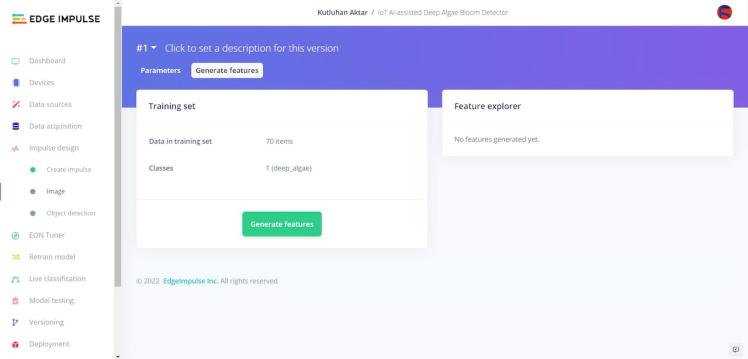

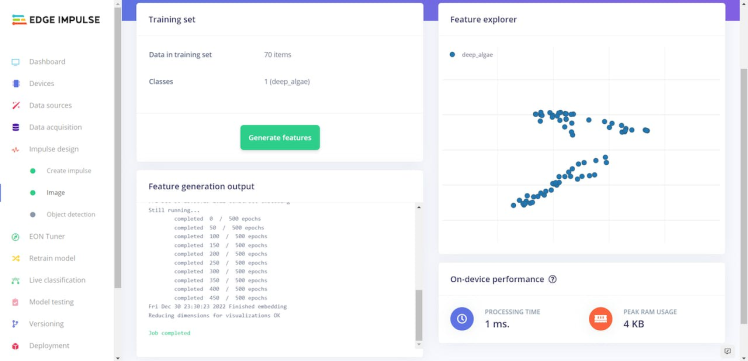

#️⃣ After saving parameters, click Generate features to apply the Image preprocessing block to training image samples.

#️⃣ Finally, navigate to the Object detection page and click Start training.
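To get a feel for what the 120 x 120 resize does to a camera frame, the geometry can be worked out in a few lines. This sketch assumes that Fit shortest axis scales the frame until its shortest side equals the target and then center-crops the longer side; that reading of the option, the function name `fit_shortest_axis`, and the 640 x 480 example frame are all assumptions, not details taken from my project.

```python
def fit_shortest_axis(width, height, target=120):
    """Return (scaled_w, scaled_h, crop_x, crop_y) for a square target."""
    scale = target / min(width, height)
    scaled_w = round(width * scale)
    scaled_h = round(height * scale)
    # Center-crop the longer axis down to the target size.
    crop_x = (scaled_w - target) // 2
    crop_y = (scaled_h - target) // 2
    return scaled_w, scaled_h, crop_x, crop_y


if __name__ == "__main__":
    print(fit_shortest_axis(640, 480))
```

Under these assumptions, objects near the left and right edges of a wide frame would fall outside the model input, which is worth keeping in mind while aiming the borescope.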

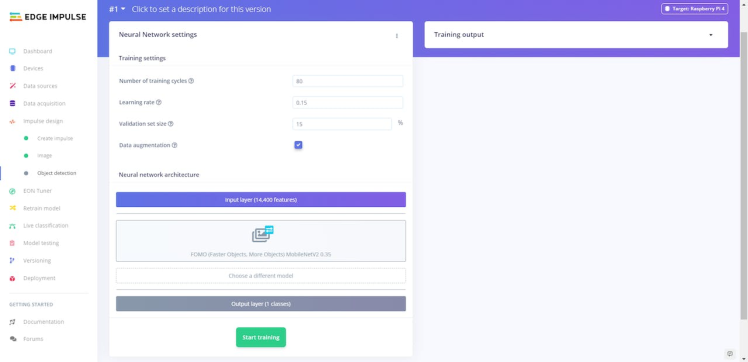

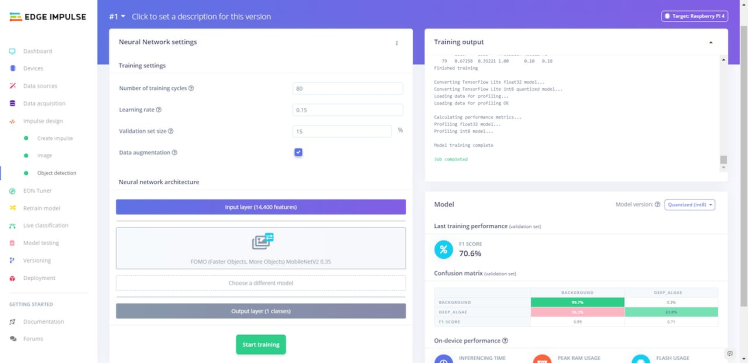

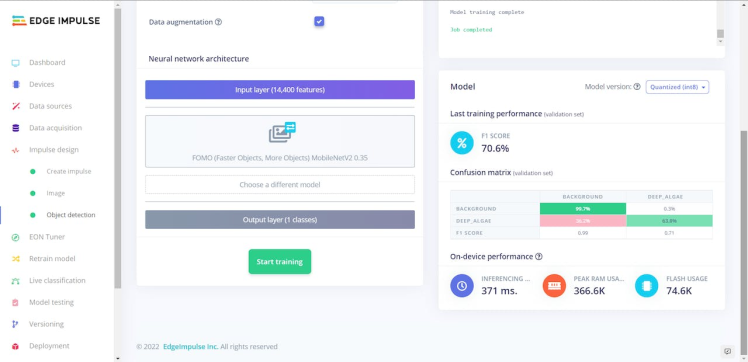

According to my experiments with my object detection model, I modified the neural network settings and architecture to build an object detection model with high accuracy and validity:

Neural network settings:

- Number of training cycles ➡ 80

- Learning rate ➡ 0.15

- Validation set size ➡ 15

Neural network architecture:

- FOMO (Faster Objects, More Objects) MobileNetV2 0.35

After generating features and training my FOMO model with training samples, Edge Impulse reported an F1 score of 70.6%.

The F1 score is only about 70.6% because the training set is small and shows algae groups in green and brownish green, which are close in color tone to the background soil. Because of this similarity, the model confuses some algae groups with the background soil. Therefore, I am still collecting samples to improve my data set.
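For readers unfamiliar with the metric, the F1 score combines precision and recall into one number, so it penalizes both false detections and missed objects. The sketch below shows the standard formula; the function name `f1_score` and the example counts are purely illustrative and are not the confusion-matrix values of my model.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """Return the harmonic mean of precision and recall (0.0 if undefined)."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Illustrative counts only: 8 correct detections, 2 false alarms, 2 misses.
    print(f1_score(8, 2, 2))
```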

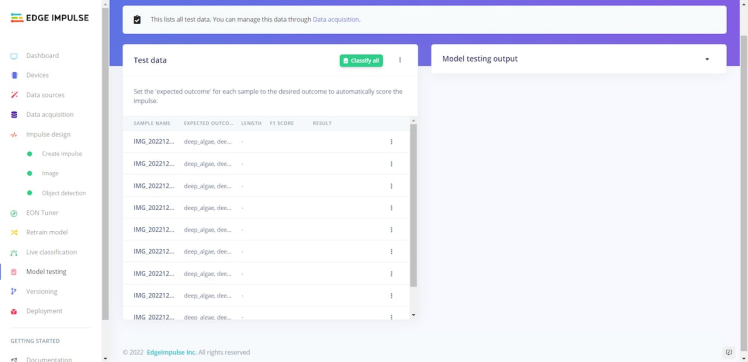

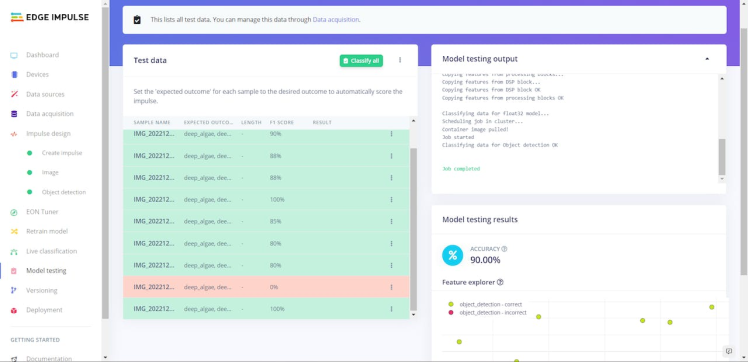

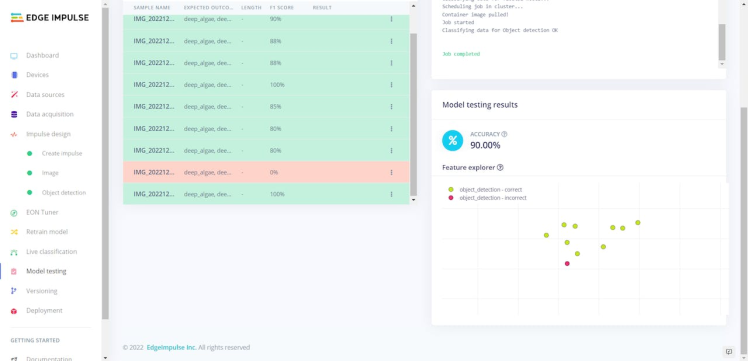

After building and training my object detection model, I tested its accuracy and validity by utilizing testing image samples.

On the testing samples, the evaluated accuracy of the model is 90%.

#️⃣ To validate the trained model, go to the Model testing page and click Classify all.

After validating my object detection model, I deployed it as a fully optimized Linux ARMv7 application (.eim).

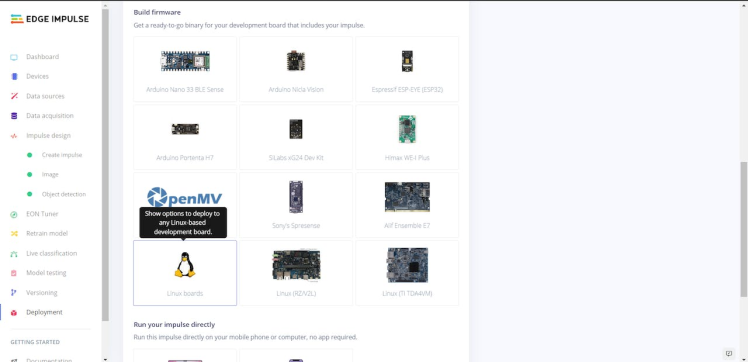

#️⃣ To deploy the validated model as a Linux ARMv7 application, navigate to the Deployment page and select Linux boards.

#️⃣ On the pop-up window, open the deployment options for all Linux-based development boards.

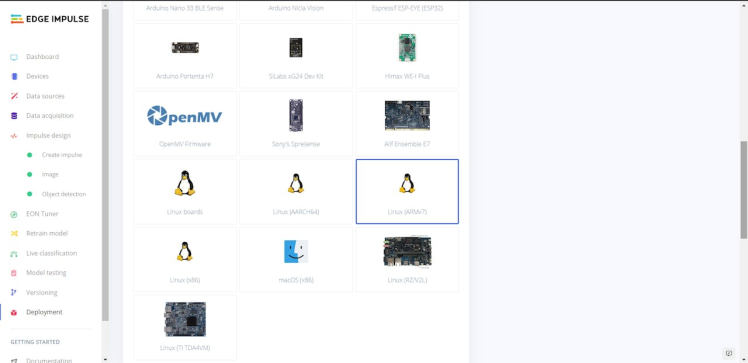

#️⃣ From the new deployment options, select Linux (ARMv7).

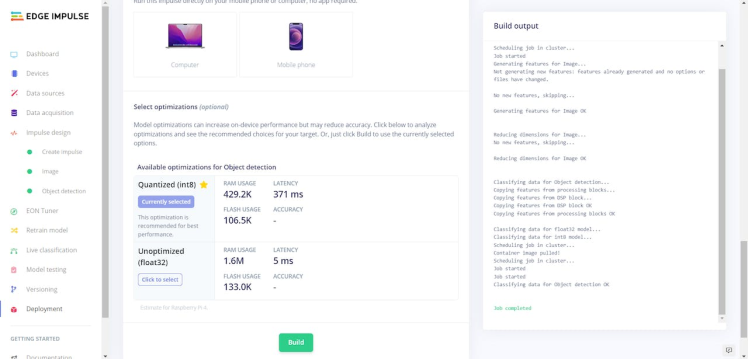

#️⃣ Then, choose the Quantized (int8) optimization option to get the best performance possible while running the deployed model.

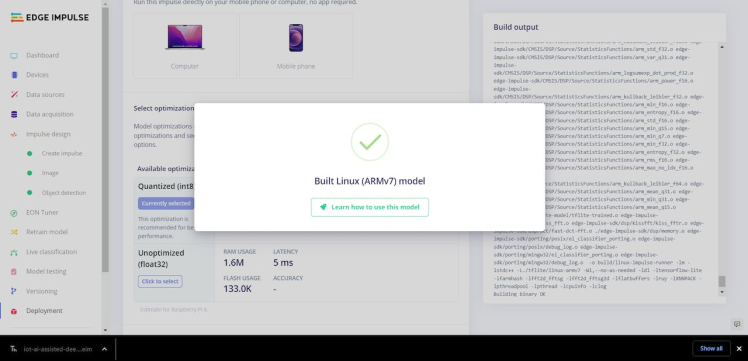

#️⃣ Finally, click Build to download the model as a Linux ARMv7 application (.eim).

After building, training, and deploying my object detection (FOMO) model as a Linux ARMv7 application on Edge Impulse, I needed to upload the generated application to Raspberry Pi to run the model directly so as to create an easy-to-use and capable device operating with minimal latency, memory usage, and power consumption.

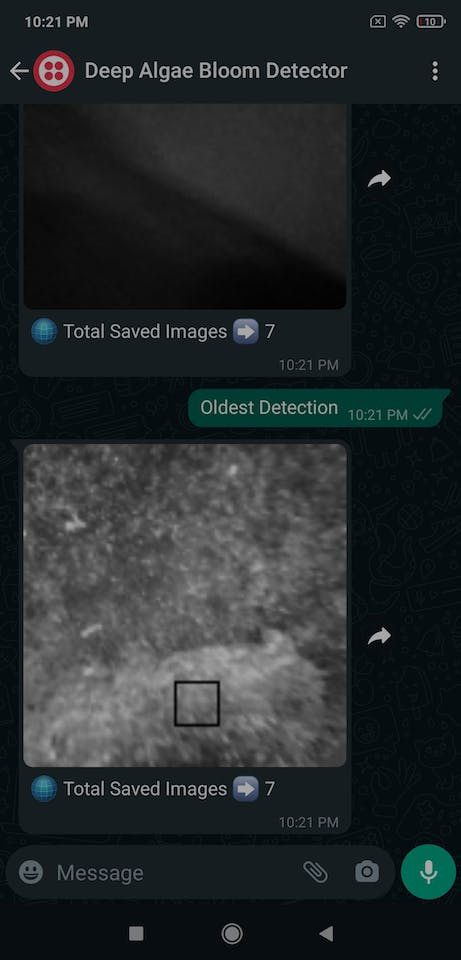

FOMO object detection models can count objects under the assigned classes and provide the detected object's location using centroids. Therefore, I was able to modify the captured images to highlight the detected algae blooms with bounding boxes in Python.
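Since the Edge Impulse runner reports each detection as a small box with x, y, width, and height fields (as printed by the inference code later in this step), the centroid can be recovered from those fields. The helper name `centroid` is hypothetical, and the example values are made up for illustration.

```python
def centroid(bb):
    """Return the (x, y) center of one bounding-box dictionary."""
    return bb["x"] + bb["width"] / 2, bb["y"] + bb["height"] / 2


if __name__ == "__main__":
    # Made-up detection in the coordinate space of the resized image.
    detection = {"label": "deep_algae", "value": 0.87,
                 "x": 40, "y": 56, "width": 8, "height": 8}
    print(centroid(detection))
```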

Since Edge Impulse optimizes and formats preprocessing, configuration, and learning blocks into an EIM file while deploying a model as a Linux ARMv7 application, I was able to import my object detection model effortlessly to run inferences in Python.

However, before proceeding with the following steps, I needed to set up Notecard and Edge Impulse on my Raspberry Pi 4.

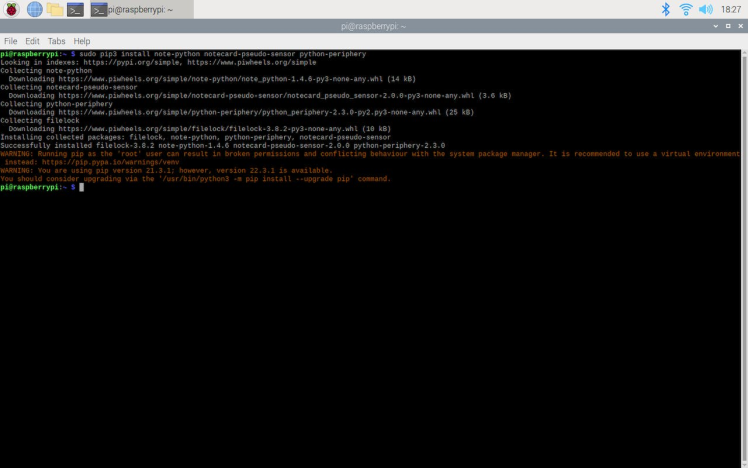

#️⃣ To communicate with Notecard over I2C, install the required libraries on Raspberry Pi via the terminal.

sudo pip3 install note-python notecard-pseudo-sensor python-periphery

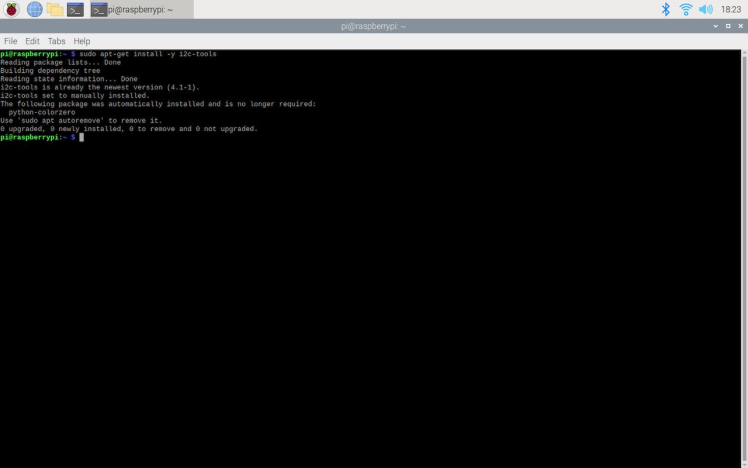

#️⃣ Enable the I2C interface on Raspberry Pi Configuration and install the I2C helper tools via the terminal.

sudo apt-get install -y i2c-tools

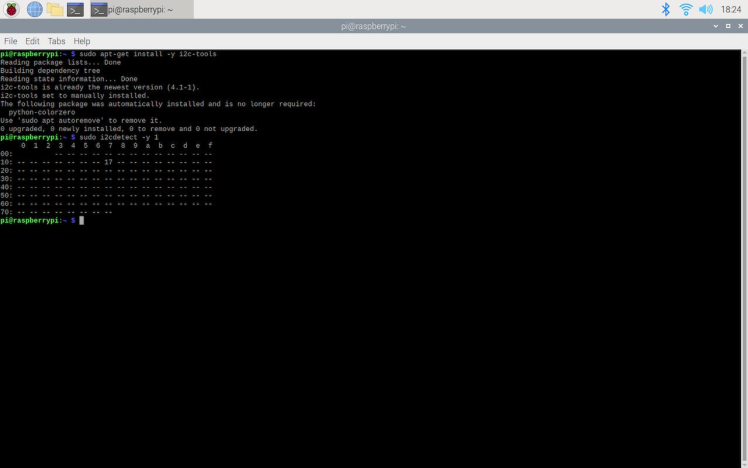

#️⃣ Then, check whether Notecard is detected successfully or not via the terminal.

sudo i2cdetect -y 1

The terminal should show the I2C address of Notecard (0x17) in the output.

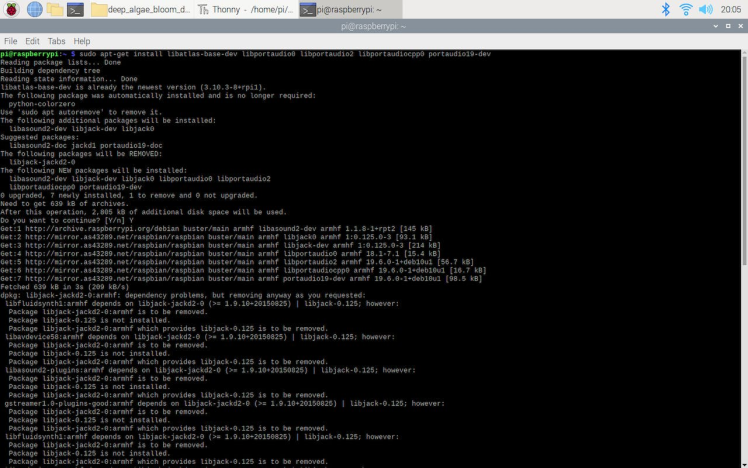

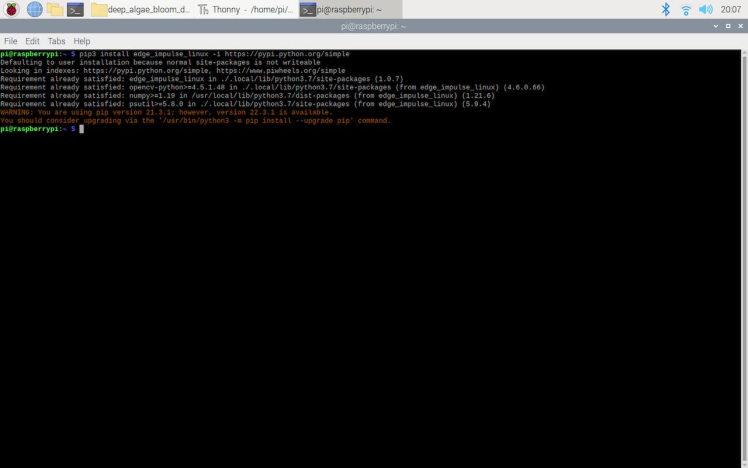

#️⃣ To run inferences, install the Edge Impulse Linux Python SDK on Raspberry Pi via the terminal.

sudo apt-get install libatlas-base-dev libportaudio0 libportaudio2 libportaudiocpp0 portaudio19-dev

pip3 install edge_impulse_linux -i https://pypi.python.org/simple

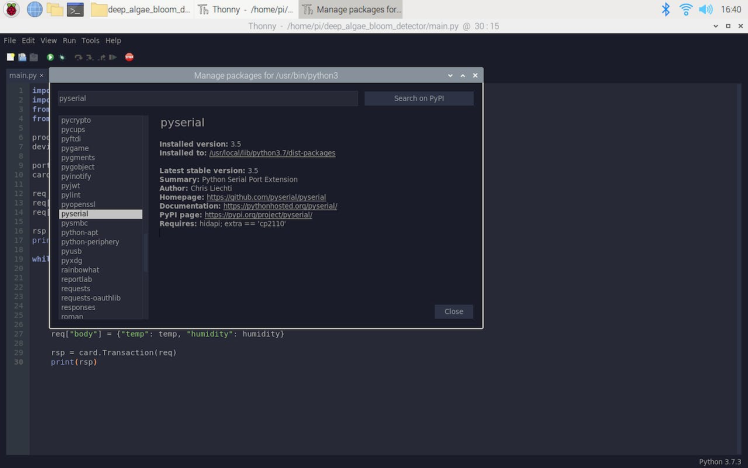

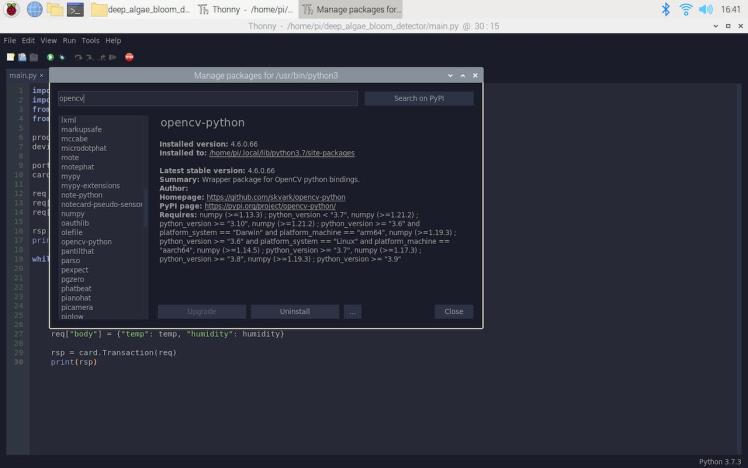

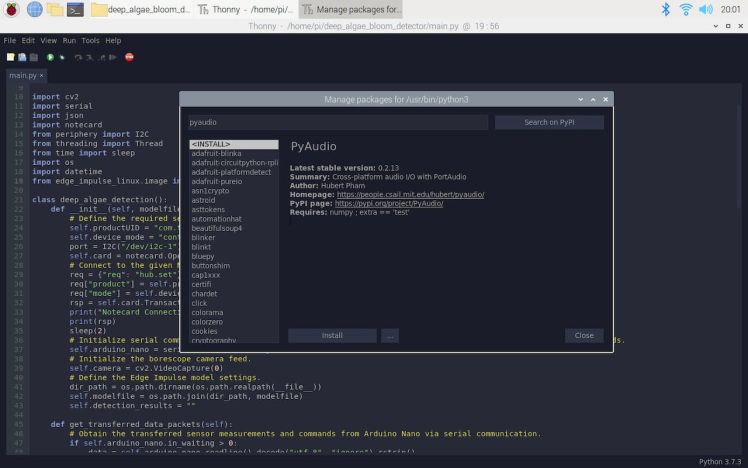

#️⃣ Since I decided to run my program on Thonny due to its built-in shell, I was able to install the remaining required libraries via Thonny's package manager.

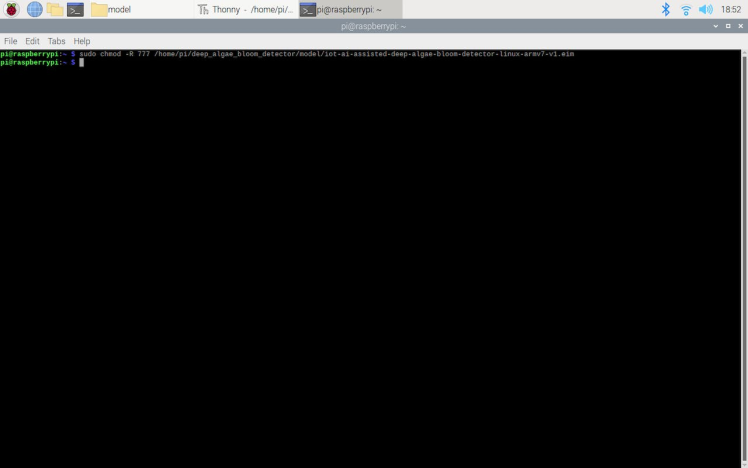

#️⃣ Since Thonny cannot open the EIM files as non-root, change the Linux ARMv7 application's permissions to allow Thonny to execute the file as a program via the terminal.

sudo chmod -R 777 /home/pi/deep_algae_bloom_detector/model/iot-ai-assisted-deep-algae-bloom-detector-linux-armv7-v1.eim

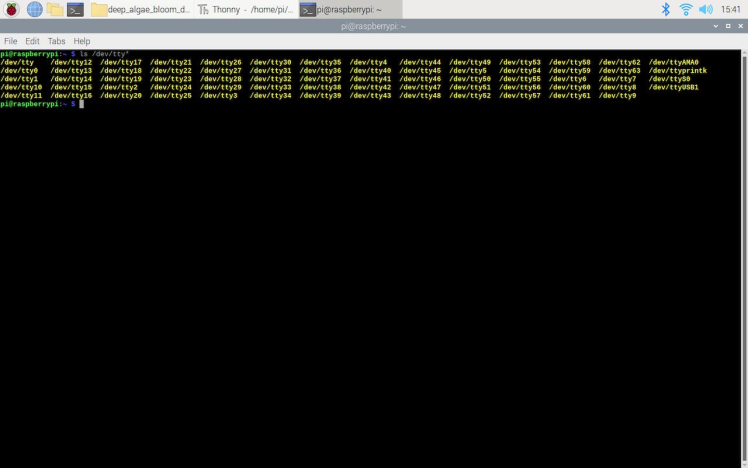

#️⃣ To receive the information transferred by Arduino Nano via serial communication, find Arduino Nano's port via the terminal.

ls /dev/tty*

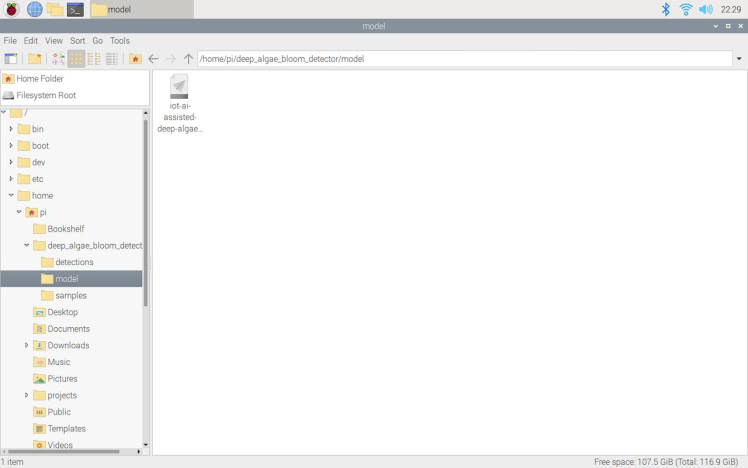
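The same check can be scripted so that the program reports which serial devices are present before it opens one. This is a small sketch using only the standard library; the function name `find_serial_ports` is hypothetical, and the `/dev/ttyACM*` pattern is an assumption for boards that enumerate differently from the `/dev/ttyUSB0` port used in this project.

```python
from glob import glob


def find_serial_ports(patterns=("/dev/ttyUSB*", "/dev/ttyACM*")):
    """Return the USB serial device paths that currently exist."""
    ports = []
    for pattern in patterns:
        ports.extend(sorted(glob(pattern)))
    return ports


if __name__ == "__main__":
    print(find_serial_ports())
```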

As shown below, the application on Raspberry Pi consists of three folders and two code files:

- /detections

- /model

- /samples

- main.py

- update_web_detection_folder.py

After setting up Notecard and uploading the application (.eim) successfully, I programmed Raspberry Pi to capture algae bloom images via the borescope camera and run inferences so as to detect potential deep algal bloom.

Also, after running inferences successfully, I employed Notecard to transmit the detection results and the collected water quality data to the webhook via Notehub.io, which sends the received information to the user via Twilio's WhatsApp API.
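The code in main.py builds the query string by replacing spaces with %20, which leaves characters such as ➡ and ° unescaped. Whether Notehub.io requires stricter encoding is not something I have verified, so treat the following as an optional sketch: it percent-encodes every parameter with the standard library while keeping the same web.get request fields (req, route, name) that main.py sends. The function name `build_web_get_request` is hypothetical.

```python
from urllib.parse import quote, urlencode


def build_web_get_request(results, sensors, route="TwilioWhatsApp"):
    """Build a Notecard web.get request with a fully encoded query string."""
    params = {
        "results": results,
        "temp": sensors["Temperature"],
        "pH": sensors["pH"],
        "TDS": sensors["TDS"],
    }
    # quote_via=quote encodes spaces as %20 and non-ASCII text as UTF-8 bytes.
    query = "?" + urlencode(params, quote_via=quote)
    return {"req": "web.get", "route": route, "name": query}


if __name__ == "__main__":
    sensors = {"Temperature": "22.25 °C", "pH": "8.36", "TDS": "121.57 ppm"}
    print(build_web_get_request("Potential Algae Bloom ➡ 3", sensors))
```

The returned dictionary could then be passed to the Notecard transaction call in place of the manually assembled request.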

You can download the main.py file to try and inspect the code for capturing images and running Edge Impulse neural network models on Raspberry Pi.

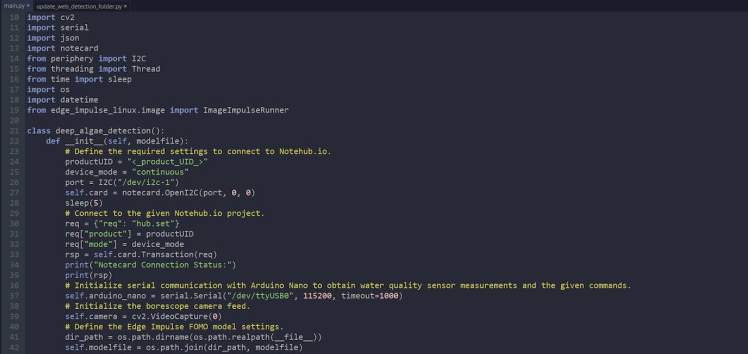

⭐ Include the required modules.

import cv2

import serial

import json

import notecard

from periphery import I2C

from threading import Thread

from time import sleep

import os

import datetime

from edge_impulse_linux.image import ImageImpulseRunner

⭐ In the __init__ function:

⭐ Define the required settings to connect to Notehub.io.

⭐ Connect to the Notehub.io project with the given product UID.

⭐ Define the Edge Impulse FOMO model settings and path (Linux ARMv7 application).

def __init__(self, modelfile):

    # Define the required settings to connect to Notehub.io.

    productUID = "<_product_UID_>"

    device_mode = "continuous"

    port = I2C("/dev/i2c-1")

    self.card = notecard.OpenI2C(port, 0, 0)

    sleep(5)

    # Connect to the given Notehub.io project.

    req = {"req": "hub.set"}

    req["product"] = productUID

    req["mode"] = device_mode

    rsp = self.card.Transaction(req)

    print("Notecard Connection Status:")

    print(rsp)

    # Initialize serial communication with Arduino Nano to obtain water quality sensor measurements and the given commands.

    self.arduino_nano = serial.Serial("/dev/ttyUSB0", 115200, timeout=1000)

    # Initialize the borescope camera feed.

    self.camera = cv2.VideoCapture(0)

    # Define the Edge Impulse FOMO model settings.

    dir_path = os.path.dirname(os.path.realpath(__file__))

    self.modelfile = os.path.join(dir_path, modelfile)

    self.detection_results = ""

⭐ In the get_transferred_data_packets function, obtain the transferred water quality sensor measurements and commands from Arduino Nano via serial communication.

def get_transferred_data_packets(self):

# Obtain the transferred sensor measurements and commands from Arduino Nano via serial communication.

if self.arduino_nano.in_waiting > 0:

data = self.arduino_nano.readline().decode("utf-8", "ignore").rstrip()

if(data.find("Run") >= 0):

print("nRunning an inference...")

self.run_inference()

if(data.find("Collect") >= 0):

print("nCapturing an image... ")

self.save_img_sample()

if(data.find("{") >= 0):

self.sensors = json.loads(data)

print("nTemperature: " + self.sensors["Temperature"])

print("pH: " + self.sensors["pH"])

print("TDS: " + self.sensors["TDS"])

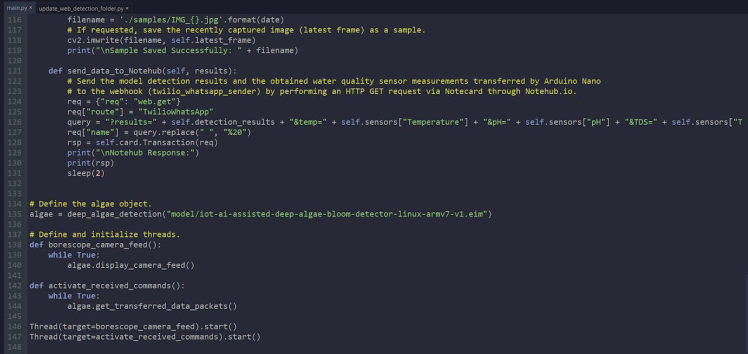

sleep(1)⭐ In the send_data_to_Notehub function, send the model detection results and water quality sensor measurements transferred by Arduino Nano to the Notehub.io project via cellular connectivity. Then, Notehub.io performs an HTTP GET request to transfer the received information to the webhook (twilio_whatsapp_sender).

def send_data_to_Notehub(self, results):

# Send the model detection results and the obtained water quality sensor measurements transferred by Arduino Nano

# to the webhook (twilio_whatsapp_sender) by performing an HTTP GET request via Notecard through Notehub.io.

req = {"req": "web.get"}

req["route"] = "TwilioWhatsApp"

query = "?results=" + self.detection_results + "&temp=" + self.sensors["Temperature"] + "&pH=" + self.sensors["pH"] + "&TDS=" + self.sensors["TDS"]

req["name"] = query.replace(" ", "%20")

rsp = self.card.Transaction(req)

print("nNotehub Response:")

print(rsp)

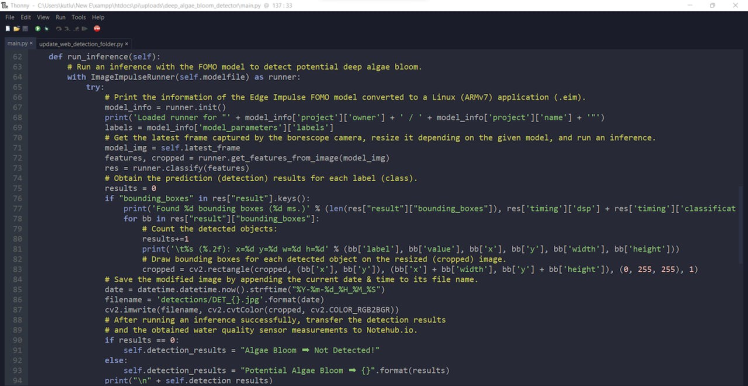

sleep(2)⭐ In the run_inference function:

⭐ Print the information of the Edge Impulse FOMO model converted to a Linux (ARMv7) application (.eim).

⭐ Get the latest frame captured by the borescope camera, resize it depending on the given model, and run an inference.

⭐ Obtain the prediction (detection) results for each label (class).

⭐ Count the detected objects on the frame.

⭐ Draw bounding boxes for each detected object on the resized (cropped) image.

⭐ Save the modified model detection image to the detections folder by appending the current date & time to its file name:

DET_2023-01-01_22_13_46.jpg

⭐ After running an inference successfully, transfer the detection results and the obtained water quality sensor measurements to Notehub.io.

⭐ Finally, stop the running inference.

def run_inference(self):

    # Run an inference with the FOMO model to detect potential deep algae bloom.

    with ImageImpulseRunner(self.modelfile) as runner:

        try:

            # Print the information of the Edge Impulse FOMO model converted to a Linux (ARMv7) application (.eim).

            model_info = runner.init()

            print('Loaded runner for "' + model_info['project']['owner'] + ' / ' + model_info['project']['name'] + '"')

            labels = model_info['model_parameters']['labels']

            # Get the latest frame captured by the borescope camera, resize it depending on the given model, and run an inference.

            model_img = self.latest_frame

            features, cropped = runner.get_features_from_image(model_img)

            res = runner.classify(features)

            # Obtain the prediction (detection) results for each label (class).

            results = 0

            if "bounding_boxes" in res["result"].keys():

                print('Found %d bounding boxes (%d ms.)' % (len(res["result"]["bounding_boxes"]), res['timing']['dsp'] + res['timing']['classification']))

                for bb in res["result"]["bounding_boxes"]:

                    # Count the detected objects:

                    results+=1

                    print('\t%s (%.2f): x=%d y=%d w=%d h=%d' % (bb['label'], bb['value'], bb['x'], bb['y'], bb['width'], bb['height']))

                    # Draw bounding boxes for each detected object on the resized (cropped) image.

                    cropped = cv2.rectangle(cropped, (bb['x'], bb['y']), (bb['x'] + bb['width'], bb['y'] + bb['height']), (0, 255, 255), 1)

            # Save the modified image by appending the current date & time to its file name.

            date = datetime.datetime.now().strftime("%Y-%m-%d_%H_%M_%S")

            filename = 'detections/DET_{}.jpg'.format(date)

            cv2.imwrite(filename, cv2.cvtColor(cropped, cv2.COLOR_RGB2BGR))

            # After running an inference successfully, transfer the detection results

            # and the obtained water quality sensor measurements to Notehub.io.

            if results == 0:

                self.detection_results = "Algae Bloom ➡ Not Detected!"

            else:

                self.detection_results = "Potential Algae Bloom ➡ {}".format(results)

            print("\n" + self.detection_results)

            self.send_data_to_Notehub(self.detection_results)

        # Stop the running inference.

        finally:

            if(runner):

                runner.stop()

#️⃣ Since displaying a real-time video stream generated by the borescope camera, communicating with Arduino Nano to obtain water quality data and commands, and running the Edge Impulse object detection model cannot be executed in a single loop, I utilized the Python Thread class to run simultaneous processes (functions).

def borescope_camera_feed():

    while True:

        algae.display_camera_feed()

def activate_received_commands():

    while True:

        algae.get_transferred_data_packets()

Thread(target=borescope_camera_feed).start()

Thread(target=activate_received_commands).start()

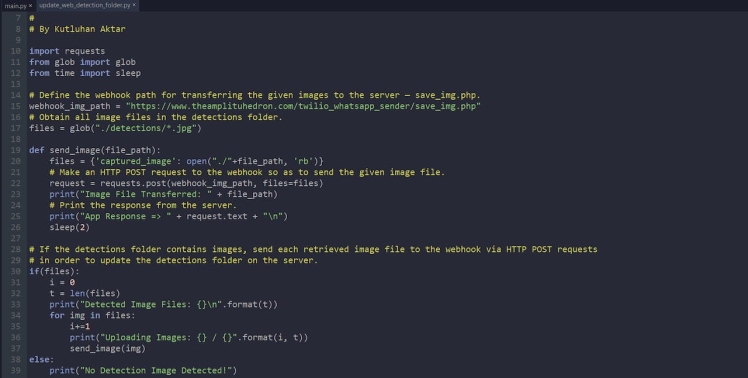
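The two threads in main.py loop forever, which is fine for a device that runs until it is powered off. If a clean shutdown is ever needed, one possible variation is to share a stop flag between the workers. The sketch below is not taken from main.py: the `run_workers` helper, the `run_for` parameter, and the placeholder tasks are all hypothetical, and the tasks stand in for display_camera_feed and get_transferred_data_packets.

```python
import threading
import time


def run_workers(tasks, run_for=0.1):
    """Run each task repeatedly in its own thread until the time is up."""
    stop = threading.Event()
    counts = {name: 0 for name in tasks}

    def loop(name, task):
        # Each worker only updates its own counter, so no lock is needed.
        while not stop.is_set():
            task()
            counts[name] += 1
            time.sleep(0.01)

    threads = [threading.Thread(target=loop, args=(name, task), daemon=True)
               for name, task in tasks.items()]
    for thread in threads:
        thread.start()
    time.sleep(run_for)
    stop.set()
    for thread in threads:
        thread.join()
    return counts


if __name__ == "__main__":
    # Placeholder tasks; on the device these would be the two class methods.
    print(run_workers({"camera": lambda: None, "serial": lambda: None}))
```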

Since I was not able to send a model detection image to the webhook via Notecard after running an inference due to cellular connectivity data limitations, I decided to transfer all model detection images in the detections folder to the webhook separately via Wi-Fi connectivity.

You can download the update_web_detection_folder.py file to try and inspect the code for transferring images to a webhook on Raspberry Pi.

⭐ Include the required modules.

import requests

from glob import glob

from time import sleep

⭐ Define the webhook path for transferring the given images to the server:

/twilio_whatsapp_sender/save_img.php

webhook_img_path = "https://www.theamplituhedron.com/twilio_whatsapp_sender/save_img.php"

⭐ Obtain all image files in the detections folder.

files = glob("./detections/*.jpg")

⭐ In the send_image function, make an HTTP POST request to the webhook so as to send the given image file.

⭐ Then, print the response from the server.

def send_image(file_path):

    files = {'captured_image': open("./"+file_path, 'rb')}

    # Make an HTTP POST request to the webhook so as to send the given image file.

    request = requests.post(webhook_img_path, files=files)

    print("Image File Transferred: " + file_path)

    # Print the response from the server.

    print("App Response => " + request.text + "\n")

    sleep(2)

⭐ If the detections folder contains images, send each retrieved image file to the webhook via HTTP POST requests, one after another, in order to update the detections folder on the server.

if(files):

    i = 0

    t = len(files)

    print("Detected Image Files: {}\n".format(t))

    for img in files:

        i+=1

        print("Uploading Images: {} / {}".format(i, t))

        send_image(img)

else:

    print("No Detection Image Detected!")

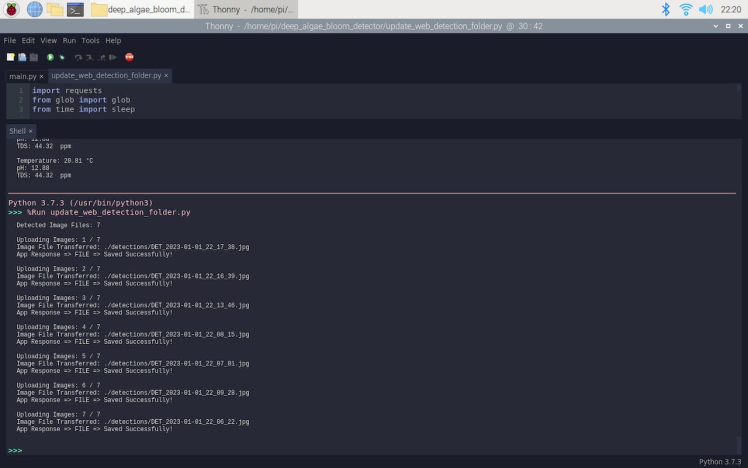

After running the update_web_detection_folder.py file:

- Raspberry Pi transfers each model detection image in the detections folder to the webhook via HTTP POST requests, one after another, to update the detections folder on the server.

- Also, Raspberry Pi prints the total image file number, the retrieved image file paths, and the server response on the shell for debugging.
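One detail worth considering for the upload script is closing each image file after its request. The sketch below shows one way to do that with a with-block; it is a variation rather than the code in update_web_detection_folder.py. The `upload_images` function takes the posting function as a parameter (a hypothetical design so that the loop can be tried without a server), and `FakeResponse` and `fake_post` exist only for the dry run.

```python
import os
import tempfile


def upload_images(paths, post, url):
    """Send each image with post(url, files=...) and return how many were sent."""
    sent = 0
    for path in sorted(paths):
        # The with-block closes the file even if the request raises an error.
        with open(path, "rb") as image:
            response = post(url, files={"captured_image": image})
        print("Image File Transferred: " + path)
        print("App Response => " + response.text)
        sent += 1
    return sent


class FakeResponse:
    """Stand-in for a server response, used only for the dry run below."""
    text = "ok"


def fake_post(url, files):
    """Hypothetical replacement for requests.post that sends nothing."""
    files["captured_image"].read()
    return FakeResponse()


if __name__ == "__main__":
    folder = tempfile.mkdtemp()
    sample = os.path.join(folder, "DET_test.jpg")
    with open(sample, "wb") as handle:
        handle.write(b"not a real image")
    print(upload_images([sample], fake_post, "https://example.invalid/save_img.php"))
```

With the requests library installed, passing `requests.post` and the webhook path in place of `fake_post` and the placeholder URL would perform the real upload.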

My Edge Impulse object detection (FOMO) model scans a captured image and predicts the probability of each trained label in order to recognize objects in it. The prediction result (score) represents the model's "confidence" that the detected object corresponds to the given label (class) [0], as shown in Step 7:

- deep_algae

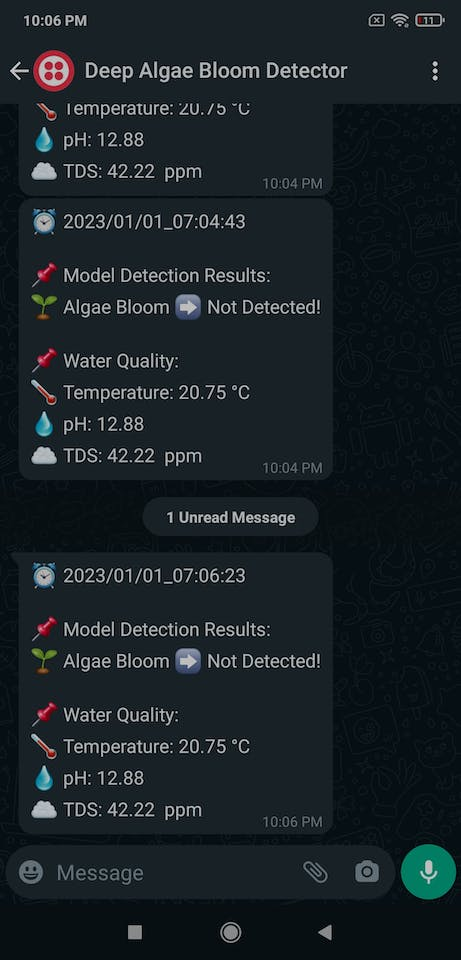

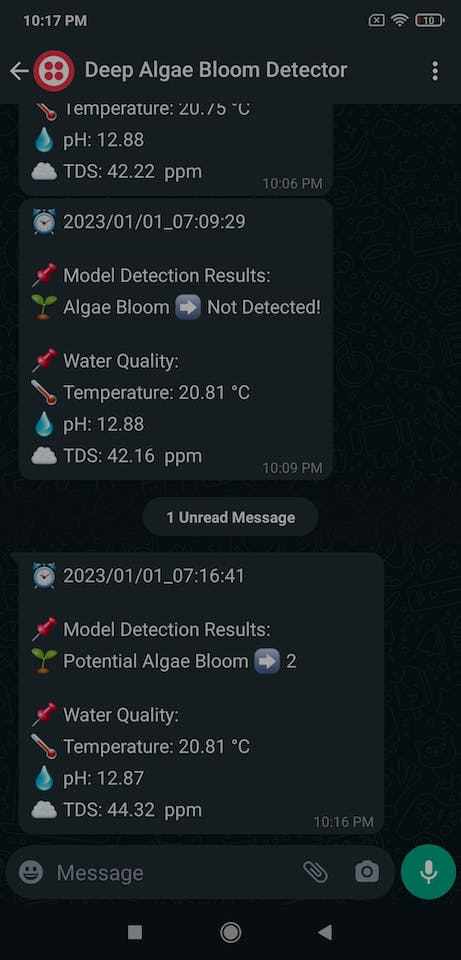

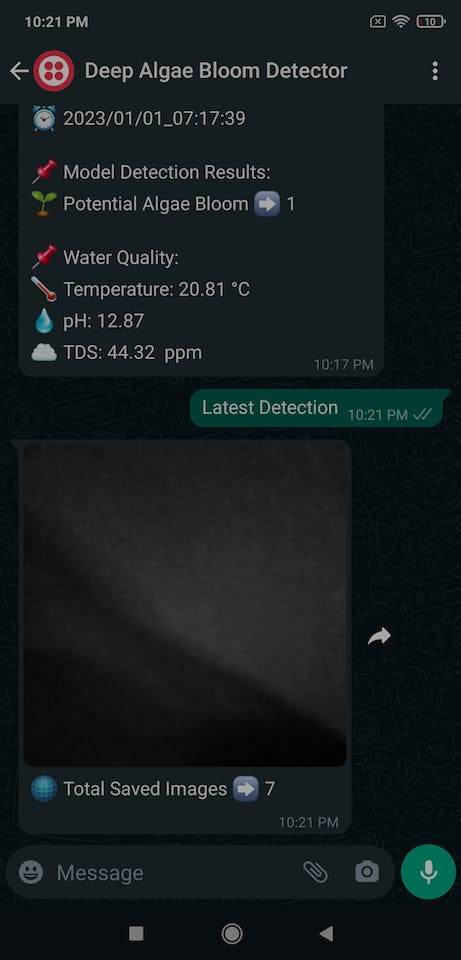

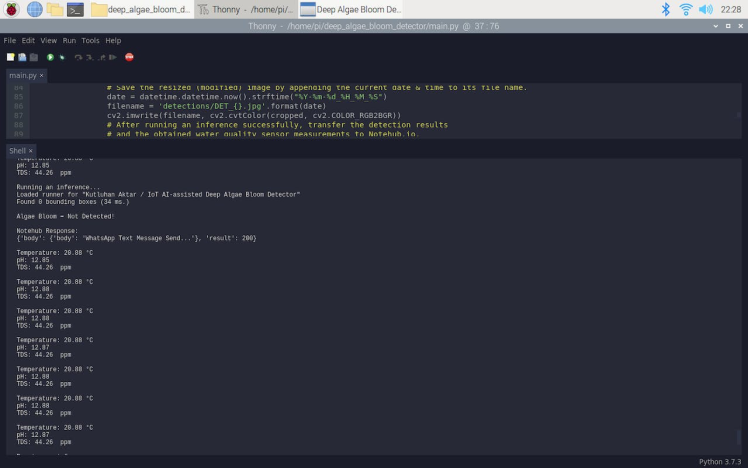

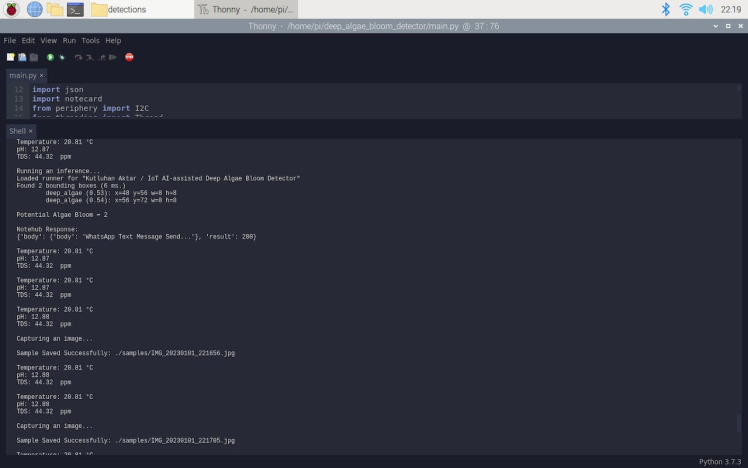

After executing the main.py file on Raspberry Pi:

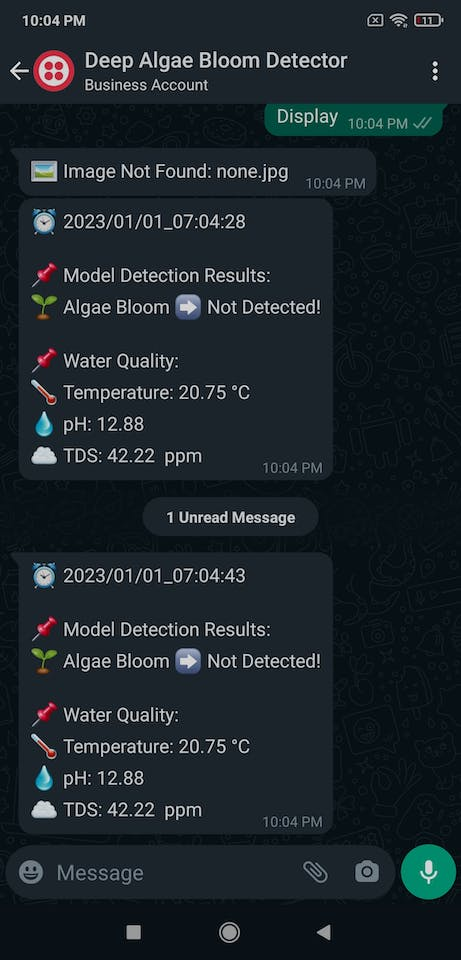

- When Arduino Nano transfers the collected water quality data via serial communication, the device blinks the RGB LED as magenta and prints the received water quality information on the shell.

- If the user presses the control button (R) to send the Run Inference! command, the device blinks the RGB LED as green.

- Then, the device gets the latest frame captured by the borescope camera and runs an inference with the Edge Impulse object detection model.

- After running an inference successfully, the device counts the detected objects on the given frame. Then, it modifies the frame by adding bounding boxes for each detected object to emphasize potential deep algae bloom.

- After obtaining the model detection results, the device transfers the detection results and the water quality data sent by Arduino Nano to Notehub.io via Notecard over cellular connectivity.

- As explained in Step 4, when the Notehub.io project receives the detection results with the collected water quality information, it makes an HTTP GET request to transfer the incoming data to the webhook (/twilio_whatsapp_sender/).

- As explained in Step 3, when the webhook receives a data packet from the Notehub.io project, it adds the current date & time and sends the model detection results with the collected water quality data to the verified phone over WhatsApp via Twilio's WhatsApp API.

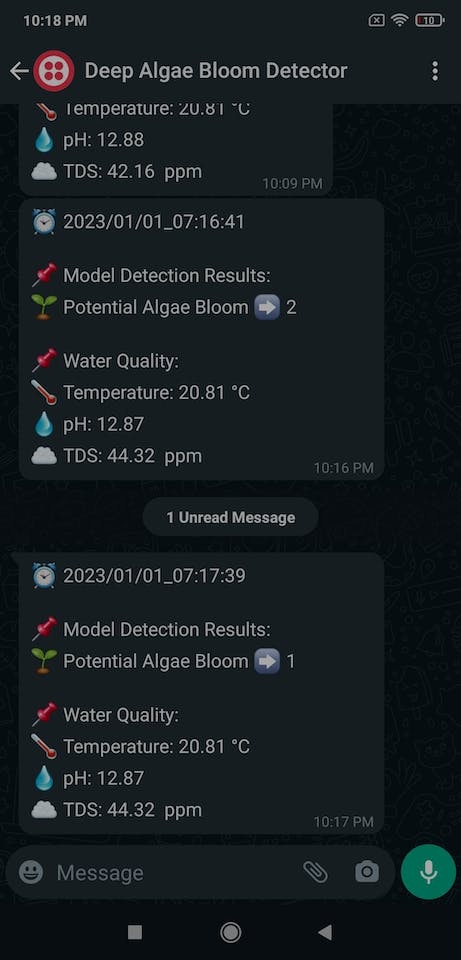

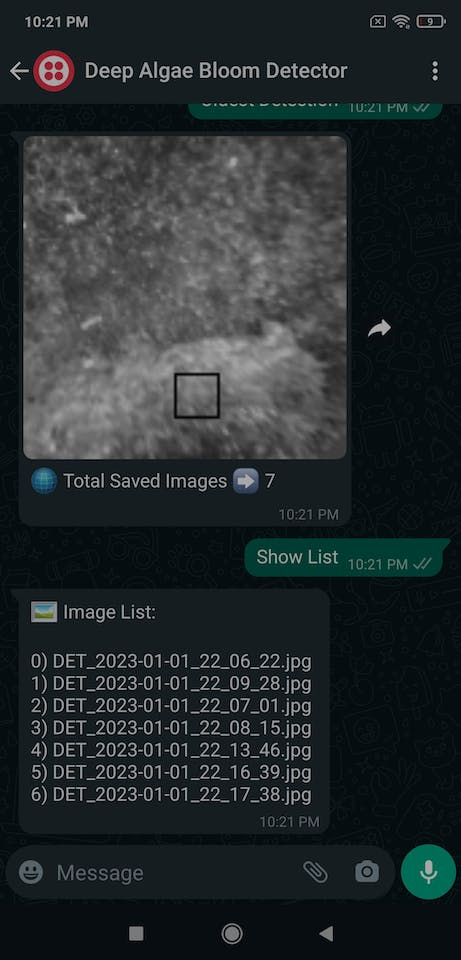

- When the webhook receives a message from the verified phone over WhatsApp, it checks whether the incoming message includes one of the registered commands regarding the model detection images saved in the detections folder on the server:

- Latest Detection

- Oldest Detection

- Show List

- Display:<IMG_NUM>

- If the incoming message does not include a registered command, the webhook sends the registered command list to the user as the response.

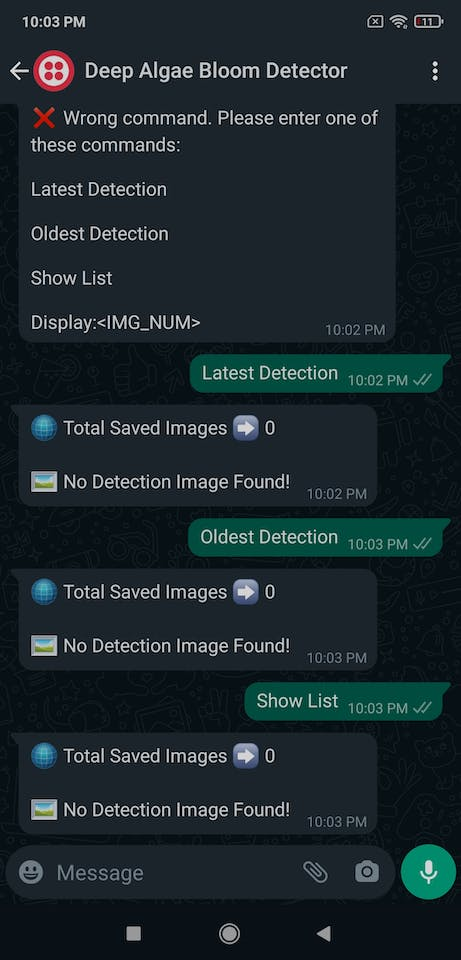

- If the webhook receives the Latest Detection command, it sends the latest model detection image in the detections folder with the total image number on the server to the verified phone.

- If there is no image in the detections folder, the webhook informs the user via a notification (text) message.

- If the webhook receives the Oldest Detection command, it sends the oldest model detection image in the detections folder with the total image number on the server to the verified phone.

- If there is no image in the detections folder, the webhook informs the user via a notification (text) message.

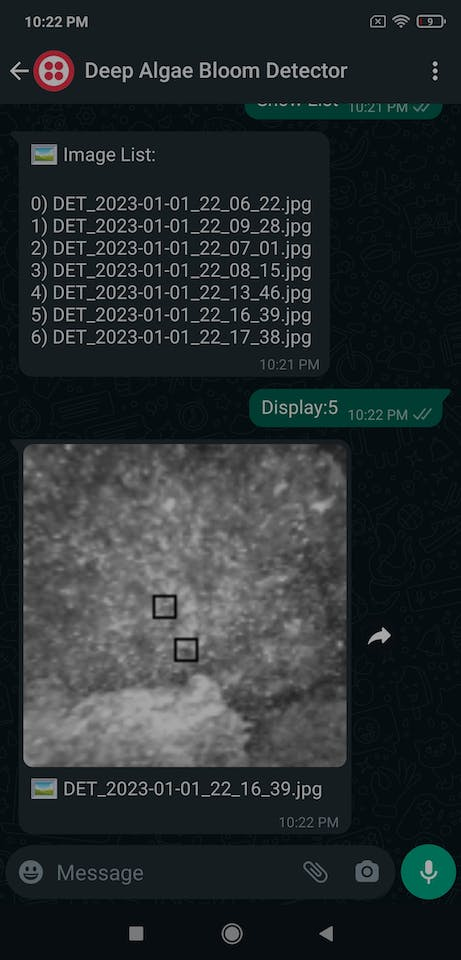

- If the webhook receives the Show List command, it sends all image file names in the detections folder on the server as a list to the verified phone.

- If there is no image in the detections folder, the webhook informs the user via a notification (text) message.

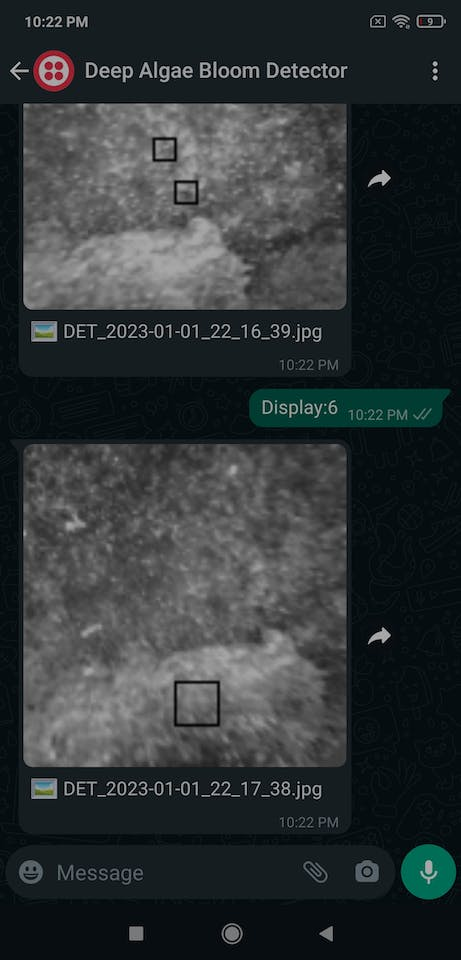

- If the webhook receives the Display:<IMG_NUM> command, it retrieves the selected image if it exists in the given image file name list and sends the retrieved image with its file name to the verified phone.

Display:5

- Otherwise, the webhook informs the user via a notification (text) message.

- Also, the device prints notifications, sensor measurements, Notecard status, and the server response on the shell for debugging.
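The webhook's own code is not reproduced in this section, but the command handling described above can be sketched in Python to make the branching explicit. Everything here is illustrative: the function name `handle_command`, the reply texts, and the assumption that IMG_NUM is a 1-based position in the file list are mine, and the real webhook sends images through Twilio rather than returning strings.

```python
def handle_command(message, files):
    """Return the reply text for one incoming WhatsApp message.

    files is the list of detection image file names, oldest first.
    """
    commands = "Latest Detection | Oldest Detection | Show List | Display:<IMG_NUM>"
    text = message.strip()
    if text in ("Latest Detection", "Oldest Detection", "Show List") and not files:
        return "No detection image found."
    if text == "Latest Detection":
        return "{} (total: {})".format(files[-1], len(files))
    if text == "Oldest Detection":
        return "{} (total: {})".format(files[0], len(files))
    if text == "Show List":
        return "\n".join(files)
    if text.startswith("Display:"):
        number = text[len("Display:"):]
        # Assumption: IMG_NUM is a 1-based position in the list.
        if number.isdigit() and 1 <= int(number) <= len(files):
            return files[int(number) - 1]
        return "Image not found."
    return "Registered commands: " + commands


if __name__ == "__main__":
    names = ["DET_2023-01-01_22_13_46.jpg", "DET_2023-01-02_09_00_00.jpg"]
    print(handle_command("Latest Detection", names))
    print(handle_command("Display:5", names))
```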

As far as my experiments go, the device detects potential deep algal bloom precisely, sends data packets to the webhook via Notehub.io, and informs the user via WhatsApp faultlessly :)

By applying object detection models trained on numerous algae bloom images to detect potential deep algal bloom, we can:

- prevent algal toxins that can harm marine life and the ecosystem from dispersing,

- avert a harmful algal bloom (HAB) from entrenching itself in a body of water,

- mitigate the harmful effects of excessive algae growth,

- protect endangered species.

[1] Algal bloom, Wikipedia The Free Encyclopedia, https://en.wikipedia.org/wiki/Algal_bloom

[2] Algal Blooms, The National Institute of Environmental Health Sciences (NIEHS), https://www.niehs.nih.gov/health/topics/agents/algal-blooms/index.cfm


Credits

kutluhan_aktar

AI & Full-Stack Developer | @EdgeImpulse | @Particle | Maker | Independent Researcher
