Revolutionizing Practices: Compound Robot

About the project

A Case Study in Automated Fruit Harvesting and Transport with Compound Robot

Project info

Difficulty: Moderate

Platforms: Raspberry Pi, ROS, OpenCV, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

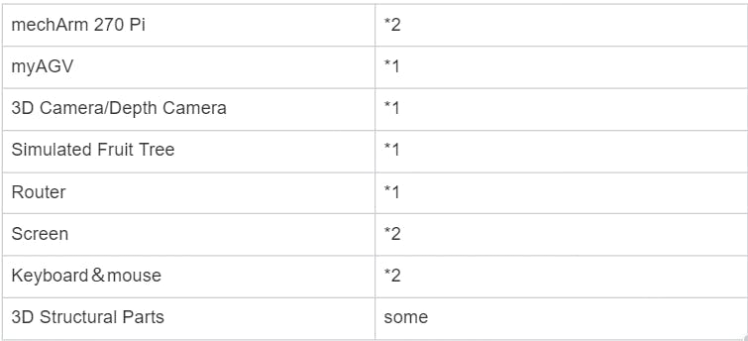


Story

Robotics is one of the most active fields in technology, and it is changing industries around the world. To meet the growing demand for skilled professionals in this area, Elephant Robotics has developed a robotics education solution for colleges and universities. It combines a simulated automated fruit picker with a compound robot that automates fruit sorting and delivery. Together they give students hands-on experience in one of the most sought-after areas of technology.

In a previous article, we discussed possible applications of fruit-picking and sorting robots. This time, we look at a scenario in which a compound robot is used to pick and transport fruit.

Let us begin with a few questions:

● In which scenarios can a compound robot be applied?

● What benefits does this scenario offer, and what can we learn from it?

● What distinguishes this scenario from others?

Compound Robot: Automation of Fruit Sorting and Delivery

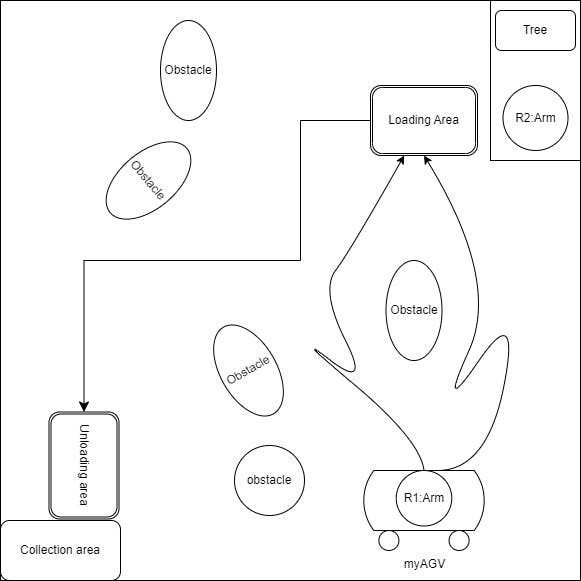

The figure shows the application scenario of the Compound Robot kit. The setup is made up of several modules, each serving a distinct function. The diagram labels them as follows:

● myAGV+R1 Arm: A Compound Robot that features the mechArm 270-Pi mounted on the myAGV.

● Obstacle: An impediment that obstructs the myAGV's forward motion.

● Tree: A simulated fruit tree that bears fruits.

● R2 Arm: A six-axis robotic arm, the mechArm 270 Pi.

● Loading Area: A location where the Compound Robot stops and waits for the R2 Arm to load the fruits.

● Unloading Area: A location where the Compound Robot arrives and unloads the fruits.

● Collection Area: An area where the loaded fruits are unloaded for subsequent processing.

The Compound Robot commences its operation from the initial position and undertakes autonomous navigation to circumvent obstacles en route to the Loading Area. After arriving at this location, the R2 Arm retrieves the fruits from the tree and places them on the Compound Robot. The Compound Robot then proceeds to the next destination, the Unloading Area, whereupon the R1 Arm unloads the loaded fruits into the Collection Area.

Product

Robotic Arm - mechArm 270 Pi

The mechArm 270 Pi is a compact six-axis robotic arm. It is powered by a Raspberry Pi, with an ESP32 assisting in control. Its centrally symmetrical structure mimics industrial arm design. The arm weighs only 1 kg, has a payload of 250 g, and has a working radius of 270 mm. Despite its small size, it is capable and easy to use, and it can work safely alongside humans.

The myAGV is a mobile robot chassis that uses a Raspberry Pi 4B as its main control board. It has competition-grade mecanum wheels and a fully enclosed design with a metal frame. Running SLAM algorithms, the myAGV can perform tasks such as LiDAR mapping, autonomous navigation, and dynamic obstacle avoidance.

Compared with earlier models, the Raspberry Pi 4 Model B has a faster processor, more memory, and faster networking. It offers better connectivity for external devices, with two USB 3.0 ports, two USB 2.0 ports, Gigabit Ethernet, and dual-band 802.11ac wireless networking. The Raspberry Pi community is also one of the leading hardware development communities in the world, where developers share many use cases and common solutions.

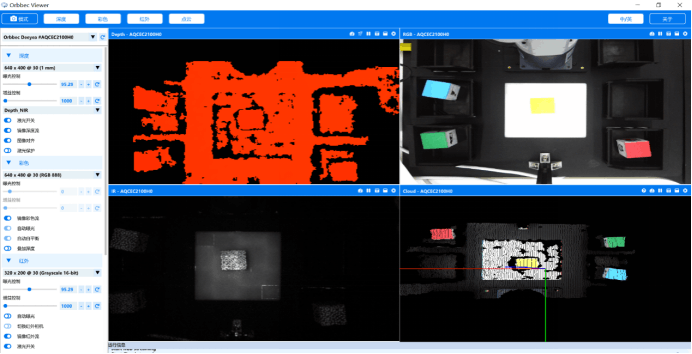

An ordinary 2D camera records only color and brightness, so it cannot tell the arm how far away a fruit is. In our scenario, we therefore use a depth camera. A depth camera captures the color and brightness of a scene and also measures the distance to the objects in it. Depth cameras usually measure depth with infrared or other active light sources.

The depth camera can capture various types of information such as depth images, color images, infrared images, and point cloud images.

The adaptive gripper is an end effector used to grasp, grip, or hold objects. It consists of two movable claws that can be controlled by the robotic arm's control system to adjust their opening and closing degree and speed.

Project functions

To begin with, we need to prepare the development environment. The project is written in Python, so the following environment must be installed before you can use and study it:

```text
# for myAGV
ROS1 Melodic (Gmapping, AMCL, DWA)

# for the robotic arm (mechArm 270)
numpy==1.24.3
opencv-contrib-python==4.6.0.66
openni==2.3.0
pymycobot==3.1.2
PyQt5==5.15.9
PyQt5-Qt5==5.15.2
PyQt5-sip==12.12.1
pyserial==3.5
```

We divide the functional analysis into three parts:

- The first part covers the overall project architecture and logic.
- The second part analyzes the functions of myAGV.
- The third part focuses on controlling the robotic arm.

The second and third parts are subdivided into the following functional points.

For myAGV, the functional points are as follows:

● Mapping and navigation

● Static and dynamic obstacle avoidance

For the robotic arm, the functional points are:

● Machine vision recognition algorithm

● Robotic arm control and path planning

Let's start with the analysis of the process of the project.

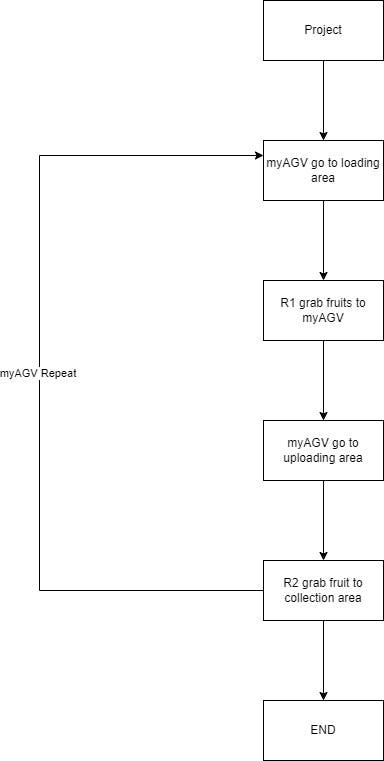

Process of project

The following flowchart shows the entire project process. Read it together with the structural diagram shown earlier.

Project flow: myAGV travels to the "loading area" and waits for the R2 Arm to pick fruits from the tree and place them on myAGV. myAGV then carries the fruits to the "unloading area," where the R1 Arm unloads them into the "collection area." The process is cyclic: after unloading, myAGV returns to the "loading area" to collect more fruits, and the cycle repeats.

Let us now look at the problems that must be solved along the way. The most obvious one is how the robotic arm knows that myAGV has reached the designated area and that it should start picking. This requires communication between myAGV and the robotic arm.

Communication:

First, consider the products involved. The mechArm is a fixed six-axis robotic arm controlled by a Raspberry Pi mainboard. myAGV is a mobile robot, also controlled by a Raspberry Pi mainboard. Since both are networked Linux computers, the most convenient way for them to communicate is over TCP/IP. In Python, this is commonly done with the built-in socket module, which works on a simple client-server principle. One side runs a server and the other connects to it as a client, after which the two can exchange messages, much like an instant-messaging app.

Code:

```python
import socket
import threading

# Excerpt: only the constructors are shown; run() and message handling are omitted.


class TcpServer(threading.Thread):
    def __init__(self, server_address) -> None:
        threading.Thread.__init__(self)
        # IPv4 TCP socket that accepts a single client connection
        self.s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.s.bind(server_address)
        print("server Binding succeeded!")
        self.s.listen(1)
        self.connected_obj = None
        # Fruit labels exchanged between the two devices
        self.good_fruit_str = "apple"
        self.bad_fruit_str = "orange"
        self.invalid_fruit_str = "none"
        self.target = self.invalid_fruit_str
        self.target_copy = self.target
        self.start_move = False


class TcpClient(threading.Thread):
    def __init__(self, host, port, max_fruit=8, recv_interval=0.1, recv_timeout=30):
        threading.Thread.__init__(self)
        self.good_fruit_str = "apple"
        self.bad_fruit_str = "orange"
        self.invalid_fruit_str = "none"
        self.host = host
        self.port = port
        # Initializing the TCP socket object:
        # AF_INET specifies the IPv4 address family,
        # SOCK_STREAM designates the TCP stream transmission protocol
        self.client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.client_socket.connect((self.host, self.port))
        self.current_extracted = 0
        self.max_fruit = max_fruit
        self.recv_interval = recv_interval
        self.recv_timeout = recv_timeout
        self.response_copy = self.invalid_fruit_str
        self.action_ready = True
```

Socket communication solves the coordination problem between myAGV and the robotic arm.

Whenever myAGV reaches the designated location, it sends a message to the robotic arm saying "I am here." Once the robotic arm receives this message, it proceeds with the fruit-picking process.
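The "I am here" handshake can be sketched with Python's standard socket module. This is a minimal, self-contained illustration, not the project's actual protocol. The message strings ARRIVED and DONE, the helper function names, and the use of the local loopback address are all assumptions made for the example.

```python
import socket
import threading

ARRIVED = b"ARRIVED"  # hypothetical "I am here" message sent by myAGV
DONE = b"DONE"        # hypothetical reply sent by the arm once loading is finished


def arm_server(server_socket, received):
    """Arm side: wait for myAGV, run the picking step, then reply."""
    conn, _ = server_socket.accept()
    with conn:
        msg = conn.recv(1024)  # messages are tiny, so one recv() suffices here
        received.append(msg)
        if msg == ARRIVED:
            # The real system would start the fruit-picking motion at this point.
            conn.sendall(DONE)


def agv_notify_arrival(address):
    """myAGV side: announce arrival and block until the arm answers."""
    with socket.create_connection(address, timeout=5) as client:
        client.sendall(ARRIVED)
        return client.recv(1024)


def run_demo():
    """Run both sides on the local machine and return the arm's reply."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    server.settimeout(5)           # do not wait forever if the client never connects
    received = []
    arm = threading.Thread(target=arm_server, args=(server, received))
    arm.start()
    try:
        reply = agv_notify_arrival(server.getsockname())
    finally:
        arm.join()
        server.close()
    return reply


if __name__ == "__main__":
    print(run_demo())
```

In the real setup, the server would bind to the arm's Raspberry Pi address on the shared network. TCP delivers a byte stream rather than discrete messages, so a real protocol should also delimit messages, for example by terminating each one with a newline.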

myAGV functions

Map-building and Navigation:

Many modern cars offer navigation and driver-assistance features, and driverless operation is being tested in real-world conditions, although the technology is not yet mature. So how do we achieve autonomous navigation and obstacle avoidance on the Compound Robot?

ROS (Robot Operating System) is an open-source framework for robot software. Its rich libraries and tools, combined with a flexible modular architecture, make robot application development more efficient and reliable. Its functional packages include Gmapping, AMCL, and DWA, which we use in this project.

In our daily lives, we use navigation by opening the map, entering the destination, and selecting the route to reach the destination. To achieve navigation in a specific area, we must first have a "map" of that area, which we call "map-building."

Outdoor navigation systems typically rely on GPS (Global Positioning System) together with pre-surveyed maps. Indoors, GPS is unavailable or not precise enough, so the robot must build its own map from its onboard sensors. In our scenario, this is done by a map-building algorithm called Gmapping.

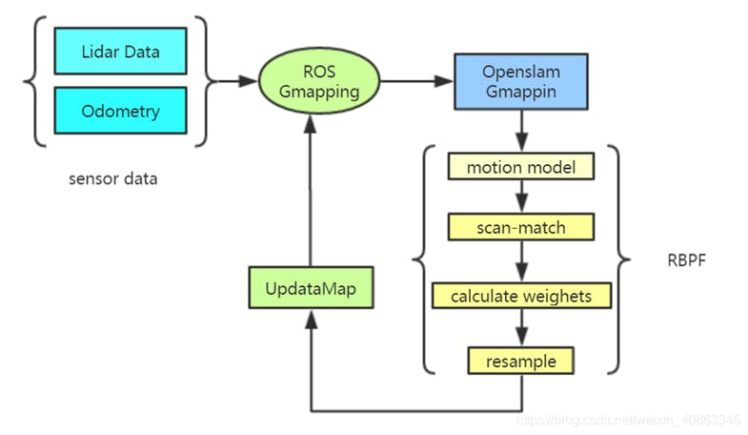

Gmapping is a SLAM (Simultaneous Localization and Mapping) algorithm for building environment maps on robots. It uses LiDAR (laser radar) data and odometry to construct a map of the environment in real time while the robot is moving. At the same time, it estimates the robot's position within that map.


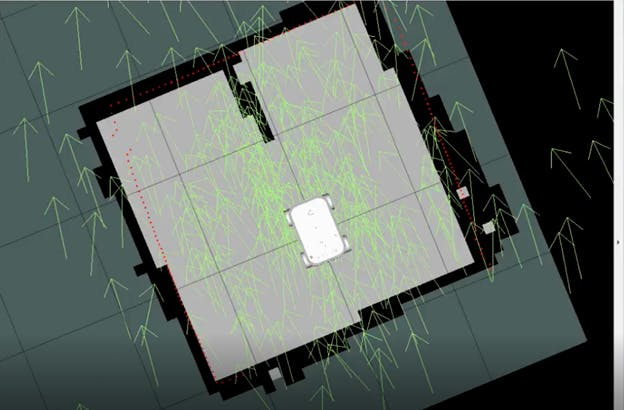

The figure shows myAGV building the map. It uses LiDAR data to estimate its current position and scan its surroundings, marking obstacles in the map as it goes. Once the robot has driven a closed loop through the area, the map of our scenario is complete.
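The idea of "marking obstacles in the map" can be illustrated with an occupancy grid updated in log-odds form. This is a generic, simplified sketch, not Gmapping's implementation, which additionally keeps one map per particle and corrects the robot pose by scan matching. The log-odds increments, the grid layout, and the helper names are assumptions chosen for illustration.

```python
import math

L_OCC = 0.85   # log-odds added to the cell where a beam ends (assumed value)
L_FREE = -0.4  # log-odds added to cells a beam passes through (assumed value)


def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(grid, robot_cell, hit_cell):
    """Mark cells along one laser beam as free and its end cell as occupied."""
    ray = bresenham(*robot_cell, *hit_cell)
    for x, y in ray[:-1]:
        grid[y][x] += L_FREE
    hx, hy = ray[-1]
    grid[hy][hx] += L_OCC


def probability(log_odds):
    """Convert a cell's log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Repeated scans push cells that keep being hit toward probability 1 and cells that beams keep passing through toward 0. This is why obstacles become clearer as the robot keeps driving.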

With the map in place, the next step is navigation. Navigation requires localization: the robot must know where it is on the map. Localization is handled by the AMCL (Adaptive Monte Carlo Localization) algorithm provided by ROS.

The AMCL algorithm is a probabilistic robot localization algorithm that uses the Monte Carlo method and Bayesian filtering theory to process the sensor data carried by the robot, estimate the robot's position in the environment in real-time, and continuously update the probability distribution of the robot's position.

The AMCL algorithm achieves adaptive robot localization through the following steps:

1. Particle set initialization: First, generate a set of particles around the robot's initial position, representing the possible positions of the robot.

2. Motion model update: Based on the robot's motion state and control information, update the position and state information of each particle in the particle set.

3. Measurement model update: Based on the robot's sensor data, calculate the weight of each particle. The weight measures how well the actual sensor readings match the readings expected if the robot were at that particle's position. Normalize the weights so that they form a probability distribution.

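4. Resampling: Resample the particle set according to the particle weights, duplicating high-weight particles and discarding low-weight ones. This concentrates the particles, and therefore the computation, on the most likely positions and improves localization accuracy.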

5. Robot localization: Determine the robot's position in the environment based on the probability distribution of the particle set and update the robot's state estimation information.
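The five steps above can be illustrated with a one-dimensional Monte Carlo localization sketch. In it, a robot driving along a corridor measures its distance to a wall at a known position. This is plain Monte Carlo localization: the "adaptive" part of AMCL, which varies the number of particles (KLD sampling), is left out. The corridor, the noise levels, and all function names are assumptions for illustration.

```python
import math
import random

WALL = 10.0         # known wall position on the 1-D map (assumed)
MOTION_NOISE = 0.1  # std. dev. of the odometry error per step (assumed)
SENSOR_NOISE = 0.2  # std. dev. of the range-sensor error (assumed)


def init_particles(x0, spread, n, rng):
    # 1. Particle set initialization around the initial position estimate
    return [rng.gauss(x0, spread) for _ in range(n)]


def motion_update(particles, dx, rng):
    # 2. Motion model update: move every particle by the odometry step plus noise
    return [p + dx + rng.gauss(0.0, MOTION_NOISE) for p in particles]


def measurement_update(particles, measured_range):
    # 3. Measurement model update: weight = likelihood of the reading at each particle
    weights = []
    for p in particles:
        expected_range = WALL - p
        err = measured_range - expected_range
        weights.append(math.exp(-0.5 * (err / SENSOR_NOISE) ** 2))
    total = sum(weights)
    return [w / total for w in weights]  # normalize into a probability distribution


def resample(particles, weights, rng):
    # 4. Resampling: draw a new set of particles, favoring high-weight ones
    return rng.choices(particles, weights=weights, k=len(particles))


def estimate(particles):
    # 5. Robot localization: report the mean of the particle set
    return sum(particles) / len(particles)


def localize(true_start, steps, dx, n=500, seed=0):
    """Simulate a robot driving toward the wall and track it with the filter."""
    rng = random.Random(seed)
    particles = init_particles(true_start, 1.0, n, rng)
    true_x = true_start
    for _ in range(steps):
        true_x += dx
        particles = motion_update(particles, dx, rng)
        reading = (WALL - true_x) + rng.gauss(0.0, SENSOR_NOISE)
        weights = measurement_update(particles, reading)
        particles = resample(particles, weights, rng)
    return true_x, estimate(particles)
```

For example, localize(2.0, 10, 0.5) drives the simulated robot from 2.0 to 7.0 and returns the true position together with the filter's estimate. The two should be close.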

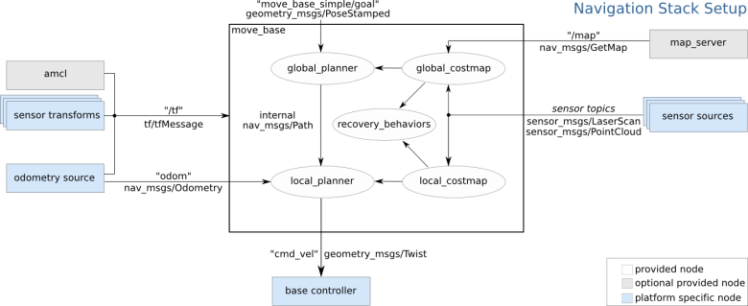

When we use a navigation app, we enter a destination and the software suggests routes. Similarly, in this scenario, the planner evaluates possible paths and selects the best one. This involves two levels of planning:

- Global path planning plans a route across the whole map.
- Local path planning follows that route while reacting to nearby obstacles.

The ROS navigation stack provides a framework into which a global planner (global_planner) and a local planner (local_planner) are plugged. Some data, such as the path produced by the global planner, is passed directly to the local planner inside the framework rather than over a topic. In short, the ROS navigation module achieves autonomous navigation of robots through interchangeable planners.

The figure above shows that, in addition to the planners, the navigation module includes a costmap. The costmap is a grid map that records static obstacles, that is, which areas can be traversed and which cannot. Dynamic obstacles are published through sensor topics, and the costmap is updated in real time so that the robot can avoid them.

Besides the map, the navigation module needs localization information, which is provided by the amcl module. To use a different localization module, simply publish the same topic. The module also needs tf information, which describes the transformations between coordinate frames such as the map, the robot base, and its sensors, a common requirement in robotics. Finally, odometry provides the robot's pose and velocity, which the local planner uses to plan its path.
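The way a costmap turns obstacle cells into a safety margin can be sketched as follows. The cost constants (lethal 254, inscribed 253) and the exponential decay follow the inflation layer of ROS costmap_2d. The grid, the radii, and the scaling factor here are assumed values, and the brute-force loop is only an illustration of the idea, not the ROS implementation.

```python
import math

LETHAL = 254     # the cell itself contains an obstacle
INSCRIBED = 253  # robot centre here means the footprint touches an obstacle
FREE = 0


def inflate(obstacles, width, height, resolution,
            inscribed_radius, inflation_radius, cost_scaling_factor):
    """Return a height x width cost grid built from a list of obstacle cells.

    obstacles: list of (x, y) cell indices; resolution: metres per cell;
    radii in metres.
    """
    grid = [[FREE] * width for _ in range(height)]
    if not obstacles:
        return grid
    for y in range(height):
        for x in range(width):
            # Distance in metres from this cell to the nearest obstacle cell
            d = min(math.hypot(x - ox, y - oy) for ox, oy in obstacles) * resolution
            if d == 0:
                cost = LETHAL
            elif d <= inscribed_radius:
                cost = INSCRIBED
            elif d <= inflation_radius:
                # Cost decays exponentially with distance beyond the inscribed radius
                cost = int((INSCRIBED - 1) *
                           math.exp(-cost_scaling_factor * (d - inscribed_radius)))
            else:
                cost = FREE
            grid[y][x] = cost
    return grid
```

A planner that prefers low-cost cells will then keep the robot's centre away from obstacles, even though the cells next to them are technically traversable.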

Obstacle avoidance is handled mainly by the DWA (Dynamic Window Approach) algorithm, which lets the robot plan and follow paths quickly and safely in complex, dynamic environments. The DWA algorithm works through the following steps:

● Generating candidate velocities: Based on the robot's current velocity and direction, a set of candidate velocities and directions are generated according to the robot's dynamic constraints and environmental conditions.

● Evaluating trajectories: For each candidate velocity, the robot's trajectory is predicted for a certain period of time, and the trajectory's safety and reachability are evaluated.

● Selecting the best velocity: Based on the evaluation results of the trajectory, the best velocity and direction that meet the safety and reachability requirements are selected and applied to the robot's control system.
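The three DWA steps can be condensed into a small sketch for a differential-drive robot. It is a simplified illustration, not the ROS dwa_local_planner. The velocity and acceleration limits, the cost weights, the robot radius, and the point-obstacle model are all assumptions. myAGV's mecanum base can also move sideways, which this sketch ignores.

```python
import math

# Assumed robot limits and tuning values (not myAGV's real parameters)
MAX_V, MAX_W = 0.5, 1.0  # max linear (m/s) and angular (rad/s) velocity
ACC_V, ACC_W = 0.5, 2.0  # max linear (m/s^2) and angular (rad/s^2) acceleration
DT, HORIZON = 0.1, 2.0   # control period and prediction horizon (s)
ROBOT_RADIUS = 0.2       # collision radius around point obstacles (m)


def simulate(x, y, th, v, w):
    """Predict the poses reached by holding (v, w) constant over the horizon."""
    traj = []
    for _ in range(int(round(HORIZON / DT))):
        th += w * DT
        x += v * math.cos(th) * DT
        y += v * math.sin(th) * DT
        traj.append((x, y, th))
    return traj


def dwa_step(state, v_now, w_now, goal, obstacles, samples=11):
    """Return the best (v, w) inside the dynamic window, or (0, 0) to stop."""
    x, y, th = state
    # 1. Candidate velocities: only those reachable within one control period
    v_lo, v_hi = max(0.0, v_now - ACC_V * DT), min(MAX_V, v_now + ACC_V * DT)
    w_lo, w_hi = max(-MAX_W, w_now - ACC_W * DT), min(MAX_W, w_now + ACC_W * DT)
    best, best_score = (0.0, 0.0), -math.inf  # stop if nothing is admissible
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            traj = simulate(x, y, th, v, w)
            # 2. Evaluate: reject colliding trajectories, score the rest
            clearance = min((math.hypot(px - ox, py - oy)
                             for px, py, _ in traj for ox, oy in obstacles),
                            default=math.inf)
            if clearance < ROBOT_RADIUS:
                continue
            ex, ey, _ = traj[-1]
            goal_dist = math.hypot(goal[0] - ex, goal[1] - ey)
            score = -goal_dist + 0.2 * min(clearance, 1.0) + 0.1 * v
            # 3. Select the best velocity pair
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

With a free path, the sketch picks the fastest straight-ahead command. With an obstacle slightly to one side, it turns away from it. When every reachable trajectory would collide, it returns (0.0, 0.0) and stops.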

Camera calibration is required before using the depth camera. Here is a tutorial link.

Camera calibration:

Camera calibration is the process of determining a camera's internal and external parameters through a series of measurements and calculations. The internal (intrinsic) parameters include the focal length, the principal point position, the pixel spacing, and the lens distortion coefficients. The external (extrinsic) parameters are the camera's position and orientation in the world coordinate system. Calibration allows measurements in the image to be converted accurately into the positions, sizes, and shapes of objects in the world coordinate system.
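The role of the internal parameters can be made concrete with the pinhole camera model, which maps a point (X, Y, Z) in the camera frame to pixel coordinates (u, v). This sketch reuses the focal lengths and principal point that appear in this project's convert_depth_to_world() code later in the article. Lens distortion is ignored, and the function name is hypothetical.

```python
# Intrinsic parameters in pixels, as used in the project's depth conversion code
FX, FY = 454.367, 454.367  # focal lengths
CX, CY = 313.847, 239.89   # principal point


def project(X, Y, Z):
    """Pinhole projection of a camera-frame point (mm) to pixel coordinates."""
    if Z <= 0:
        raise ValueError("point must be in front of the camera")
    u = FX * X / Z + CX
    v = FY * Y / Z + CY
    return u, v
```

A point on the optical axis always lands on the principal point (CX, CY). Inverting these two equations with a measured depth Z gives exactly the pixel-to-3D conversion used later in the project.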

Our target objects are fruits, which come in different colors and shapes, such as red, orange, and yellow. To grasp them accurately and safely, the arm needs detailed information about each fruit, including its width, thickness, and other characteristics.

Let us examine the target fruits. The most prominent difference between them is their color, so we select targets with red and orange hues. To locate them, we use the HSV color space. HSV separates hue (the color itself) from saturation and brightness, which makes color thresholds less sensitive to lighting changes than in RGB. The following code is designed to detect the target fruits.

```python
from enum import Enum

import numpy as np


class Detector:
    """
    Detection and identification class
    """

    class FetchType(Enum):
        FETCH = False
        FETCH_ALL = True

    # HSV thresholds as (lower, upper); OpenCV convention: H 0-179, S and V 0-255
    HSV_DIST = {
        # Red wraps around the hue axis, so it is split into two ranges
        "redA": (np.array([0, 120, 50]), np.array([3, 255, 255])),
        "redB": (np.array([118, 120, 50]), np.array([179, 255, 255])),
        "orange": (np.array([8, 150, 150]), np.array([20, 255, 255])),
        "yellow": (np.array([28, 100, 150]), np.array([35, 255, 255])),
        # ... (the rest of the class is not shown in this excerpt)
    }
```

The first step is to detect the target fruits accurately and obtain their coordinates, depth, and other relevant information. The class below defines the information to be collected and stores it for later use.
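Ranges such as those in HSV_DIST can be turned into a fruit position with a minimal sketch like the one below. It uses plain NumPy comparisons; in OpenCV, cv2.inRange(hsv, lower, upper) produces the equivalent mask as a uint8 image. The sketch assumes the frame has already been converted to HSV, for example with cv2.cvtColor(frame, cv2.COLOR_BGR2HSV). The find_fruit helper, the min_pixels threshold, and the centroid approach are illustrative assumptions, and the project's Detector may work differently.

```python
import numpy as np

# A subset of the HSV ranges from the Detector.HSV_DIST table above
HSV_DIST = {
    "redA": (np.array([0, 120, 50]), np.array([3, 255, 255])),
    "redB": (np.array([118, 120, 50]), np.array([179, 255, 255])),
    "orange": (np.array([8, 150, 150]), np.array([20, 255, 255])),
}


def color_mask(hsv, lower, upper):
    """Boolean mask of pixels whose H, S and V all lie within [lower, upper]."""
    return np.all((hsv >= lower) & (hsv <= upper), axis=-1)


def find_fruit(hsv, color, min_pixels=20):
    """Return the (x, y) pixel centroid of the given color, or None."""
    if color == "red":
        # Red wraps around the hue axis, so both red ranges are combined
        mask = color_mask(hsv, *HSV_DIST["redA"]) | color_mask(hsv, *HSV_DIST["redB"])
    else:
        mask = color_mask(hsv, *HSV_DIST[color])
    ys, xs = np.nonzero(mask)
    if len(xs) < min_pixels:  # too few pixels: treat as noise
        return None
    return float(xs.mean()), float(ys.mean())
```

The centroid (x, y) together with the depth value at that pixel is exactly the input that the depth-to-world conversion below expects.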

```python
import threading


class VideoCaptureThread(threading.Thread):
    def __init__(self, detector, detect_type=Detector.FetchType.FETCH_ALL.value):
        threading.Thread.__init__(self)
        # VideoStreamPipe is the project's depth-camera stream wrapper (not shown)
        self.vp = VideoStreamPipe()
        self.detector = detector
        self.finished = True
        # Detected pixel coordinates and the converted real-world coordinates
        self.camera_coord_list = []
        self.old_real_coord_list = []
        self.real_coord_list = []
        self.new_color_frame = None
        self.fruit_type = detector.detect_target
        self.detect_type = detect_type
        # Latest color and depth frames for display
        self.rgb_show = None
        self.depth_show = None
```

Our end goal is to obtain the world coordinates of the fruits, which can then be sent to the robotic arm for grasping. Converting the depth-image coordinates into world coordinates, expressed in the camera's frame, gets us halfway there. The remaining step is to transform these coordinates into the robotic arm's coordinate system to obtain the grasping coordinates of the target fruits.

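```python
# get world coordinate
def convert_depth_to_world(self, x, y, z):
    """Back-project pixel (x, y) with depth z (mm) into camera-frame coordinates (mm)."""
    # Camera intrinsics from calibration: focal lengths and principal point (pixels)
    fx = 454.367
    fy = 454.367
    cx = 313.847
    cy = 239.89

    # Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy
    world_x = float((x - cx) * z) / fx
    world_y = float((y - cy) * z) / fy
    world_z = float(z)
    return world_x, world_y, world_z
```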
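The remaining step, moving from camera coordinates into the arm's base frame, is a rigid-body transform. The sketch below assumes a fixed camera whose pose relative to the arm base has already been measured, for example by hand-eye calibration. The rotation and offset values are placeholders, not this project's calibration, and the function names are hypothetical.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def camera_to_arm(T_arm_cam, point_cam):
    """Map a camera-frame point (mm) into the arm base frame (mm)."""
    p = np.append(np.asarray(point_cam, dtype=float), 1.0)
    return (T_arm_cam @ p)[:3]


# Placeholder calibration: camera looking straight down, mounted 300 mm above
# the arm base and 150 mm in front of it (illustrative values only).
R_DOWN = np.array([[0.0, -1.0, 0.0],
                   [-1.0, 0.0, 0.0],
                   [0.0, 0.0, -1.0]])
T_ARM_CAM = make_transform(R_DOWN, [150.0, 0.0, 300.0])
```

The x, y and z returned by camera_to_arm() are the values that end up in the first three entries of the coordinate list sent to the arm, as target_coords() does below.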

Making a robotic arm follow a desired trajectory can seem difficult, but there is no need to worry. The mechArm 270 comes with pymycobot, a mature robotic arm control library, so only a few lines of code are needed to control the arm.

(Note: pymycobot is a Python library for controlling robotic arm movement. We use pymycobot==3.1.2, the version listed in the environment above and the latest at the time of writing.)

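```python
import time

from pymycobot.mecharm import MechArm

# Connect to the arm; the serial port and baud rate are the usual values for
# the Raspberry Pi version (assumed here, adjust to your setup)
mc = MechArm("/dev/ttyAMA0", 1000000)

# Two commonly used control methods

# send_angles(angle_list_degrees, speed)
#   Send the degrees of all joints to the robot arm.
#   angle_list_degrees: a list of degree values (List[float]), length 6
#   speed: (int) 0 ~ 100, the robotic arm's speed
mc.send_angles([10, 20, 30, 45, 60, 0], 100)
time.sleep(3)  # wait for the motion to finish before sending the next command

# send_coords(coords, speed)
#   Send the coordinates to the robot arm.
#   coords: a list of coordinate values (List[float]), length 6: [x, y, z, rx, ry, rz]
#   speed: (int) 0 ~ 100, the robotic arm's speed
mc.send_coords([125, 45, 78, -120, 77, 90], 50)
```

In this scenario, the two robotic arms serve distinct functions. The R2 Arm picks fruit from the tree and loads it onto myAGV, while the R1 Arm mounted on myAGV unloads the fruit into the Collection Area.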

After implementing basic control, our next step is to design the trajectory planning for the robotic arm to grasp the fruits. In the recognition algorithm, we obtained the world coordinates of the fruits. We will process these coordinates and convert them into the coordinate system of the robotic arm for targeted grasping.

```python
import time

DEBUG = False  # debug flag (defined elsewhere in the project); True prints coordinates


# deal with fruit world coordinates
def target_coords(self):
    # get_coords() may return an empty list if no valid reply arrived, so retry
    coord = self.ma.get_coords()
    while len(coord) == 0:
        time.sleep(0.1)
        coord = self.ma.get_coords()

    # self.model_track() (not shown) provides the target fruit's x, y, z;
    # the current orientation (rx, ry, rz) is kept
    target = self.model_track()
    coord[:3] = target.copy()

    if DEBUG:
        print("coord: ", coord)
        print("self.end_coords: ", coord[:3])

    self.end_coords = coord
    return coord
```

The same principle applies to path planning for the R1 Arm that unloads the fruit. Its trajectory must be designed so that the arm's movement causes no collisions and does not damage the fruit.
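One simple way to keep the arm clear of branches and fruit is to split each grasp into waypoints: approach from above, descend, close the gripper, and lift again. The sketch below only generates that sequence of coordinates. The 60 mm clearance, the placeholder orientation, and the function name are assumptions. On the real arm, each "move" pose would be sent with send_coords() and the gripper step with the arm's gripper command.

```python
APPROACH_HEIGHT = 60.0  # mm above the target before descending (assumed)


def grasp_waypoints(target_xyz, orientation=(-180.0, 0.0, 0.0)):
    """Return (action, coords) steps for a top-down pick of one fruit.

    target_xyz: fruit position in the arm base frame (mm).
    orientation: fixed end-effector rx, ry, rz in degrees (placeholder values).
    """
    x, y, z = target_xyz
    above = [x, y, z + APPROACH_HEIGHT, *orientation]
    at_fruit = [x, y, z, *orientation]
    return [
        ("move", above),          # approach from above, away from the fruit
        ("move", at_fruit),       # straight vertical descent onto the fruit
        ("close_gripper", None),  # grasp
        ("move", above),          # lift vertically before any sideways motion
    ]
```

Keeping the descent and lift vertical means the only sideways motion happens at a safe height, which is the main idea behind collision-free pick paths in a cluttered scene.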

The project involves several noteworthy techniques:

- mapping and navigation on myAGV
- automatic obstacle avoidance
- a recognition algorithm based on the 3D depth camera
- path planning for the robotic arm
- the logic behind the communication between myAGV and the robotic arm

Each of them offers valuable lessons.

The algorithms used in this project are not necessarily the optimal ones, but we can still learn from them. For instance, alternatives to Gmapping for map-building include the FastSLAM family of particle-filter algorithms, on which Gmapping itself builds, and topology-based (topological) mapping approaches.

As the saying goes, machines are rigid, but people are flexible: we can choose the most effective approach according to the circumstances. This requires continuous learning, exploration, and discussion.

Summary

The continuous development of technology will make machines some of humanity's most important helpers. Future development is trending toward intelligence, flexibility, efficiency, and safety. For mobile robots, the key directions include autonomous navigation, intelligent path planning, and multimodal perception. For robotic arms, they include autonomous learning, adaptive control, and visual recognition. Compound robots must also solve the coordination between mobility and manipulation to improve the overall performance and efficiency of the robot system.

We built this application scenario to give makers and students who love robots a practical opportunity. By working with it, students can deepen their understanding of robotic arms and learn technologies such as motion control, visual recognition, and object grasping. The scenario can also help them develop teamwork, innovative thinking, and competitive abilities, laying a solid foundation for their future careers.

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, the home, and more.
