Story

Details

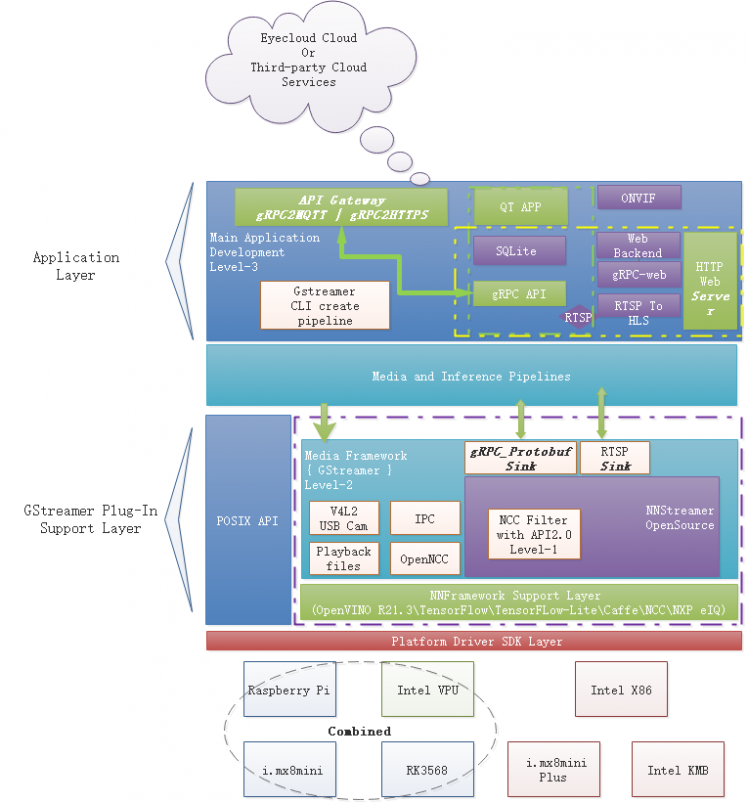

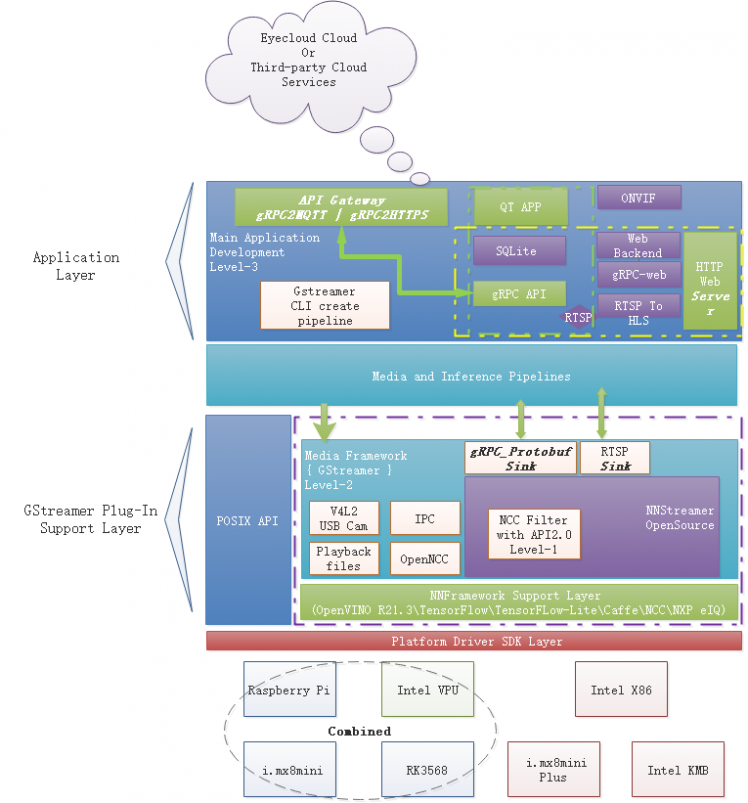

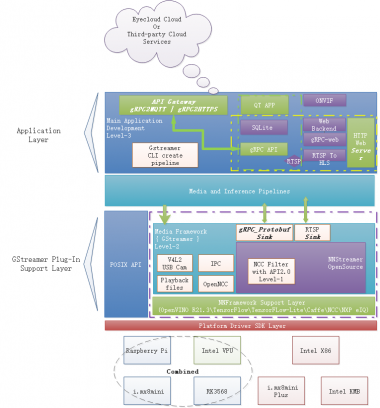

The block diagram of the OpenNCC Frame system is as follows:

Locally, it can be divided into four layers from bottom to top:

- Platform driver layer: provides driver support for AI inference acceleration on different hardware platforms and operating systems.

- GStreamer plug-in support layer: wraps various inference frameworks and accelerators (such as the OpenNCC Native SDK) as NNStreamer sub-plugins, and wraps business support modules (such as statistics and tracking) as standard GStreamer plug-ins. Together they form a plug-in repository that can be combined flexibly for different business scenarios.

- Media and inference pipeline layer: combines plug-ins into an inference pipeline according to the business strategy, and outputs events, AI results, and statistics to the business layer above.

- Business application and interaction layer: implements business interaction, cloud integration, and local reporting.
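To make the pipeline layer more concrete, here is a minimal sketch that assembles a GStreamer launch description from a media source, a preprocessing stage, and an NNStreamer inference plug-in. `v4l2src`, `videoconvert`, `videoscale`, `tensor_converter`, `tensor_filter`, and `tensor_sink` are standard GStreamer/NNStreamer elements. The `openncc` framework name, the `model.blob` path, and the 300×300 RGB input size are hypothetical placeholders, not values taken from the OpenNCC SDK.

```python
def build_pipeline(device="/dev/video0", width=300, height=300,
                   framework="openncc", model="model.blob"):
    """Compose a gst-launch style pipeline description.

    'openncc' and 'model.blob' are hypothetical placeholders; substitute
    the sub-plugin name and model file your installation actually uses.
    """
    stages = [
        f"v4l2src device={device}",                               # media source
        "videoconvert",                                             # pixel-format conversion
        "videoscale",                                               # resize toward the model input size
        f"video/x-raw,width={width},height={height},format=RGB",   # caps filter fixing size/format
        "tensor_converter",                                         # video frames -> NNStreamer tensors
        f"tensor_filter framework={framework} model={model}",     # inference plug-in
        "tensor_sink name=results",                                 # hand tensors to the application
    ]
    return " ! ".join(stages)


if __name__ == "__main__":
    print(build_pipeline())
```

The resulting string could be passed to `gst-launch-1.0` or to `Gst.parse_launch()` in an application. Changing only the `framework` value is how NNStreamer switches to a different inference sub-plugin while the rest of the pipeline stays the same.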

OpenNCC Native SDK is an upgraded version of OpenNCC API 1.0. It provides cross-platform driver support for the OpenNCC USB camera and the OpenNCC SoM accelerator, and manages and allocates inference engine resources on platforms including but not limited to x86, Ubuntu, RK3568, Raspberry Pi, and NVIDIA. The OpenNCC Native SDK has been wrapped as an NNStreamer sub-plugin, which provides the underlying resource-scheduling interface for the inference media pipeline. It is the proprietary development package of OpenNCC Frame for OpenNCC hardware products based on the Intel Movidius MA2480 VPU.

OpenNCC Native SDK provides different working modes:

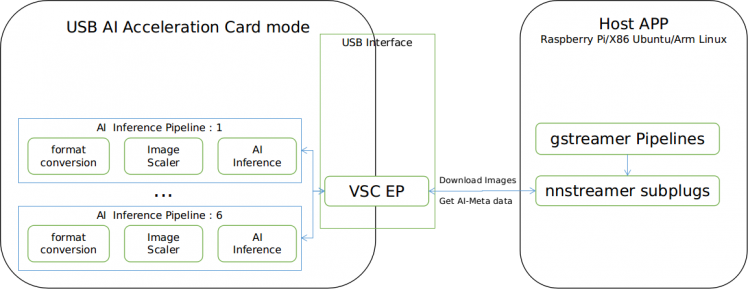

1. USB AI Acceleration Card mode (Intel NCS-style)

* In this mode, the Host App obtains the video stream (local file, IPC (IP camera), webcam, V4L2 MIPI camera) from an external source, configures the preprocessing module according to the input resolution and format of the inference model, sends each image to the OpenNCC SoM through the OpenNCC Native SDK for inference, and receives the inference results. Inference can run in synchronous or asynchronous mode. The inference pipeline on the OpenNCC SoM is configured through the OpenNCC model JSON.

* The OpenNCC SoM supports up to six locally configured inference pipelines, of which two can run concurrently in real time. By doing intermediate processing in the Host App, users can chain models into multi-stage cascades or run several models concurrently.

* Multiple accelerator cards are also supported. Users can add cards according to their compute requirements, and the SDK allocates compute across them dynamically.
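Synchronous versus asynchronous inference, and spreading requests across several cards, can be sketched in plain Python. `FakeAccelerator` is a hypothetical stand-in for one OpenNCC device (no real Native SDK calls are shown), and the "fewest outstanding requests" policy is an assumption; the text above only states that the SDK allocates compute dynamically.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeAccelerator:
    """Hypothetical stand-in for one accelerator card (not the SDK API)."""

    def __init__(self, name):
        self.name = name
        self.pending = 0  # requests assigned to this card and not yet finished

    def infer(self, frame):
        # A real card would run the model; here we only tag the frame.
        return (self.name, frame)


class Dispatcher:
    """Assigns each frame to the card with the fewest outstanding requests."""

    def __init__(self, cards):
        self.cards = cards
        self.lock = threading.Lock()
        self.pool = ThreadPoolExecutor(max_workers=len(cards))

    def _pick(self):
        with self.lock:
            card = min(self.cards, key=lambda c: c.pending)
            card.pending += 1
            return card

    def _run(self, card, frame):
        try:
            return card.infer(frame)
        finally:
            with self.lock:
                card.pending -= 1

    def infer_async(self, frame):
        # Asynchronous mode: return a Future immediately.
        card = self._pick()
        return self.pool.submit(self._run, card, frame)

    def infer_sync(self, frame):
        # Synchronous mode: block until the result is available.
        return self.infer_async(frame).result()

    def close(self):
        self.pool.shutdown(wait=True)


if __name__ == "__main__":
    d = Dispatcher([FakeAccelerator("card0"), FakeAccelerator("card1")])
    futures = [d.infer_async(i) for i in range(4)]
    print([f.result() for f in futures])
    print(d.infer_sync(99))
    d.close()
```

Which card serves which frame depends on timing, so the printed assignment can vary from run to run; the frame order of the returned futures is preserved.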

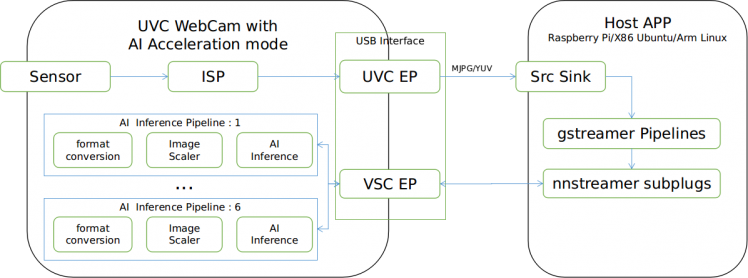

2. UVC Web Camera with AI Acceleration Card mode (Intel NCS-style)

* In this mode, OpenNCC combines a USB camera and an inference accelerator card. The HD and 4K sensors supported by OpenNCC are connected at the front end. After ISP processing on the VPU, the video stream is output to the host as a standard UVC camera stream.

* After the host receives the video stream, it configures the preprocessing module according to the input resolution and format of the inference model, sends each image to the OpenNCC SoM for inference through the OpenNCC Native SDK, and receives the inference results. Inference can run in synchronous or asynchronous mode. The inference pipeline on the OpenNCC SoM is configured through the OpenNCC model JSON.
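Host-side preprocessing in modes 1 and 2 amounts to resizing each frame to the model's input resolution and converting it to the pixel order and memory layout the model expects. Below is a pure-Python sketch; nearest-neighbour sampling, BGR input, and a planar CHW target are assumptions chosen for illustration. A production Host App would normally rely on an optimized library or on the preprocessing module described in the text.

```python
def preprocess(frame, out_w, out_h, swap_rb=True, planar=True):
    """Resize an H x W x 3 frame (nested lists) to out_h x out_w.

    Uses nearest-neighbour sampling, optionally swaps BGR <-> RGB, and
    optionally converts interleaved HWC to planar CHW. The target size,
    channel order, and layout are assumptions, not OpenNCC requirements.
    """
    in_h, in_w = len(frame), len(frame[0])
    resized = []
    for y in range(out_h):
        src_y = y * in_h // out_h          # nearest source row
        row = []
        for x in range(out_w):
            src_x = x * in_w // out_w      # nearest source column
            px = frame[src_y][src_x]
            row.append(list(reversed(px)) if swap_rb else list(px))
        resized.append(row)
    if not planar:
        return resized                     # HWC (interleaved)
    # CHW: one 2-D plane per channel
    return [[[resized[y][x][c] for x in range(out_w)] for y in range(out_h)]
            for c in range(3)]


if __name__ == "__main__":
    frame = [[[y * 10 + x, 0, 255] for x in range(4)] for y in range(4)]
    print(preprocess(frame, 2, 2))
```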

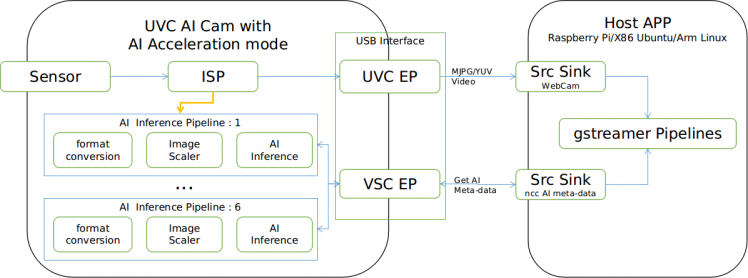

3. UVC AI Camera with AI Acceleration Card mode (Intel NCS-style)

* In this mode, OpenNCC combines a USB camera and an inference accelerator card. The HD and 4K sensors supported by OpenNCC are connected at the front end. After ISP processing on the VPU, the video stream is output to the host as a standard UVC camera stream.

* In addition, the camera can be configured to feed the post-ISP video stream directly into its own local inference pipeline and output the inference results to the Host App. This avoids sending images from the host down to the inference engine, which reduces processing latency and saves bandwidth. The inference pipeline is configured through the OpenNCC model JSON.
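The bandwidth saving of mode 3 over mode 2 can be estimated with simple arithmetic. In mode 2 every preprocessed frame travels back down to the device for inference, on top of the results coming up; mode 3 skips that downward copy. The 300×300 RGB model input, 30 fps, and a result record of 100 detections × 7 float32 values are illustrative assumptions, not OpenNCC figures.

```python
def link_traffic(width, height, channels, fps, detections, values_per_det,
                 bytes_per_value=4):
    """Estimate per-second USB traffic for the two UVC modes.

    Returns (frames_down, results_up) in bytes per second:
      frames_down - extra host-to-device traffic in mode 2, where each
                    preprocessed frame is sent back down for inference
                    (mode 3 avoids this entirely).
      results_up  - device-to-host inference results (both modes).
    All sizes are illustrative assumptions.
    """
    frame_bytes = width * height * channels                 # 8-bit pixels
    result_bytes = detections * values_per_det * bytes_per_value
    return frame_bytes * fps, result_bytes * fps


if __name__ == "__main__":
    # Assumed: 300x300 RGB model input, 30 fps, 100 detections x 7 float32.
    down, up = link_traffic(300, 300, 3, 30, 100, 7)
    print(f"mode 2 extra frame traffic: {down / 1e6:.1f} MB/s")
    print(f"result traffic: {up / 1e3:.1f} kB/s")
```

Under these assumptions the avoided downlink traffic is about 8.1 MB/s, against roughly 84 kB/s of result data, which is why keeping inference on the camera side saves most of the link budget.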

- OpenNCC USB powered by Intel Movidius VPU MA2480: Released

- Raspberry Pi with OpenNCC SoM: Released

- RK3568 with OpenNCC SoM: Released

- OpenNCC KMB powered by Intel Movidius Keem Bay

- OpenNCC Plus powered by NXP i.MX 8M Plus

The following back-ends are supported through NNStreamer sub-plugins and are therefore also available:

- Movidius-X via ncsdk2 subplugin: Released

- Movidius-X via OpenVINO subplugin: Released

- Edge-TPU via edgetpu subplugin: Released

- ONE runtime via nnfw (the former name of ONE) subplugin: Released

- ARMNN via armnn subplugin: Released

- VeriSilicon-Vivante via vivante subplugin: Released

- Qualcomm SNPE via snpe subplugin: Released

- Exynos NPU: WIP
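Because every back-end above is exposed as a `tensor_filter` sub-plugin, switching accelerators mostly means changing the `framework` property. The sketch below picks the first released sub-plugin that is available on a given host. The `framework` strings are assumptions based on the sub-plugin names listed above (check the names your NNStreamer build actually registers), and the preference order and the `available` set are hypothetical inputs supplied by the caller; Exynos is left out because it is still WIP.

```python
# Released sub-plugins from the list above, in an assumed preference order.
# The framework strings are assumptions based on the listed sub-plugin names.
RELEASED_SUBPLUGINS = {
    "Movidius-X (ncsdk2)": "ncsdk2",
    "Movidius-X (OpenVINO)": "openvino",
    "Edge-TPU": "edgetpu",
    "ONE runtime": "nnfw",
    "ARMNN": "armnn",
    "VeriSilicon-Vivante": "vivante",
    "Qualcomm SNPE": "snpe",
}


def choose_framework(available, preference=None):
    """Return the first preferred sub-plugin present in `available`."""
    order = preference or list(RELEASED_SUBPLUGINS.values())
    for name in order:
        if name in available:
            return name
    raise LookupError("no released sub-plugin available on this host")


def tensor_filter_fragment(available, model):
    """Build the tensor_filter element for the chosen back-end."""
    return f"tensor_filter framework={choose_framework(available)} model={model}"


if __name__ == "__main__":
    # Hypothetical host that has ARMNN and SNPE installed.
    print(tensor_filter_fragment({"armnn", "snpe"}, "model.bin"))
```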

If you need any help, please open an issue on Git Issues or leave a message on openncc.com. Suggestions and model requests are welcome.
