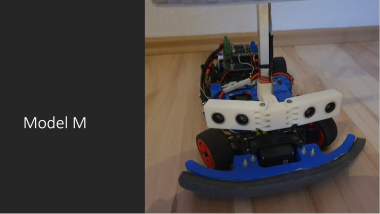

Model M

About the project

Building an Autonomous Car

NXP Cup Electromaker Innovation Challenge 2021 contest winner

Project info

Difficulty: Expert

Estimated time: 6 months

License: GNU General Public License, version 3 or later (GPL3+)

Story

Every year, our university (Hochschule Düsseldorf) offers the possibility to take part in the NXP Cup as a student project. The NXP Cup is a challenge to build your own small self-driving car. Most hardware parts are provided by NXP, so the main challenge is to integrate the hardware properly and to write the software that runs on it.

In the winter semester 2019/2020 we (Moritz and Johannes) took part in the project and started creating our own small self-driving car. From the very beginning our goal was to not be hardware-limited but to always take the newest and best parts available. In the following you will see how that went.

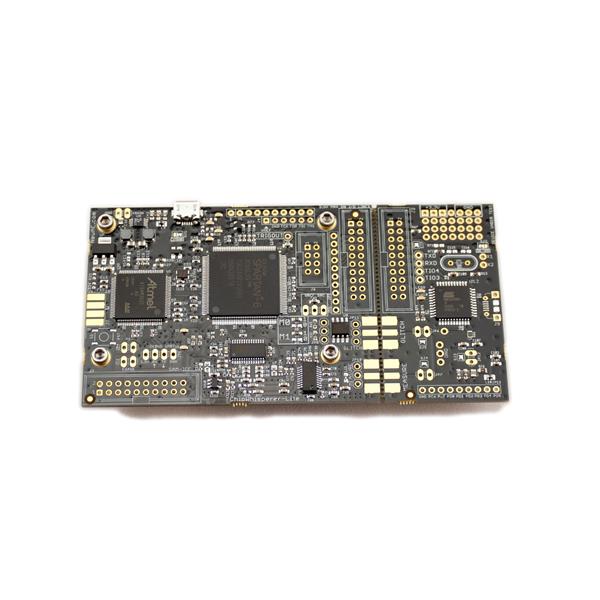

The first month (October) was reserved for theoretical planning and for creating a list of additional hardware to order. Over the past years, our university had acquired a small collection of NXP Cup hardware, so we didn't need to buy anything car-related (chassis, wheels, etc.). Our focus was on using the then newly available Pixy2 camera as well as a Teensy 4.0, which gave us a faster chip than the default one provided for the cup (KL25Z). The Teensy also turned out to be very pleasant to program, as it can be used with the Arduino IDE.

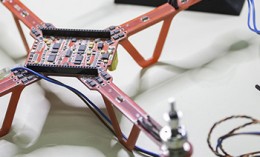

In November, we first got our hands on the car and electronics. We ran first tests of the Pixy2's built-in line detection and of addressing the motors and steering. After just a few days the Model M was already driving in a very basic way. Seeing our progress directly was something that motivated us throughout the project. The motors and steering were still unresponsive and messy.

The architecture we followed from the beginning was to use the Teensy to read the camera and other sensors and to calculate the corresponding motor and steering actions. These are sent to the KL25Z, where they are fed into the drive controller, which actually addresses the motor and steering. The two chips communicated via I2C. To make better use of the Teensy, we created our own circuit board to mount it on, which made it much easier to connect additional peripherals such as a Bluetooth module.

During December, we continued to work with the built-in line detection and tried to improve on it. Driving through curves turned out to be quite hard. The official race track parts offered by NXP include straights, curves, intersections and "wobble parts". Addressing the motor and steering got smoother every week. We also added more hardware to our Model M: an LED strip for lighting the track and ultrasonic sensors to detect whether we were driving into a wall. We drove into many walls.

In January the motor and steering control got better and better. We implemented a first basic brake that made it possible for the Model M to drive faster on straights and brake ahead of curves. We turned our attention to driving a figure 8, including the very tricky intersection, as well as improving our capabilities in curves. In both cases it became more and more obvious that the built-in line detection of the Pixy2 was not working out well for us. We tried to improve its results as much as possible, but got stuck at a certain level of accuracy and reliability. Another big problem was that the time per line detection varied between 100 and 200 ms, meaning we could only correct our steering angle 5 to 10 times per second. That is not nearly enough to drive fast into and through curves.
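
The update rate follows directly from the detection time. As a rough worked example, the distance covered between two steering corrections can be estimated as well; the driving speed $v = 2\,\mathrm{m/s}$ used here is purely an illustrative assumption, not a measured value from the project:

$$
f = \frac{1}{T}: \qquad T = 100\,\mathrm{ms} \Rightarrow f = 10\,\mathrm{Hz}, \qquad T = 200\,\mathrm{ms} \Rightarrow f = 5\,\mathrm{Hz}
$$

$$
d = v \cdot T: \qquad 2\,\mathrm{m/s} \cdot 0.1\,\mathrm{s} = 0.2\,\mathrm{m}, \qquad 2\,\mathrm{m/s} \cdot 0.2\,\mathrm{s} = 0.4\,\mathrm{m}
$$

At that assumed speed, the car would travel 20 to 40 cm between two corrections.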

To debug our running program more quickly, we started developing a companion program for Mac and Windows that receives debug data from Model M via Bluetooth. Later on we added the ability to control the Model M from that program as well: start/stop the motor, change various settings such as the speed on straights and in curves, and set the current drive mode (random track, drive a figure 8, dodge obstacles, fast/slow zone, emergency brake).

As it became clearer that we needed to get rid of the Pixy2 line detection to further improve Model M's driving abilities, we did a complete rewrite of the code on the Teensy in February. Reading every pixel value from the Pixy2 and running our own line detection on the full image was not an option, as it was even slower than using the integrated detection. So we decided to move closer to how other teams were approaching the line detection problem, namely with line scan cameras. We chose three horizontal lines within the Pixy2 image to read pixels from. Starting with the upper line, we implemented a very basic edge/line detection: detect the big jump in brightness from the white track to the black lines at its edges.
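
The brightness-jump idea can be sketched roughly as follows. This is a minimal illustration, not the code that ran on Model M: the names `TrackEdges` and `findTrackEdges`, the `minDrop` threshold, and the choice to scan outward from the image centre are all assumptions made for the example, and the row is taken as a plain vector of brightness values rather than read from the Pixy2.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical result type: pixel columns of the black border lines, if seen.
struct TrackEdges {
    std::optional<std::size_t> left;
    std::optional<std::size_t> right;
    bool found() const { return left.has_value() || right.has_value(); }
};

// Scan one row of brightness values outward from the centre and report, on
// each side, the first pixel where brightness drops by at least `minDrop`
// compared to its inner neighbour (white track -> black border line).
TrackEdges findTrackEdges(const std::vector<std::uint8_t>& row, int minDrop) {
    TrackEdges edges;
    if (row.size() < 2) return edges;
    const std::size_t centre = row.size() / 2;

    // Walk from the centre towards the left border.
    for (std::size_t i = centre; i > 0; --i) {
        if (static_cast<int>(row[i]) - static_cast<int>(row[i - 1]) >= minDrop) {
            edges.left = i - 1;
            break;
        }
    }
    // Walk from the centre towards the right border.
    for (std::size_t i = centre; i + 1 < row.size(); ++i) {
        if (static_cast<int>(row[i]) - static_cast<int>(row[i + 1]) >= minDrop) {
            edges.right = i + 1;
            break;
        }
    }
    return edges;
}
```

Reporting each side separately means a row that shows only one border line, as can happen in a curve, still counts as a detection.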

If no line is found in the upper line, the pixels of the middle line are read from the Pixy2 and the same edge/line detection algorithm is run on them. If that also finds nothing, the same is done with the lowest line, which looks at the track closest to the car. This let us look far ahead on straights and still see the lines close to the car during a curve. We basically had three different line scan cameras built into a single camera.
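
The far-to-near fallback can be sketched like this. It is a hedged illustration under stated assumptions: `Detection`, `detectWithFallback`, and the callback `detectInRow` (which stands in for "read this row from the camera and run the edge detection on it") are hypothetical names, and the row numbers in the usage note are made up.

```cpp
#include <array>
#include <functional>
#include <optional>

// Hypothetical result: which image row produced the hit and where the line is.
struct Detection {
    int row;
    int linePosition;
};

// Try the rows in order from farthest to nearest and stop at the first hit.
// `detectInRow` is only called for a row when all rows before it failed, so
// nearer rows are never fetched from the camera unnecessarily.
std::optional<Detection> detectWithFallback(
    const std::array<int, 3>& rowsFarToNear,
    const std::function<std::optional<int>(int)>& detectInRow) {
    for (int row : rowsFarToNear) {
        if (std::optional<int> position = detectInRow(row)) {
            return Detection{row, *position};
        }
    }
    return std::nullopt;  // no line visible in any of the three rows
}
```

A call such as `detectWithFallback({40, 100, 160}, detect)` would try row 40 first and only fall back to rows 100 and 160 if nothing is found there.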

This approach improved our driving so much that the motor and steering control now needed further improvement to keep up. We fine-tuned the braking and our curve driving and switched the communication between our two boards from I2C to UART, as I2C had become unreliable for our use case. With these changes we were able to drive basically any track, with just a few small problems appearing from time to time.
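
The post does not describe the format of the messages exchanged over UART. Purely as an illustration of how a speed and steering command could be framed so that the receiver can reject corrupted bytes, here is a minimal sketch; the start byte, the 4-byte layout, the XOR checksum, and all names are assumptions and not the Model M protocol.

```cpp
#include <array>
#include <cstdint>
#include <optional>

// Hypothetical command sent from the sensor board to the drive controller.
struct DriveCommand {
    std::int8_t speed;     // signed motor demand
    std::int8_t steering;  // signed steering demand
};

constexpr std::uint8_t kStartByte = 0xA5;    // assumed frame marker
using Frame = std::array<std::uint8_t, 4>;   // start, speed, steering, checksum

// Pack a command into a frame with a simple XOR checksum over the first 3 bytes.
Frame encodeFrame(const DriveCommand& cmd) {
    const auto speed = static_cast<std::uint8_t>(cmd.speed);
    const auto steering = static_cast<std::uint8_t>(cmd.steering);
    const auto checksum = static_cast<std::uint8_t>(kStartByte ^ speed ^ steering);
    return Frame{kStartByte, speed, steering, checksum};
}

// Unpack a frame; returns std::nullopt if the marker or checksum is wrong.
std::optional<DriveCommand> decodeFrame(const Frame& frame) {
    if (frame[0] != kStartByte) return std::nullopt;
    const auto expected = static_cast<std::uint8_t>(frame[0] ^ frame[1] ^ frame[2]);
    if (frame[3] != expected) return std::nullopt;
    return DriveCommand{static_cast<std::int8_t>(frame[1]),
                        static_cast<std::int8_t>(frame[2])};
}
```

On the receiving side, a frame that fails the check would simply be dropped, so the drive controller keeps acting on the last valid command.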

In March we made further general improvements in all areas and focused on completing all NXP Cup bonus challenges as well. The trickiest part was using the ultrasonic sensors for an emergency brake (if an object is detected directly in front of the car) and for dodging objects (if an object is on the track but slightly to the left or right).
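
The brake-or-dodge decision can be sketched as below. The sensor layout (one reading each for left, centre, and right), the distance thresholds, and the names `Ranges`, `Action`, and `chooseAction` are assumptions for illustration; the post does not say how many sensors were used or how they were mounted.

```cpp
// Hypothetical set of actions the car can take in response to an obstacle.
enum class Action { Continue, EmergencyBrake, DodgeLeft, DodgeRight };

// Assumed layout: one ultrasonic distance reading per direction, in centimetres.
struct Ranges {
    float leftCm;
    float centreCm;
    float rightCm;
};

// Brake if something is directly ahead; otherwise steer away from an obstacle
// seen on one side. If both sides are blocked there is nowhere to dodge to,
// so the sketch brakes as well.
Action chooseAction(const Ranges& r, float brakeCm, float dodgeCm) {
    if (r.centreCm < brakeCm) return Action::EmergencyBrake;
    const bool leftBlocked = r.leftCm < dodgeCm;
    const bool rightBlocked = r.rightCm < dodgeCm;
    if (leftBlocked && rightBlocked) return Action::EmergencyBrake;
    if (leftBlocked) return Action::DodgeRight;
    if (rightBlocked) return Action::DodgeLeft;
    return Action::Continue;
}
```

For example, `chooseAction({30.0f, 200.0f, 200.0f}, 25.0f, 60.0f)` would dodge to the right, because only the left reading is below the dodge threshold.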

In the end we were really happy with our results and motivated to participate in the NXP Cup challenge in Munich. But by that point the Cup had already been canceled due to the pandemic. As a plan B, we hope to get the chance to participate in a smaller and more local NXP Cup this year (2021).

We recorded videos of Model M to show its capabilities.

This one shows it driving a difficult track at high speed in our university: https://www.youtube.com/watch?v=aLpIy8BxRCU

And this one shows some bonus challenges completed by Model M: https://www.youtube.com/watch?v=o0adsonZ5lE
