Raspberry Pi-Based Edge AI Vehicle License Plate Recognition

About the project

Use a vehicle license plate recognition application as an example to see how a two-stage model is deployed on OpenNCC.

Project info

Difficulty: Moderate

Platforms: Intel, Raspberry Pi, Linux

Estimated time: 1 hour

License: Apache License 2.0 (Apache-2.0)

Story

Cars come and go, whether at an intersection or at the gate of a parking lot. Wherever and whenever it happens, in a world where cars fill the streets and are closely tied to our lives, such scenes will only become more frequent.

It is difficult for a person to read the license plates (the vehicles' "ID cards") of many vehicles at the same time, and there is no way to quickly record, track, and locate vehicles in large traffic flows by eye. For a camera with AI inference capability, however, this kind of problem is not difficult; it just needs to be paired with the appropriate model and algorithm.

The license plate recognition algorithm introduced in this blog is one example.

Vehicle license plate recognition


How to implement license plate recognition

License plate recognition is a two-stage algorithm that requires two models: the "vehicle-license-plate-detection-barrier-0106" object detection model and the "license-plate-recognition-barrier-0001" CTC character recognition model. In the first stage, the detection model locates the vehicles and license plates in the frame; in the second stage, the character recognition model reads the characters on each detected plate. Both models are available in Intel's OpenVINO™ Toolkit Pre-Trained Models (Open Model Zoo).
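To make the two-stage flow concrete, here is a minimal C++ sketch of the control logic only. The types `Detection` and `Frame` and the function `runTwoStage` are hypothetical and are not part of the OpenNCC SDK; the two models are passed in as callbacks, whereas in the OpenNCC sample both stages are configured through the SDK parameter structures described in Part 2.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical types for illustration; not part of the OpenNCC SDK.
struct Detection {
    int label;           // e.g. 1 = vehicle, 2 = license plate
    float conf;          // detection confidence
    int x0, y0, x1, y1;  // box corners in image pixels
};

struct Frame {
    int width;
    int height;
};

using Detector   = std::function<std::vector<Detection>(const Frame&)>;
using Recognizer = std::function<std::string(const Frame&, const Detection&)>;

// Stage 1 detects boxes; stage 2 runs only on boxes of the wanted label whose
// confidence is at least minConf.
std::vector<std::string> runTwoStage(const Frame& frame, const Detector& detect,
                                     const Recognizer& recognize,
                                     int wantedLabel, float minConf) {
    assert(minConf >= 0.0f && minConf <= 1.0f);
    std::vector<std::string> plates;
    for (const Detection& d : detect(frame)) {
        if (d.label != wantedLabel || d.conf < minConf) {
            continue;  // e.g. skip vehicle boxes and low-confidence plates
        }
        plates.push_back(recognize(frame, d));  // second stage on this box only
    }
    return plates;
}
```

For example, with a detector that returns one vehicle box and two plate boxes (at 0.95 and 0.5 confidence), `runTwoStage(frame, det, rec, 2, 0.7f)` calls the recognizer exactly once.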

Now let's see how to deploy the two-stage models to OpenNCC!

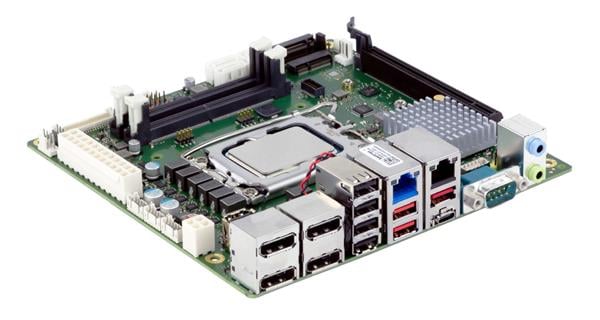

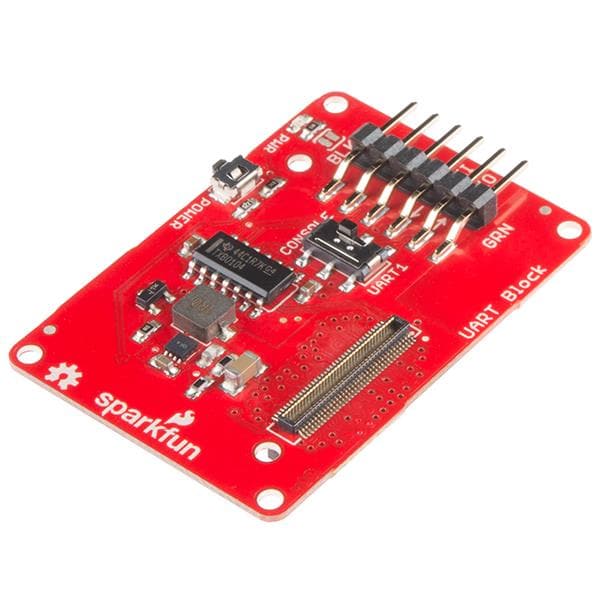

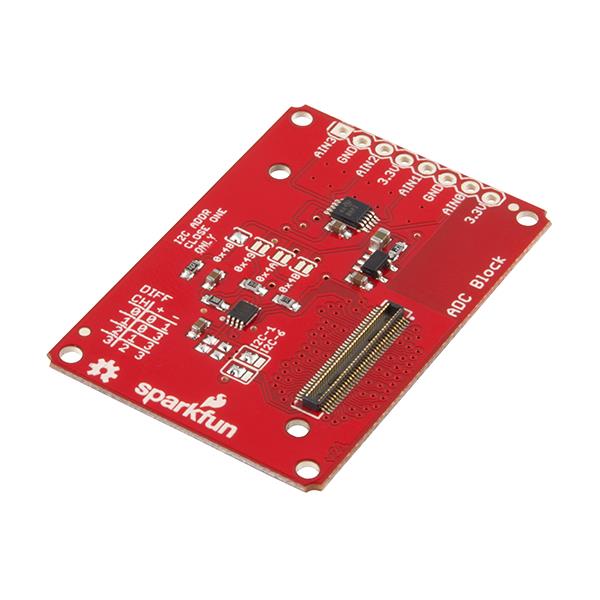

Part 0. Preparation

Hardware: OpenNCC UB4, Raspberry Pi 4B

Software: OpenNCC SDK

Part 1. Configure the Raspberry Pi 4B

Run the following commands on the Raspberry Pi 4B.

- Install libusb, OpenCV, and FFmpeg

$ sudo apt-get install libopencv-dev -y

$ sudo apt-get install libusb-dev -y

$ sudo apt-get install libusb-1.0-0-dev -y

$ sudo apt-get install ffmpeg -y

- Clone the OpenNCC repository

$ git clone https://github.com/EyecloudAi/openncc

- Build the environment (Raspberry Pi)

$ cd openncc/Platform/Linux/RaspberryPi

$ ./build_raspberrypi.sh

$ cd "Example/How_to/OpenNCC/C&C++/Multiple_models"

$ make clone

- Compile and run

$ make all

$ cd ./bin

$ sudo ./Openncc

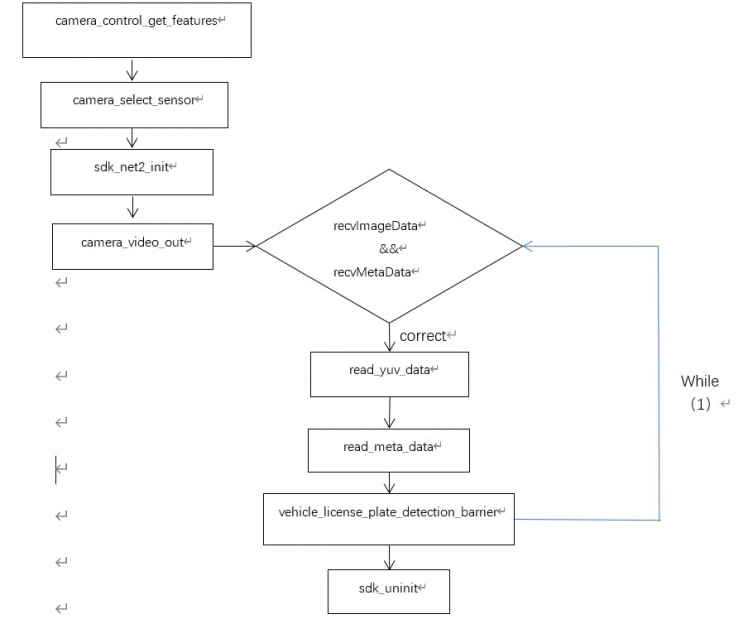

Flow diagram:


Part 2. Interface specification

Parameter structure initialization (main.cpp #Line 45~78)

static Network1Par cnn1PrmSet =

{

imageWidth:-1, imageHeight:-1, /* Dynamic acquisition */

startX:-1, startY:-1, /* Set according to the acquired sensor resolution */

endX:-1, endY: -1, /* Set according to the acquired sensor resolution */

inputDimWidth:-1, inputDimHeight:-1, /* Set according to the acquired model parameters */

inputFormat:IMG_FORMAT_BGR_PLANAR, /* The default input is BGR */

meanValue:{0, 0, 0},

stdValue:1,

isOutputYUV:1, /*Turn on YUV420 output function*/

isOutputH26X:1, /*Turn on H26X encoding function*/

isOutputJPEG:1, /*Turn on the MJPEG encoding function*/

mode:ENCODE_H264_MODE, /* Use H264 encoding format */

extInputs:{0}, /* Model multi-input, the second input parameter */

modelCascade:0, /* The next level model is not cascaded by default */

inferenceACC:0,

};

/* Default parameters of the second level model */

static Network2Par cnn2PrmSet =

{

startXAdj:0,

startYAdj:0,

endXAdj:0,

endYAdj:0,

labelMask:{0}, /* Label mask: a label is processed only if its entry is set to 1 */

minConf: 0.99, /* Only detections with confidence at or above this value are processed */

inputDimWidth:-1, inputDimHeight:-1, /* Set according to the acquired model parameters */

inputFormat:IMG_FORMAT_BGR_PLANAR, /* The default input is BGR */

meanValue:{0, 0, 0},

stdValue:1,

extInputs:{0}, /* Model multi-input, the second input parameter */

modelCascade:0 /* The next level model is not cascaded by default */

};

First, we initialize the SDK in the main function. For a two-stage model, we need to initialize two network parameter structures.

Network1Par configures the first-stage model and is the same structure used for single-stage models. Network2Par configures the second-stage model, and that is the structure we focus on here.

startXAdj, startYAdj, endXAdj, endYAdj (main.cpp #Line 191~200)

/* Based on the detection results of the first level, appropriately fine-tune the detection coordinates of the first level to facilitate recognition */

if(1)

{

/*

* Move the start point (startXAdj, startYAdj) toward the upper left

* Move the end point (endXAdj, endYAdj) toward the lower right */

cnn2PrmSet.startXAdj = -5;

cnn2PrmSet.startYAdj = -5;

cnn2PrmSet.endXAdj = 5;

cnn2PrmSet.endYAdj = 5;

}

These four fields are offsets applied to the corners of the first-stage detection box before that region is cropped as the second-stage input.

The box output by a detection model usually fits the real target tightly, while character recognition needs an image that contains the complete characters. Therefore, when cropping the input for the second stage, we enlarge the crop box slightly.
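A minimal sketch of that expansion, assuming the adjustments are pixel offsets added to the box corners. The `Box` type and the `expandBox` function are hypothetical, and clamping to the image bounds is an assumption added here so that a plate near the frame edge still yields a valid crop; the post does not say how OpenNCC handles that case.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical box type: (x0, y0) is the top-left corner, (x1, y1) the bottom-right.
struct Box {
    int x0, y0, x1, y1;
};

// Keep v inside [lo, hi].
static int clampInt(int v, int lo, int hi) {
    return std::max(lo, std::min(v, hi));
}

// Add startXAdj/startYAdj to the top-left corner and endXAdj/endYAdj to the
// bottom-right corner, then clamp both corners to the image (assumed behaviour).
Box expandBox(const Box& b, int startXAdj, int startYAdj, int endXAdj, int endYAdj,
              int imageWidth, int imageHeight) {
    assert(imageWidth > 0 && imageHeight > 0);
    return Box{
        clampInt(b.x0 + startXAdj, 0, imageWidth - 1),
        clampInt(b.y0 + startYAdj, 0, imageHeight - 1),
        clampInt(b.x1 + endXAdj, 0, imageWidth - 1),
        clampInt(b.y1 + endYAdj, 0, imageHeight - 1),
    };
}
```

With the values used in main.cpp (-5, -5, 5, 5), a plate box from (100, 50) to (200, 80) becomes (95, 45) to (205, 85).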

minConf (main.cpp #Line 202)

cnn2PrmSet.minConf = 0.7; // Confidence threshold: only first-stage detections at or above this value enter the second-stage model

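minConf is the confidence threshold applied to the detection model's output: only detections at or above this value are passed to the second stage. Note that main.cpp lowers it from the structure's default of 0.99 to 0.7.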

labelMask (main.cpp #Line 203, vehicle_license_plate_detection_barrier.cpp #Line 117~126)

cnn2PrmSet.labelMask[2] = 1; // License plate label id = 2, vehicle label id = 1; only handle id = 2

/* Do not display boxes with invalid data or too low probability */

if ((coordinate_is_valid(x0, y0, x1, y1) ==0 )

||(conf<nnParm2->minConf)

||(nnParm2->labelMask[label] == 0))

{

continue;

}

labelMask filters the detection output by class, because a detection model may output more than one type of target. For example, "vehicle-license-plate-detection-barrier-0106" detects both vehicles and license plates at the same time. Only the license plates should be passed to the character recognition model, so the results for the vehicle class need to be excluded.
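The three checks above can be sketched on their own. This assumes the detection output is laid out as seven floats per box, `[image_id, label, conf, x_min, y_min, x_max, y_max]` with coordinates normalized to [0, 1], which is the SSD-style layout the Open Model Zoo documents for vehicle-license-plate-detection-barrier-0106 (verify it against your model version). `PlateBox`, `filterDetections`, and the box-validity test are hypothetical stand-ins for the sample's own code, including `coordinate_is_valid`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type for a detection that passed the filter.
struct PlateBox {
    int label;
    float conf;
    int x0, y0, x1, y1;  // pixel coordinates
};

// Assumed layout: 7 floats per detection,
// [image_id, label, conf, x_min, y_min, x_max, y_max], coordinates in [0, 1].
std::vector<PlateBox> filterDetections(const std::vector<float>& out, int maxDet,
                                       const std::vector<int>& labelMask,
                                       float minConf, int imgW, int imgH) {
    assert(out.size() >= static_cast<std::size_t>(maxDet) * 7);
    std::vector<PlateBox> kept;
    for (int i = 0; i < maxDet; ++i) {
        const float* d = &out[static_cast<std::size_t>(i) * 7];
        if (d[0] < 0) break;  // assumed: a negative image_id ends the valid rows
        const int label = static_cast<int>(d[1]);
        const float conf = d[2];
        const int x0 = static_cast<int>(d[3] * imgW), y0 = static_cast<int>(d[4] * imgH);
        const int x1 = static_cast<int>(d[5] * imgW), y1 = static_cast<int>(d[6] * imgH);
        // Same three checks as the sample: valid box, enough confidence, wanted label.
        const bool validBox = x0 >= 0 && y0 >= 0 && x1 <= imgW && y1 <= imgH &&
                              x0 < x1 && y0 < y1;
        const bool wanted = label >= 0 && label < static_cast<int>(labelMask.size()) &&
                            labelMask[label] == 1;
        if (!validBox || conf < minConf || !wanted) continue;
        kept.push_back({label, conf, x0, y0, x1, y1});
    }
    return kept;
}
```

With `labelMask = {0, 0, 1}` and `minConf = 0.7f`, a vehicle row (label 1) and a plate row at 0.4 confidence are both dropped; only the confident plate row is kept.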

inputDimWidth, inputDimHeight (main.cpp #Line 206~207)

/*name1: "data", shape: [1x3x24x94] - An input image in following format [1xCxHxW]. Expected color order is BGR.*/

cnn2PrmSet.inputDimWidth = 94;

cnn2PrmSet.inputDimHeight = 24;

These two parameters set the size to which the expanded and cropped first-stage detection box is scaled. This is also the input size of the second-stage model, so it must match that model's input shape.

For more information about the model, you can visit:

You can also find this information at the top of license-plate-recognition-barrier-0001.xml.

The input size of the "license-plate-recognition-barrier-0001" model is 24x94 (height x width).
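The following sketch shows what preparing that 1x3x24x94 input from the expanded box amounts to: crop, scale to `inputDimWidth` x `inputDimHeight`, and reorder into the planar BGR layout that `IMG_FORMAT_BGR_PLANAR` refers to. It assumes an interleaved 8-bit BGR source frame and uses nearest-neighbour sampling for simplicity; `cropResizeToPlanar` is hypothetical, and the post does not say which interpolation OpenNCC uses internally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Crop [x0, x1) x [y0, y1) from an interleaved BGR image (HWC, 3 bytes per pixel),
// scale it with nearest-neighbour sampling to dstW x dstH, and write it in planar
// CHW order (all B values, then all G, then all R), i.e. a 1x3xHxW tensor.
std::vector<uint8_t> cropResizeToPlanar(const std::vector<uint8_t>& bgr, int imgW, int imgH,
                                        int x0, int y0, int x1, int y1,
                                        int dstW, int dstH) {
    assert(0 <= x0 && x0 < x1 && x1 <= imgW);
    assert(0 <= y0 && y0 < y1 && y1 <= imgH);
    assert(dstW > 0 && dstH > 0);
    assert(bgr.size() == static_cast<std::size_t>(imgW) * imgH * 3);
    const int cropW = x1 - x0, cropH = y1 - y0;
    std::vector<uint8_t> planar(static_cast<std::size_t>(3) * dstW * dstH);
    for (int y = 0; y < dstH; ++y) {
        const int sy = y0 + y * cropH / dstH;  // nearest source row
        for (int x = 0; x < dstW; ++x) {
            const int sx = x0 + x * cropW / dstW;  // nearest source column
            const std::size_t src = (static_cast<std::size_t>(sy) * imgW + sx) * 3;
            for (int c = 0; c < 3; ++c) {  // c = 0: B, 1: G, 2: R
                planar[(static_cast<std::size_t>(c) * dstH + y) * dstW + x] = bgr[src + c];
            }
        }
    }
    return planar;
}
```

For the recognition model the call would be `cropResizeToPlanar(frame, w, h, x0, y0, x1, y1, 94, 24)`, producing 3 x 24 x 94 = 6768 bytes.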

After completing the initialization configuration, let's see how to obtain and process the results.

regMetadata (vehicle_license_plate_detection_barrier.cpp #Line 140~147)

// Get the second-stage model's output (metadata) for the i-th plate

uint16_t* regMetadata = (uint16_t*)(firstOutput + secondModelOutputSize * i);

for(int j=0;j<sizeof(regRet)/sizeof(regRet[0]);j++)

{

regRet[j]= (int) f16Tof32(regMetadata[j]);

}

This code computes the address of the second-stage output for the i-th detected plate and converts each FP16 value to an integer. Each value is the label of the character at one position on the plate.
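A sketch of that step under stated assumptions: `firstOutput` is treated as a byte pointer, `secondModelOutputSize` as a size in bytes per plate, and the values as IEEE 754 half-precision numbers stored in host byte order. `halfToFloat` is a hypothetical stand-in for the SDK's `f16Tof32`, and `readPlateLabels` is hypothetical as well; `memcpy` is used instead of a pointer cast to avoid unaligned access.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Convert one IEEE 754 binary16 value to float (stand-in for f16Tof32).
float halfToFloat(uint16_t h) {
    const int sign = (h >> 15) & 0x1;
    const int exp = (h >> 10) & 0x1F;
    const int mant = h & 0x3FF;
    float value;
    if (exp == 0) {
        value = std::ldexp(static_cast<float>(mant), -24);              // zero or subnormal
    } else if (exp == 31) {
        value = mant ? NAN : INFINITY;                                  // NaN or infinity
    } else {
        value = std::ldexp(static_cast<float>(mant + 1024), exp - 25);  // normal number
    }
    return sign ? -value : value;
}

// Read `count` FP16 labels for plate i from a buffer holding one block of
// secondModelOutputSize bytes per plate (assumed layout) and convert them to int.
std::vector<int> readPlateLabels(const uint8_t* firstOutput, std::size_t secondModelOutputSize,
                                 int i, std::size_t count) {
    assert(i >= 0 && count * sizeof(uint16_t) <= secondModelOutputSize);
    const uint8_t* base = firstOutput + secondModelOutputSize * static_cast<std::size_t>(i);
    std::vector<int> labels(count);
    for (std::size_t j = 0; j < count; ++j) {
        uint16_t raw;
        std::memcpy(&raw, base + j * sizeof(raw), sizeof(raw));
        labels[j] = static_cast<int>(halfToFloat(raw));
    }
    return labels;
}
```

For instance, the half-precision bit patterns 0x4900, 0x3C00, and 0xBC00 decode to 10, 1, and -1, so a plate whose labels start with 0x4900 begins with table entry 10, `<Anhui>`.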

Look up each label in the character table below and concatenate the characters in order; the result is the final recognized plate text.

char items[][20] = {

"0", "1", "2", "3", "4", "5", "6", "7", "8", "9",

"<Anhui>", "<Beijing>", "<Chongqing>", "<Fujian>",

"<Gansu>", "<Guangdong>", "<Guangxi>", "<Guizhou>",

"<Hainan>", "<Hebei>", "<Heilongjiang>", "<Henan>",

"<HongKong>", "<Hubei>", "<Hunan>", "<InnerMongolia>",

"<Jiangsu>", "<Jiangxi>", "<Jilin>", "<Liaoning>",

"<Macau>", "<Ningxia>", "<Qinghai>", "<Shaanxi>",

"<Shandong>", "<Shanghai>", "<Shanxi>", "<Sichuan>",

"<Tianjin>", "<Tibet>", "<Xinjiang>", "<Yunnan>",

"<Zhejiang>", "<police>",

"A", "B", "C", "D", "E", "F", "G", "H", "I", "J",

"K", "L", "M", "N", "O", "P", "Q", "R", "S", "T",

"U", "V", "W", "X", "Y", "Z"

};

char result[256]={0};

for(int j=0;j<sizeof(regRet)/sizeof(regRet[0]);j++)

{

if (regRet[j] == -1)

break;

strcat(result, items[(int)regRet[j]]);

}
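The loop above trusts every label to be a valid index into `items` and appends into a fixed-size buffer. A slightly safer variant, sketched here with `std::string` and a bounds check, stops at the `-1` end marker or at any out-of-range label instead of indexing past the table. `decodePlate` is a hypothetical helper, not part of the sample.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Concatenate the table entries for each label, stopping at the -1 end marker
// or at any label that is not a valid index into `items`.
std::string decodePlate(const std::vector<int>& labels, const std::vector<std::string>& items) {
    std::string result;
    for (int label : labels) {
        if (label < 0 || label >= static_cast<int>(items.size())) {
            break;  // -1 marks the end; anything else out of range is treated the same way
        }
        result += items[label];
    }
    return result;
}
```

With a table that starts `"0"` ... `"9"`, `"<Anhui>"`, `"<Beijing>"`, the labels `{11, 1, 2, 3, -1}` decode to `<Beijing>123`.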

Running results:

ongoing vehicle license plate recognition


static vehicle license plate recognition

