Automating Fruit Harvesting And Sorting: Educational Case

About the project

Automating Fruit Harvesting and Sorting: A Fascinating Educational Case Study with 2 Robotic Arms

Project info

Difficulty: Moderate

Platforms: ROS, M5Stack, OpenCV, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

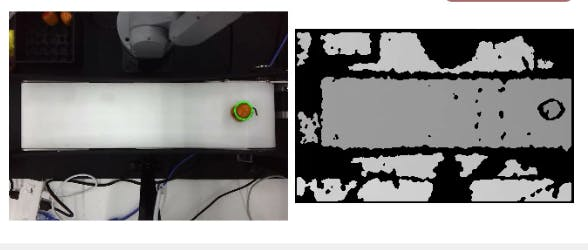

Story

Robotics is one of the fastest-moving fields in technology, and demand for skilled robotics professionals is growing with it. To help meet that demand, Elephant Robotics has developed a robotics education solution for colleges and universities. It combines a simulated automated fruit picker with a compound robot that automates fruit sorting and delivery, giving students hands-on experience with picking, transport, and sorting in a single setup.

In this article, we describe the fruit harvesting and sorting scenario in detail. We start with an introduction to the kit and how it is used, and then explain how the robotic arms' functions are implemented.

Fruit-Picker Kit

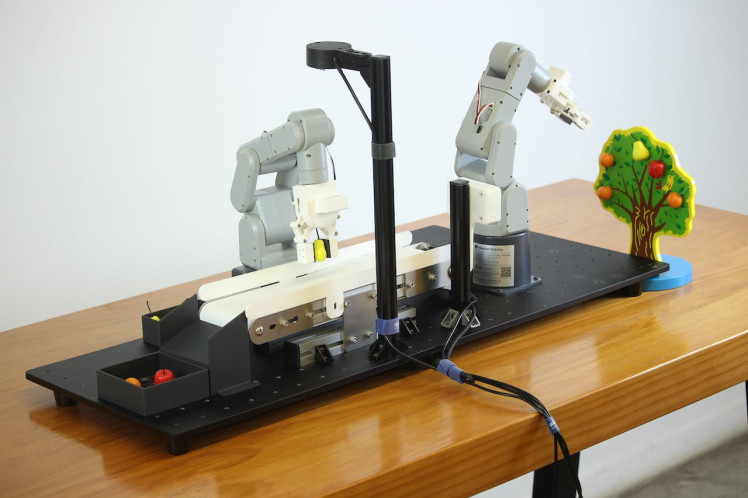

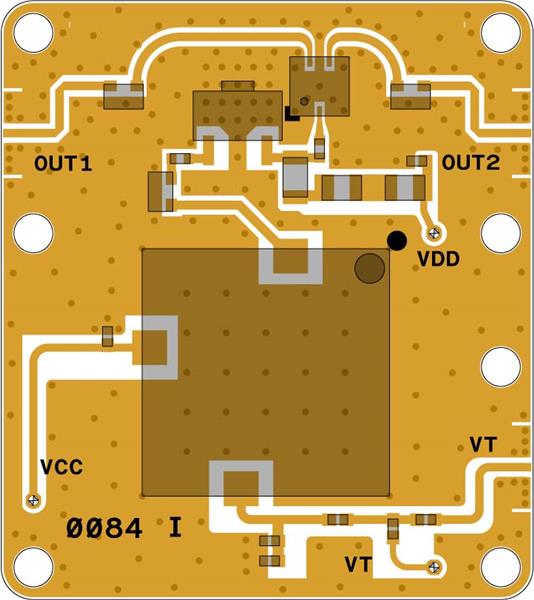

The pictures above show the Fruit-Picker Kit, which consists of the components described below.

How does the kit operate? It uses two robotic arms with distinct functions. The arm closest to the fruit tree is the fruit-picking robot, referred to below as R1. The arm in the middle is the sorting robot, referred to as R2. R1 picks fruit from the tree and places it on the conveyor belt, while R2 removes substandard fruit from the conveyor belt.

Steps:

Harvesting: A depth camera identifies the fruits on the tree and locates them. The coordinates of each fruit are sent to R1 for picking.

Transportation: R1 places the picked fruit on the conveyor belt, which carries it into the range where R2's camera can assess its quality.

Sorting: The camera above R2 recognizes the fruit in its field of view and uses an algorithm to determine its quality. If the fruit is judged good, the conveyor belt carries it on to the fruit collection area. If it is judged bad, its coordinates are sent to R2, which grabs it and places it in a separate area.

This process repeats continuously:

Harvesting -> Transportation -> Sorting -> Harvesting -> Transportation ...
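The loop above can be sketched as a toy simulation. This is only an illustration under stated assumptions: the function name `run_cycle`, the `(name, is_good)` tuples, and the list-based areas are hypothetical and are not part of the kit's software.

```python
from collections import deque


def run_cycle(fruits_on_tree):
    """Simulate Harvesting -> Transportation -> Sorting for each fruit.

    `fruits_on_tree` is a list of (name, is_good) tuples; both the tuple
    layout and this function are hypothetical, for illustration only.
    """
    conveyor = deque()      # fruit travelling from R1 to R2
    collection_area = []    # good fruit carried to the end of the belt
    reject_area = []        # bad fruit removed by R2

    for fruit in fruits_on_tree:
        # Harvesting: R1 picks the fruit and places it on the belt.
        conveyor.append(fruit)
        # Transportation: the belt carries the fruit into R2's view.
        name, is_good = conveyor.popleft()
        # Sorting: good fruit stays on the belt, bad fruit is removed by R2.
        if is_good:
            collection_area.append(name)
        else:
            reject_area.append(name)

    return collection_area, reject_area


collected, rejected = run_cycle(
    [("apple", True), ("orange", False), ("apple", True)]
)
print(collected, rejected)
```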

Product

Robotic Arm - mechArm 270 M5Stack

This is a compact six-axis robotic arm with a centrally symmetrical structure (mimicking an industrial arm), controlled by an M5Stack-Basic at its core and assisted by an ESP32. The mechArm 270-M5 weighs 1 kg, carries a payload of 250 g, and has a working radius of 270 mm. It is designed to be portable, small but highly functional, simple to operate, and able to work safely alongside people.

Conveyor belt

A conveyor belt is a mechanical device for transporting items, typically made up of a belt and one or more rollers. It can carry items such as packages, boxes, food, minerals, and building materials. An item is placed on the moving belt, which carries it to the target location. A conveyor typically consists of a motor, a transmission system, a belt, and a support structure: the motor provides power, and the transmission system transfers that power to the belt to make it move.

Conveyor belts on the market can be customized to the user's needs, including the length, width, and height of the conveyor and the material of the track.

3D Depth camera

Due to the diversity of usage scenarios, ordinary 2D cameras fail to meet our requirements. In our scenario, we use a depth camera. A depth camera is a type of camera that can obtain depth information of a scene. It not only captures the color and brightness information of a scene, but also perceives the distance and depth information between objects. Depth cameras usually use infrared or other light sources for measurement to obtain the depth information of objects and scenes.

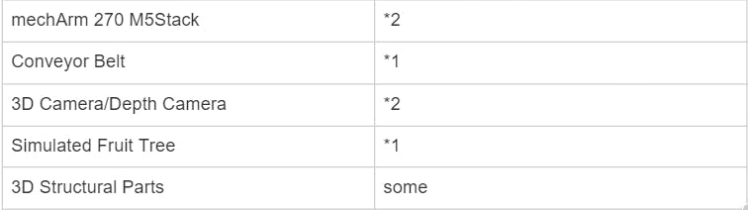

The depth camera can capture various types of information such as depth images, color images, infrared images, and point cloud images.

With the aid of the depth camera, we can accurately obtain the position of the fruit on the tree as well as its color information.

Adaptive gripper - robotic arm end effector

The adaptive gripper is an end effector used to grasp, grip, or hold objects. It consists of two movable claws that can be controlled by the robotic arm's control system to adjust their opening and closing degree and speed.

Project functions

First, we need to set up the development environment. The project is written in Python, so the following packages must be installed:

    numpy==1.24.3
    opencv-contrib-python==4.6.0.66
    openni==2.3.0
    pymycobot==3.1.2
    PyQt5==5.15.9
    PyQt5-Qt5==5.15.2
    PyQt5-sip==12.12.1
    pyserial==3.5

We begin with the machine vision recognition algorithm. Overall, the project's functionality can be divided into three main parts:

- Machine vision recognition algorithm, including depth recognition algorithm

- Robotic arm control and trajectory planning

- Communication and logical processing among multiple machines

Camera calibration is required before using the depth camera. Here is a tutorial link.

Camera calibration:

Camera calibration refers to the process of determining the internal and external parameters of a camera through a series of measurements and calculations. The internal parameters of the camera include focal length, principal point position, and pixel spacing, while the external parameters of the camera include its position and orientation in the world coordinate system. The purpose of camera calibration is to enable the camera to accurately capture and record information about the position, size, and shape of objects in the world coordinate system.
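For reference, the internal parameters enter the standard pinhole camera model as follows, where $(X, Y, Z)$ is a point in the camera frame, $(u, v)$ is its pixel position, $f_x$ and $f_y$ are the focal lengths in pixels, and $(c_x, c_y)$ is the principal point. This is the textbook model with lens distortion ignored, not something specific to this kit.

```latex
u = f_x \frac{X}{Z} + c_x, \qquad v = f_y \frac{Y}{Z} + c_y
```

Calibration estimates $f_x$, $f_y$, $c_x$, and $c_y$ so that this relationship can later be inverted to recover a 3D position from a pixel and its measured depth.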

Our target objects are fruits, which come in different shapes and in colors ranging from red and orange to yellow. To grasp them accurately and safely, we need comprehensive information about each fruit, including its width, thickness, and other characteristics.

Let us examine the target fruits. Currently, the most prominent difference between them is color, so we select targets by hue (for example, red and orange) using the HSV color space. The following code detects the target fruits.

Code:

    from enum import Enum

    import cv2
    import numpy as np

    # TargetBucket, color_detect, circle_detect, BGR_GREEN and BGR_RED are
    # defined elsewhere in the project and are not shown here.


    class Detector:
        """
        Detection and identification class
        """

        class FetchType(Enum):
            FETCH = False
            FETCH_ALL = True

        # (lower, upper) HSV bounds for each color
        HSV_DIST = {
            # "redA": (np.array([0, 120, 50]), np.array([3, 255, 255])),
            # "redB": (np.array([176, 120, 50]), np.array([179, 255, 255])),
            "redA": (np.array([0, 120, 50]), np.array([3, 255, 255])),
            "redB": (np.array([118, 120, 50]), np.array([179, 255, 255])),
            # "orange": (np.array([10, 120, 120]), np.array([15, 255, 255])),
            "orange": (np.array([8, 150, 150]), np.array([20, 255, 255])),
            "yellow": (np.array([28, 100, 150]), np.array([35, 255, 255])),  # old
            # "yellow": (np.array([31, 246, 227]), np.array([35, 255, 255])),  # new
        }

        default_hough_params = {
            "method": cv2.HOUGH_GRADIENT_ALT,
            "dp": 1.5,
            "minDist": 20,
            "param2": 0.6,
            "minRadius": 15,
            "maxRadius": 40,
        }

        def __init__(self, target):
            self.bucket = TargetBucket()
            self.detect_target = target

        def get_target(self):
            return self.detect_target

        def set_target(self, target):
            if self.detect_target == target:
                return
            self.detect_target = target
            # each fruit type gets its own bucket settings
            if target == "apple":
                self.bucket = TargetBucket(adj_tolerance=25, expire_time=0.2)
            elif target == "orange":
                self.bucket = TargetBucket()
            elif target == "pear":
                self.bucket = TargetBucket(adj_tolerance=35)

        def detect(self, rgb_data):
            # dispatch to the detector for the currently selected fruit
            if self.detect_target == "apple":
                self.__detect_apple(rgb_data)
            elif self.detect_target == "orange":
                self.__detect_orange(rgb_data)
            elif self.detect_target == "pear":
                self.__detect_pear(rgb_data)

        def __detect_apple(self, rgb_data):
            # red is covered by two HSV ranges, so combine both masks
            maskA = color_detect(rgb_data, *self.HSV_DIST["redA"])
            maskB = color_detect(rgb_data, *self.HSV_DIST["redB"])
            mask = maskA + maskB

            kernelA = cv2.getStructuringElement(cv2.MORPH_RECT, (8, 8))
            kernelB = cv2.getStructuringElement(cv2.MORPH_RECT, (2, 2))  # not used here
            # erode then dilate (morphological opening) to remove small specks
            mask = cv2.erode(mask, kernelA)
            mask = cv2.dilate(mask, kernelA)

            targets = circle_detect(
                mask, {"minDist": 15, "param2": 0.5,
                       "minRadius": 10, "maxRadius": 50}
            )
            self.bucket.add_all(targets)
            self.bucket.update()

        def __detect_orange(self, rgb_data):
            mask = color_detect(rgb_data, *self.HSV_DIST["orange"])
            targets = circle_detect(
                mask, {"minDist": 15, "param2": 0.1,
                       "minRadius": 7, "maxRadius": 30}
            )
            self.bucket.add_all(targets)
            self.bucket.update()

        def __detect_pear(self, rgb_data):
            mask = color_detect(rgb_data, *self.HSV_DIST["yellow"])

            kernelA = cv2.getStructuringElement(cv2.MORPH_RECT, (25, 25))
            kernelB = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))
            # opening with the large kernel, then a light extra erosion
            mask = cv2.erode(mask, kernelA)
            mask = cv2.dilate(mask, kernelA)
            mask = cv2.erode(mask, kernelB)

            targets = circle_detect(
                mask, {"minDist": 15, "param2": 0.1,
                       "minRadius": 15, "maxRadius": 70}
            )
            self.bucket.add_all(targets)
            self.bucket.update()

        def fetch(self):
            return self.bucket.fetch()

        def fetch_all(self):
            return self.bucket.fetch_all()

        def debug_view(self, bgr_data, view_all=True):
            if view_all:
                targets = self.bucket.fetch_all()
            else:
                # fetch() returns a single target (or None); wrap it in a list
                targets = self.bucket.fetch()
                if targets is not None:
                    targets = [targets]

            if targets is not None:
                for target in targets:
                    x, y, radius = target["x"], target["y"], target["radius"]
                    # draw outline
                    cv2.circle(bgr_data, (x, y), radius, BGR_GREEN, 2)
                    # draw circle center
                    cv2.circle(bgr_data, (x, y), 1, BGR_RED, -1)

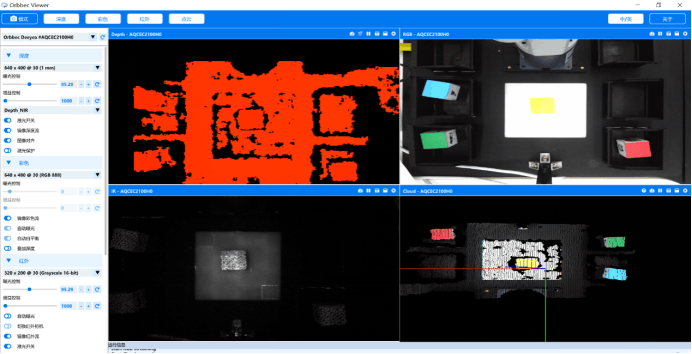

Color streaming video on the left, depth video on the right
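To see how HSV thresholds like those in `HSV_DIST` separate the fruits, here is a minimal standard-library sketch that classifies a single RGB color. It assumes OpenCV's 8-bit HSV convention (hue 0 to 179, saturation and value 0 to 255). The helper names `rgb_to_opencv_hsv` and `classify_color` are hypothetical, and only three of the ranges are copied from the code above.

```python
import colorsys

# Ranges copied from HSV_DIST above (OpenCV scale: H 0..179, S and V 0..255).
# "redB" is left out to keep the sketch short.
HSV_RANGES = {
    "redA": ((0, 120, 50), (3, 255, 255)),
    "orange": ((8, 150, 150), (20, 255, 255)),
    "yellow": ((28, 100, 150), (35, 255, 255)),
}


def rgb_to_opencv_hsv(r, g, b):
    """Convert 8-bit RGB to HSV on OpenCV's 8-bit scale (hypothetical helper)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def classify_color(r, g, b):
    """Return the name of the first range containing the color, or None."""
    hsv = rgb_to_opencv_hsv(r, g, b)
    for name, (lower, upper) in HSV_RANGES.items():
        if all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper)):
            return name
    return None


print(classify_color(255, 0, 0))      # pure red
print(classify_color(255, 128, 0))    # orange
print(classify_color(255, 255, 0))    # yellow
print(classify_color(0, 0, 255))      # blue, outside all three ranges
```

The project code applies the same per-channel bounds test to every pixel of a frame to produce a mask, then looks for circles in that mask.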

The first step is to accurately detect the target fruits in order to obtain their coordinates, depth, and other relevant information. We will define the necessary information to be gathered and store it for later use and retrieval.

Code:

    import threading

    # VideoStreamPipe is defined elsewhere in the project and is not shown here.


    class VideoCaptureThread(threading.Thread):
        def __init__(self, detector, detect_type=Detector.FetchType.FETCH_ALL.value):
            threading.Thread.__init__(self)
            self.vp = VideoStreamPipe()
            self.detector = detector
            self.finished = True
            # detected targets in camera coordinates and in real-world coordinates
            self.camera_coord_list = []
            self.old_real_coord_list = []
            self.real_coord_list = []
            self.new_color_frame = None
            self.fruit_type = detector.detect_target
            self.detect_type = detect_type
            # frames kept for display
            self.rgb_show = None
            self.depth_show = None

Ultimately, our goal is to obtain the world coordinates of the fruits, which can be sent to the robotic arm to execute the grasp. Converting the depth coordinates into world coordinates gets us halfway there. After that, we only need to transform the world coordinates into the robotic arm's coordinate system to obtain the grasping coordinates of the target fruit.

    # get world coordinate
    def convert_depth_to_world(self, x, y, z):
        # x, y: pixel coordinates; z: depth value
        # camera intrinsics: focal lengths and principal point, in pixels
        fx = 454.367
        fy = 454.367
        cx = 313.847
        cy = 239.89

        # scale the depth by 1/1000, back-project, then scale back by 1000
        ratio = float(z / 1000)
        world_x = float((x - cx) * ratio) / fx
        world_x = world_x * 1000
        world_y = float((y - cy) * ratio) / fy
        world_y = world_y * 1000
        world_z = float(z)
        return world_x, world_y, world_z

We have now detected the target and obtained its graspable coordinates, which can be sent to the robotic arm. The next step is the control and trajectory planning of the robotic arm.
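As a quick check of the depth-to-world conversion above, the following standalone sketch reproduces the same arithmetic outside the class and adds the inverse mapping. The intrinsics are the values from the code above; the function names `pixel_to_world` and `world_to_pixel` are hypothetical, and the depth is assumed to be in millimetres.

```python
FX, FY = 454.367, 454.367   # focal lengths in pixels (from the code above)
CX, CY = 313.847, 239.89    # principal point in pixels (from the code above)


def pixel_to_world(x, y, z_mm):
    """Back-project pixel (x, y) with depth z_mm into camera-frame coordinates."""
    world_x = (x - CX) * z_mm / FX
    world_y = (y - CY) * z_mm / FY
    return world_x, world_y, float(z_mm)


def world_to_pixel(world_x, world_y, world_z):
    """Inverse mapping: project a camera-frame point back to pixel coordinates."""
    return world_x * FX / world_z + CX, world_y * FY / world_z + CY


wx, wy, wz = pixel_to_world(400, 300, 500)
print(round(wx, 1), round(wy, 1), wz)
print(world_to_pixel(wx, wy, wz))
```

A pixel at the principal point maps to x = y = 0, and the lateral offset grows linearly with depth, which is why an accurate depth reading matters as much as an accurate pixel position.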

Robotic arm control and trajectory planning

Making a robotic arm follow a desired trajectory can seem difficult, but the mechArm 270 is supported by pymycobot, a relatively mature robotic arm control library. With just a few lines of code, we can control the robotic arm.

(Note: pymycobot is a Python library for controlling robotic arm movement. This project uses pymycobot==3.1.2.)

    # Two commonly used control methods.
    # Both are methods of the pymycobot robot object.

    '''
    Send the degrees of all joints to the robot arm.
    angle_list_degrees: a list of degree values (List[float]), length 6
    speed: (int) 0 ~ 100, robotic arm's speed
    '''
    send_angles(angle_list_degrees, speed)
    send_angles([10, 20, 30, 45, 60, 0], 100)

    '''
    Send the coordinates to the robot arm.
    coords: a list of coordinate values (List[float]), length 6
    speed: (int) 0 ~ 100, robotic arm's speed
    '''
    send_coords(coords, speed)
    send_coords([125, 45, 78, -120, 77, 90], 50)

We can send the trajectory to the robotic arm for execution in either angle or coordinate format.

Harvesting Robot Trajectory Planning

After implementing basic control, our next step is to design the trajectory planning for the robotic arm to grasp the fruits. In the recognition algorithm, we obtained the world coordinates of the fruits. We will process these coordinates and convert them into the coordinate system of the robotic arm for targeted grasping.
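The conversion from camera-frame coordinates to the arm's base frame is typically a rigid transform (a rotation plus a translation). The sketch below shows the idea with a made-up rotation and offset; `R_CAM_TO_ARM`, `T_CAM_TO_ARM`, and `camera_to_arm` are hypothetical names, and real values would come from measuring or calibrating how the camera is mounted relative to the arm. It does not reproduce the project's own conversion, which is not shown in this article.

```python
# Hypothetical extrinsics: the camera looks along the arm's +x axis,
# camera x (right) maps to the arm's -y, camera y (down) maps to the arm's -z.
R_CAM_TO_ARM = [
    [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0],
]
T_CAM_TO_ARM = [50.0, 0.0, 120.0]  # camera origin in the arm frame, mm (made up)


def camera_to_arm(point_cam):
    """Apply p_arm = R * p_cam + t to a 3-element point given in mm."""
    return [
        sum(R_CAM_TO_ARM[row][col] * point_cam[col] for col in range(3))
        + T_CAM_TO_ARM[row]
        for row in range(3)
    ]


# A fruit 300 mm in front of the camera, 20 mm right of and 10 mm below its axis.
print(camera_to_arm([20.0, 10.0, 300.0]))
```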

    # deal with fruit world coordinates
    def target_coords(self):
        # read the current pose, retrying until the arm returns a non-empty reading
        coord = self.ma.get_coords()
        while len(coord) == 0:
            coord = self.ma.get_coords()

        # overwrite x, y, z with the target position; keep the current orientation
        target = self.model_track()
        coord[:3] = target.copy()
        self.end_coords = coord[:3]
        if DEBUG:
            print("coord: ", coord)
            print("self.end_coords: ", self.end_coords)
        self.end_coords = coord
        return coord

With the target coordinates in hand, we can now plan the trajectory of the robotic arm. During motion, the arm must not collide with any other structure or knock down any fruit.

When planning the trajectory, we can consider the following aspects:

● Initial posture

● Desired grasping posture

● Obstacle avoidance posture

We should set up various postures according to the specific requirements of the scene.
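One simple way to combine these postures is a fixed waypoint sequence: the initial pose, a pre-grasp pose backed off from the fruit, the grasp pose, a retreat, and the drop-off. The sketch below only builds that list. The function name, the 40 mm approach offset, and the example poses are assumptions for illustration, and each waypoint would still need to be checked against the real scene before being sent to the arm.

```python
def plan_pick_waypoints(start_pose, grasp_pose, drop_pose, approach_offset=40.0):
    """Return an ordered list of (name, [x, y, z, rx, ry, rz]) waypoints.

    Hypothetical helper: the pre-grasp pose is the grasp pose pulled back
    along x by `approach_offset` mm, so the gripper approaches the fruit
    head-on instead of sweeping through neighbouring fruit.
    """
    pre_grasp = list(grasp_pose)
    pre_grasp[0] -= approach_offset
    return [
        ("initial", list(start_pose)),
        ("pre_grasp", pre_grasp),          # obstacle avoidance posture
        ("grasp", list(grasp_pose)),       # desired grasping posture
        ("retreat", list(pre_grasp)),      # back out the way we came in
        ("drop", list(drop_pose)),
    ]


waypoints = plan_pick_waypoints(
    start_pose=[150.0, 0.0, 200.0, -90.0, 0.0, -90.0],
    grasp_pose=[220.0, 30.0, 180.0, -90.0, 0.0, -90.0],
    drop_pose=[100.0, -150.0, 120.0, -90.0, 0.0, -90.0],
)
for name, pose in waypoints:
    print(name, pose)
```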

Sorting robot trajectory planning

The previous section covered the harvesting robot; we now turn to trajectory planning for the sorting robot. In practice, the two are quite similar. The sorting robot's targets are the fruits on the conveyor belt: a depth camera captures the coordinates of any spoiled fruit on the belt, and the arm removes it.

The control and trajectory planning of both the harvesting robot and the sorting robot have now been covered. Next comes a crucial part of the setup: communication between the two robotic arms. How do they communicate effectively, avoid deadlock, and operate smoothly together with the conveyor belt? That is what we discuss next.

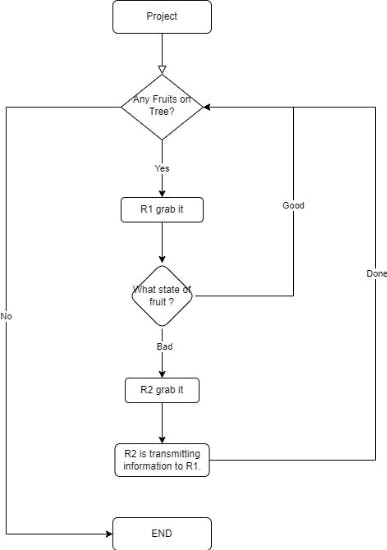

Communication and logical processing among multiple machines

Without coherent coordination logic, the two robotic arms would interfere with each other. Let us examine the flowchart of the entire program.

When R2 detects a spoiled fruit, R1's robotic arm is temporarily halted. After R2 completes its sorting task, it transmits a signal to R1, enabling it to resume harvesting operations.
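This pause-and-resume rule can be modelled with a single flag. The following sketch uses `threading.Event` inside one process purely to illustrate the logic; in the kit the two arms exchange messages over sockets, and the class and method names here are hypothetical.

```python
import threading


class HarvestGate:
    """Gate that R1 checks before each pick (hypothetical illustration)."""

    def __init__(self):
        self._may_harvest = threading.Event()
        self._may_harvest.set()          # R1 may harvest by default

    def spoiled_fruit_detected(self):
        """Called on R2's side: halt R1 while the bad fruit is removed."""
        self._may_harvest.clear()

    def sorting_finished(self):
        """Called on R2's side: let R1 resume harvesting."""
        self._may_harvest.set()

    def wait_until_allowed(self, timeout=None):
        """Called on R1's side before picking; returns False on timeout."""
        return self._may_harvest.wait(timeout)


gate = HarvestGate()
first = gate.wait_until_allowed(timeout=0.01)    # R1 may pick
gate.spoiled_fruit_detected()
second = gate.wait_until_allowed(timeout=0.01)   # R1 is held back
gate.sorting_finished()
third = gate.wait_until_allowed(timeout=0.01)    # R1 resumes
print(first, second, third)
```

Waiting with a timeout, rather than indefinitely, is one way to keep R1 from blocking forever if a resume signal is lost.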

Socket

Since the arms must communicate, we use the socket library. socket is a Python standard library module widely used in network programming. It provides an API for network communication that makes it straightforward to transfer data between computers. To let R1 and R2 communicate, we set up a server and a client.

The following code sets up and initializes the server.

Code:

    import socket
    import threading


    class TcpServer(threading.Thread):
        def __init__(self, server_address) -> None:
            threading.Thread.__init__(self)
            # create a TCP socket over IPv4, bind it, and wait for one client
            self.s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
            self.s.bind(server_address)
            print("server Binding succeeded!")
            self.s.listen(1)
            self.connected_obj = None

            # message strings exchanged between the two arms
            self.good_fruit_str = "apple"
            self.bad_fruit_str = "orange"
            self.invalid_fruit_str = "none"

            self.target = self.invalid_fruit_str
            self.target_copy = self.target
            self.start_move = False

Here is the client.

Code:

    class TcpClient(threading.Thread):
        def __init__(self, host, port, max_fruit=8, recv_interval=0.1, recv_timeout=30):
            threading.Thread.__init__(self)

            # message strings exchanged between the two arms
            self.good_fruit_str = "apple"
            self.bad_fruit_str = "orange"
            self.invalid_fruit_str = "none"

            self.host = host
            self.port = port
            # Initializing the TCP socket object
            # specifying the usage of the IPv4 address family
            # designating the use of the TCP stream transmission protocol
            self.client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
            self.client_socket.connect((self.host, self.port))

            self.current_extracted = 0
            self.max_fruit = max_fruit
            self.recv_interval = recv_interval
            self.recv_timeout = recv_timeout

            self.response_copy = self.invalid_fruit_str
            self.action_ready = True

Now that the server and client have been established, R1 and R2 can communicate much as we do through text messages.

R1: "I am currently harvesting fruits."

R2: "Message received, over."
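A minimal exchange over TCP might look like the sketch below. It runs both ends in one process on the loopback interface so it can be tried without the robots. The message string follows the `bad_fruit_str` value above, while the function names, the `ack:` reply, and the one-message-per-connection framing are assumptions rather than the project's actual protocol.

```python
import socket
import threading


def serve_once(server_socket, received):
    """Accept one connection, read one message, and acknowledge it."""
    conn, _ = server_socket.accept()
    with conn:
        message = conn.recv(1024).decode("utf-8")
        received.append(message)
        conn.sendall(("ack:" + message).encode("utf-8"))


def send_message(host, port, message):
    """Connect, send one message, and return the server's reply."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(message.encode("utf-8"))
        return client.recv(1024).decode("utf-8")


server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))       # port 0 lets the OS pick a free port
server.listen(1)
host, port = server.getsockname()

received = []
worker = threading.Thread(target=serve_once, args=(server, received))
worker.start()

reply = send_message(host, port, "orange")   # "orange" marks a bad fruit above
worker.join()
server.close()
print(received, reply)
```

TCP is a byte stream, so a longer-running link between the arms would also need a way to mark message boundaries, such as a newline terminator or a length prefix.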

Technical points

Throughout this project, effective communication and logical processing between multiple robotic arms are of utmost importance. There are several ways to establish communication between two robotic arms, such as serial ports, Ethernet over a LAN, or Bluetooth.

In our project, we use Ethernet communication over TCP/IP, implemented with Python's socket library. Just as a house needs a strong foundation, a project needs a solid framework from the start. It is also essential to understand the principles of robotic arm control: how to convert the detected target into world coordinates, and then transform those into target coordinates in the robotic arm's reference frame.

Summary

These fruit harvesting and sorting robots help students better understand the principles of mechanics and electronic control technology, and foster their interest in and passion for science and technology. They also provide an opportunity for practical training and competitive thinking.

Learning about robotic arms requires practical operation, and this application provides a practical opportunity that allows students to deepen their understanding and knowledge of robotic arms through actual operation. Moreover, this application can help students learn and master technologies such as robotic arm motion control, visual recognition, and object gripping, thereby helping them better understand the relevant knowledge and skills of robotic arms.

Furthermore, this application can also help students develop their teamwork, innovative thinking, and competition thinking abilities, laying a solid foundation for their future career development. Through participating in robot competitions, technology exhibitions, and other activities, students can continuously improve their competition level and innovative ability, making them better equipped to adapt to future social development and technological changes.

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robotics, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, the home, and other fields.
