Virtual Dad - The Parent You Never Knew You Didn't Want.

About the project

Feeling down in the dumps? Need a bit of a laugh? Or a groan? Dads are the best. Always there to make you smile with a terrible joke or some completely irrelevant advice. The Dad Bot is your surrogate father. Terrible jokes? Check! Bad advice? You betcha! He's here to cheer you up, even if you don't want to be...

Project info

Difficulty: Moderate

Platforms: Google, Raspberry Pi

Estimated time: 4 hours

License: GNU General Public License, version 3 or later (GPL3+)

Items used in this project

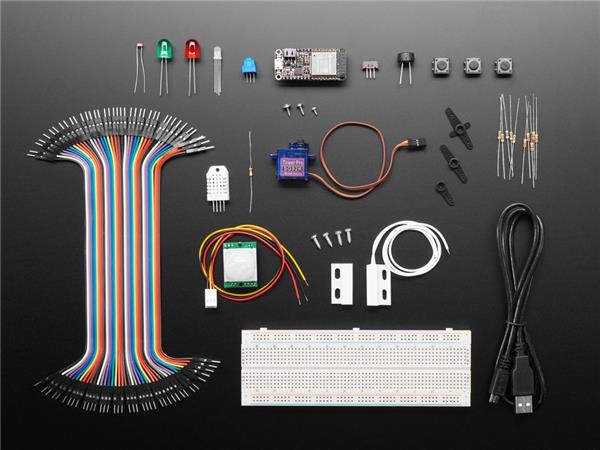

Hardware components

Hand tools and fabrication machines

Story

Background

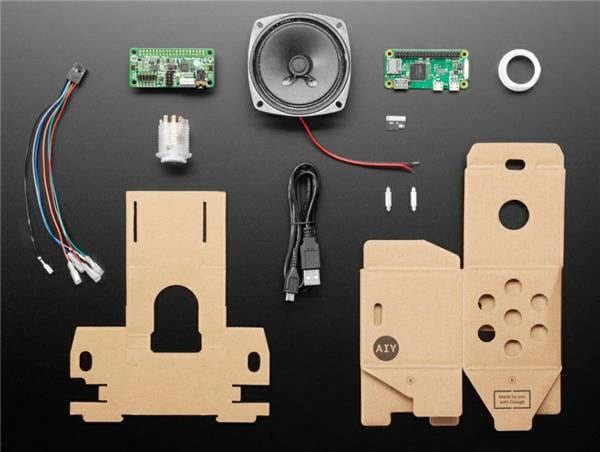

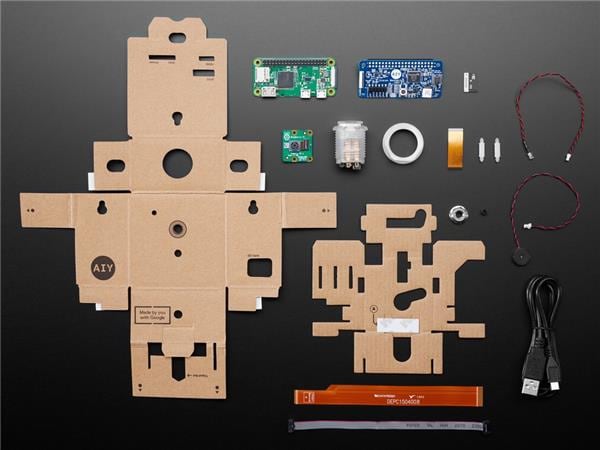

I wanted to create a lighthearted project that would make people smile, and take advantage of all the fantastic hardware included in the AIY Kits.

With the Coronavirus pandemic in full swing, I thought we could all use a little cheering up - and honestly, what better than a cringey joke-telling robot that detects how happy you are and cracks a terrible pun?

Getting to grips with the kits

The first step is to get the kits built and see what they can do. Google have done a great job with the AIY Vision & Voice kits, and construction was made really simple, with a website for each kit dedicated to clear, step-by-step instructions that even a 10-year-old would be hard-pressed to get wrong.

That said, I only messed up about five or six times... But eventually got both kits up and running.

They're a lot of fun - especially for the kids, who delight in pulling stupid faces at the joy detector and making Google Assistant say inappropriate things. So after a few weeks of messing about with the little cardboard cubes, like a true dad, I set about getting under the hood and pretending I knew what I was doing...

Tinkering with the examples

I started with the vision kit. Interested in seeing how the code worked to trigger events when low or high levels of joy were detected, I suspected there was a routine in there somewhere that I could hijack to carry out my own stupid commands.

You can find the Joy Detector demo code buried in the Raspberry Pi at the following location:

~/AIY-projects-python/src/examples/vision/joy/joy_detector_demo.py

Because I SSH in using PuTTY, I used Vim to explore the code:

    sudo vim ~/AIY-projects-python/src/examples/vision/joy/joy_detector_demo.py

And I was right - sitting there at around line 322 is the code:

    if event == 'high':
        logger.info('High joy detected.')
        player.play(JOY_SOUND)
    elif event == 'low':
        logger.info('Low joy detected.')
        player.play(SAD_SOUND)

I'm not hugely familiar with Python, but I've been programming in other languages for 25 years, and kids these days are writing games in it while they're still in nappies, it seems, so it can't be that hard, right?

Well, digging a little deeper, it seems I'm right - after 321 lines of setup and functions and general definitions and logic, my way in to the magic of the AIY Vision kit is those 6 little lines of code.

I want my DadBot to crack an awful joke whenever it detects a face in frame that's expressing low levels of joy. Because the internet is crammed full of talented and creative weirdos with far too much time on their hands, I came across "icanhazdadjoke.com", which is a web-based API that allows you to programmatically retrieve a groan-inducing wisecrack in a variety of different data formats. I couldn't have asked for anything better. Shout out to Brett Langdon for creating and maintaining this goldmine. Full documentation can be found here.

So, using the Python requests library, we're able to connect to the API, send the relevant headers to retrieve a joke in plain text format, and boom! Instant dad jokes on demand... Here's the code:

    import requests

    # Ask the API for a plain-text joke rather than the default HTML page
    r = requests.get('https://icanhazdadjoke.com/', headers={'Accept': 'text/plain'})
    joke = r.text
    print(joke)

This simple bit of code imports the library, creates a GET request (more information about GET and POST requests here) and sends all the relevant headers to the API. I then load the text of the joke response into the joke variable and print it out.
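For anything that runs unattended, it may be worth guarding that request against network hiccups. The sketch below wraps the same call in a function with a timeout and a fallback joke. The function name `fetch_joke`, the injectable `get` parameter, the 5-second timeout and the fallback text are all illustrative assumptions, not part of the original code.

```python
API_URL = 'https://icanhazdadjoke.com/'
# Assumption: any canned line will do as a fallback.
FALLBACK_JOKE = 'I would tell you a joke about Wi-Fi, but I lost the connection.'


def fetch_joke(get=None, timeout=5):
    """Return a joke as plain text, or FALLBACK_JOKE if anything goes wrong.

    `get` defaults to requests.get; passing a stand-in lets the function
    be tried out without a network connection.
    """
    if get is None:
        import requests  # imported lazily so a stand-in `get` does not need it
        get = requests.get
    try:
        response = get(API_URL, headers={'Accept': 'text/plain'}, timeout=timeout)
        response.raise_for_status()  # treat HTTP 4xx/5xx responses as failures
    except OSError:
        # requests' exceptions (timeouts, connection and HTTP errors) derive from OSError
        return FALLBACK_JOKE
    return response.text.strip() or FALLBACK_JOKE
```

Calling `print(fetch_joke())` behaves like the snippet above when the API is reachable, and prints the fallback line instead of crashing when it is not.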

That's super-simple, and seems to work a treat. Now all I need to do is plug that into the joy-detector code when it detects "low joy" and we're one step closer to our goal, so this is what I ended up with (after adding the import to the top of the file with the other library imports):

    if event == 'high':
        logger.info('High joy detected.')
        player.play(JOY_SOUND)
    elif event == 'low':
        # Fetch a plain-text dad joke and print it to the terminal
        r = requests.get('https://icanhazdadjoke.com/', headers={'Accept': 'text/plain'})
        joke = r.text
        print(joke)
        logger.info('Low joy detected.')
        player.play(SAD_SOUND)

Now we have a Raspberry Pi that looks at your face, and if you're wearing a frown it'll go find a crappy joke and print it out. Close, but not exactly what we want. We want our robot to SAY the joke out loud.

Overcoming challenges with sound

I thought of a number of approaches to this conundrum, including using the Google Assistant and various online text-to-speech solutions, but they were all either too complicated, required signing up with lots of services, or didn't sound right.

In the end I settled on using Festival Lite. I love the deadpan delivery of the stock voice, and it's a self-contained, super-simple little tool that is easy to install on a Pi - just run the following from the console:

    sudo apt-get install flite

And once it's done, it's as simple as running the following command to get your Raspberry Pi chattering away...

    flite -t "your text here"

I can't tell you how many hours I spent using this command to tell the kids they smell (they're not included in the build time estimate for this project...).

But this is where I ran into my first issue. The Raspberry Pi Zero W has no audio jack. Sound is only available through the HDMI connection, or by connecting to a Bluetooth speaker. However, though I was able to connect to a wireless speaker, I still couldn't get the sound to work at all. The Raspberry Pi simply would not make a peep.

After a number of hours spent swearing and panicking as the competition deadline approached, I realised that the Google AIY Kit Raspbian image is preconfigured for use with both the Vision AND the Voice kits - it expects to see the Voice Bonnet so it can use the external audio hardware for playing sound. The internal sound module is disabled deep in the Linux configuration somewhere, and it was completely beyond my limited expertise to get it working (read: I couldn't find the answer on Stack Overflow).

So, I dug out the pinouts of the AIY kit boards with a bit of Googling and the excellent pinout.xyz website and discovered the following:

[Pinout diagrams: Vision Bonnet, Voice Bonnet]

If that's correct, the two boards don't share any pins except the I2C bus - and that's MEANT to share devices, right? The Raspberry Pi must be communicating with the bonnet through I2C. So it might just work if I stack the two bonnets on top of each other and keep my fingers crossed...

So I hastily soldered a pin header on top of the vision kit bonnet and stacked the voice kit on top (unfortunately I didn't take a picture of the unholy Frankensteined mess, so you'll have to use your imagination) and switched it on... Booting up didn't pose a problem at all.

But that was where the good luck ran out.

Neither bonnet wanted to work when they were both plugged in. My robot was now deaf, mute and blind. Back to the drawing board.

Serial to the rescue

Okay, just one more thing to try. As an avid Arduino fan, I'm used to using the serial port to communicate between devices, and the Raspberry Pi has pins reserved for serial communication. I figured the only way I was going to get this to work would be to have the Vision Kit Pi spot faces and measure emotions and, whenever it sees a particularly high or low level of joy, send a trigger through the serial port to the Voice Kit Pi, which can then go find a joke and say it out loud.

Simply connect the TXD pin (pin 8 - GPIO 14) on one Pi to the RXD pin (pin 10 - GPIO 15) of the other, and vice versa, then connect the grounds of the two devices and you're home and dry - your Pis can now talk to each other. I also connected the 5V rails so the Voice Kit Pi could piggyback off the Vision Kit Pi power supply. Disclaimer: this is not the recommended way to power a Raspberry Pi, so I don't suggest you do it unless you're willing to run the risk of damaging one of your Pis - I was being lazy and have a drawer full of spares...

As you can see - I soldered to the UART breakout on the Voice Bonnet, and the underside of the Vision Kit Pi - but soldering direct to the Pi GPIO pins will work, too.

Now there are just a couple of small additional changes to make in the configuration. You want to enable serial communication on the Pi, so open a terminal on each Pi and run:

    sudo raspi-config

This will fire up the Raspberry Pi configuration tool. Use the arrow keys to select "Interfacing Options" and hit enter. On the next screen, select "Serial" and hit enter. You will now be asked if you want the login shell to be accessible over serial. Be sure to select "No".

Then, you will be asked if you want to make use of the Serial Port Hardware. Select "Yes" and hit Enter to proceed. Once the Raspberry Pi has made the changes, you should see the following:

    The serial login shell is disabled
    The serial interface is enabled

Then you just need to restart the Pis:

    sudo reboot

Now you're all set up. We can use the Python serial library to get our Pis talking to each other. First, we need to create a serial object and define a few things like port, byte size and timeouts. Then we can use that object to write out to the serial port:

    import serial
    import time

    # /dev/serial0 is the Pi's alias for the UART on the GPIO header
    ser = serial.Serial(
        port='/dev/serial0',
        baudrate=9600,
        parity=serial.PARITY_NONE,
        stopbits=serial.STOPBITS_ONE,
        bytesize=serial.EIGHTBITS,
        timeout=1
    )

    counter = 0
    while True:
        # write() needs bytes; the newline lets the receiver use readline()
        ser.write('{}\n'.format(counter).encode('ascii'))
        time.sleep(1)
        counter += 1

/dev/serial0 is the port used to communicate with the GPIO serial connection. Device names are case-sensitive, so it must be written in lowercase.

And on the Voice Kit side, we just need to set up our serial object in the same way, and listen for any chatter on the channel...

    import serial

    ser = serial.Serial(
        port='/dev/serial0',
        baudrate=9600,
        parity=serial.PARITY_NONE,
        stopbits=serial.STOPBITS_ONE,
        bytesize=serial.EIGHTBITS,
        timeout=1
    )

    while True:
        # readline() returns bytes, or b'' if nothing arrives within the timeout
        x = ser.readline()
        if x:
            print(x.decode('ascii', errors='replace').strip())

Running both scripts on the relevant devices should result in the Vision Kit Pi sending a count, and the Voice Kit Pi receiving the number and printing it to the terminal. That means I can send a command through serial from the Vision Kit every time it detects low or high joy, and the Voice Kit can carry out an action based on that command!
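One way to turn that counter test into a trigger channel is to send a short, newline-terminated word per event and check it on the receiving side. The sketch below assumes the words `high` and `low`. The helper names `send_event` and `read_event` are hypothetical. Both helpers accept any object with `write()` and `readline()`, so an in-memory buffer stands in for the real serial port here.

```python
import io

KNOWN_EVENTS = ('high', 'low')  # assumption: one word per joy level


def send_event(port, event):
    """Write one newline-terminated event word to a serial-like object."""
    if event not in KNOWN_EVENTS:
        raise ValueError('unknown event: {!r}'.format(event))
    port.write(event.encode('ascii') + b'\n')


def read_event(port):
    """Read one line and return the event word, or None on timeout or line noise."""
    line = port.readline()  # a serial port returns b'' if the read timed out
    word = line.decode('ascii', errors='ignore').strip()
    return word if word in KNOWN_EVENTS else None


# Stand-in for a real serial port so the sketch runs without hardware.
fake_port = io.BytesIO()
send_event(fake_port, 'low')
fake_port.seek(0)
print(read_event(fake_port))  # prints: low
```

On the real hardware, the Vision Kit side would pass its `serial.Serial` object to `send_event`, and the Voice Kit side would call `read_event` in its loop and ignore any `None` results.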

Finally, we have solved the audio issues, and used BOTH the kits, even if it is in the most Heath-Robinson, clumsy way possible. HAHAHA!

It's ALIIIIVEEEEEE!

Okay, we now have all the component parts in place - all that remains is to mash it all together into a unified whole...

You can download my modified Vision Kit code and the Voice kit code at the end of this tutorial.

You will also see I'm using the os library in there to allow Python to run terminal commands. That way, when I get the relevant trigger, I can retrieve a joke from the web service and send it directly to Flite. All the additional encoding/decoding is there to make sure the quotes and double quotes are handled correctly and that no illegal characters break the script, as I noticed some of the characters in the jokes returned by the API were causing me problems.
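If the quoting turns out to be the main pain point, one alternative to building a command string for the os library is to hand Flite its arguments as a list, which bypasses the shell entirely. This is a minimal sketch of that idea rather than the code in the download. The helper names `flite_command` and `say` are hypothetical, and it assumes the `flite` binary is installed and on the PATH.

```python
import subprocess


def flite_command(text):
    """Build the argument list for speaking `text` with flite."""
    # Collapse newlines and repeated whitespace so the joke is read as one utterance.
    cleaned = ' '.join(text.split())
    return ['flite', '-t', cleaned]


def say(text):
    """Speak `text` aloud; assumes the flite binary is installed and on PATH."""
    # Passing a list (no shell=True) hands the text to flite as a single
    # argument, so quotes and other shell metacharacters need no escaping.
    subprocess.run(flite_command(text), check=True)
```

With this in place, `say(joke)` can take the API response as-is, apostrophes and double quotes included.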

I've also dressed it up with a few other dadisms that are guaranteed to make you cringe regardless of your mood, and we're done with the electronics and software.

Last steps

I decided to put everything back inside the Voice kit container and dad it up with a pipe and some socks and sandals. I got rid of the buttons and the privacy LED (because seriously - whose dad cares about privacy?!). And that about brings us to a wrap. Delight the kids, embarrass their friends, and generally take joy in the pinnacle of AI and face recognition technology. DadBot.

CAD, enclosures and custom parts

Code

Credits

joe-hawcroft

Multi-skilled digital idiot with a broad skill set. If it can be bodged, you'll probably find me bodging it.
