Now Sequitur

About the project

Make a self-driving car in C using MCUXpresso and Mbed

Project info

Difficulty: Moderate

Platforms: NXP

Estimated time: 6 months

License: GNU General Public License, version 3 or later (GPL3+)

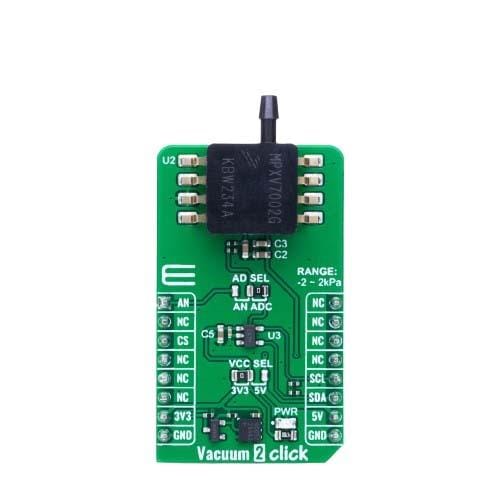

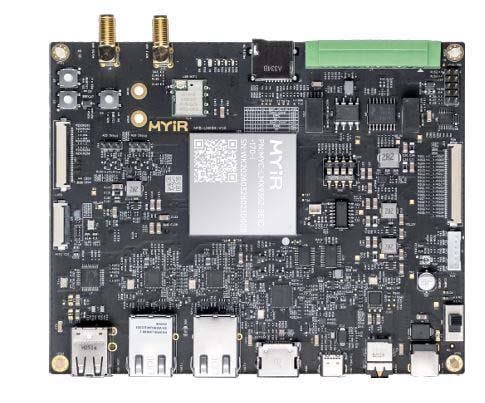

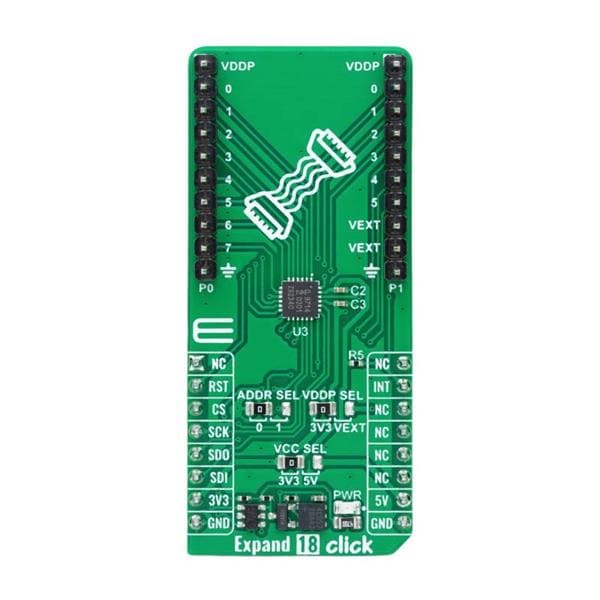

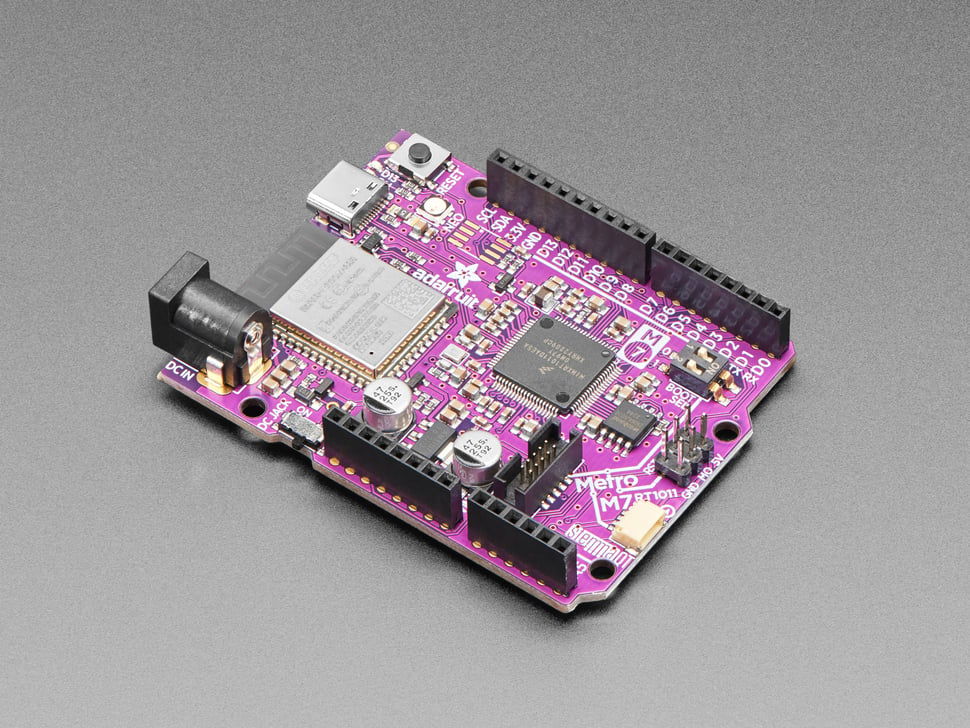

Items used in this project

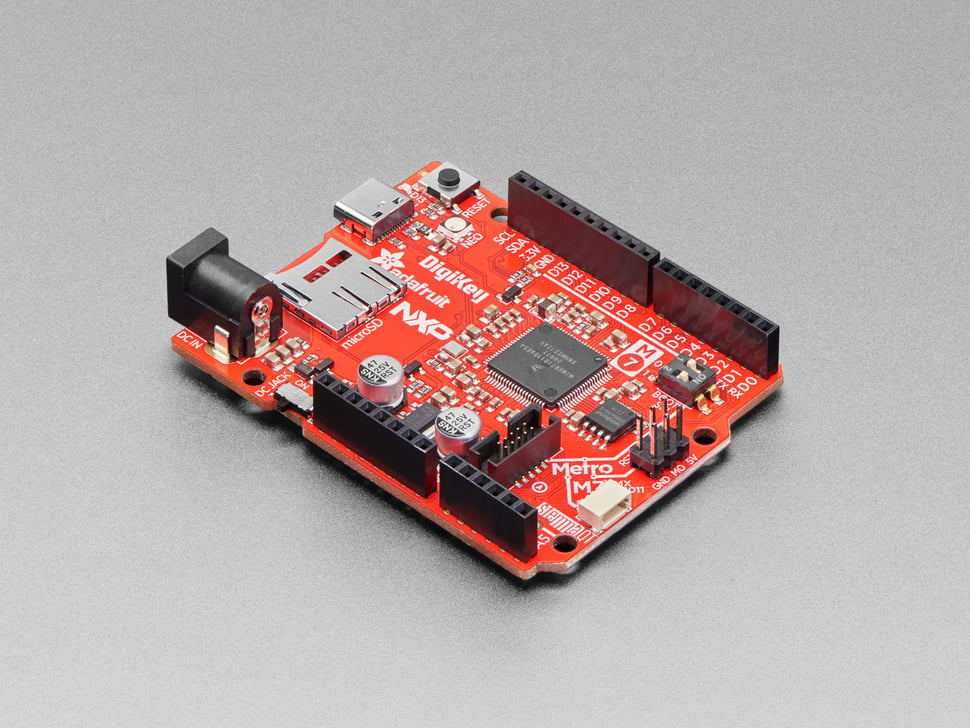

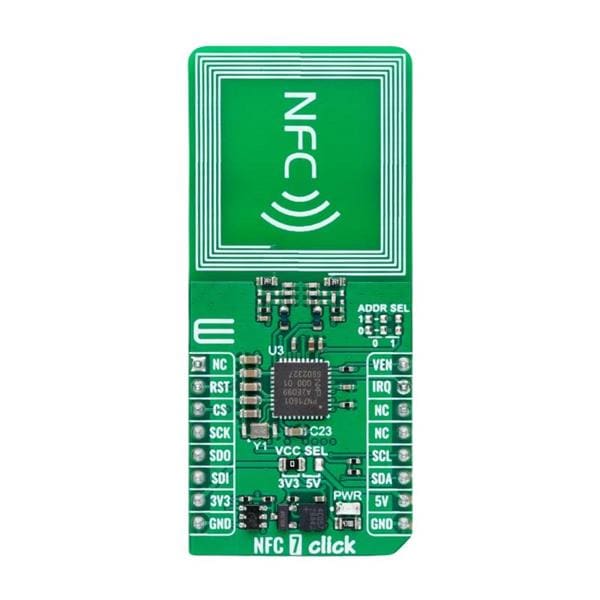

Hardware components

Story

- Scope of the project

Build and program a self-driving car in order to participate in the NXP Cup challenges in 2021.

The challenges consist of several events, such as the figure-8 track, the speed-limit track and the basic timed race, which takes place on an unknown track.

- Meet the team

We are three students from Vrije Universiteit Amsterdam, currently in the second year of our Computer Science studies. Our passion for self-driving cars started in our first year, while programming a small car to follow a line in one of our courses. Unfortunately, we couldn't participate in the 2020 competition due to the pandemic; however, with a solid base for our code, we are confident we can make our car even better this year!

- Timeline of the development

13.01.2020: Assembly of the car started. We soon ran into many hardware problems (missing pieces, broken parts, etc.).

04.02.2020: Assembly was finally finished, after we found all the required replacement parts. At this point, the car was able to see.

20.02.2020: The car was able to finish laps without going out of bounds. It was still very slow, and there was plenty of room for improvement.

25.02.2020: The original servo couldn't take it anymore. A new one was fitted, which significantly improved performance.

03.03.2020: The best version of the car so far was completed. After extensive testing, we found that we couldn't increase the speed without adding extra lights to the car (for better readings), although the car could already complete the track in a short time without ever going out of bounds.

04.03.2020: The code was changed to support the "speed-limit" track. Progress stopped that day due to lockdowns and campus restrictions; we planned to resume improving the car in January 2021.

02.03.2021: Work on the car resumed. We quickly noticed that last year's code no longer worked, so we started debugging it and rewriting it to be easier to understand.

07.04.2021: We mounted the camera in the middle of the car using a 3D-printed plastic piece. At this point we had also developed a reliable, yet slow, version of the car.

13.04.2021: We started experimenting with the code (implementing features that we ended up not using; see the "Other unused tricks" section below). We also implemented end line detection.

14.04.2021: We tuned many parameters (speed of the individual motors, speed in curves, etc.) to make the car fast and stable.

15.04.2021: The car was ready to race.

- Explaining the code behind the scenes

Hardware library

The hardware.h library contains methods that help operate the car. Perhaps the most important one is the method that drives the wheels. It serves two purposes:

1. Adding the minimum speed to the target speed. The wheels only start spinning at speeds above minSpeed (0.35f), so this baseline is added to the target speed.

2. Adjusting for differences in wheel speed. In our testing, the left wheel has slightly more power, which makes the car drift slightly to the right (especially noticeable on straight sections). The method adjusts the wheel speeds to compensate for this offset.
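A minimal sketch of how such a wheel-driving helper might compute the two motor commands, assuming speeds are normalised to [0, 1] and that the left-wheel compensation is a fixed multiplicative trim. Only the 0.35f minimum speed comes from the text; `computeWheelSpeeds`, `WheelSpeeds` and `LEFT_WHEEL_TRIM` are hypothetical names, and the call that actually sends the values to the motor driver is left out because it depends on the board library.

```c
/* Sketch only: everything except the 0.35f minimum speed is hypothetical. */
#define MIN_SPEED 0.35f       /* wheels only start spinning above this value */
#define LEFT_WHEEL_TRIM 0.95f /* hypothetical trim for the stronger left wheel */

typedef struct {
    float left;
    float right;
} WheelSpeeds;

/* Clamp a motor command to the (assumed) valid range [0, 1]. */
static float clampUnit(float v) {
    if (v < 0.0f) return 0.0f;
    if (v > 1.0f) return 1.0f;
    return v;
}

/* Convert per-wheel target speeds into motor commands:
 * 1. add the MIN_SPEED baseline, so a target of 0 sits right at the
 *    point where the wheels start to turn;
 * 2. clamp to the valid range;
 * 3. scale the left wheel down to cancel the drift to the right. */
WheelSpeeds computeWheelSpeeds(float targetLeft, float targetRight) {
    WheelSpeeds out;
    out.left = clampUnit(MIN_SPEED + targetLeft) * LEFT_WHEEL_TRIM;
    out.right = clampUnit(MIN_SPEED + targetRight);
    return out;
}
```

Clamping before applying the trim keeps the compensation in effect even at full throttle; trimming first would let both wheels saturate at the same value.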

The library also contains wrapper functions with friendlier names for the awkwardly named board methods, such as led for mLeds_Write and readSwitch for mSwitch_ReadSwitch. Furthermore, it houses helper functions such as enableSingleLed, which turns on only a single board LED, and init, which initializes all sensors. Finally, it still contains some unused functions that were helpful while testing the car, such as motorSpeed.
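The enableSingleLed helper could look roughly like this. To keep the example self-contained, the board LED write is replaced by a stub array; `NUM_LEDS`, `ledState` and the `led(index, on)` signature are assumptions for illustration, not the real board API.

```c
#include <stdbool.h>

#define NUM_LEDS 4 /* hypothetical number of board LEDs */

/* Stub standing in for the board LEDs; on the car, led() would wrap
 * the board's LED write function instead. */
static bool ledState[NUM_LEDS];

/* Hypothetical friendly-named wrapper around the board call. */
void led(int index, bool on) {
    if (index >= 0 && index < NUM_LEDS) ledState[index] = on;
}

/* Turn on exactly one LED and switch all the others off. */
void enableSingleLed(int index) {
    for (int i = 0; i < NUM_LEDS; i++) {
        led(i, i == index);
    }
}
```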

Image Processing

We quickly realized that the SPI connection to the Pixy isn’t fast enough to query a whole image every frame. We therefore chose a horizontal line-scan approach, which lets us scan at least two lines (at different heights in the image) while still maintaining a high enough frame rate. We also experimented with the Pixy vector API, but decided against it because its results were too inconsistent (we don’t have access to the vector algorithm, so when something fails we cannot properly debug or explain it).

Image_processing.h contains an API that takes care of the whole image processing pipeline. For simplicity, the first step is converting the pixels to grayscale (by a simple average). At first, we hard-coded a threshold to separate white pixels from black ones (which leads to line detection). This didn’t work very well in practice, because lighting conditions change significantly.
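The first two steps (grayscale by averaging, then a fixed white threshold) might look like the following. The `RgbPixel` layout and the threshold value of 120 are assumptions for illustration; the original code's types and values are not shown in this write-up.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical pixel layout: one RGB triple per pixel of a scanned row. */
typedef struct {
    uint8_t r, g, b;
} RgbPixel;

#define WHITE_THRESHOLD 120u /* hypothetical hard-coded threshold */

/* Grayscale by a simple average of the three channels. */
uint8_t toGray(RgbPixel p) {
    return (uint8_t)(((unsigned)p.r + p.g + p.b) / 3u);
}

/* Fixed-threshold classification: works until the lighting changes. */
bool isWhiteFixed(RgbPixel p) {
    return toGray(p) >= WHITE_THRESHOLD;
}
```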

To account for differences in lighting, we implemented a sampling algorithm that calibrates, on startup, the value above which a pixel is considered white (it assumes the pixels in the middle of the frame are white).
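A possible version of this startup calibration, assuming the scanned row is already grayscale and that the white threshold is set to a fixed fraction of the average brightness around the centre of the row. `SAMPLE_HALF_WIDTH`, `WHITE_FRACTION` and `calibrateWhiteThreshold` are hypothetical; the text does not say how the sampled value is turned into a threshold.

```c
#include <stddef.h>
#include <stdint.h>

#define SAMPLE_HALF_WIDTH 8  /* hypothetical: sample 16 pixels around the centre */
#define WHITE_FRACTION 0.75f /* hypothetical: white if >= 75% of the sampled level */

/* Estimate the white level from the centre of a grayscale row, assuming
 * the car starts on the track so the centre pixels show white floor. */
uint8_t calibrateWhiteThreshold(const uint8_t *gray, size_t width) {
    size_t centre = width / 2;
    size_t start = centre > SAMPLE_HALF_WIDTH ? centre - SAMPLE_HALF_WIDTH : 0;
    size_t end = centre + SAMPLE_HALF_WIDTH;
    if (end > width) end = width;

    size_t n = end - start;
    if (n == 0) return 0; /* empty row: nothing to calibrate against */

    unsigned long sum = 0;
    for (size_t i = start; i < end; i++) sum += gray[i];

    unsigned avg = (unsigned)(sum / n);
    return (uint8_t)((float)avg * WHITE_FRACTION);
}
```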

Line detection

Once we can distinguish the white pixels from the black ones, it’s only a matter of starting from the middle of the scan line and moving towards each side, checking whether each pixel is white or black. Though very simple, this approach is surprisingly effective (partly due to the relatively low dynamic range of the images the Pixy cam produces).
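The middle-outwards search can be written as two short loops. This sketch assumes a grayscale row and a calibrated threshold; `findLines` and `LineEdges` are hypothetical names, and -1 marks a side where no line was found.

```c
#include <stdint.h>

/* Nearest dark pixel on each side of the centre, or -1 if none. */
typedef struct {
    int left;
    int right;
} LineEdges;

LineEdges findLines(const uint8_t *gray, int width, uint8_t whiteThreshold) {
    LineEdges e = { -1, -1 };
    if (width <= 0) return e;

    int centre = width / 2;

    /* Walk left from the centre until the first non-white pixel. */
    for (int x = centre; x >= 0; x--) {
        if (gray[x] < whiteThreshold) { e.left = x; break; }
    }
    /* Walk right from just past the centre until the first non-white pixel. */
    for (int x = centre + 1; x < width; x++) {
        if (gray[x] < whiteThreshold) { e.right = x; break; }
    }
    return e;
}
```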

We also tried an approach that used a Sobel filter to detect changes between adjacent pixels, then applied non-maximum suppression, and finally passed the pixels through a Gaussian function to make the black lines stand out. This approach was accurate on straight sections, but often failed in corners, because it picked up color differences in the floor. After a lot of testing, we reverted to the simpler approach described above.
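For comparison, the edge-based approach can be sketched in one dimension: a [-1 0 1] derivative kernel (the horizontal derivative part of a Sobel filter, applied to a single row) followed by non-maximum suppression. The Gaussian step is omitted because the text does not specify how it was applied; all names and the magnitude threshold are hypothetical.

```c
#include <stdint.h>
#include <stdlib.h>

/* Horizontal gradient of a grayscale row with the kernel [-1 0 1].
 * The two border pixels have no full neighbourhood and get 0. */
void rowGradient(const uint8_t *gray, int width, int *grad) {
    for (int x = 0; x < width; x++) {
        if (x == 0 || x == width - 1) {
            grad[x] = 0;
            continue;
        }
        grad[x] = (int)gray[x + 1] - (int)gray[x - 1];
    }
}

/* Non-maximum suppression: keep positions whose |gradient| is at least
 * minMag and is a local maximum (ties go to the rightmost position).
 * Writes up to maxEdges indices into edges and returns how many. */
int suppressNonMaxima(const int *grad, int width, int minMag,
                      int *edges, int maxEdges) {
    int count = 0;
    for (int x = 1; x < width - 1 && count < maxEdges; x++) {
        int m = abs(grad[x]);
        if (m >= minMag && m >= abs(grad[x - 1]) && m > abs(grad[x + 1])) {
            edges[count++] = x;
        }
    }
    return count;
}
```

With this simple tie-breaking rule, a very thin line (two pixels or less) can lose one of its two edges, which is one of the details a real implementation would need to handle.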

Driving logic

The driving logic, contained in car.h, is fairly basic. First, we check whether we are on a straight by scanning a line at the top of the image and checking whether we see black lines on both sides. If we do, we adjust slightly to move towards the middle of the track. If we are not on a straight, we scan a line at the bottom of the image, which tells us which way to steer and when to stop steering. No line on the left means go left; no line on the right means go right; no line on either side probably means an intersection; lines on both sides probably mean we are not on the track, so we keep doing whatever we were doing before.
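The decision logic can be sketched as a single function that returns a steering value. Assumptions not in the text: steering is a float where negative means left and positive means right, `CENTRE_GAIN` and `CORNER_STEER` are hypothetical tuning constants, and an intersection is handled by driving straight. Edge positions use -1 for "no line found".

```c
#include <stdbool.h>

#define CENTRE_GAIN 0.3f  /* hypothetical gain for the small correction on straights */
#define CORNER_STEER 1.0f /* hypothetical fixed steering amount in corners */

/* Nearest dark pixel on each side of a scan line, -1 if none. */
typedef struct {
    int left;
    int right;
} LineEdges;

/* Returns a steering command: negative = left, positive = right (assumed).
 * previous is the command from the last frame. */
float decideSteering(LineEdges top, LineEdges bottom, int width, float previous) {
    /* Straight: both lines visible on the top scan, so nudge towards the middle. */
    if (top.left >= 0 && top.right >= 0) {
        float trackMid = (top.left + top.right) / 2.0f;
        float halfWidth = width / 2.0f;
        return CENTRE_GAIN * (trackMid - halfWidth) / halfWidth;
    }

    bool seesLeft = bottom.left >= 0;
    bool seesRight = bottom.right >= 0;

    if (!seesLeft && !seesRight) return 0.0f; /* probably an intersection (assumed: go straight) */
    if (!seesLeft) return -CORNER_STEER;      /* no line on the left: go left */
    if (!seesRight) return CORNER_STEER;      /* no line on the right: go right */
    return previous;                          /* lines on both sides: keep doing what we did */
}
```

Returning the same fixed `CORNER_STEER` in every corner mirrors the fixed-amount steering described in the text; a gradual version would instead scale the command with how far the visible line has moved.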

This logic is simple and surprisingly effective. In corners we steer by a fixed amount, rather than gradually increasing the steering amount. With more time, we would have implemented gradual steering.

End line detection

With a horizontal line-scan, it is nearly impossible to detect the two narrow end lines. Working within this limitation, we ended up scanning vertical lines in the middle of the track at 10-pixel intervals and checking whether two black lines are present. If the end line comes after a straight section, we detect it with near 100% accuracy. However, if the car comes out of a curve into the end line, the accuracy drops to about 50% (because the line is no longer consistently aligned with the middle of the camera view). Due to time constraints we did not improve this algorithm any further, but overall we were pleased with its accuracy.
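A sketch of the vertical-scan check, counting separate dark runs along a few columns spaced 10 pixels apart. For testability it reads from a row-major grayscale buffer; on the car the sampled columns would be queried from the Pixy directly instead of copying a full frame. `NUM_COLUMNS`, the "exactly two runs" test and the majority vote are assumptions, not details from the text.

```c
#include <stdbool.h>
#include <stdint.h>

#define COLUMN_SPACING 10 /* vertical scans 10 pixels apart, as in the text */
#define NUM_COLUMNS 3     /* hypothetical: centre column plus one on each side */

/* Count separate runs of dark pixels down column x of a row-major
 * grayscale image (assumed layout). */
static int countDarkRuns(const uint8_t *img, int width, int height, int x,
                         uint8_t whiteThreshold) {
    int runs = 0;
    bool inDark = false;
    for (int y = 0; y < height; y++) {
        bool dark = img[y * width + x] < whiteThreshold;
        if (dark && !inDark) runs++; /* a new dark run starts here */
        inDark = dark;
    }
    return runs;
}

/* Report the end line when a majority of the sampled columns each see
 * exactly two dark lines (the majority rule is an assumption). */
bool isEndLine(const uint8_t *img, int width, int height, uint8_t whiteThreshold) {
    int centre = width / 2;
    int hits = 0;
    int sampled = 0;
    for (int i = 0; i < NUM_COLUMNS; i++) {
        int x = centre + (i - NUM_COLUMNS / 2) * COLUMN_SPACING;
        if (x < 0 || x >= width) continue; /* skip columns outside the image */
        sampled++;
        if (countDarkRuns(img, width, height, x, whiteThreshold) == 2) hits++;
    }
    return sampled > 0 && hits * 2 > sampled;
}
```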

Other unused tricks

Gradual curve speed increase: To reduce oversteering, we tried slowing down in curves and then gradually increasing the speed. This required too much fine-tuning and was too complicated to implement properly, so we decided against it.
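A minimal sketch of the idea: drop to a lower speed when a curve starts, then ramp back up every frame. `CURVE_SPEED`, `MAX_SPEED`, `RAMP_STEP` and `updateSpeed` are hypothetical; the text does not give the values or the exact rule that was tried.

```c
#include <stdbool.h>

#define CURVE_SPEED 0.2f /* hypothetical speed on entering a curve */
#define MAX_SPEED 0.6f   /* hypothetical cruising speed */
#define RAMP_STEP 0.05f  /* hypothetical speed increase per frame */

typedef struct {
    float speed;
    bool wasInCurve;
} SpeedState;

/* Drop to CURVE_SPEED when a curve starts, then raise the speed by
 * RAMP_STEP every frame until MAX_SPEED is reached again. */
float updateSpeed(SpeedState *s, bool inCurve) {
    if (inCurve && !s->wasInCurve) {
        s->speed = CURVE_SPEED;
    } else {
        s->speed += RAMP_STEP;
        if (s->speed > MAX_SPEED) s->speed = MAX_SPEED;
    }
    s->wasInCurve = inCurve;
    return s->speed;
}
```

Even this small version has three constants that interact with the steering, which gives a sense of how much tuning the approach demands.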

Pixy line detection: As mentioned in the Image Processing section, we did not explore the Pixy vector API further.

Perspective warp: We also looked into warping the perspective of the Pixy cam to get a bird's-eye view of the track. This might be a good idea, but it would take time that we preferred to spend fine-tuning other parameters.

Following racing lines: We considered making the car steer towards the edge of the track before entering a curve. After some testing it became clear that we could not do this consistently with just a line-scan, so we abandoned the idea.
