Robotic Arm Face Recognition Tracking Project: mechArm 270 Pi

About the project

A personal record of a project to implement face recognition and tracking on a robotic arm.

Project info

Difficulty: Easy

Platforms: Raspberry Pi, M5Stack, OpenCV, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

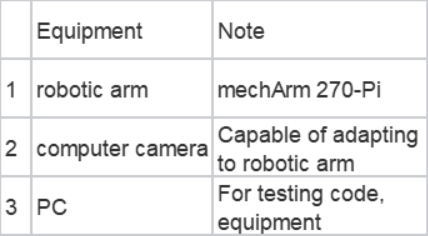

Story

Hi guys, welcome to my write-up of a project I have been working on.

Topic

Anyone who has seen Iron Man knows that Jarvis is a super-powerful artificial intelligence system. With the help of Jarvis, Tony Stark builds equipment far more efficiently, especially with the intelligent robotic arm that keeps appearing in the films. That gave me the idea of implementing recognition and tracking on a robotic arm.

video demo:

Equipment

The equipment required for this project is listed in the table below.

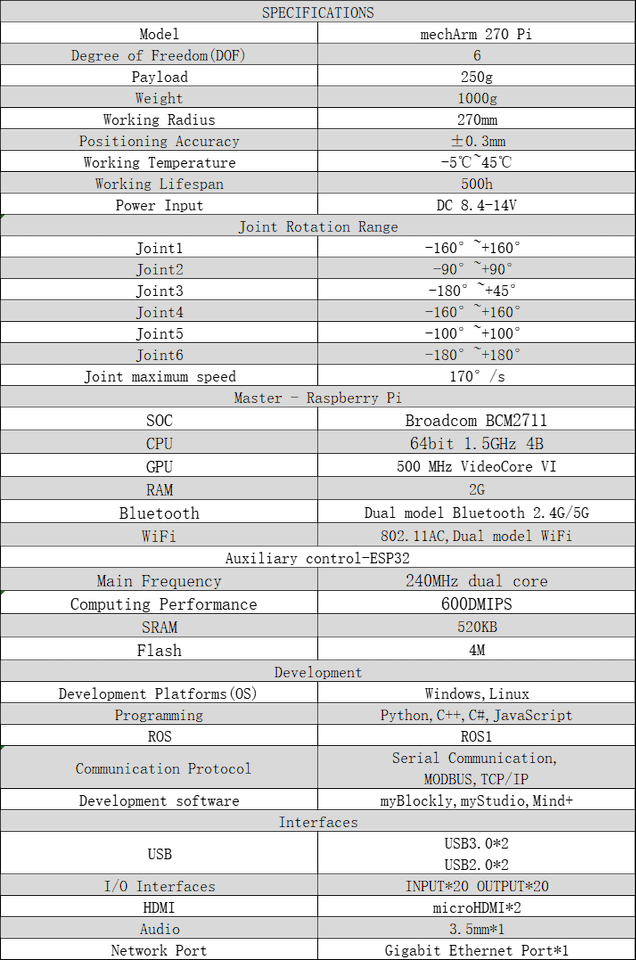

This is a small six-axis robotic arm from Elephant Robotics. It uses a Raspberry Pi 4B as its main controller and an ESP32 as auxiliary control, and has a centrosymmetric structure (imitating an industrial design). With a body weight of 1 kg, a payload of 250 g, and a working radius of 270 mm, the mechArm 270-Pi is compact and portable, easy to operate, and can work safely alongside people.

Here are its specifications

mechArm is well documented and provides a Python API that is easy for me to use. The specifications for the interface at the end of the arm are available from the official website, so a suitable attachment can be designed and 3D printed.

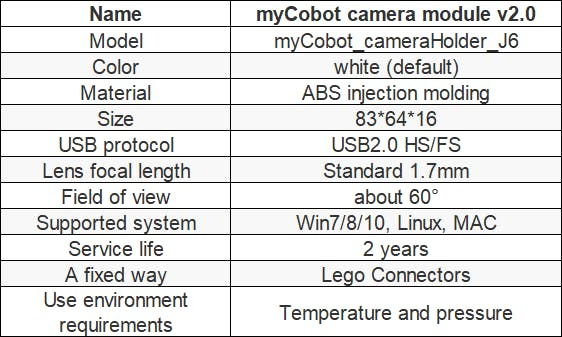

No distortion lens USB camera

Here are the specifications of the camera. It is mainly used for the face recognition function.

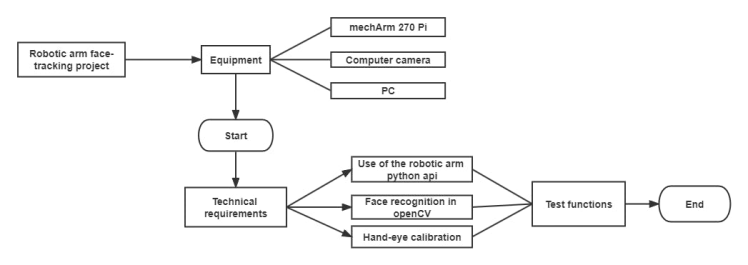

The flow of this project is shown in the diagram below. The two main problems are implementing face recognition with OpenCV and deciding whether to use eye in hand or eye to hand for calibrating the robotic arm.

I will briefly describe what each of these two parts does in the project.

OpenCV-Face Recognition

The full name of OpenCV is Open Source Computer Vision Library, a cross-platform computer vision library. It consists of a collection of C functions and a handful of C++ classes, and provides interfaces to languages such as Python, Ruby, MATLAB, and others. It implements many common algorithms for image processing and computer vision.

Face recognition means that the program discriminates whether a face is present in the input image and identifies the person corresponding to the image with the face. What we often refer to as face recognition generally consists of two parts: face detection and face recognition.

In face detection, the main task is to construct classifiers that can distinguish between instances containing faces and instances not containing faces.

There are three trained cascade classifiers available in OpenCV. As the name implies, a cascade classifier checks different features stage by stage to decide which class an input belongs to. It breaks a complex classification problem down into a series of simple ones. Because each stage can discard many negative samples, only a few candidates reach the later stages, which significantly speeds up classification.
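To make the early-rejection idea concrete, here is a minimal sketch in plain Python. It is not OpenCV's implementation: the three stage functions and their thresholds are hypothetical toy tests on a list of pixel intensities, chosen only to show how a window is dropped at the first stage it fails.

```python
from typing import Callable, List, Sequence

# A "stage" is any cheap test that returns True when the window might still be a face.
Stage = Callable[[Sequence[float]], bool]


def cascade_accepts(window: Sequence[float], stages: List[Stage]) -> bool:
    """Return True only if every stage accepts; stop at the first rejection."""
    for stage in stages:
        if not stage(window):
            return False  # rejected early, so the remaining stages never run
    return True


# Hypothetical toy stages working on pixel intensities in the range 0-255.
def bright_enough(window: Sequence[float]) -> bool:
    return sum(window) / len(window) > 40


def has_contrast(window: Sequence[float]) -> bool:
    return max(window) - min(window) > 30


def upper_darker_than_lower(window: Sequence[float]) -> bool:
    half = len(window) // 2
    return sum(window[:half]) < sum(window[half:])


STAGES = [bright_enough, has_contrast, upper_darker_than_lower]

print(cascade_accepts([60, 70, 120, 140], STAGES))  # passes all three stages
print(cascade_accepts([10, 12, 11, 13], STAGES))    # rejected by the first stage
```

In a real cascade the stages are learned from training data and ordered so that the cheapest tests run first.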

I use OpenCV because its face recognition library is open source and has a Python interface that can be called directly.

Here is the download address for the classifier: http://face-rec.org/databases/

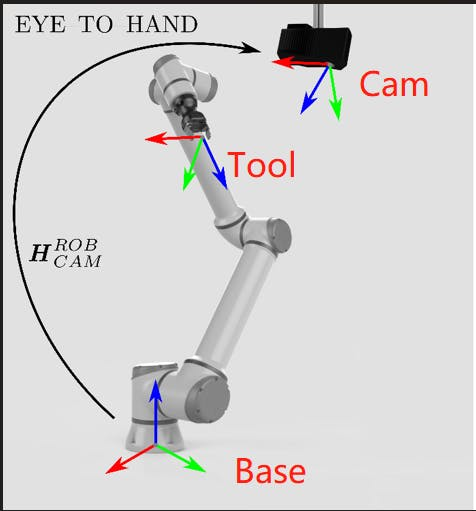

Hand-eye calibration

Hand-eye calibration lets the robot arm know where an object seen by the camera is in relation to the arm. In other words, it establishes a mapping between the camera coordinate system and the robot arm coordinate system.

There are two ways.

Eye to hand

The eye is outside the hand, which means the camera is fixed at a position separate from the robotic arm.

Because the positions of the Base and Cam coordinate systems are fixed relative to each other in this setup, calibration mainly has to find the relationship between these two coordinate systems.

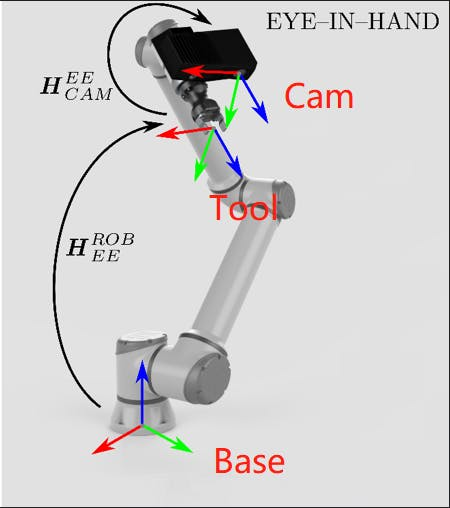

Eye in hand

Eye in hand means the camera is fixed to the end of the robotic arm.

The relative position of the Cam and Tool coordinate systems stays constant, so calibration determines the relationship between these two coordinate systems.
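The difference between the two setups can be sketched with homogeneous transforms. This is only an illustration under simplifying assumptions: the transforms below are pure translations with made-up numbers (a real calibration result also contains a rotation), and the names T_BASE_CAM, T_TOOL_CAM, eye_to_hand and eye_in_hand are hypothetical, not part of any library.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def apply(t: Matrix, point: List[float]) -> List[float]:
    """Apply a 4x4 transform to a 3D point."""
    p = list(point) + [1.0]
    return [sum(t[i][k] * p[k] for k in range(4)) for i in range(3)]


def translation(x: float, y: float, z: float) -> Matrix:
    """Build a transform with identity rotation (a simplification)."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


# Eye to hand: the camera never moves, so Base<-Cam is one fixed transform.
T_BASE_CAM = translation(300.0, 0.0, 400.0)  # made-up calibration result (mm)


def eye_to_hand(p_cam: List[float]) -> List[float]:
    return apply(T_BASE_CAM, p_cam)


# Eye in hand: Tool<-Cam is fixed, but Base<-Tool changes with every arm pose.
T_TOOL_CAM = translation(0.0, 30.0, 20.0)  # made-up calibration result (mm)


def eye_in_hand(p_cam: List[float], t_base_tool: Matrix) -> List[float]:
    return apply(matmul(t_base_tool, T_TOOL_CAM), p_cam)


p_cam = [10.0, 20.0, 500.0]  # a point seen by the camera, in camera coordinates
print(eye_to_hand(p_cam))
print(eye_in_hand(p_cam, translation(150.0, 0.0, 200.0)))
```

In the eye-in-hand case the current Base-to-Tool transform has to be supplied for every frame, which is why the conversion depends on the arm's pose at the moment the image was taken.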

Start project

Python control of mechArm

pymycobot is a Python library for controlling mechArm, and it lets me call its methods directly.

Let me describe a few key methods.

release_all_servos()

function: release all servos of the robot arm.

get_angles()

function: get the angles of all joints, in degrees.

send_angle(id, degree, speed)

function: send a target angle to a single joint of the robot arm.

send_angles(degrees, speed)

function: send target angles for all joints to the robot arm.

degrees: a list of angle values (List[float]), length 6 or 4.

speed: (int) 0 ~ 100

Let me test this with some code.

    from pymycobot.mycobot import MyCobot
    import time

    # Connect to the arm over the Pi's serial port at 1,000,000 baud
    mc = MyCobot('/dev/ttyAMA0', 1000000)
    time.sleep(4)

    # Run the motion sequence twice
    for count in range(2):
        mc.send_angles([0, 0, 0, 0, 0, 0], 70)
        time.sleep(2)
        mc.send_angles([0, 38.32, -6.76, 10.01, 99.22, -19.77], 70)
        time.sleep(2)
        mc.send_angles([-13.18, -22.14, 17.66, 147.12, 99.22, -19.77], 70)
        time.sleep(2)
        mc.send_angles([98.43, -2.98, -95.88, 161.01, -1.23, -19.77], 70)
        time.sleep(2)
        # Print the joint angles and the end coordinates after each pass
        print(mc.get_angles())
        print(mc.get_coords())

    # Return to the zero position
    mc.send_angles([0, 0, 0, 0, 0, 0], 70)

Video demo

A robotic arm like the mechArm Pi is easy to use and has good manoeuvrability.
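The test script waits a fixed two seconds after each move. As a rough alternative, the sketch below polls the joint angles until they are close to the target. Only get_angles() comes from the API listed above; the helper names angles_reached and wait_until_reached, the 2 degree tolerance, the timeout, and the FakeArm stand-in used for the demo are all assumptions of mine.

```python
import time
from typing import Callable, Optional, Sequence


def angles_reached(current: Optional[Sequence[float]],
                   target: Sequence[float],
                   tolerance_deg: float = 2.0) -> bool:
    """True when every joint is within tolerance of its target angle."""
    # Assumption: a failed read may yield None or a list of the wrong length.
    if not current or len(current) != len(target):
        return False
    return all(abs(c - t) <= tolerance_deg for c, t in zip(current, target))


def wait_until_reached(get_angles: Callable[[], Optional[Sequence[float]]],
                       target: Sequence[float],
                       timeout_s: float = 5.0,
                       poll_s: float = 0.1,
                       tolerance_deg: float = 2.0) -> bool:
    """Poll get_angles() until the target is reached or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if angles_reached(get_angles(), target, tolerance_deg):
            return True
        time.sleep(poll_s)
    return False


class FakeArm:
    """Hypothetical stand-in for the real arm so the sketch runs without hardware."""

    def __init__(self) -> None:
        self._readings = [[10.0] * 6, [3.0] * 6, [0.5] * 6]

    def get_angles(self) -> Sequence[float]:
        # Return successive readings, then keep repeating the last one.
        if len(self._readings) > 1:
            return self._readings.pop(0)
        return self._readings[0]


arm = FakeArm()
print(wait_until_reached(arm.get_angles, [0.0] * 6, poll_s=0.01))
```

On the real arm the call would look like wait_until_reached(mc.get_angles, [0, 0, 0, 0, 0, 0]) placed right after the matching mc.send_angles(...) call.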

Next, let's see how to implement the face recognition.

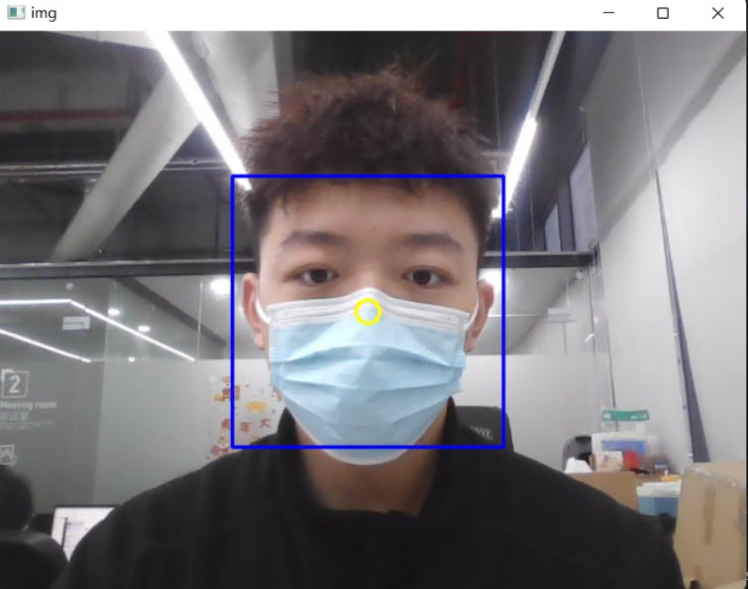

Face Recognition

Earlier I introduced the classifier, and I drew a rough flowchart.

I have written the code based on the flowchart. Let's run it and see the result.

    import cv2

    def video_info():
        # Load the classifier (the XML file must be in the working directory)
        face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
        # Input video stream from the default camera
        cap = cv2.VideoCapture(0)
        # To use a video file as input
        # cap = cv2.VideoCapture('demo.mp4')
        while True:
            ok, img = cap.read()
            if not ok:
                break  # no frame available (camera unplugged or end of file)
            # Conversion to greyscale
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            # Detecting faces (scaleFactor=1.1, minNeighbors=4)
            faces = face_cascade.detectMultiScale(gray, 1.1, 4)
            # Drawing the outline and marking the centre of each face
            for (x, y, w, h) in faces:
                cv2.rectangle(img, (x, y), (x+w, y+h), (255, 0, 0), 2)
                center_x = x + w // 2
                center_y = y + h // 2
                cv2.circle(img, (center_x, center_y), 10, (0, 255, 255), 2)
            # Display effects
            cv2.imshow('img', img)
            # Exit when Esc is pressed
            k = cv2.waitKey(30) & 0xff
            if k == 27:
                break
        cap.release()
        cv2.destroyAllWindows()

    video_info()

Honestly, the classifier trained by OpenCV is very powerful! The faces were recognised very quickly.
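For tracking, the arm eventually needs to know how far the face is from the middle of the picture. The sketch below is one possible way to get that number from the (x, y, w, h) boxes returned by detectMultiScale. The helper names largest_face and center_offset and the 640x480 frame size are my own assumptions, and turning the offset into joint motion is not covered here.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), the layout used by detectMultiScale


def largest_face(faces: Sequence[Box]) -> Optional[Box]:
    """Pick the box with the largest area, or None if nothing was detected."""
    if len(faces) == 0:
        return None
    return max(faces, key=lambda box: box[2] * box[3])


def center_offset(box: Box, frame_w: int, frame_h: int) -> Tuple[float, float]:
    """Offset of the box centre from the frame centre, scaled to -1.0 .. 1.0."""
    x, y, w, h = box
    center_x = x + w / 2
    center_y = y + h / 2
    dx = (center_x - frame_w / 2) / (frame_w / 2)
    dy = (center_y - frame_h / 2) / (frame_h / 2)
    return dx, dy


# Made-up detections in a 640x480 frame.
faces = [(100, 80, 60, 60), (300, 200, 120, 120)]
target = largest_face(faces)
if target is not None:
    dx, dy = center_offset(target, 640, 480)
    # Positive dx means the face is right of centre, positive dy means below centre.
    print(target, round(dx, 3), round(dy, 3))
```

Following only the largest face is a simple design choice that keeps the target stable when several people are in view.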

With that, I have completed the first two parts of the project: the basic operation of the robotic arm and the face recognition function.

When I tackled hand-eye calibration, I ran into difficulties converting coordinates in the eye-in-hand setup and could not solve them in a short time.

Summary

This concludes this record of the project for now. Hand-eye calibration involves extensive knowledge, and solving it will take a great deal of searching and help from various sources.

By the time I return, my project will be a success! If you like this article, like it and leave a comment to support me!

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robotics, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, and the home.
