Learning SLAM and Dynamic Obstacle Avoidance with myAGV

About the project

A Raspberry Pi 4 platform

Project info

Difficulty: Easy

Platforms: Raspberry Pi, ROS, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)


Story

As technology advances, terms such as artificial intelligence and autonomous driving have become increasingly prominent. However, autonomous driving has not yet been widely adopted and is still under continuous development and testing. Having been passionate about cars since childhood, I am very interested in this technology.

While browsing the internet, I came across and purchased a SLAM car. It can use a 2D LiDAR (laser radar) for mapping, autonomous navigation and dynamic obstacle avoidance, among other functions. In this article, I will document how I implemented dynamic obstacle avoidance on this SLAM car. The algorithms used for dynamic obstacle avoidance are DWA and TEB.

Let me give you a brief introduction to the machine I used.

myAGV SLAM platform

myAGV is an autonomous navigation vehicle developed by Elephant Robotics. It features competition-grade Mecanum wheels and a fully enclosed design with a metal frame. It comes with several SLAM algorithms to support learning about mapping and navigation.

myAGV uses a Raspberry Pi 4B as its main control board and runs Ubuntu. This is also why I chose it: the Raspberry Pi has one of the largest active communities in the world, with many projects and use cases to refer to.

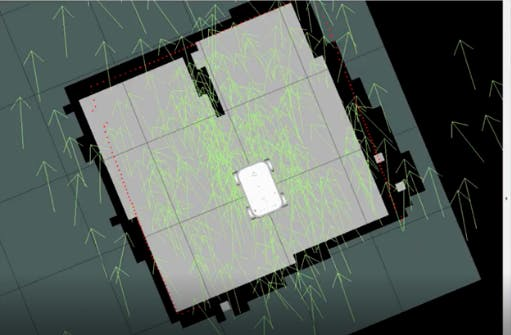

Mapping

First, I need to set up a scene in which myAGV will perform mapping, autonomous navigation and dynamic obstacle avoidance.

This is the scene I set up, with several obstacles and occluded areas. Now I can begin mapping, since without a map there is nothing to navigate on. In everyday driving, navigation maps are readily available. No map of this scene exists yet, so I need to build one with the gmapping algorithm.

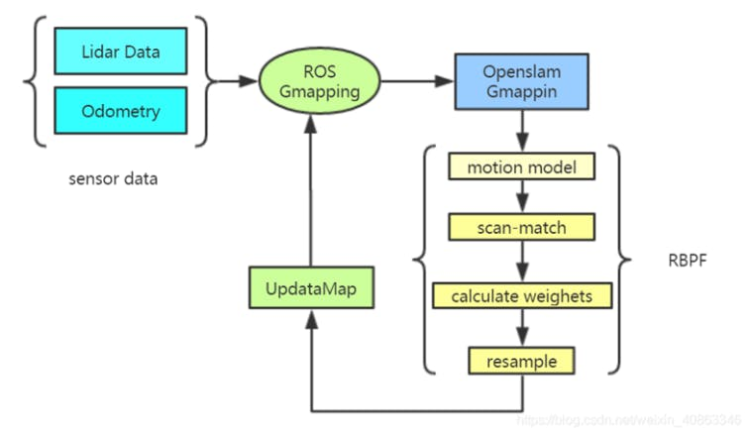

Gmapping

The gmapping algorithm is a method for building environment maps for robots. It is a laser-based SLAM (Simultaneous Localization and Mapping) algorithm: it constructs a map of the environment in real time while the robot is moving and simultaneously estimates the robot's position within that map. Gmapping is available as a package in ROS (Robot Operating System). It can be launched and used through ROS command-line tools or programming interfaces.

For the actual operation, I followed Elephant Robotics' Gitbook, which provides detailed step-by-step instructions.

We collect environmental information with sensors such as LiDAR and combine it algorithmically to build a map of the environment. I can then use this map for navigation and dynamic obstacle avoidance.
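Gmapping itself is a particle-filter SLAM method, but the maps it builds are occupancy grids filled in from laser scans. As a rough illustration of how one scan becomes map cells, here is a minimal log-odds occupancy-grid update in Python. It is not gmapping's code, and it makes several assumptions:

- The robot pose is already known. Estimating that pose is the part gmapping actually has to solve.
- The parameter names loosely mirror the `angle_min` and `angle_increment` fields of a ROS LaserScan message.
- The function names, log-odds increments and grid settings are hypothetical.

```python
import math

# Illustrative sketch: function names and constants are hypothetical, not gmapping's.
L_OCC = 0.85    # log-odds added when a cell is observed as occupied
L_FREE = -0.4   # log-odds added when a beam passes through a cell


def bresenham(x0, y0, x1, y1):
    """Return the grid cells on the line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_scan(grid, pose, ranges, angle_min, angle_increment, resolution, max_range):
    """Add one laser scan to a log-odds grid indexed as grid[y][x].

    pose = (x, y, theta) in metres/radians and is assumed to be known exactly.
    """
    rx, ry, rth = pose
    height, width = len(grid), len(grid[0])
    cx0, cy0 = math.floor(rx / resolution), math.floor(ry / resolution)
    for i, r in enumerate(ranges):
        hit = r < max_range                  # a max-range reading means "nothing seen"
        r = min(r, max_range)
        a = rth + angle_min + i * angle_increment
        cx1 = math.floor((rx + r * math.cos(a)) / resolution)
        cy1 = math.floor((ry + r * math.sin(a)) / resolution)
        ray = bresenham(cx0, cy0, cx1, cy1)
        for k, (x, y) in enumerate(ray):
            if not (0 <= x < width and 0 <= y < height):
                continue
            is_end = k == len(ray) - 1
            # Cells the beam passed through are free; the end cell is occupied only on a hit.
            grid[y][x] += L_OCC if (is_end and hit) else L_FREE


def probability(log_odds):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Consider a 20 × 20 grid at 0.1 m resolution, with the robot at (0.55, 1.05) facing +x and a single 1.0 m reading. The update marks the cells along the beam as more likely free and the cell at the beam's end as more likely occupied. Repeating this for every scan accumulates evidence over time.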

Note: After completing gmapping, remember to save the created map.
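In a standard ROS 1 setup, the map is usually saved with the map_saver tool from the map_server package, for example `rosrun map_server map_saver -f my_map`. This writes a PGM image plus a YAML metadata file. The Python sketch below shows what those two files contain by converting an occupancy grid into the same kind of output. It uses the nav_msgs/OccupancyGrid value convention: -1 for unknown and 0 to 100 for occupancy. Some details are my own assumptions, chosen to resemble map_saver's output rather than copied from its source:

- the function name;
- the free/occupied cut-offs (25 and 65).

```python
# Illustrative sketch: function name and free/occupied cut-offs are assumptions.
def occupancy_to_pgm_yaml(data, width, height, resolution, origin,
                          image_name="map.pgm", free_max=25, occupied_min=65):
    """Convert row-major occupancy data to (pgm_bytes, yaml_text).

    data follows the OccupancyGrid convention: -1 unknown, 0..100 occupancy.
    origin is (x, y, yaw) of the lower-left cell in the map frame.
    """
    if len(data) != width * height:
        raise ValueError("data length does not match width * height")
    pixels = bytearray()
    # Grid row 0 is the bottom of the map, but image row 0 is the top,
    # so rows are written in reverse order.
    for row in range(height - 1, -1, -1):
        for col in range(width):
            v = data[row * width + col]
            if 0 <= v <= free_max:
                pixels.append(254)   # free -> near-white
            elif v >= occupied_min:
                pixels.append(0)     # occupied -> black
            else:
                pixels.append(205)   # unknown or uncertain -> grey
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    yaml_text = (
        f"image: {image_name}\n"
        f"resolution: {resolution:.6f}\n"
        f"origin: [{origin[0]:.6f}, {origin[1]:.6f}, {origin[2]:.6f}]\n"
        "negate: 0\n"
        "occupied_thresh: 0.65\n"
        "free_thresh: 0.196\n"
    )
    return header + bytes(pixels), yaml_text
```

Writing the two returned values to map.pgm and map.yaml should give a pair of files in the format that map_server loads for navigation.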

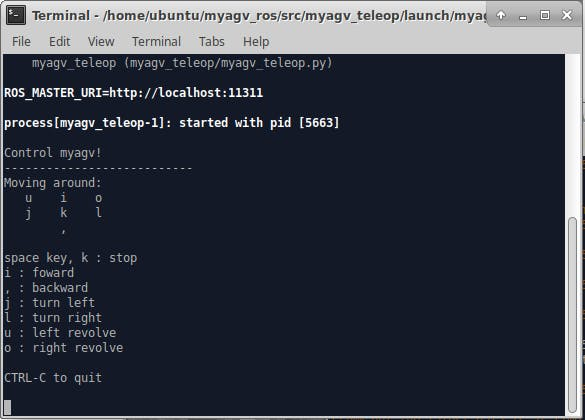

Here, I control myAGV with the keyboard, driving it around the scene while it builds the map.
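Because myAGV uses Mecanum wheels, a keyboard command can ask for sideways motion as well as forward motion and rotation. The sketch below shows the idea in Python in two steps:

1. A hypothetical key map produces a body velocity (vx, vy, wz).
2. The commonly used Mecanum inverse kinematics turn that velocity into four wheel speeds.

The key bindings, speeds, wheel radius and chassis dimensions are made-up values, not myAGV's actual teleop or firmware settings. The real conversion happens in myAGV's own software.

```python
# Illustrative sketch: bindings, speeds and dimensions are hypothetical values.
KEY_BINDINGS = {
    "w": (1, 0, 0),    # forward
    "s": (-1, 0, 0),   # backward
    "a": (0, 1, 0),    # strafe left (possible because of Mecanum wheels)
    "d": (0, -1, 0),   # strafe right
    "q": (0, 0, 1),    # rotate counter-clockwise
    "e": (0, 0, -1),   # rotate clockwise
}


def key_to_twist(key, linear_speed=0.2, angular_speed=0.5):
    """Map a key press to a body velocity (vx, vy, wz); unknown keys stop the robot."""
    dx, dy, dth = KEY_BINDINGS.get(key, (0, 0, 0))
    return dx * linear_speed, dy * linear_speed, dth * angular_speed


def mecanum_wheel_speeds(vx, vy, wz, wheel_radius=0.05, half_length=0.1, half_width=0.1):
    """Inverse kinematics for a Mecanum base with the common roller arrangement.

    x points forward, y to the left, wz is positive counter-clockwise.
    Returns wheel angular velocities in rad/s as
    (front_left, front_right, rear_left, rear_right).
    """
    k = half_length + half_width
    fl = (vx - vy - k * wz) / wheel_radius
    fr = (vx + vy + k * wz) / wheel_radius
    rl = (vx + vy - k * wz) / wheel_radius
    rr = (vx - vy + k * wz) / wheel_radius
    return fl, fr, rl, rr
```

For example, pressing a makes the two diagonal wheel pairs spin in opposite directions, which slides the base to the left without turning. With the mirrored roller arrangement, the signs of the vy terms flip.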

Once the map is saved, the robot must also know where it is on that map before it can navigate. This is handled by AMCL (Adaptive Monte Carlo Localization), a probabilistic localization algorithm based on the Monte Carlo method and Bayesian filtering. It processes the robot's sensor data and estimates the robot's position in the environment in real time. As it does so, it continuously updates the probability distribution over the robot's position.

The AMCL algorithm achieves adaptive robot localization through the following steps:

1. Initialization of particle set: Firstly, a set of particles representing possible robot positions is generated around the robot's initial position.

2. Motion model update: Each particle's pose is moved according to the robot's motion as reported by odometry, with noise added to account for odometry error.

3. Measurement model update: A weight is calculated for each particle from the sensor data collected by the robot. The weight measures how well the actual laser scan matches the scan expected if the robot were at that particle's pose on the map. The weights are then normalized into a probability distribution.

4. Resampling: The particle set is resampled according to the particle weights, so unlikely particles are discarded and likely ones are duplicated, which improves localization accuracy. AMCL also adapts the number of particles (KLD-sampling), using fewer particles when the estimate is confident, which reduces computational cost.

5. Robot localization: The robot's position in the environment is determined based on the probability distribution of the particle set, and the robot state estimation information is updated.

Through the iterative cycle of these steps, the AMCL algorithm can estimate the robot's position in the environment in real-time and continuously update the probability distribution of the robot's position.
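The five steps above can be sketched as a plain Monte Carlo localization loop. The Python below localizes a robot in a hypothetical one-dimensional world whose only feature is a wall at x = 10 m, measured by a range sensor. All noise values and function names are made up. The sketch uses a fixed number of particles, so it leaves out the adaptive particle count (KLD-sampling) that puts the "A" in AMCL. It is not the ROS amcl implementation.

```python
import math
import random

# Illustrative sketch: a hypothetical 1D world with a wall at x = 10 m.
WALL_X = 10.0


def gaussian(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def init_particles(n, lo, hi, rng):
    """Step 1: spread n particles over the region where the robot might be."""
    return [rng.uniform(lo, hi) for _ in range(n)]


def motion_update(particles, u, noise, rng):
    """Step 2: move every particle by the odometry reading u, plus noise."""
    return [p + u + rng.gauss(0.0, noise) for p in particles]


def measurement_weights(particles, z, sigma):
    """Step 3: weight each particle by how well its expected range matches z, then normalize."""
    w = [gaussian(z, WALL_X - p, sigma) for p in particles]
    total = sum(w)
    if total == 0.0:
        return [1.0 / len(particles)] * len(particles)
    return [wi / total for wi in w]


def resample(particles, weights, rng):
    """Step 4: low-variance (systematic) resampling."""
    n = len(particles)
    step = 1.0 / n
    r = rng.uniform(0.0, step)
    out, c, i = [], weights[0], 0
    for m in range(n):
        u = r + m * step
        while u > c and i < n - 1:
            i += 1
            c += weights[i]
        out.append(particles[i])
    return out


def estimate(particles):
    """Step 5: report the mean of the particle cloud as the position estimate."""
    return sum(particles) / len(particles)


def run_mcl(true_start, moves, seed=0, n=500):
    """Simulate a robot moving by each u in moves; return (true_x, estimated_x)."""
    rng = random.Random(seed)
    particles = init_particles(n, 0.0, WALL_X, rng)
    x = true_start
    for u in moves:
        x += u                                   # the real robot moves
        z = WALL_X - x + rng.gauss(0.0, 0.1)     # noisy range reading to the wall
        particles = motion_update(particles, u, 0.05, rng)
        weights = measurement_weights(particles, z, 0.2)
        particles = resample(particles, weights, rng)
    return x, estimate(particles)
```

For example, `run_mcl(2.0, [0.5] * 6)` moves the robot from x = 2 m to x = 5 m. Even though the particles start spread uniformly over the whole corridor, the returned estimate ends up close to the true position. Low-variance resampling is used in step 4 because it needs only one random number per pass. When all weights are equal, it also returns the particle set unchanged.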

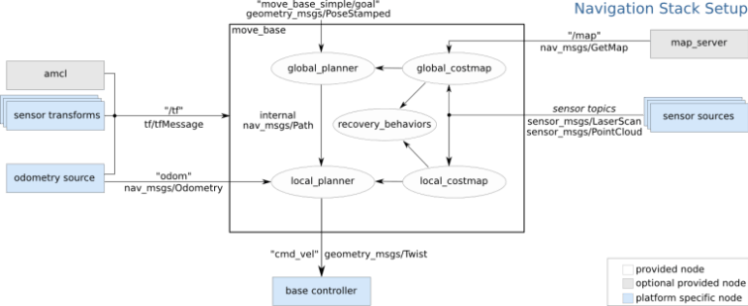

The ROS navigation stack (move_base) provides a framework that lets us choose a global_planner and a local_planner for path planning. The global planner computes a path from the robot's current position to the goal across the whole map. The local planner then produces velocity commands that follow this path and react to nearby obstacles. Some data, such as the global plan, is passed between the planners internally within the framework rather than over a topic. In summary, the ROS navigation stack enables autonomous navigation with interchangeable planners.
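A global planner searches the whole map for a path, for example with Dijkstra's algorithm or A* on the grid. ROS's global_planner package offers both. Below is a minimal grid A* in Python. It assumes a 4-connected occupancy grid where 1 marks an obstacle, and the function name is my own. It illustrates the kind of search a global planner performs, not the ROS implementation itself.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected grid (grid[row][col], 1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected moves of cost 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:      # walk back through the parents
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue                     # stale heap entry, a cheaper route was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

In a map whose middle row is blocked except at the right-hand end, the returned path goes around through that gap. The local planner would then turn such a path into velocity commands.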

In addition to planners, the navigation stack uses a costmap: a grid map that records static obstacles, i.e., which areas are passable and which are not. Dynamic obstacles are detected through sensor topics such as the LiDAR scan. These detections update the costmap in real time, which is what makes dynamic obstacle avoidance possible.

Besides maps, the navigation stack also needs localization information, which the amcl node provides. A different localization module can replace it, as long as it publishes the same localization output (the map → odom transform). The stack also needs tf information describing the transformations between coordinate frames, such as the map, odometry, robot base and sensor frames. This is a common requirement in robotics. Finally, odometry provides the robot's pose and velocity, which the local planner uses to plan its motion.
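The costmap idea can be sketched in a few lines of Python. The code below first marks the cells hit by sensor points as lethal obstacles. It then inflates them, following the cost rule described in the ROS costmap_2d inflation documentation:

- 254 for a lethal cell;
- 253 inside the robot's inscribed radius;
- (253 - 1) · exp(-cost_scaling_factor · (d - inscribed_radius)) beyond that radius;
- free space beyond the inflation radius.

The function names, grid size, radii and scaling factor are hypothetical. The real costmap_2d is layered and far more efficient than this brute-force loop.

```python
import math

# Cost values as used by costmap_2d; function names below are hypothetical.
FREE_SPACE = 0
INSCRIBED_INFLATED_OBSTACLE = 253
LETHAL_OBSTACLE = 254


def mark_obstacles(width, height, resolution, points):
    """Create a costmap (grid[row][col]) and mark cells containing sensor points as lethal."""
    grid = [[FREE_SPACE] * width for _ in range(height)]
    for x, y in points:
        col, row = math.floor(x / resolution), math.floor(y / resolution)
        if 0 <= row < height and 0 <= col < width:
            grid[row][col] = LETHAL_OBSTACLE
    return grid


def inflation_cost(distance, inscribed_radius, cost_scaling_factor):
    """Cost of a cell at `distance` metres from the nearest obstacle."""
    if distance == 0.0:
        return LETHAL_OBSTACLE
    if distance <= inscribed_radius:
        return INSCRIBED_INFLATED_OBSTACLE
    factor = math.exp(-cost_scaling_factor * (distance - inscribed_radius))
    return int((INSCRIBED_INFLATED_OBSTACLE - 1) * factor)


def inflate(grid, resolution, inscribed_radius, inflation_radius, cost_scaling_factor):
    """Brute-force inflation: each cell takes the cost implied by its nearest lethal cell."""
    height, width = len(grid), len(grid[0])
    obstacles = [(r, c) for r in range(height) for c in range(width)
                 if grid[r][c] == LETHAL_OBSTACLE]
    out = [row[:] for row in grid]
    if not obstacles:
        return out
    for r in range(height):
        for c in range(width):
            d = min(math.hypot(r - orow, c - ocol) for orow, ocol in obstacles) * resolution
            if d <= inflation_radius:
                cost = inflation_cost(d, inscribed_radius, cost_scaling_factor)
                out[r][c] = max(out[r][c], cost)
    return out
```

To follow dynamic obstacles, the marking and inflation would be repeated whenever a new scan arrives, with cells along each beam cleared again, as costmap_2d does by raytracing. A person stepping in front of the robot then shows up as high-cost cells within one update.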

If you are new to these concepts, I recommend reading the official ROS navigation documentation.

Let's take a look at how the autonomous navigation works.

Static obstacle avoidance is relatively easy to implement. However, many environments contain unpredictable elements, such as a person suddenly appearing in front of the robot. In such cases, a robot that relies only on static obstacle avoidance will collide with the obstacle. This is where dynamic obstacle avoidance comes in.

Dynamic obstacle avoidance is the ability of a robot to adjust its path in real time, while moving, to avoid obstacles as the environment changes. Unlike static obstacle avoidance, it requires the robot to continuously perceive changes in its surroundings and respond accordingly, which improves both productivity and safety. For dynamic obstacle avoidance, I mainly use the DWA (Dynamic Window Approach) and TEB (Timed Elastic Band) algorithms. Both are available as local planners in ROS.
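The core loop of DWA is compact enough to sketch. The Python below works in four steps:

1. Sample velocity pairs (v, w) that are reachable within one control cycle, given acceleration limits (the "dynamic window").
2. Simulate a short trajectory for each pair.
3. Discard trajectories that pass too close to an obstacle point.
4. Pick the pair with the best weighted score of heading, clearance and speed.

The sketch makes several simplifications and assumptions:

- It uses a differential-drive (unicycle) motion model, even though myAGV is holonomic.
- Its collision test is simpler than the admissibility check of the original method.
- All limits, weights, function names and the scoring function are hypothetical.

It is not the dwa_local_planner implementation.

```python
import math

# Illustrative sketch: all limits and weights below are hypothetical.
MAX_V, MAX_W = 0.5, 1.5          # m/s, rad/s
ACC_V, ACC_W = 0.5, 2.0          # m/s^2, rad/s^2
DT, SIM_TIME = 0.1, 1.5          # control period and prediction horizon (s)
ROBOT_RADIUS = 0.2               # m
W_HEADING, W_CLEAR, W_SPEED = 1.0, 0.3, 0.2


def simulate(x, y, th, v, w):
    """Roll a constant (v, w) command forward and return the list of poses."""
    poses = []
    for _ in range(int(round(SIM_TIME / DT))):
        th += w * DT
        x += v * math.cos(th) * DT
        y += v * math.sin(th) * DT
        poses.append((x, y, th))
    return poses


def clearance(poses, obstacles):
    """Smallest distance from the trajectory to any obstacle point."""
    if not obstacles:
        return float("inf")
    return min(math.hypot(px - ox, py - oy) for px, py, _ in poses for ox, oy in obstacles)


def dwa_step(pose, v_now, w_now, goal, obstacles, samples=11):
    """Return the best (v, w) inside the dynamic window, or (0.0, 0.0) to stop."""
    # Dynamic window: velocities reachable within one period, clipped to the limits.
    v_lo, v_hi = max(0.0, v_now - ACC_V * DT), min(MAX_V, v_now + ACC_V * DT)
    w_lo, w_hi = max(-MAX_W, w_now - ACC_W * DT), min(MAX_W, w_now + ACC_W * DT)
    best, best_score = (0.0, 0.0), -math.inf
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            poses = simulate(*pose, v, w)
            clear = clearance(poses, obstacles)
            if clear <= ROBOT_RADIUS:
                continue  # this trajectory would hit an obstacle
            ex, ey, eth = poses[-1]
            # Heading term: 1.0 when the end pose points straight at the goal.
            heading = math.cos(math.atan2(goal[1] - ey, goal[0] - ex) - eth)
            score = W_HEADING * heading + W_CLEAR * min(clear, 1.0) + W_SPEED * v / MAX_V
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

With no obstacles, a robot facing its goal picks the fastest straight-ahead command in its window. If every sampled trajectory collides, the sketch returns (0.0, 0.0), i.e., stop. move_base adds recovery behaviours for such situations. TEB works differently: it optimizes a timed sequence of poses.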

Summary

Dynamic obstacle avoidance algorithms involve a lot of background knowledge, so this article ends here. I will cover the DWA and TEB algorithms in a separate article. If you found this article helpful or have any suggestions, please leave a comment below.

I would also like to share my experience with myAGV. First, its appearance is impressive, and it can carry a robotic arm to form a composite robot capable of many tasks. I bought it mainly to learn about SLAM vehicles and to implement LiDAR-based autonomous navigation. It is equipped with a Raspberry Pi 4B, which has one of the world's largest hardware development communities and plenty of resources. This was the reason I chose it.

However, it is not perfect. Its power consumption is high: the maximum standby time is only 2 hours, and under frequent use the battery runs out in about an hour. I believe this needs improvement, and I will continue to share my experience with myAGV in the future.

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services. It serves industry, commerce, education, scientific research, the home and more.
