Hardware Acceleration For Short Range Robotics Avoidance

About the project

While hardware acceleration in robotics collision avoidance is not a new concept, the Xilinx KV260 brings scalability to the table.

Project info

Difficulty: Difficult

Platforms: ROS, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

Story

This project is about creating a stereo-camera-based path planning solution that prevents collisions between humans and dynamic machines and scales to larger technologies such as autonomous vehicles, industrial robotic arms, and drones. This collision avoidance technology relies on the scalability and performance of hardware-accelerated image processing or path planning to create a robust object detection and routing solution that avoids obstacles (humans) in safety applications.

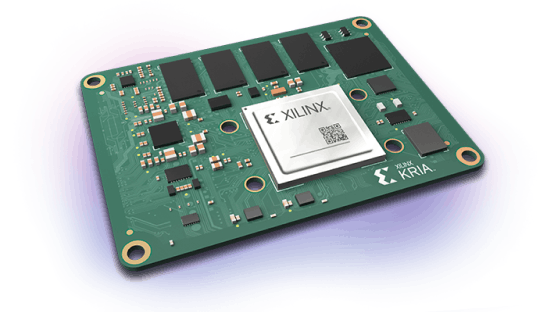

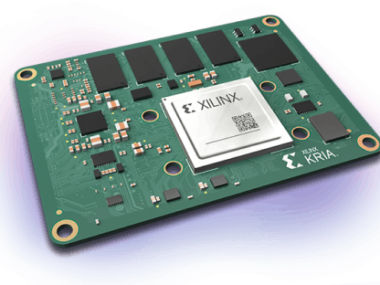

This hardware solution will use the new Xilinx Kria KV260 AI Starter Kit as an AI-enabled computer vision processor to run occupancy mapping and path planning algorithms through ROS MoveIt.

Algorithms will be developed on PetaLinux, with ROS/ROS2 as the robotics middleware. Some experimental Xilinx alpha-release software for ROS2 may be used, but per Xilinx it is not intended for production use.

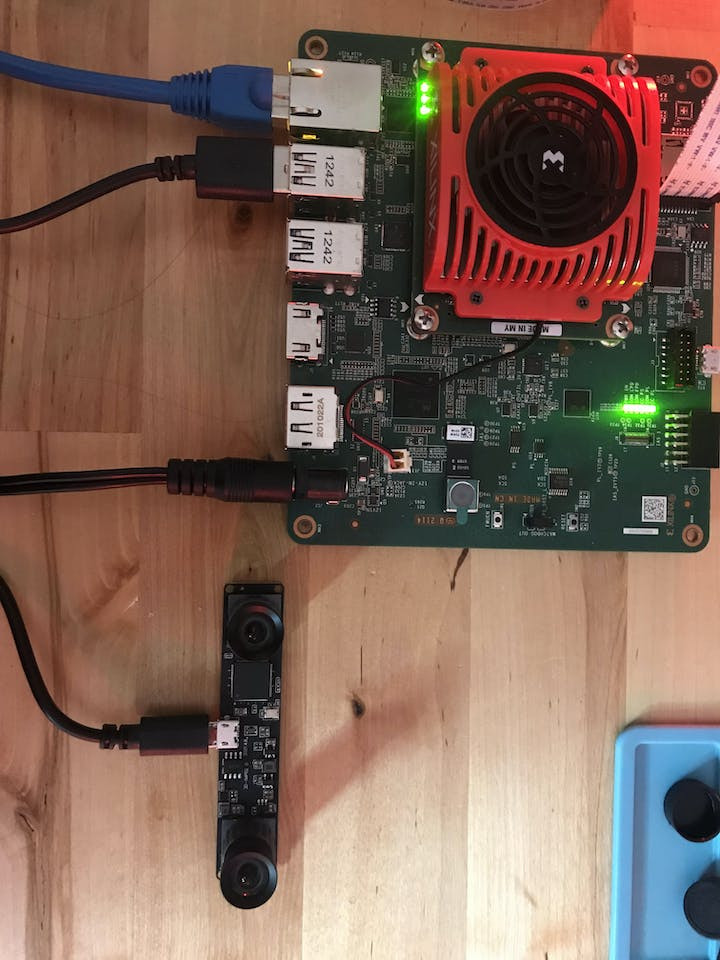

Xilinx Kria KV260 AI starter kit, shown with Raspberry Pi Cam

This is a proof of concept that uses the ROS2 image processing and MoveIt perception pipelines, together with wide-angle stereo depth cameras, to create a dense point cloud. Generating a dense point cloud demands more computing power than the average embedded microprocessor (such as a multi-core Arm Cortex-based MPU) or general-purpose GPU (GPGPU) device (such as the NVIDIA Jetson boards) can comfortably provide.
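Each valid pixel of a rectified disparity map becomes one point of the cloud through the standard pinhole stereo relations Z = f·B/d, X = (u - c_x)·Z/f and Y = (v - c_y)·Z/f, where f is the focal length in pixels, B the baseline in metres, (c_x, c_y) the principal point and d the disparity. Below is a minimal pure-Python sketch, assuming rectified images with square pixels; the calibration numbers in the example are hypothetical, not values from the cameras used in this project.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def disparity_to_points(
    disparity: List[List[float]],
    focal_px: float,
    baseline_m: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Reproject a rectified disparity map (row-major, in pixels) into 3D points.

    Pinhole stereo model: Z = f * B / d, X = (u - cx) * Z / f, Y = (v - cy) * Z / f.
    Pixels with disparity <= 0 have no valid match and are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # unmatched / invalid pixel
            z = focal_px * baseline_m / d
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Hypothetical calibration: f = 400 px, B = 0.06 m, principal point (1, 1).
    disp = [
        [0.0, 8.0, 8.0],
        [12.0, 24.0, 0.0],
    ]
    for p in disparity_to_points(disp, focal_px=400.0, baseline_m=0.06, cx=1.0, cy=1.0):
        print(p)
```

A dense cloud repeats this for every pixel of every frame (307,200 pixels at 640 x 480), on top of the block matching that produces d in the first place.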

The MoveIt perception pipeline documentation can be found here.

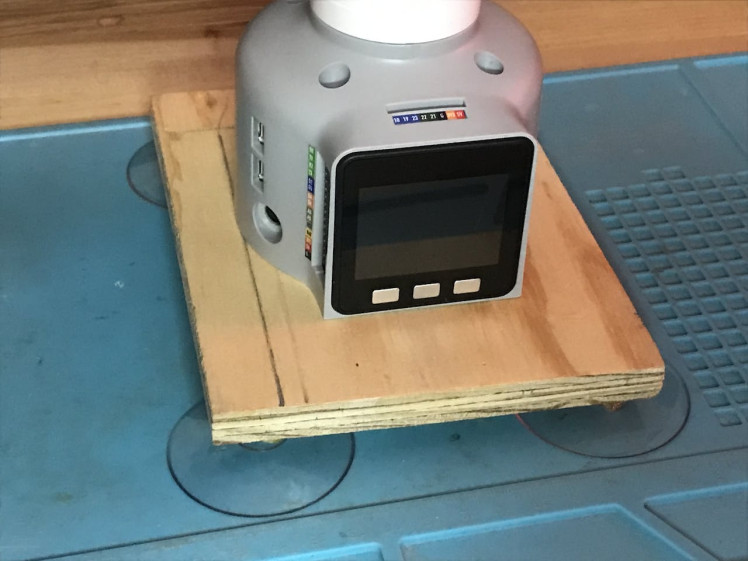

The KV260 was a strong candidate for the demanding workload of running stereo image processing, 3D point cloud occupancy mapping, and path planning simultaneously. The robotics hardware for this proof of concept is the Elephant Robotics 6-degree-of-freedom (6DOF) MyCobot robotic arm.
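Occupancy mapping turns the point cloud into a grid of "occupied" cells that a planner can check candidate robot positions against. MoveIt's perception pipeline does this with a probabilistic OctoMap octree; the sketch below swaps in a much simpler flat hash set of voxel indices purely to illustrate the idea. The function names and the 5 cm resolution are hypothetical choices, not part of MoveIt or of this project.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxel_of(point: Point, resolution_m: float) -> Voxel:
    """Integer index of the cubic voxel (edge length resolution_m) containing point."""
    x, y, z = point
    return (
        math.floor(x / resolution_m),
        math.floor(y / resolution_m),
        math.floor(z / resolution_m),
    )


def build_occupancy(points: Iterable[Point], resolution_m: float) -> Set[Voxel]:
    """Mark every voxel containing at least one observed point as occupied."""
    return {voxel_of(p, resolution_m) for p in points}


def is_occupied(occupied: Set[Voxel], point: Point, resolution_m: float) -> bool:
    """True if the voxel containing point (e.g. a planned waypoint) is occupied."""
    return voxel_of(point, resolution_m) in occupied


if __name__ == "__main__":
    res = 0.05  # hypothetical 5 cm voxel edge
    cloud = [(0.12, 0.02, 0.32), (0.13, 0.03, 0.33), (0.52, 0.02, 0.32)]
    grid = build_occupancy(cloud, res)
    print(len(grid))                                   # 2
    print(is_occupied(grid, (0.14, 0.04, 0.34), res))  # True
    print(is_occupied(grid, (0.27, 0.02, 0.32), res))  # False
```

Unlike OctoMap, this set never clears cells or tracks occupancy probability, so it only illustrates the lookup a planner would perform.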

Elephant Robotics 6DOF MyCobot

The first step in setting up the MyCobot was fabricating a suitable base: a plywood sheet cut to roughly 6.5" x 5", with four large suction cups so the base adheres to a smooth surface such as a table or bench.

Fabricated MyCobot Base

The next step was powering the MyCobot and testing the ROS MoveIt slider control and demo applications.

Demo of MyCobot using ROS MoveIt

The Xilinx Kria Robotics Stack (KRS) requires ROS2, while the official MyCobot ROS API was not compatible with ROS2 out of the box. My development operating system was Ubuntu 18.04.6, which was another barrier to developing on KRS.

Fortunately, PickNik Robotics, the maintainers of MoveIt, released a ROS2 API for the MyCobot while my project was under development (link here). That left two options: set up a virtual development machine in VirtualBox to program the Xilinx Kria KV260 through KRS and interface with the MyCobot ROS2 API, or use a ROS1 to ROS2 bridge to pass messages/topics between ROS2 on the Kria and ROS1 on a host workstation/laptop.

The first option was chosen because it keeps the project isolated to the Kria KV260, with no additional host processor in the equation.

The Xilinx Kria Robotics Stack documentation can be found here: https://xilinx.github.io/KRS/sphinx/build/html/index.html

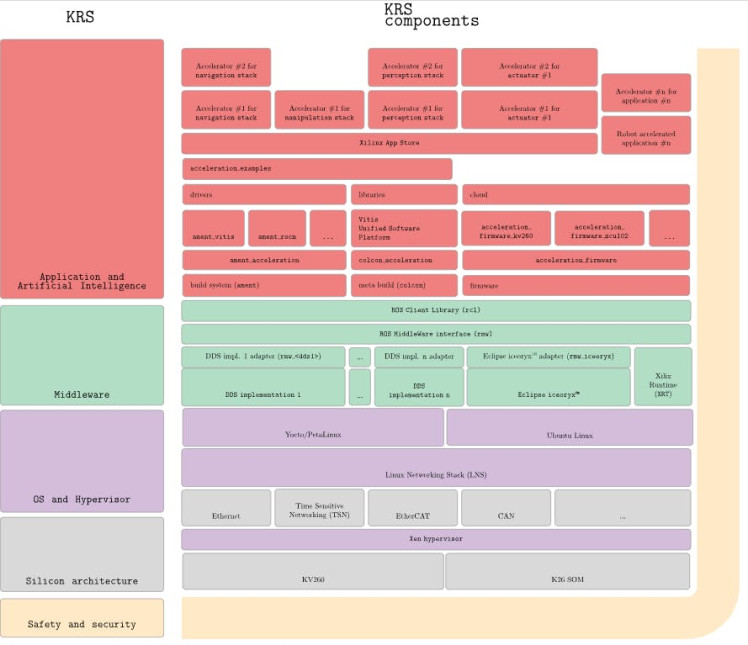

Kria Robotics Stack Layers

Due to the aforementioned complexities, this project was split into two main phases, plus a tentative third:

Phase I: Demo of a minimal Kria Robotics Stack implementation of hardware acceleration in the ROS image pipeline (e.g. a single offloaded node).

Phase II: Final product demonstration with the MyCobot hardware and a fully implemented KRS hardware-accelerated application in the ROS2 image_pipeline, consisting of a ROS2 perception computational graph (2-3 nodes), plus benchmarking of the CPU-only vs. hardware-accelerated application.

Phase III (tentative): MoveIt 2 pipelines.
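The CPU-only vs. hardware-accelerated benchmarking planned for Phase II comes down to timing the same processing step both ways and taking the ratio. Below is a minimal wall-clock sketch, assuming each pipeline can be wrapped in a zero-argument Python callable; the function names and the placeholder workloads are hypothetical stand-ins, not the project's actual nodes or kernels.

```python
import statistics
import time
from typing import Callable, List


def measure_latency_ms(fn: Callable[[], object], runs: int = 50, warmup: int = 5) -> float:
    """Return the median wall-clock latency of fn() in milliseconds."""
    for _ in range(warmup):
        fn()  # exercise first-call setup and caches before timing
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def speedup(cpu_ms: float, accel_ms: float) -> float:
    """Speed-up factor of the accelerated path relative to the CPU-only path."""
    if accel_ms <= 0:
        raise ValueError("accelerated latency must be positive")
    return cpu_ms / accel_ms


if __name__ == "__main__":
    # Placeholder workloads standing in for the CPU-only and accelerated pipelines.
    cpu_ms = measure_latency_ms(lambda: sum(i * i for i in range(20000)))
    accel_ms = measure_latency_ms(lambda: sum(i * i for i in range(2000)))
    print(f"CPU {cpu_ms:.3f} ms, accel {accel_ms:.3f} ms, "
          f"speed-up {speedup(cpu_ms, accel_ms):.1f}x")
```

The median is used instead of the mean so a few slow outliers (e.g. scheduler hiccups) do not skew the comparison. This times one call in isolation; it does not capture end-to-end message latency across a running ROS2 perception graph.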

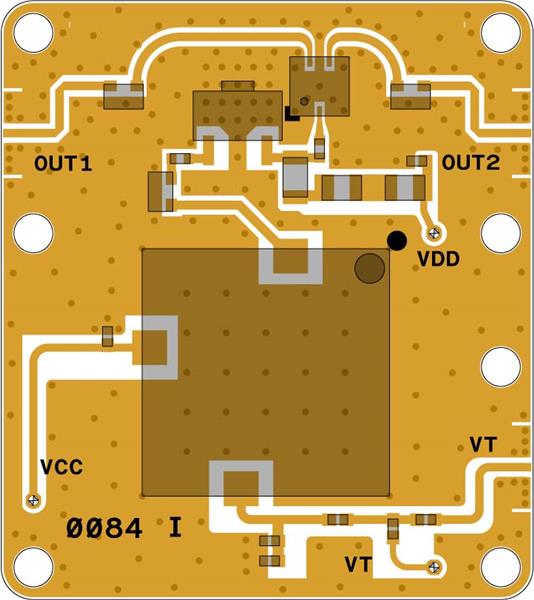

To start Phase I, I decided to integrate a ROS2 Kria Robotics Stack application for the stereo block matching algorithm, which exists among the Xilinx Vitis Vision library examples. Once this application is complete, I will attempt to develop and streamline/optimize a C/C++ kernel (in Vitis) to see how much speed-up a hardware-accelerated stereo block matching algorithm can achieve. I created a ROS client library node based on the disparity node found in vanilla ROS2, but with OpenCL calls to the FPGA interfaces (AXI4) and to the hardware acceleration kernel in Vitis Vision (xf_stereolbm_accel.cpp); the pull request can be found here.
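Stereo block matching estimates, for each pixel of the rectified left image, the horizontal shift (disparity) at which a small window best matches the right image. The sketch below is a deliberately naive matcher using a sum-of-absolute-differences (SAD) cost, assuming rectified grayscale images stored as lists of rows; the window size and disparity range are hypothetical parameters. It is not the Vitis Vision stereolbm kernel, and production matchers such as OpenCV's StereoBM add prefiltering and texture/uniqueness checks on top, but its nested loops make the cost visible: roughly width x height x (max_disp + 1) x window-area operations per frame.

```python
from typing import List

Image = List[List[int]]


def sad_block_match(left: Image, right: Image, max_disp: int, half_win: int) -> List[List[int]]:
    """Naive SAD block matching on rectified grayscale images.

    For each left-image pixel (u, v), try disparities d in [0, max_disp] and keep the
    one minimising the sum of absolute differences between the window centred at
    (u, v) in the left image and the window centred at (u - d, v) in the right image.
    Border pixels whose window does not fit are left at disparity 0.
    """
    h, w = len(left), len(left[0])
    disparity = [[0] * w for _ in range(h)]
    for v in range(half_win, h - half_win):
        for u in range(half_win, w - half_win):
            best_d, best_cost = 0, None
            # Only shifts that keep the right-image window inside the image.
            for d in range(0, min(max_disp, u - half_win) + 1):
                cost = 0
                for dv in range(-half_win, half_win + 1):
                    for du in range(-half_win, half_win + 1):
                        cost += abs(left[v + dv][u + du] - right[v + dv][u + du - d])
                if best_cost is None or cost < best_cost:
                    best_d, best_cost = d, cost
            disparity[v][u] = best_d
    return disparity


if __name__ == "__main__":
    # Synthetic pair: the left image is the right image shifted 2 px to the right.
    right = [[x * x for x in range(8)] for _ in range(3)]
    left = [[0, 0] + [x * x for x in range(6)] for _ in range(3)]
    print(sad_block_match(left, right, max_disp=4, half_win=1)[1])  # [0, 0, 1, 2, 2, 2, 2, 0]
```

Pixels near the left edge cannot see the full shift, which is why the first interior columns report smaller disparities in the example.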

Here is a video on setting up the USB 3D stereo depth camera to rectify the images and display disparity maps on the Kria SOM's host processor (this app does not use hardware acceleration; code courtesy of Xilinx and Víctor Mayoral Vilches):

To get the raw images from the stereo camera shown above, I needed a UVC video driver in ROS, so I turned to this repo. I then ran the node with `ros2 run opencv_cam opencv_cam_main` and, in another terminal, ran `ros2 topic list` to confirm that the /image_raw topic was being published.

Since the USB stereo camera I was using publishes a single 640 x 480 image with both camera feeds stitched together, I created a ROS2 node that splits the two feeds into their own topics.
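The core of such a splitter is just slicing each row of the combined frame in half. A minimal sketch, assuming the two feeds sit side by side with the left camera first (some cameras use the opposite order or stack vertically, so check yours); `split_side_by_side` is a hypothetical helper, and in a real ROS2 node it would run inside the /image_raw subscription callback before republishing each half on its own topic.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]


def split_side_by_side(frame: Sequence[Sequence[int]]) -> Tuple[Frame, Frame]:
    """Split a side-by-side stereo frame (list of pixel rows) into (left, right).

    Assumes a horizontal layout with the left camera first, so a 640 x 480
    frame yields two 320 x 480 images.
    """
    if not frame:
        raise ValueError("empty frame")
    width = len(frame[0])
    if width % 2 != 0:
        raise ValueError(f"frame width {width} is not even")
    half = width // 2
    left = [list(row[:half]) for row in frame]
    right = [list(row[half:]) for row in frame]
    return left, right


if __name__ == "__main__":
    # Synthetic 640 x 480 frame where each pixel value is its column index.
    frame = [list(range(640)) for _ in range(480)]
    left, right = split_side_by_side(frame)
    print(len(left[0]), len(left), right[0][0])  # 320 480 320
```

Each half also needs its own camera calibration before rectification, since the two lenses are calibrated separately.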

After running the stereo processing nodes on the Kria SOM's host processor, I developed an initial hardware-accelerated app based on the Vitis Vision library stereolbm example (which is based on OpenCV functions), found here.

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, the home, and more.
