Artificial Life 2

About the project

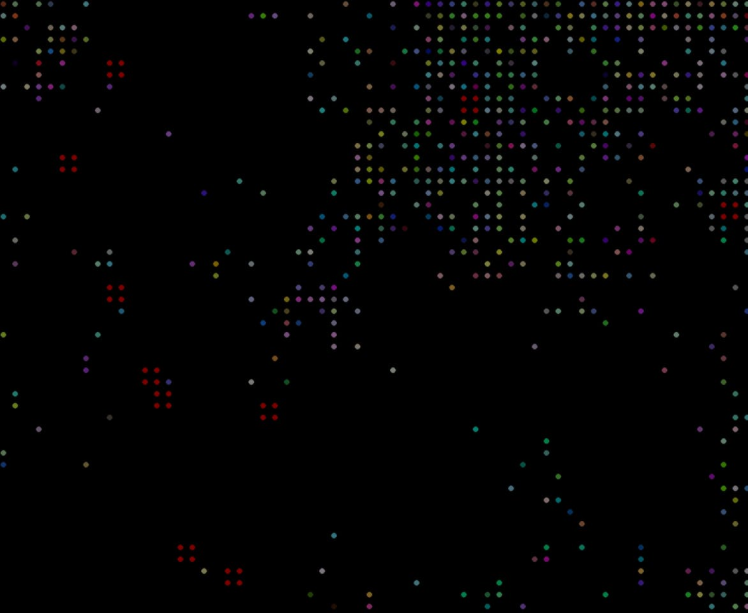

A vastly enhanced version of my Artificial Life project, with extra parameters, behaviours and LED panel compatibility.

Project info

Difficulty: Moderate

Platforms: Raspberry Pi, Pimoroni

Estimated time: 2 hours

License: GNU General Public License, version 3 or later (GPL3+)

Story

Previously...

In 2017 I made a project called Artificial Life which used an 8x8 Unicorn matrix to display a grid of Artificial Lifeforms that would breed and fight each other.

In 2022 I updated the project to Artificial Life HD - which used a 16x16 Unicorn matrix as well as enhanced code, bug fixes and some extra functionality.

And so here we are in 2023 with 64x32 LED Panel compatibility and a whole ton of extra bug fixes, enhancements/added functionality and optimisations with Artificial Life 2!

Let's dive in.

The Install

This can be used with the Unicorn HAT, the Unicorn HAT HD or a large LED Panel.

It also has a Unicorn HAT simulator built in now so it can be run without the LED hardware.

Clone the repo code from my GitHub:

git clone https://github.com/LordofBone/Artificial_Life --recurse-submodules

Then run:

pip install -r requirements.txt

Then for the Unicorn HAT installation go here.

For the Unicorn HAT HD installation here.

And finally, the LED Panel installation here.

With the LED Panel install on RPi OS Bullseye 64-bit, I found I also had to install Pillow and change the scheduling privileges for Python:

pip install Pillow

sudo setcap 'cap_sys_nice=eip' /usr/bin/python3.9

The simulation can then be run with:

python artificial_life.py

By default this should launch a 16x16 simulated Unicorn HAT. This, along with other params of the simulation, can be configured.

You can set a custom size of the simulated HAT by setting:

hat_model = "CUSTOM"

unicorn_simulator = True

hat_simulator_or_panel_size = x, y

It should be noted, though, that there is a bug within the simulator that does not allow the x and y values to be different from each other.

If you have a Unicorn HAT installed you can change the param:

hat_model = "SD"

And for the Unicorn HAT HD:

hat_model = "HD"

Unicorn HAT Mini:

hat_model = "MINI"

LED Panel:

hat_model = "PANEL"

The Unicorn HATs will most likely require the simulation to be run as superuser:

sudo python artificial_life.py

These can all also be passed in via args. To see all available args (the full args list is also available below):

python artificial_life.py -h

The Enhancements/Optimisations

Maintaining Pixel Composure:

I have totally re-worked the way the Lifeforms are written to the screen/panel/unicorn HAT - the way it worked before was:

- Logic loop runs

- Each loop writes an entity position on the display along with RGB values

- At the end of the loop the display writes out and displays all written positions and RGB values at once

This worked fine when things were running smoothly, but the moment the loop started to run slowly (anything below 10 frames per second) it became difficult to see what was actually going on with each individual entity, as the entire screen would change at once.

Now there is an entirely different submodule that is checked out with the main program: Pixel Composer.

Note: please be careful when changing values with Pixel Composer, as it can cause flashing lights, especially on very bright LED panels.

This works by writing the entities into a 'world space' dict which contains their locations and colours (now held as floating point R, G, B values rather than 0-255). This is then copied over into a 'render plane' within Pixel Composer, which runs asynchronously to draw whatever is currently in the render plane to a frame buffer, which is then written out to the screen.

Essentially what this means is that instead of having to wait for the entire process loop to finish before displaying, each lifeform's position will move on the display at the same time it is moved within the loop of all lifeforms. Even if the entire loop takes 10 seconds to process everything, you will still be able to see each movement of a lifeform clearly instead of every single one moving at once.
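As a rough illustration of the world space / render plane idea described above, here is a minimal sketch. All names in it (`world_space`, `move_entity`, `snapshot_render_plane`, `render_frame`) are made up for the example and are not Pixel Composer's actual API, and the asynchronous rendering thread is left out to keep it short.

```python
import copy

# Hypothetical names throughout; this is not Pixel Composer's real API.
WIDTH, HEIGHT = 4, 4
BLACK = (0.0, 0.0, 0.0)

# World space: (x, y) -> float RGB colour of the entity at that position.
world_space = {}


def move_entity(old_pos, new_pos, colour):
    """Logic side: update one entity as soon as it has been processed."""
    world_space.pop(old_pos, None)
    world_space[new_pos] = colour


def snapshot_render_plane():
    """Copy the world space so the renderer never reads a half-updated dict."""
    return copy.copy(world_space)


def render_frame(render_plane):
    """Renderer side: build a full frame buffer from the render plane."""
    return [[render_plane.get((x, y), BLACK) for x in range(WIDTH)]
            for y in range(HEIGHT)]


# One entity moves; a frame rendered right now already shows that move,
# even though the rest of the logic loop has not finished yet.
move_entity((0, 0), (1, 0), (1.0, 0.2, 0.2))
frame = render_frame(snapshot_render_plane())
```

In a real setup `render_frame` would be called repeatedly from a separate thread at the display refresh rate, so each individual move shows up on the next refresh.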

On top of that this also allows for some cool extra effects such as motion blur, lighting and background shaders, such as rain effects and other such funky things.

There is also the full screen shader, which I discovered accidentally and which allows all kinds of cool patterns to be displayed by just passing in a single number:

    class FullScreenPatternShader(ConfigurableShaderSuper):
        def __init__(self, count_number=0, count_number_max=32, count_number_step_up=1, count_number_step_down=1,
                     addition_shader=True, invert_count=True, shader_colour=(0.5, 0.5, 0.5)):
            super().__init__(count_number, count_number_max, count_number_step_up, count_number_step_down, addition_shader,
                             invert_count, shader_colour)

        # found this accidentally; I am not entirely sure how this works, but changing the max number that can be stepped
        # to will result in various cool effects as well as plus/minus stepping and whether the stepping will reset
        # when maxed or count back the other way
        def run_shader(self, pixel):
            if self.addition_shader:
                self.number_count_step_plus()
            else:
                self.number_count_step_minus()

            pixel_alpha = (self.count_number / self.count_number_max) * self.max_float

            shaded_colour = self.blend_colour_alpha(pixel, self.shader_colour, pixel_alpha)

            return shaded_colour

By changing the count_number_max variable along with the shader_colour, all kinds of cool patterns can be made, from still columns that look like the background of a Castlevania game to moving patterns that look like rain.
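One possible way to see why a single number changes the pattern is the standalone sketch below. It does not use the real `ConfigurableShaderSuper` base class: the wrap-around stepping and the linear blend are assumptions made for this example, and `PatternShaderSketch` and `shade_frame` are hypothetical names.

```python
class PatternShaderSketch:
    """Standalone approximation; stepping and blending rules are assumed."""

    def __init__(self, count_max=32, step=1, shader_colour=(0.5, 0.5, 0.5)):
        self.count = 0
        self.count_max = count_max
        self.step = step
        self.shader_colour = shader_colour

    def run_shader(self, pixel):
        # The counter advances once per pixel (not once per frame) and
        # wraps back to zero after passing the maximum (assumed behaviour).
        self.count = (self.count + self.step) % (self.count_max + 1)
        alpha = self.count / self.count_max
        # Plain linear interpolation between the pixel and the shader colour.
        return tuple(p + (s - p) * alpha
                     for p, s in zip(pixel, self.shader_colour))


def shade_frame(shader, width, height, background=(0.0, 0.0, 0.0)):
    """Run the shader over every pixel of a frame, row by row."""
    return [[shader.run_shader(background) for _ in range(width)]
            for _ in range(height)]


# Counter period (8) equals the row width (8): every row gets the same alphas.
still = shade_frame(PatternShaderSketch(count_max=7), 8, 4)
# Counter period (9) does not match the row width: the pattern shifts per row.
drift = shade_frame(PatternShaderSketch(count_max=8), 8, 4)
```

Under these assumptions, a counter period that lines up with the row width gives identical rows (still columns), while any other period shifts the pattern from row to row and from frame to frame, which would read as movement.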

And the pillars:

Also, as noted, it's now using floating point values for colours, which essentially allows for HDR rendering. The lighting shader, for example, wasn't working so well when limited to calculating the lighting within the 0-255 range. See more about why this happens here and here. I imagine my implementation is very flawed and simple compared to a proper implementation, but it at least allows for a better colour/lighting model.

These floating point values are tone-mapped into the 0-1 range and then finally converted from float to RGB for final output to the screen/LEDs. This has given it some really nice looking colours and allows for brightness to be set separately; overall it just looks far prettier.
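To make the tone-mapping step concrete, here is a small sketch. The write-up does not say which tone-mapping operator is used, so the Reinhard operator (x / (1 + x)) is swapped in here purely as an assumption; `tone_map` and `to_rgb8` are hypothetical names.

```python
def tone_map(value):
    """Reinhard operator (an assumed choice): maps [0, inf) into [0, 1)."""
    return value / (1.0 + value)


def to_rgb8(colour, brightness=1.0):
    """Tone-map a float RGB triple, apply brightness, convert to 0-255 ints."""
    return tuple(
        round(tone_map(max(channel, 0.0)) * brightness * 255)
        for channel in colour
    )


# A very bright float channel compresses smoothly towards 255 instead of
# clipping, and brightness is applied as a separate final scale.
full = to_rgb8((0.0, 3.0, 1e6))
dimmed = to_rgb8((3.0, 3.0, 3.0), brightness=0.3)
```

Keeping the lighting maths in unbounded floats and only compressing at the very end is what lets several lights add up without every bright pixel clipping to the same value.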

All of these settings can be modified and added to within the artificial_life.py code itself, overriding the class within the rasterizer.py file.

Shift + F can now be pushed to also pause & un-pause the rendering, while the logic continues on in the background.

Shift + G will also enable/disable gravity during the simulation.

And the Thanos snap is activated now by Shift + T, a quick way to randomly remove 50% of the lifeforms on the board.

More commands will be added with future iterations.

It was made as a separate submodule as I can see this being helpful in other projects, so I will probably do a separate write up for this at some point with further detail and modifications to make it easier to integrate into other projects.

There is a way to bypass Pixel Composer, by passing in an -ff argument into the artificial_life.py main code or by going into config/parameters and setting:

fixed_function = True

This will run faster, but not look as pretty. It will, however, still show each lifeform's movement as soon as it is processed, without having to wait for all lifeforms to be processed in the loop.

There is also now the ability to show the current stats by pressing Shift + S, which will also show the start time of the current simulation.

The original AL code could get very slow and laggy even on an 8x8 Unicorn HAT; with a bunch of optimisations I was able to add functionality and make it run way smoother even on a 16x16 board. There have now been even further optimisations made to allow for faster running on a 64x32 board as well as having new functionality.

A major optimisation was to change how the collision/spawning code works. Previously this worked by:

- Collision detected with another lifeform/edge of display

- New direction chosen randomly

- Check this new direction for collisions

- Repeat until new collision-free direction found or all directions attempted

This worked, but it meant that processing could remain on one lifeform for quite some time, as all cardinal directions were searched for a free location to move to. This led to lag when lifeforms were bunched up in a corner, all looking for collision-free areas around them. Finding a position to spawn a new lifeform had a similar problem.

So I changed it so that the collision re-checks are pushed to the next iteration of the loop. No matter how bunched up a lifeform is, it no longer has to check all directions around it on every single loop, which was causing the slowdown as each lifeform takes up processing time within the loop through all lifeforms on screen.

So now it works like so:

- Collision check based on current movement

- If free position, move to that position

- If not free position, choose a new direction that will be processed the next time the loop reaches this lifeform and move on to process the next lifeform in the queue
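The three steps above can be sketched roughly as follows. This is only an illustration under assumed data structures (a dict per lifeform and a set of occupied positions); `step_lifeform` and `DIRECTIONS` are hypothetical names, not the project's actual code.

```python
import random

DIRECTIONS = [(0, -1), (0, 1), (-1, 0), (1, 0)]  # up, down, left, right


def step_lifeform(lifeform, occupied, width, height, rng=random):
    """Process one lifeform once: move if free, else queue a new direction."""
    x, y = lifeform["pos"]
    dx, dy = lifeform["direction"]
    target = (x + dx, y + dy)
    in_bounds = 0 <= target[0] < width and 0 <= target[1] < height
    if in_bounds and target not in occupied:
        occupied.discard(lifeform["pos"])
        occupied.add(target)
        lifeform["pos"] = target
        return True
    # Blocked: pick a direction to try next time and return immediately,
    # instead of searching every direction during this iteration.
    lifeform["direction"] = rng.choice(DIRECTIONS)
    return False


# A lifeform at (0, 0) heading right is blocked by a neighbour at (1, 0).
blocked = {"pos": (0, 0), "direction": (1, 0)}
cells = {(0, 0), (1, 0)}
moved_first_try = step_lifeform(blocked, cells, 4, 4, random.Random(0))
```

Each call does a constant amount of work, so a tightly packed corner costs no more per lifeform than open space; the trade-off is that a blocked lifeform may take a few loops to find a free direction.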

This means the loop will continue and other lifeforms will be processed, speeding things up nicely and resulting in smoother movement, while also maintaining the lifeforms' ability to collide with and bounce off the edge of the screen/other objects. The bounce functionality is functionally the same and things run faster. Cool.

I also made the DNA chaos code a bit more efficient, so the DNA passed on when breeding is still a mix of the parent lifeforms' DNA but hopefully runs with faster code, reducing lag in very busy areas.

I've also tried to move as much of the code as possible into the one function, 'process', to avoid time lost on function calls. I believe function call overhead can add up, and in a program such as this, the faster the lifeform processing the better. I could be wrong about this one, but so far, along with the other optimisations, this has made things run a lot better, especially on the 64x32 board, which can get fully populated with 2048 lifeforms that all need processing.

I also removed the setting which would allow lifeforms to stack on each other (so more than one lifeform per-pixel) as this was costly and slow, but I may return this feature if I can find a more optimal way of doing it.

Minecraft mode has also been removed, as MC doesn't appear to be packaged with Raspberry Pi OS anymore, and even then the functionality needed a lot of work and improvement. This is something that will be looked into in the future.

I've also added in:

- Mineable resources, which are seen as red squares on the board and contain lots of 'resources' that can be mined, the lifeforms mining from them can keep the resources and use them to construct walls

- Walls that can be built by lifeforms that will remain static unless a sufficiently strong lifeform knocks them down and gains resources, these take resources to build also; so lifeforms can knock down walls and build new ones from the gathered resources. If they aren't strong enough they will bounce off the wall

- Additional momentum and bounciness added, so when gravity is on and a lifeform bounces off something, momentum will be taken into account and also momentum moved from one lifeform to another

- Also I made some changes to how far lifeforms can move until they try to change direction, to make sure they can move within the board space rather than just constantly bouncing off of the walls because their time to move variable is set so high

- Radiation, which affects lifeforms' time to live as well as the chance of random DNA changes when breeding

- Ability for lifeforms to breed but then wait for free space around them, so you can have one stuck with no space to spawn a new lifeform but then when there is free space, spawn offspring; this also works for wall building

- There is now a BaseEntity class which lifeform, resources and walls use as a superclass; this means in future when I add more things to the simulation I can use this superclass and override it where necessary within the child classes

- As mentioned above keyboard commands have been added, such as the ability to cause a Thanos Snap and randomly wipe 50% of the lifeforms off the board (also with a cool fade effect thanks to Pixel Composer). There is also the ability to raise/lower radiation, enable/disable gravity, freeze the rendering and show current stats

- There is a retry function so that when all lifeforms have expired the simulation restarts - I've now added in a session begin datetime stamp that can be seen in the stats. So you can leave a simulation on for ages then come back, see how long the current session has lasted and whether it is still the original session you kicked off

- There is some WIP stuff in the code at the moment where I am trying to work on saving/loading the current state of the simulation but this has proven to be time consuming to get working properly; this will most likely be added in to the next version, but feel free to look at the existing code

- A memory system where a lifeform will breed/mine/win combat, remember the location where that happened and attempt to return to that point. If another event happens at the location it will add 1 to the number of memories at that point, and if nothing happens it will subtract 1 from the number of memories at that point. They will always return to the point with the most positive memories. This is to give the lifeforms some more interesting movement behaviour. Memories are also passed on to the next generation of lifeforms, unless they are 'rebellious', in which case they start with a clean memory; this, along with 'forgetfulness', is determined by the lifeform's DNA

- There is also a 'bad memories' dict variable that is currently unused, but in future there will be a system where lifeforms will try to avoid areas where something bad has happened to them

- Split out the HAT controller and the LED Panel controller code into separate modules away from the screen output code - this is to allow for more modular additions of extra screens/LED displays without having to change anything within the main code/Pixel Composer and only a small change of instantiating a different class within the screen controller
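The memory system described in the list above could be sketched along these lines. The `Memory` class and its method names are hypothetical, and dropping a location once its count reaches zero is an assumption made for the example rather than something the write-up states.

```python
class Memory:
    """Hypothetical sketch of the location-memory behaviour."""

    def __init__(self, inherited=None, rebellious=False):
        # Rebellious offspring start with a clean memory.
        if rebellious or inherited is None:
            self.good = {}
        else:
            self.good = dict(inherited)

    def remember(self, position):
        """A breed/mine/combat-win event happened here: add one memory."""
        self.good[position] = self.good.get(position, 0) + 1

    def disappoint(self, position):
        """Nothing happened on returning here: remove one memory."""
        if position in self.good:
            self.good[position] -= 1
            if self.good[position] <= 0:  # assumed: forget empty locations
                del self.good[position]

    def target(self):
        """Position with the most positive memories, or None if empty."""
        return max(self.good, key=self.good.get) if self.good else None


parent = Memory()
parent.remember((1, 1))
parent.remember((1, 1))
parent.remember((5, 5))
favourite = parent.target()  # (1, 1) has two memories, (5, 5) has one
```

Passing `parent.good` as `inherited` gives offspring the same preferred spots, which is one way the group movement described later could arise.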

The new radiation system adds another layer to the simulation. The radiation settings can be set via args or via the parameters.py file:

initial_dna_chaos_chance = 10

initial_radiation = 0

max_radiation = 90

change_of_base_radiation_chance = 0.001

radiation_dmg_multiplier = 1000

radiation_change = True

The DNA chaos variable sets the % chance that new DNA will be randomly created in the 3 DNA segments when they are passed on to a lifeform's offspring. This is now also affected by the new radiation levels: the higher the radiation, the higher the chance that random DNA will be passed on, based on a function of this initial number and the radiation level.
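A sketch of how the chaos chance might feed into breeding is shown below. The write-up does not give the actual function that combines the initial chance with the radiation level, so `chaos_chance` simply adds the two and caps at 100 as a stand-in assumption; `breed_dna` is likewise a hypothetical name.

```python
import random

MAX_TRAIT_NUMBER = 1000000  # same value as max_trait_number in the parameters


def chaos_chance(initial_chance, radiation):
    """ASSUMED combination: radiation adds to the base chance, capped at 100."""
    return min(100.0, initial_chance + radiation)


def breed_dna(parent_a, parent_b, initial_chance, radiation, rng=random):
    """Build the child's 3 DNA segments from two parents' segments."""
    chance = chaos_chance(initial_chance, radiation)
    child = []
    for seg_a, seg_b in zip(parent_a, parent_b):
        if rng.uniform(0, 100) < chance:
            # Chaos: a brand new random value for this segment.
            child.append(rng.randint(0, MAX_TRAIT_NUMBER))
        else:
            # Otherwise inherit the segment from one of the parents.
            child.append(rng.choice((seg_a, seg_b)))
    return child


# With no chaos and no radiation every segment comes from a parent.
calm_child = breed_dna([1, 2, 3], [4, 5, 6], 0, 0, random.Random(0))
```

Whatever the real combining function is, the important property is the same: more radiation means more segments replaced with random values and so less predictable offspring.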

The max and initial radiation can be set; if radiation changing is active, the level will be randomly chosen between these two settings. The max radiation can be increased and decreased during the simulation with Shift+R and R respectively.

If the random changing radiation setting is not on then these keys will adjust the current radiation rather than the max. Under both settings the current radiation will go up and down along a curve, giving a little bit of variation around the current level.

The change setting is governed by the chance of change variable: on every loop of processing all lifeforms, there is this % chance that the radiation will change (staying within the max radiation setting).

The damage multiplier setting affects how much damage radiation does to lifeforms. Every time a lifeform is processed a calculation is run: with no rads, the life of a lifeform has 1 taken off. However, with radiation this calculation is made:

self.time_to_live_count -= percentage(current_session.radiation * args.radiation_dmg_multi, 1)

Here the current radiation is multiplied by the radiation damage multiplier and used as a % of the normal 1 damage. For example, a radiation of 100 with a multiplier of 1000 gives 100000%, and that percentage of the default 1 is 1000, resulting in that amount of damage to the lifeform.
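The radiation behaviour from the last few paragraphs can be put together in a short sketch. The `percentage` helper here is a guess at what the real one does, based only on how it is called above, and `radiation_damage` and `maybe_change_radiation` are hypothetical names introduced for the example.

```python
import random


def percentage(percent, whole):
    """Assumed helper: return `percent` % of `whole`."""
    return (percent * whole) / 100.0


def radiation_damage(radiation, dmg_multiplier, base_damage=1):
    """Time-to-live removed from a lifeform each time it is processed."""
    if radiation <= 0:
        return base_damage  # no rads: the normal 1 is taken off
    return percentage(radiation * dmg_multiplier, base_damage)


def maybe_change_radiation(current, initial, maximum, change_chance, rng=random):
    """Once per full loop: with change_chance % probability pick a new level."""
    if rng.uniform(0, 100) < change_chance:
        return rng.uniform(initial, maximum)
    return current


# The worked example from the text: 100 * 1000 = 100000 % of 1.
worked_example = radiation_damage(100, 1000)
```

With the default change_of_base_radiation_chance of 0.001 a change is rare on any single loop, but the loop runs often enough that the level can still drift over a long session.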

Using the parameters below, a number of walls, as well as resources for lifeforms to mine, can be spawned in random places on first run, adding a slightly more interesting environment for them to navigate around.

This is where you can witness 'mining facilities', where groups of lifeforms remember where a mining event was and try to return to that point; you see clumps of them sitting around mining resources while others fly around constructing walls.

There is also a setting to enable lifeforms to build their own walls as well as a multiplier - the higher the setting the more often lifeforms will build a wall.

walls = 0

resources = 0

entities_build_walls = True

wall_chance_multiplier = 512

An example of some spawned walls on simulation start:

There is also a full set of params that can be edited to change various things within the simulation; these are found under config/parameters.py:

max_trait_number = 1000000

initial_lifeforms_count = 10

population_limit = 50

max_enemy_factor = 8

logging_level = 'INFO'

initial_dna_chaos_chance = 10

initial_radiation = 0

max_radiation = 90

change_of_base_radiation_chance = 0.001

radiation_dmg_multiplier = 1000

retries_on = False

led_brightness = 0.3

hat_model = "HD"

hat_simulator_or_panel_size = 8, 8

hat_buffer_refresh_rate = 60

refresh_logic_link = True

fixed_function = False

unicorn_simulator = False

radiation_change = True

gravity_on = False

trails_on = False

combine_mode = True

walls = 0

resources = 0

entities_build_walls = True

wall_chance_multiplier = 512

All of these can also be set by passing in args to the program:

    -m MAX_NUM, --max-num MAX_NUM
        Maximum number possible for any entity traits
    -ilc LIFE_FORM_TOTAL, --initial-lifeforms-count LIFE_FORM_TOTAL
        Number of lifeforms to start with
    -s LOOP_SPEED, --refresh-rate LOOP_SPEED
        The refresh rate for the buffer processing, also sets a maximum speed for the main loop processing, if sync is enabled (this is to prevent the display falling behind the logic loop)
    -p POP_LIMIT, --population-limit POP_LIMIT
        Limit of the population at any one time
    -me MAX_ENEMY_FACTOR, --max-enemy-factor MAX_ENEMY_FACTOR
        Factor that calculates into the maximum breed threshold of an entity
    -dc DNA_CHAOS_CHANCE, --dna-chaos DNA_CHAOS_CHANCE
        Percentage chance of random DNA upon breeding of entities
    -shs CUSTOM_SIZE_SIMULATOR [CUSTOM_SIZE_SIMULATOR ...], --simulator-hat-size CUSTOM_SIZE_SIMULATOR [CUSTOM_SIZE_SIMULATOR ...]
        Size of the simulator HAT in pixels; to use pass in '-shs 16 16' for 16x16 pixels (x and y)
    -c, --combine-mode
        Enables life forms to combine into bigger ones
    -tr, --trails
        Stops the HAT from being cleared, resulting in trails of entities
    -g, --gravity
        Gravity enabled, still entities will fall to the floor
    -rc, --radiation-change
        Whether to adjust radiation levels across the simulation or not
    -w WALL_NUMBER, --walls WALL_NUMBER
        Number of walls to randomly spawn that will block entities
    -rs RESOURCES_NUMBER, --resources RESOURCES_NUMBER
        Number of resources to begin with that entities can mine
    -r RADIATION, --radiation RADIATION
        Radiation enabled, will increase random mutation chance and damage entities
    -mr MAX_RADIATION, --max-radiation MAX_RADIATION
        Maximum radiation level possible
    -rm RADIATION_DMG_MULTI, --radiation-multi RADIATION_DMG_MULTI
        Maximum radiation level possible
    -rbc RADIATION_BASE_CHANGE_CHANCE, --radiation-base-change RADIATION_BASE_CHANGE_CHANCE
        The percentage chance that the base radiation level will change randomly.
    -be, --building-entities
        Whether lifeforms can build static blocks on the board
    -wc WALL_CHANCE_MULTIPLIER, --wall-chance WALL_CHANCE_MULTIPLIER
        Whether lifeforms can build static blocks on the board
    -rt, --retry
        Whether the loop will automatically restart upon the expiry of all entities
    -sim, --unicorn-hat-sim
        Whether to use the Unicorn HAT simulator or not
    -hm {SD,HD,MINI,PANEL,CUSTOM}, --hat-model {SD,HD,MINI,PANEL,CUSTOM}
        What type of HAT the program is using. CUSTOM only works with Unicorn HAT Simulator
    -l {CRITICAL,ERROR,WARNING,INFO,DEBUG,NOTSET}, --log-level {CRITICAL,ERROR,WARNING,INFO,DEBUG,NOTSET}
        Logging level
    -sl, --sync-logic
        Whether to sync the logic loop to the refresh rate of the screen
    -ff, --fixed-function
        Whether to bypass pixel composer and use fixed function for drawing (faster, less pretty)

This allows for full customisation of the experience and easier distribution of the code without having to pass in any arguments, while also allowing quick command-line tweaks via the args if necessary.
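The pattern of file-based defaults with command-line overrides can be sketched with argparse as below. The option strings and `dest` names are taken from the help text above, but the parser itself is a cut-down illustration rather than the project's real one, and the `parameters` namespace is a stand-in for config/parameters.py.

```python
import argparse
from types import SimpleNamespace

# Stand-in for config/parameters.py; values copied from the list above.
parameters = SimpleNamespace(
    population_limit=50,
    hat_model="HD",
    hat_simulator_or_panel_size=(8, 8),
    fixed_function=False,
)


def build_parser():
    """Build a reduced parser whose defaults come from the parameters."""
    parser = argparse.ArgumentParser(description="Artificial Life (sketch)")
    parser.add_argument("-p", "--population-limit", dest="pop_limit",
                        type=int, default=parameters.population_limit)
    parser.add_argument("-hm", "--hat-model", dest="hat_model",
                        choices=["SD", "HD", "MINI", "PANEL", "CUSTOM"],
                        default=parameters.hat_model)
    parser.add_argument("-shs", "--simulator-hat-size",
                        dest="custom_size_simulator", nargs="+", type=int,
                        default=list(parameters.hat_simulator_or_panel_size))
    parser.add_argument("-ff", "--fixed-function", dest="fixed_function",
                        action="store_true",
                        default=parameters.fixed_function)
    return parser


# No arguments: everything falls back to the values from the parameters.
defaults = build_parser().parse_args([])
# A few overrides, equivalent to: -p 100 -hm CUSTOM -shs 16 16 -ff
custom = build_parser().parse_args(
    ["-p", "100", "-hm", "CUSTOM", "-shs", "16", "16", "-ff"])
```

Because every default is read from one place, editing the parameters changes the behaviour of a bare run without touching the parser.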

The Results

Bug as a feature

There is something very interesting I have noticed: lifeforms can seemingly produce offspring at random without having to interact with another lifeform. Even one lifeform alone can now spawn into many, and I've seen 'lifeform cannons' and spawners where new lifeforms are fired out from structures or moving clusters.

I think this is related to the wall building code but I'm really not sure at the moment. I will keep looking to find out how this is happening and ensure it stays in as a feature, as it wasn't intentional but it is very cool.

You can see an example of this occurring at 7:40 in the video.

More interesting movement

With the new memory system in place causing lifeforms to return to 'reward areas', the movement has become a lot more varied. Instead of just random patterns that eventually turn into semi-predictable end states, the lifeforms now move and re-arrange themselves in really unexpected ways.

Couple this with the new movement mechanics with gravity on and the random offspring seen above and random 'entity shooters' can be seen, where a little column of lifeforms spawns a stream of new offspring out.

Something similar can be seen with clouds or blobs of lifeforms, dropping streams of offspring out as they move around. It's quite similar to some of the creatures seen in Conway's Game of Life, which this project of course shares some similarities with.

As the 'memories' are passed down from generation to generation you can witness mass groups of lifeforms all moving around together with the occasional 'rebel' leaving the mass and heading off on its own journey.

There's an example of this at 8:10 in the video and some very interesting movement at 10:35.

Hitting the wall

When starting a simulation with walls in place it can really change where the lifeforms move and can form separate areas where they become walled off or have to navigate around the structures. The lifeforms will also knock down the walls if they are strong enough, which adds another layer of interesting interactions with the environment.

When gravity is switched on it can cause lifeforms to fall and spill down over the walls, which results in a liquid-like effect, they can also get caught in 'bowls' which is cool to see. This is something I may expand on in future; the addition of passive lifeforms acting as a liquid within the simulation.

When you have lifeforms able to build walls and resources for them to use, you can see clumps of lifeforms around the resources mining away; due to the memory system they will try to return to these mining points. As mentioned before, this can cause dynamically occurring mining facilities.

The walls the lifeforms construct can make some interesting patterns on the board which, much like when walls are generated at the beginning, cause some interesting movements and interactions. Especially when gravity is switched on; I've noticed that they will create things resembling buildings and the lifeforms will sit inside these like little apartments.

The new wall and resource system is very cool and adds a new layer of interactions and options to the simulation - another cool thing is it can leave behind 'ruins', so when all lifeforms have expired all that will remain of their time on the board is the walls they constructed - like the remains of a civilisation.

You can see an example of walls with gravity active at 4:13 in the video.

Fallout 23

The radiation system gives another optional level to the lifeforms. With the radiation now affecting the passing on of DNA as well as the lifetime of each lifeform, the simulation is less predictable than it was before.

Previously, all the lifeforms would eventually find a point where their configurations were good enough to breed and fill up the entire board, and the simulation would stay there. But now they have to compete with the changing rad levels, so you can witness the number of lifeforms rising and falling in waves.

The ability to change the parameters of the radiation has a cool effect as well - setting it really high and putting retries on will result in lots of extinct lifeforms, but eventually the right combination will spawn and start to show interesting patterns as they try to evolve to beat the rad level.

Also with the radiation level able to be set within a simulation using keyboard commands the number of lifeforms and evolutionary pressures can be impacted in real-time, which adds some user interactivity with the simulation and reduces the predictability of each simulation.

There is an example of high radiation reducing all lifeforms down to a minimum at 7:10 and 8:58 and about 10 seconds prior to that you can see a Thanos snap fade with the shaders.

The Future

As well as utilising more advanced hardware in the future I also would like to find a way to use the GPU onboard the Pi/PC to accelerate some of the shader functions such as the calculations - if not entirely change the way entities are rendered out by using OpenGL or something similar.

This would be a massive change to the rendering subsystem but would vastly increase the speed things can be rendered and thus allow for the program to simulate faster. The extra hardware would also help the main logic loop run faster as this is where things get slower than the shader tech when there are many lifeforms all colliding with each other.

I will also be grabbing a 64x64 LED panel at some point for an Artificial Life 64, and then by the time we reach the confusingly named Artificial Life 3 (maybe I need to look into a less generic name) I am hoping to have enough CPU/GPU power and software optimisations to be able to grab a 128x128 LED panel or beyond and get some seriously advanced simulation happening. The more space the lifeforms have to move around, the more interesting things seem to happen, especially coupled with the extra features.

On top of this I am looking into making a 3D version using an LED cube. This will take some doing with adding a Z axis in, but it would allow for some cool effects and also maybe some additional effects for the 2D versions. For instance, within Pixel Composer, Z axis calculations can be added into lighting etc. So that should be interesting; it will take a toll on the CPU, however.

There are also optimisations that I could make around the hat_controller.py module; in terms of how it handles initialising the simulator and the LED hardware - and if these aren't available. At the moment it works fine when no h/w is found and reverts to the simulator but it is a little hacky.

I will also finish the save/load state system and add in a fourth dimension to the simulation (time); I'd like to find a way to be able to rewind the simulation somehow. I have no idea how I'd do this yet, but it is something I will definitely look into.

When I have some h/w acceleration and more optimisations I want to also try either a gigantic simulation using the Unicorn HAT simulator on a large display (128x128 or larger) or by using huge LED Panels. The extra freedom of movement and room to evolve will really make for some interesting outcomes.

Most likely I will make Artificial Life 64 next year, so keep an eye out in 2024 for the sequel! Also keep an eye out for my next Cyberware project which will be finally on the way as my Cyberpunk jacket finally came through; so I can make the video at last!

It will be late and buggy, like the game.

Exciting.
