New Era Farming with TensorFlow on Lowest Power Consumption

About the project

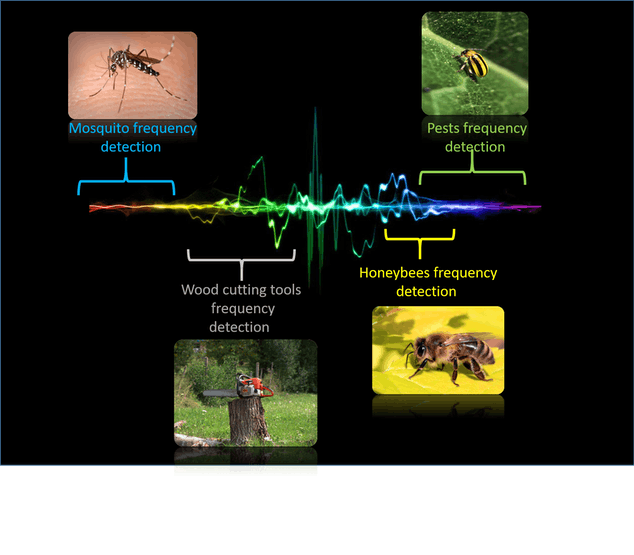

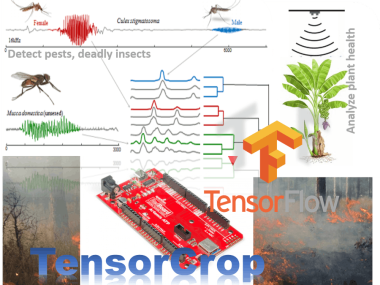

Using ML to detect pests, mosquitoes and tree cutting through audio analysis, and to predict fires, the need for greenhouse adaptation, and plant growth.

Project info

Difficulty: Moderate

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

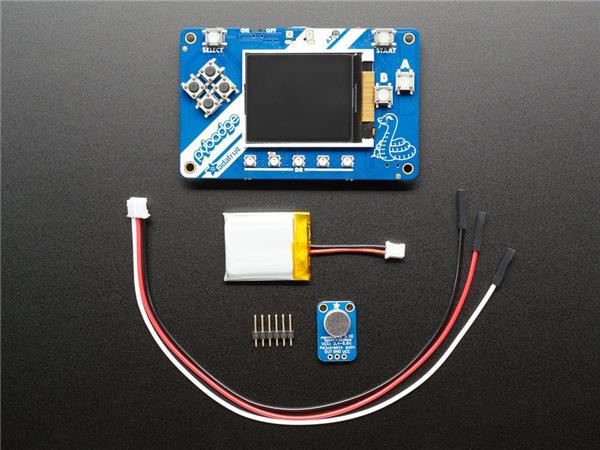

Items used in this project

Hardware components

Software apps and online services

Solder Wire, Lead Free

Drill, Screwdriver

Tape, Double Sided

Plier, Long Nose

Audacity

Google Colab

TensorFlow

Arduino IDE

Story

This project demonstrates how to build a device using TensorFlow and the Artemis module to address many of the problems faced by rural and agricultural communities anywhere. The device uses machine learning to check overall plant health, predict and protect against extreme climate events, adapt a greenhouse automatically, and detect deadly disease-spreading vectors or illegal logging of forests through audio analysis. The project also shows how much more insight we can gain about a farm just by collecting and using the data from our sensors.

I was inspired to make this project by news such as the Australian wildfires, India's GDP falling due to faulty agricultural practices, locust swarms rapidly damaging crops in East Africa, Pakistan and many other nations, mosquitoes breeding at an alarming rate, and neglected tropical diseases. I felt a call to act as an active member of this innovative, committed community.

The most important part of this project was collecting data efficiently and patiently. Once the data is collected, the job becomes a lot easier (you are bound to fail if you don't collect correct data to feed your hungry ML frameworks). For data collection I used my past project, which simply gathers data from the sensors and sends it to a backend over the Sigfox protocol; here I am going to train my device on that data. Here is the link to my past project: https://www.hackster.io/Vilaksh/sigfy-sigfox-farm-yield-with-crop-and-health-monitoring-3d8116 . Try to understand the data collection part first.

The backbone of this project is that you need to tune your device after every stage. So let's get started, and enjoy learning with machine learning.

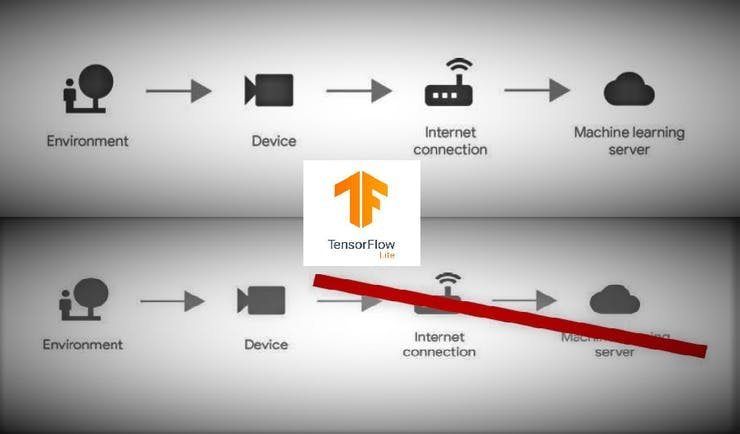

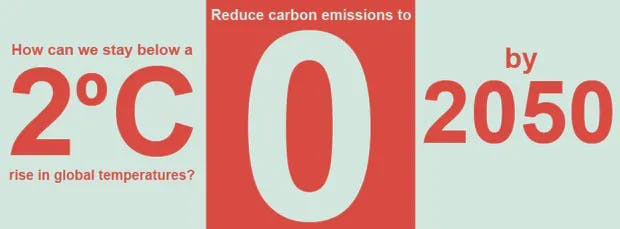

Technical Overview: I still love my village, though we have shifted to a modern city. The freshness of the village still lifts me out of any pensive mood. But because of pollution all around, we suffer from bad health, and whatever crops we grow also have some undesired effects on our bodies. So it is important to look after those crops and to catch early signs of disease in plants as well as humans, without using complex, power-hungry devices that need a lot of maintenance and are far beyond the reach of poor farmers and villagers. So I decided to use edge devices capable of running ML with low latency and almost no carbon footprint.

The true machine would run without a heavy computing architecture and internet.

Using TensorFlow on Edge devices can help to reduce global carbon emission and save electricity.

Background: Theme: Nature

1) Farm Analysis:

With a growing population, a sustainable method of farming is important. Previously we only played with sensors, but now with TensorFlow we can not only sense but also analyse, predict and take action. Using all the collected data, we will find abnormalities in crop growth, photosynthesis rate and climate extremes, and detect the need for smart greenhouse adaptation. This step is meant to stop the competition among farmers to use excess fertilizers, pesticides and insecticides to boost production. With correct farm analysis, the machine would suggest to the farmer when to use chemicals on the farm, saving money and effort and reducing environmental degradation. You can check this website for more problems faced by my country's agricultural community.

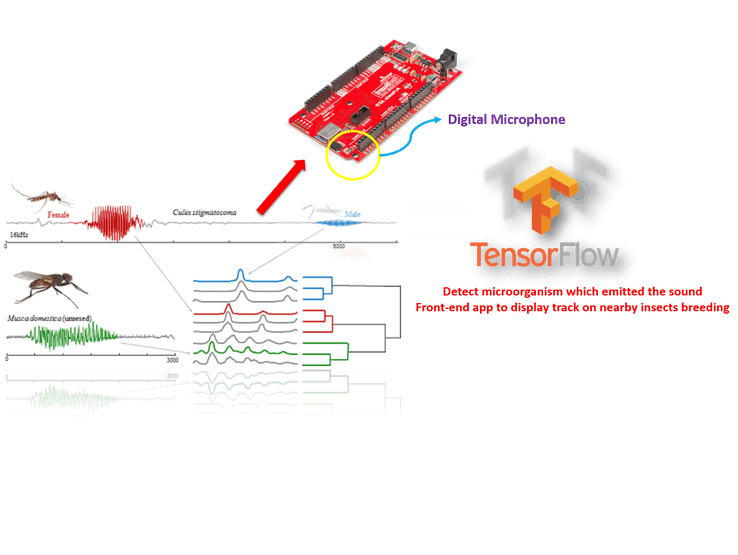

2) Detection of certain beneficial and harmful organisms using audio analysis:

Flowers can hear buzzing bees—and it makes their nectar sweeter

Farmers use lots of chemicals to boost their farm yield, but we tend to forget that because of the excessive use of pesticides, insecticides and inorganic manures, cross-pollinating agents such as arthropods (90% of living organisms found on earth are insects) are no longer attracted to those plants, or die from excessive chemical exposure and pollution. More than 2,000 pollinating insects are extinct by now, and only 500 are left, which will also go extinct if not protected.

We also need to detect crop diseases as early as possible to stop them from spreading across the farm. To solve this, I thought: why not tell the farmer to use chemicals only when there are bad growth patterns or harmful pests? Nowadays farmers spray chemicals needlessly even when there is no disease, killing useful insects like honeybees. The device will also detect certain pollinating agents and how frequently they visit the fields; based on that observation it can advise the farmer on planting ornamental plants. It can also detect large animals that destroy crops, just from their voice and its frequency. For now I have trained my device to recognise the sounds of several types of mosquito (Aedes, Culex and Anopheles) and of honeybees. I will add crickets, locusts and some other pests later, since I currently have only a very small dataset for them. The point is that at the first sign of any pest, the farmer or the government can take action before it spreads everywhere, and the user knows exactly which device the signal came from. Read this article: https://www.aljazeera.com/news/2020/01/200125090150459.html

If the above problem is not solved, the whole world will be in the midst of a food crisis. "East Africa locust outbreak sparks calls for international help"

If we get to know which areas are more prone to dangerous breeding of mosquitoes we can easily render assistance to those people.

A man walks through a swarm of desert locusts flying over grazing land in Lemasulani village, Samburu county, Kenya. The plague is about to destroy every crop and spread through the whole continent.

3) Stopping deforestation :

There is a lot of illegal tree cutting, so my device would alert the concerned authorities using sound analysis; in particular, it would detect the tone and frequency of wood-cutting tools in operation.

Illegal cutting of trees leading to more air pollution.

4) Predicting wildfires and adapting for extreme situations:

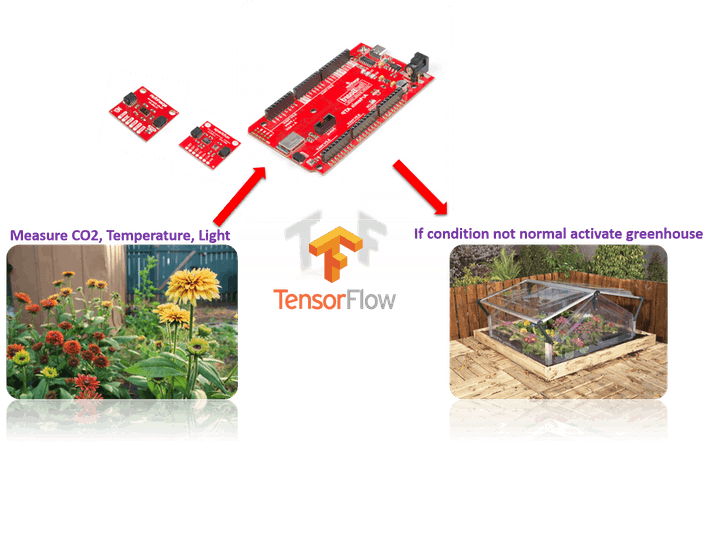

If we can analyse the data coming from environmental and gas sensors, we can predict wildfires. The device can also learn to take action when the plants do not get optimum temperature and light, by activating greenhouse mode.

Predicting wild fires beforehand.

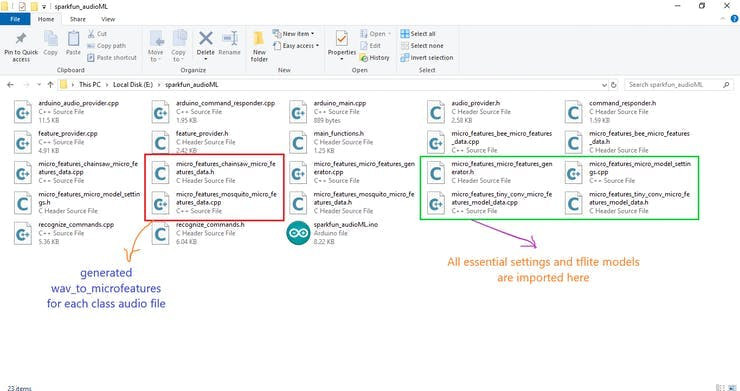

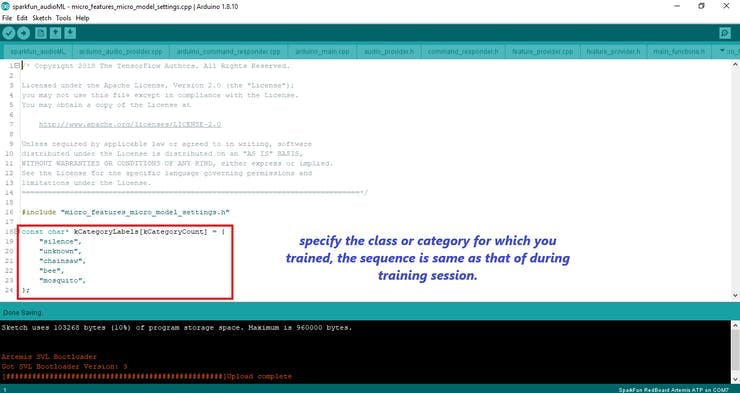

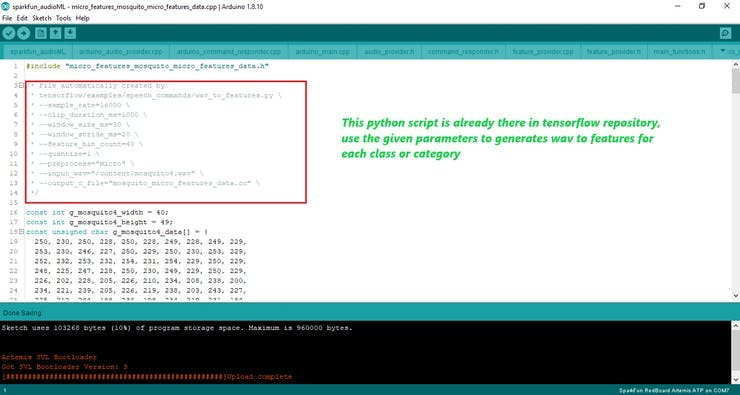

[Note: Please look at every image in this section carefully; each image contains critical data required to run the model successfully.]

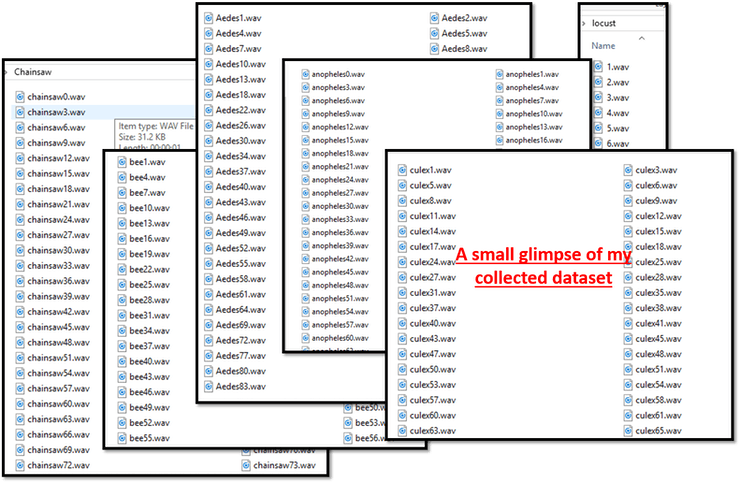

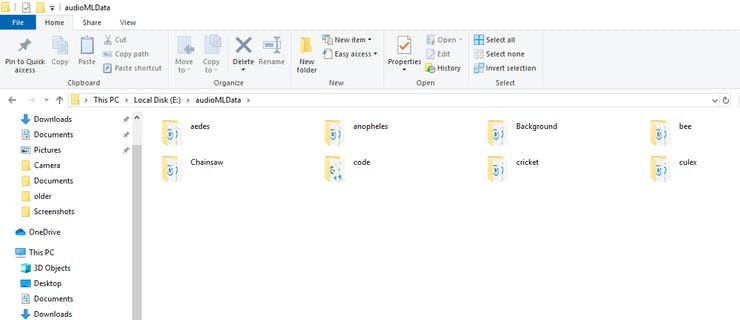

Let's go!

Audio Classification:

Step 1: Collecting the required dataset for audio analysis:

I got audio files of different kinds of mosquito wing beats and of honeybees from a Kaggle dataset; you can download them from there too. The dataset is big, but we don't need that much data since our device memory is small, so we will limit ourselves to 200 audio files per class, each a one-second utterance. You can get audio for chainsaws, locusts and crickets in the same way. You can also record your own data if you have a silent room with insects; that would be more accurate and much more exciting, but I wasn't able to do so because of my stressful school exams.

Note: If we load the downloaded audio directly for training, it won't work, because the microphone characteristics differ and the device won't recognize anything. So once the files are downloaded, we need to record them again through the Artemis microphone, so that we train on the same kind of data that will be used for running inference. So let's configure the Arduino IDE for the Artemis RedBoard ATP; see the link below for that.

https://learn.sparkfun.com/tutorials/hookup-guide-for-the-sparkfun-redboard-artemis-atp

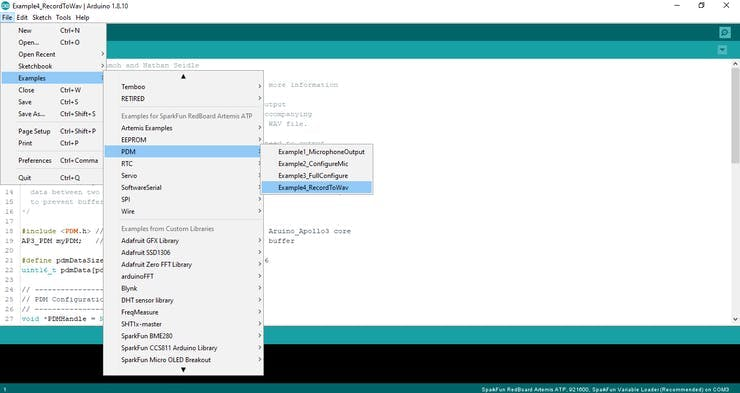

Select the board as Artemis ATP, then open File->Examples->Sparkfun Redboard Artemis Example->PDM->Record_to_wav.

Along with the code comes a Python script which you need to run to record audio from the on-board mic. This is necessary because the audio files came from different mics, so the board might not recognize the frequencies accurately and might treat the sound as noise.

(Pro tip: While recording, vary the air column by slowly moving the sound source back and forth, simulating a real insect coming near the mic, to get better results. I tried this myself and it increased the accuracy.)

    #!/usr/bin/env python3
    """
    Author: Justice Amoh
    Date: 11/01/2019
    Description: Python script to stream audio from Artemis Apollo3 PDM microphone
    """
    import sys
    from datetime import datetime
    from time import sleep

    import matplotlib.pyplot as plt
    import numpy as np
    import serial
    from scipy.io import wavfile
    from serial.tools import list_ports

    # Controls
    do_plot = True
    do_save = True
    wavname = 'recording_%s.wav' % datetime.now().strftime("%m%d_%H%M")
    # Runtime in frames (1 frame = 1/10 s). The default of 50 is 5 seconds;
    # set it according to your audio duration (I set it to 4 minutes).
    runtime = 50

    # Find Artemis Serial Port
    # ('cu.wchusbserial' is how the port shows up on macOS; adjust for your OS)
    ports = list_ports.comports()
    try:
        sPort = [p[0] for p in ports if 'cu.wchusbserial' in p[0]][0]
    except Exception:
        print('Cannot find serial port!')
        sys.exit(3)

    # Serial Config
    ser = serial.Serial(sPort, 115200)
    ser.reset_input_buffer()
    ser.reset_output_buffer()

    # Audio Format & Datatype
    dtype = np.int16                    # Data type to read data
    typelen = np.dtype(dtype).itemsize  # Length of data type
    maxval = 32768.                     # 2**15, for 16-bit signed

    # Plot Parameters
    delay = .00001             # Use 1us pauses - as in matlab
    fsamp = 16000              # Sampling rate
    nframes = 10               # No. of frames to read at a time
    buflen = fsamp // 10       # Buffer length
    bufsize = buflen * typelen # Resulting number of bytes to read
    window = fsamp * 10        # Window of signal to plot at a time, in samples

    # Variables
    x = [0] * window
    t = np.arange(window) / fsamp

    #---------------
    # Plot & Figures
    #---------------
    plt.ion()
    plt.show()

    # Configure Figure
    with plt.style.context('dark_background'):
        fig, axs = plt.subplots(1, 1, figsize=(7, 2.5))
        lw, = axs.plot(t, x, 'r')
        axs.set_xlim(0, window / fsamp)
        axs.grid(which='major', alpha=0.2)
        axs.set_ylim(-1, 1)
        axs.set_xlabel('Time (s)')
        axs.set_ylabel('Amplitude')
        axs.set_title('Streaming Audio')
        plt.tight_layout()
        plt.pause(0.001)

    # Start Transmission
    ser.write(b'START')  # Send Start command (bytes are required in Python 3)
    sleep(1)

    for i in range(runtime):
        buf = ser.read(bufsize)                # Read audio data
        buf = np.frombuffer(buf, dtype=dtype)  # Convert to int16
        buf = buf / maxval                     # Convert to float
        x.extend(buf)                          # Append to waveform array

        # Update Plot lines
        lw.set_ydata(x[-window:])
        plt.pause(0.001)
        sleep(delay)

    # Stop Streaming
    ser.write(b'STOP')
    sleep(0.5)
    ser.reset_input_buffer()
    ser.reset_output_buffer()
    ser.close()

    # Remove initial zeros
    x = x[window:]

    # Helper Functions
    def plotAll():
        t = np.arange(len(x)) / fsamp
        with plt.style.context('dark_background'):
            fig, axs = plt.subplots(1, 1, figsize=(7, 2.5))
            lw, = axs.plot(t, x, 'r')
            axs.grid(which='major', alpha=0.2)
            axs.set_xlim(0, t[-1])
            plt.tight_layout()
        return

    # Plot All
    if do_plot:
        plt.close(fig)
        plotAll()

    # Save Recorded Audio
    if do_save:
        wavfile.write(wavname, fsamp, np.array(x))
        print("Recording saved to file: %s" % wavname)

Once the audio is recorded, you can use any audio splitter to split the audio into files of 1 second each. I used the piece of code below in a Jupyter notebook to do this.

from pydub import AudioSegment

from pydub.utils import make_chunks

myaudio = AudioSegment.from_file("myAudio.wav" , "wav")

chunk_length_ms = 1000 # pydub calculates in millisec

chunks = make_chunks(myaudio, chunk_length_ms) #Make chunks of one sec

#Export all of the individual chunks as wav files

for i, chunk in enumerate(chunks):

chunk_name = "chunk{0}.wav".format(i)

print "exporting", chunk_name

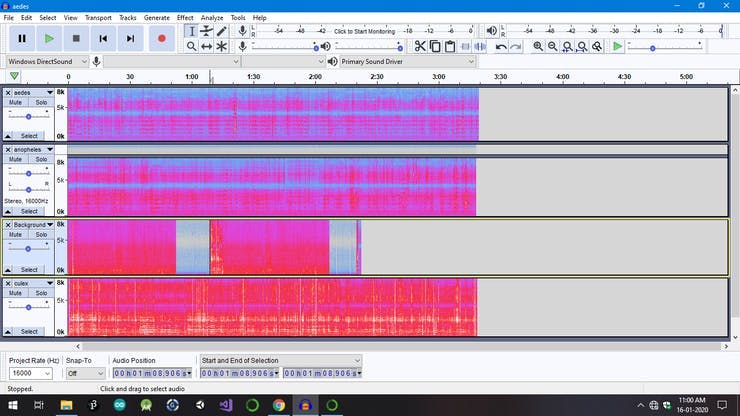

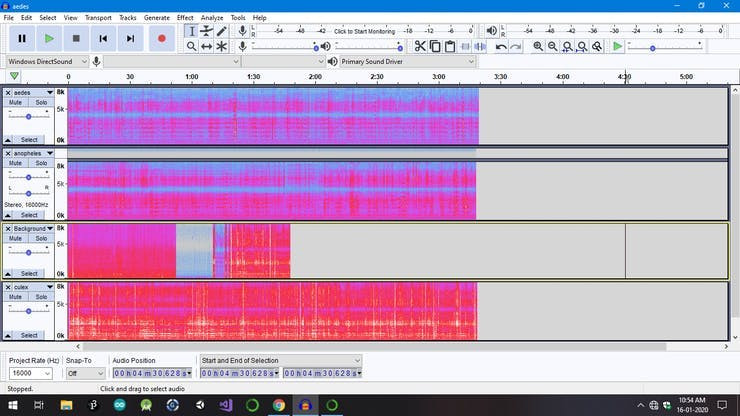

chunk.export(chunk_name, format="wav")I used Audacity to clean up my downloaded audio files before I slice them up, also there are very awesome features which you can use to know whether you audio is pure or not, audio spectrogram.
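The numbers in the recording and slicing steps are easy to get wrong, so here is a minimal sketch of the arithmetic. It assumes the 16 kHz sample rate and 1/10-second frames used by the recording script; the function names are made up for this illustration and are not part of any library.

```python
SAMPLE_RATE = 16000      # samples per second, as in the recording script
FRAMES_PER_SECOND = 10   # the script reads audio in 1/10-second frames


def frames_for_duration(seconds):
    """Value to use for `runtime` to record the given number of seconds."""
    return int(round(seconds * FRAMES_PER_SECOND))


def full_chunks(total_samples, chunk_seconds=1.0, sample_rate=SAMPLE_RATE):
    """Return (start, end) sample indices of every complete chunk.

    A trailing piece shorter than chunk_seconds is dropped so that every
    training clip has the same length.
    """
    chunk_len = int(chunk_seconds * sample_rate)
    count = total_samples // chunk_len
    return [(i * chunk_len, (i + 1) * chunk_len) for i in range(count)]


print(frames_for_duration(5))                  # 50 frames for 5 seconds
print(frames_for_duration(4 * 60))             # 2400 frames for 4 minutes
print(len(full_chunks(4 * 60 * SAMPLE_RATE)))  # 240 one-second clips
```

If the last exported chunk turns out shorter than one second, it is probably safest to leave it out of the training set so that all clips have equal length.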

Audacity used for deleting all those areas in audio where there is noise or not pure insect sound.

Once the audio files are sliced up, you are ready to train on them for your Artemis. However, you need to tweak things to make it work well, because the data might contain a lot of noise from the enclosure or the working environment. I therefore recommend also training a background class, so that the device works even when there is constant peculiar noise. The background class contains all the audio segments trimmed out in Audacity, plus some distinct noises.
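Since the device memory is small, each class is limited to about 200 clips. The sketch below shows one possible way to cap every class at the same size in a reproducible way. The helper name, the file names and the seed are assumptions for this example only.

```python
import random


def cap_per_class(files_by_label, limit=200, seed=0):
    """Pick at most `limit` files per label, chosen reproducibly at random."""
    rng = random.Random(seed)
    selected = {}
    for label, files in files_by_label.items():
        files = sorted(files)  # stable order before sampling
        if len(files) > limit:
            files = sorted(rng.sample(files, limit))
        selected[label] = files
    return selected


# Made-up file lists standing in for the sliced one-second clips.
dataset = {
    "bee": ["bee_%03d.wav" % i for i in range(350)],
    "chainsaw": ["chainsaw_%03d.wav" % i for i in range(120)],
    "background": ["background_%03d.wav" % i for i in range(200)],
}
capped = cap_per_class(dataset)
print({label: len(files) for label, files in capped.items()})
```

Classes that already have fewer clips than the limit are kept as they are.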

All collected audio files are here in this folder.

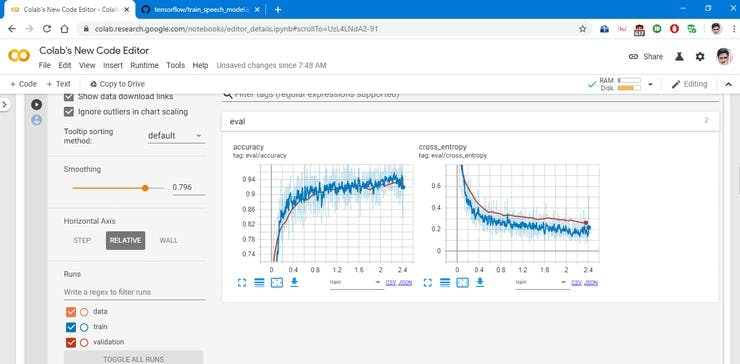

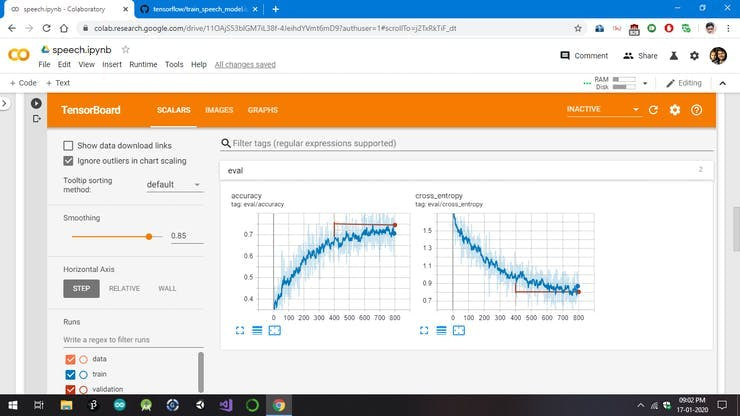

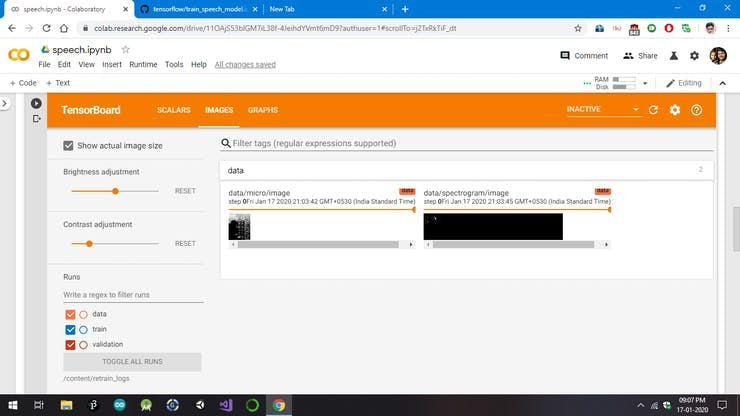

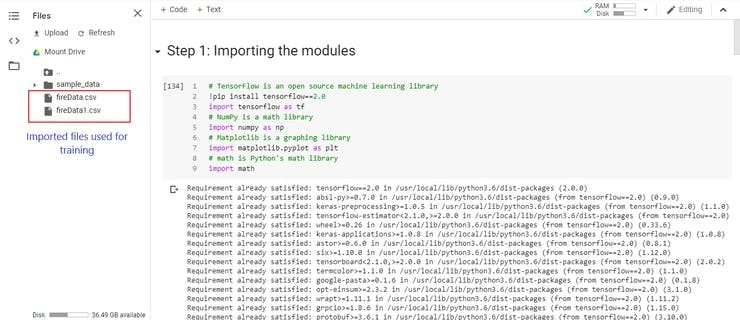

For training I used Google Colab. Below are images of the full training process. You need to upload these files to the Colab notebook and train on a GPU; for me it took nearly 2 hours. I had to train three times because the notebook kept disconnecting due to my slow internet connection. So I first trained on a small dataset with only bee, mosquito (no genus or breed classification) and chainsaw, and after that I trained on the whole dataset twice and luckily succeeded.

Step 2: Training the audio data

Audio Training #1: Labels = mosquito, bee, chainsaw

Starting the training and its visualization on TensorBoard.

Process completed, 18,000 training steps completed.

Spectrogram created for our model

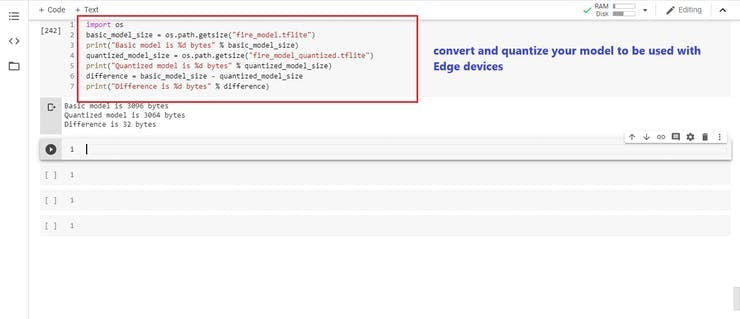

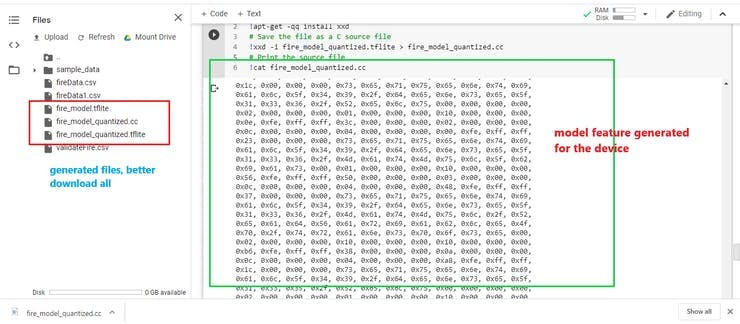

Once the training is complete, we need to freeze the model and convert it into a TensorFlow Lite model to be used on the edge device. I will attach the code along with all required comments.

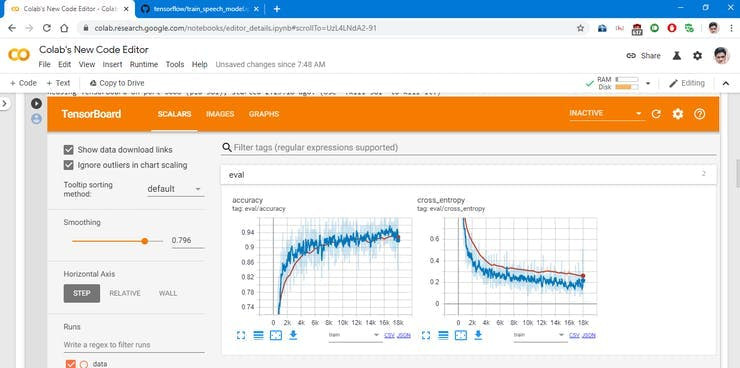

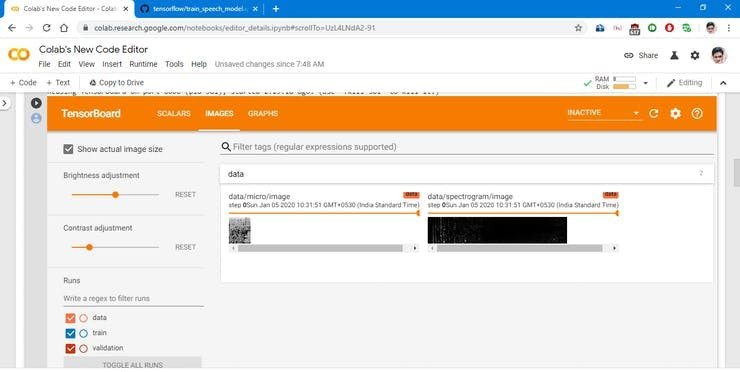

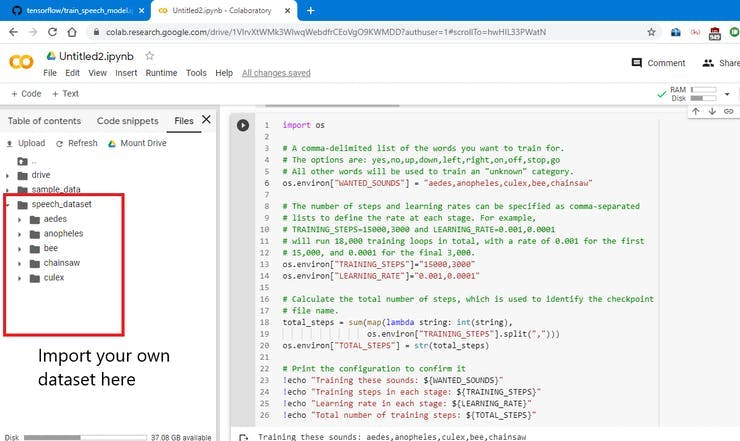

Audio Training #2: Labels = aedes, culex, anopheles, bee, chainsaw

The same goes for every training run: import your own dataset into any folder, but remember its path and name.

Start of the training

Spectrogram created

Download all the files generated in the above steps. You also need to generate a micro-features file for every audio class; I will explain this when we code the device. You can also read the TensorFlow Lite for Microcontrollers documentation for more information.

Data Classification:

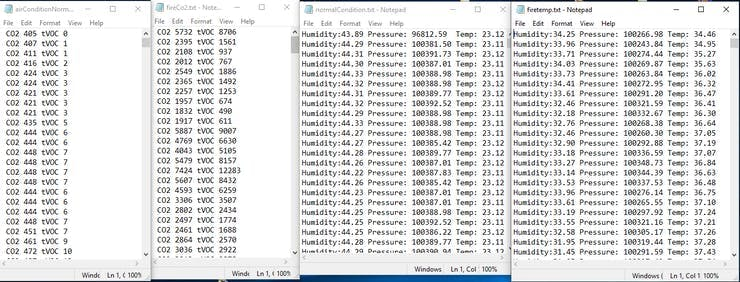

Step 1: Collecting sensor data for both desired and undesired behaviors

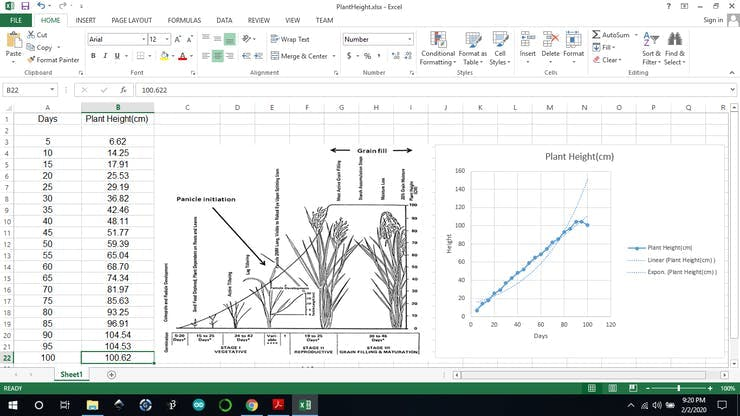

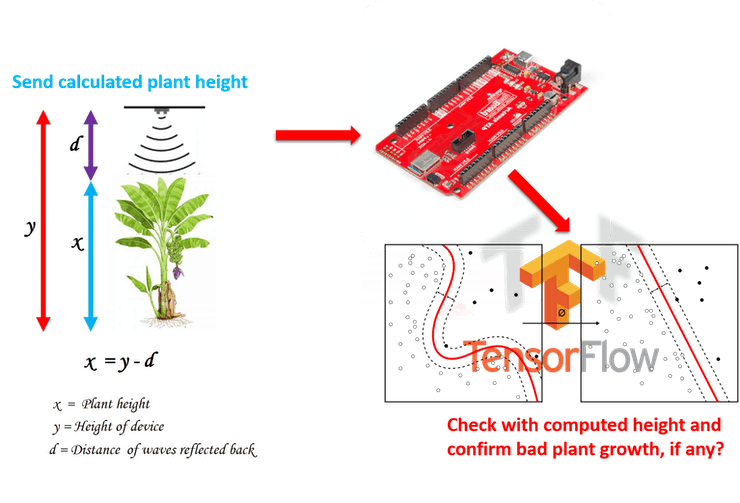

1) Plant height: I used my past project to measure rice plant height after germination, but that was not sufficient because I could not keep my device on for long, as there is no Sigfox connectivity in India. So I took whatever readings I got and used Excel's statistical functions and regression to estimate all the other points, then added some noise. I am sorry for having such a small dataset for ML, but thankfully it suits my application. If I someday make this a business product, I will expand my dataset by generating synthetic data.
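The same idea can be sketched outside Excel. The example below assumes a straight-line least-squares fit, which may not be the exact regression used in the spreadsheet, and the readings, noise level and function names are invented for illustration.

```python
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fill_heights(days, slope, intercept, noise_cm=0.3, seed=42):
    """Estimate a height for each day and add small Gaussian noise."""
    rng = random.Random(seed)
    return [max(0.0, slope * d + intercept + rng.gauss(0.0, noise_cm))
            for d in days]


# Made-up measurements: day number and plant height in cm.
measured_days = [1, 5, 10, 15]
measured_cm = [2.0, 6.0, 11.0, 16.0]

slope, intercept = fit_line(measured_days, measured_cm)
synthetic = fill_heights(range(1, 16), slope, intercept)
print(slope, intercept)
print([round(h, 2) for h in synthetic])
```

A fixed seed keeps the generated noise reproducible, so the same synthetic dataset can be rebuilt later.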

I tried to keep the data close to standard data, but I found that I still needed to normalize all the values.

After normalization of data values

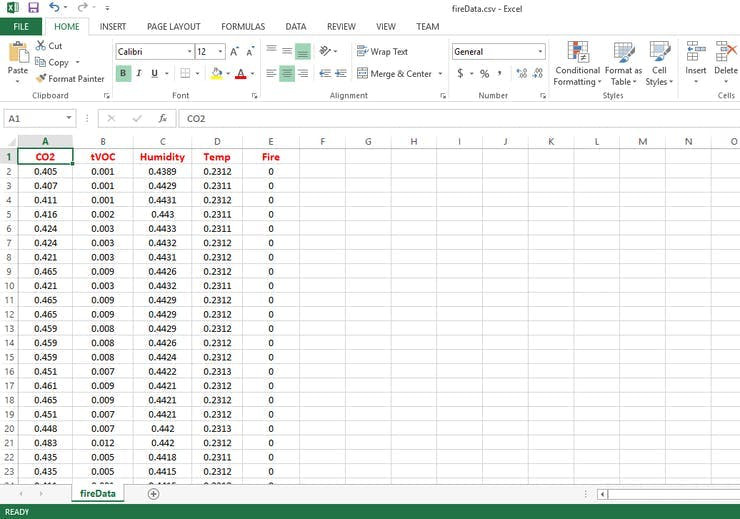

2) Fire prediction: I used the Qwiic environmental combo sensor to collect normal readings as well as readings in a fire situation. For fire we need humidity, temperature, tVOC and CO2 readings. So basically we are making a classification model using a regression technique to make predictions. I used the sensor's example code to take all the readings. Detecting wildfires is an essential feature.

Save all the readings in a notepad; later we will port them to a CSV file.

The values are normalized so that they work well for training; deep learning works best when data is normalized. The 'Fire' column is the label, either 0 or 1.
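As a rough illustration of the normalization step, the sketch below uses min-max scaling to bring every column into the range 0 to 1. Min-max scaling is an assumption here (the write-up does not say which method was used), and the sample readings are invented.

```python
def min_max_normalize(rows):
    """Scale every column of `rows` to the range 0..1.

    Returns the scaled rows plus the (min, max) of each column, which must be
    reused later so that live readings are scaled the same way.
    """
    columns = list(zip(*rows))
    bounds = [(min(c), max(c)) for c in columns]
    scaled = [scale_reading(row, bounds) for row in rows]
    return scaled, bounds


def scale_reading(reading, bounds):
    """Apply stored training bounds to one sensor reading."""
    return [(v - lo) / (hi - lo) if hi > lo else 0.0
            for v, (lo, hi) in zip(reading, bounds)]


# Made-up rows: humidity (%), temperature (C), tVOC (ppb), CO2 (ppm).
readings = [
    [60.0, 25.0, 10.0, 400.0],
    [20.0, 65.0, 900.0, 2400.0],
    [40.0, 45.0, 455.0, 1400.0],
]
scaled, bounds = min_max_normalize(readings)
print(scaled)
print(scale_reading([30.0, 55.0, 600.0, 2000.0], bounds))
```

The stored bounds matter as much as the scaled data: readings taken on the device have to be scaled with the same minimum and maximum values that were used for training.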

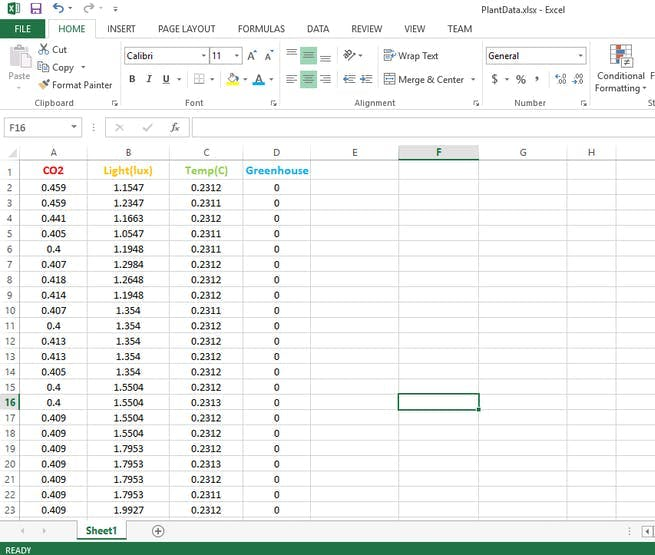

3) Greenhouse Adaptation: I used the Qwiic VCNL4040 module to detect ambient light levels and the environmental combo sensor for temperature and CO2 sensing. Based on the data, the device can predict when to switch to greenhouse mode to save the crops. It can be further optimized to protect the crop from hailstorms or heavy snowfall.

I made up some imaginary data which is close to real data. 'greenhouse' is the label: 0 means greenhouse adaptation is not needed and 1 means it is needed.

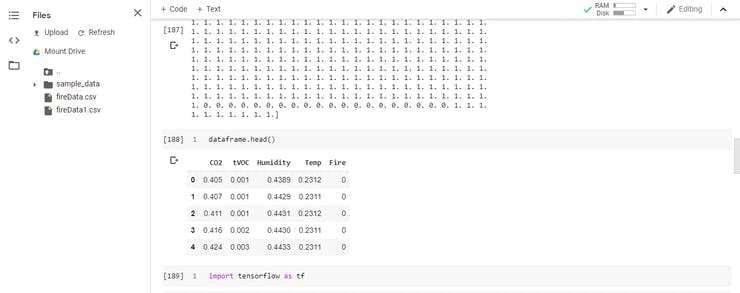

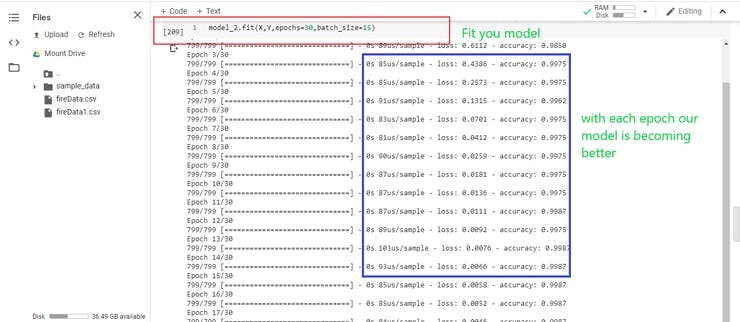

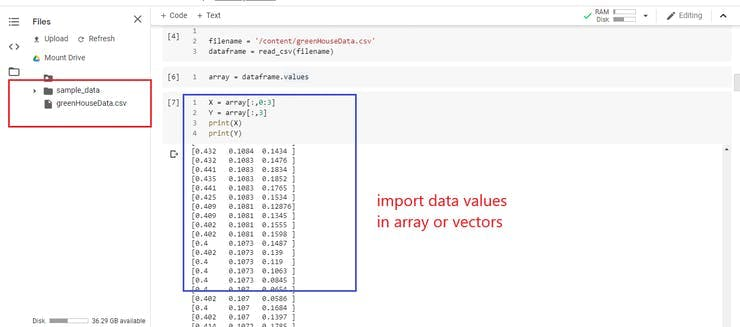

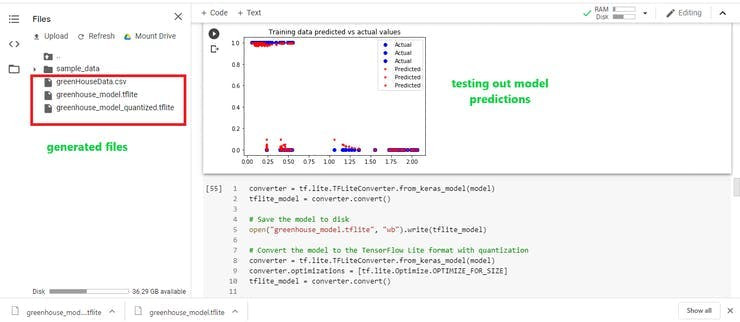

Step 2: Training the data using deep learning

Never start any training without normalization, or you will never see your model improve its accuracy. Below are some images of my training process; they are enough for anyone to follow what is happening during training.

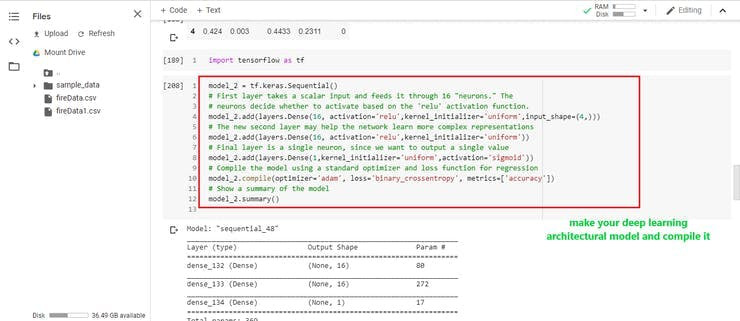

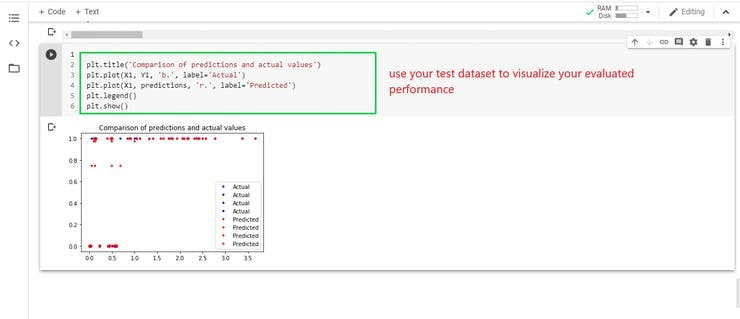

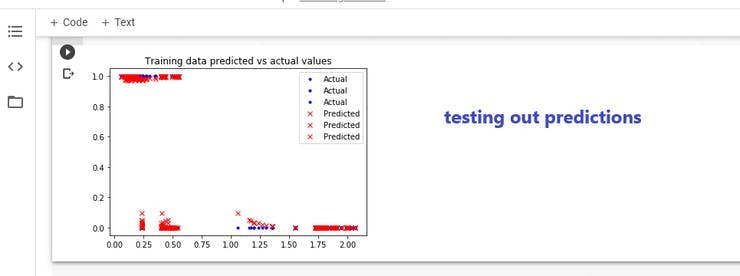

Training #1: Fire prediction

I used the 'adam' optimizer and 'binary_crossentropy' loss; they worked better than the other methods I tried, but you can use whatever suits your data. The same training steps were followed for the other datasets. Sigmoid is used as the activation function for the output layer because it maps the output to a value between 0 and 1, which suits binary predictions.
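To make the roles of the sigmoid output and the binary cross-entropy loss concrete, here is a small pure-Python sketch of both formulas. It only illustrates the math; it is not the training code, and the example values are invented.

```python
import math


def sigmoid(z):
    """Squash a real number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0, 1, 0]                  # 1 = fire, 0 = no fire
good = [0.9, 0.1, 0.8, 0.2]            # confident and correct
bad = [0.2, 0.9, 0.4, 0.7]             # mostly wrong

print(sigmoid(0.0))                    # 0.5, the decision boundary
print(binary_crossentropy(labels, good))
print(binary_crossentropy(labels, bad))
```

The loss is small when the predicted probabilities agree with the labels and grows quickly for confident wrong answers, which is why it pairs well with a sigmoid output for a 0/1 label such as 'Fire'.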

Check whether your arrays or vectors were loaded from the file.

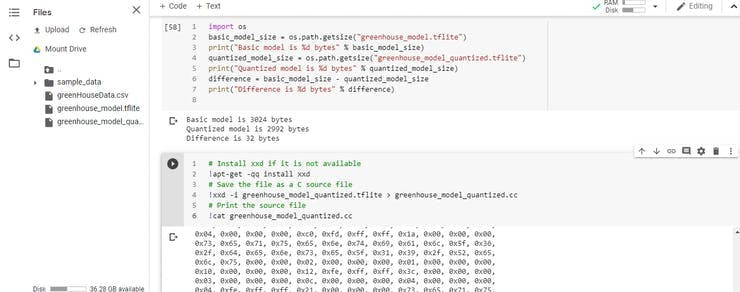

Training #2: Greenhouse adaptation prediction

The same neural network configuration was used as for the fire prediction model. Based on the relevant sensor data, the output prediction tells the user whether the plants are getting enough CO2, light and warmth; if not, the device can take action to switch to greenhouse mode (I will explain how when we code the device).

Training #3: Plant growth tracking

Here the rmsprop optimizer was used instead of adam, with mse as the loss function. I trained the underlying neural network to learn the pattern of growth with respect to days. I will use the model to compute the expected plant height for a given day and compare it with my sensor readings; if the values differ greatly, the plant is not growing well in that season.

Since we have completed all our training steps we will head toward programming the device.

Getting our codes ready:

Here I will describe all the header files which are common to all our project implementations:

    #include "xyz_model_data.h"

The model we trained, converted, and transformed into C++ using xxd.

    #include "tensorflow/lite/experimental/micro/kernels/all_ops_resolver.h"

A class that allows the interpreter to load the operations used by our model.

    #include "tensorflow/lite/experimental/micro/micro_error_reporter.h"

A class that can log errors and output to help with debugging.

    #include "tensorflow/lite/experimental/micro/micro_interpreter.h"

The TensorFlow Lite for Microcontrollers interpreter, which will run our model.

    #include "tensorflow/lite/schema/schema_generated.h"

The schema that defines the structure of TensorFlow Lite FlatBuffer data, used to make sense of the model data in xyz_model_data.h.

    #include "tensorflow/lite/version.h"

The current version number of the schema, so we can check that the model was defined with a compatible version.

    // You need to install the Arduino_TensorFlowLite library before proceeding.
    #include <TensorFlowLite.h>

A namespace is defined before the void setup() function; a namespace is used to avoid name conflicts among different packages. You also need to allocate memory for tensors and other dependent operations.

    namespace {
    tflite::ErrorReporter* error_reporter = nullptr;
    const tflite::Model* model = nullptr;
    tflite::MicroInterpreter* interpreter = nullptr;
    TfLiteTensor* input = nullptr;
    TfLiteTensor* output = nullptr;

    // Create an area of memory to use for input, output, and intermediate arrays.
    // Finding the minimum value for your model may require some trial and error.
    constexpr int kTensorArenaSize = 6 * 1024;
    uint8_t tensor_arena[kTensorArenaSize];
    }  // namespace

You can just start with the Arduino TensorFlow Lite example in the Arduino IDE examples section, transfer your converted lite model data to the model_data.h file, and then take any action based on the output predicted by the model.
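If xxd is not available on your machine, the model bytes can be turned into a C array with a few lines of Python. This is only a sketch in the spirit of `xxd -i`: the helper name, the variable name `g_model_data` and the sample bytes are assumptions, and the array in your own project may need a different name or extra qualifiers.

```python
def to_c_array(data, var_name="g_model_data", per_line=12):
    """Render bytes as a C array definition plus a length constant."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join("0x%02x" % b for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        "const unsigned char %s[] = {\n%s\n};\n"
        "const int %s_len = %d;\n" % (var_name, body, var_name, len(data))
    )


# A few example bytes standing in for the contents of a .tflite file.
# In practice you would read them with open(path, "rb").read().
sample = bytes([0x1c, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33])
print(to_c_array(sample, per_line=4))
```

The printed text can be pasted into the model data source file that the sketch includes.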

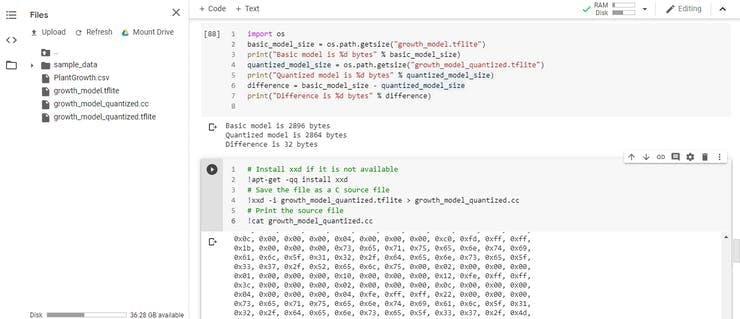

Programming, Model Testing and Connecting the Sparkfun Artemis device:

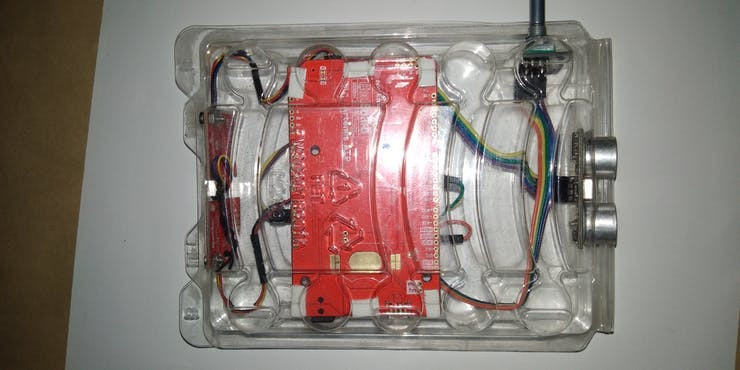

I used a hard drive packing case since I don't own a 3D printer; I have always been creative with household stuff. There is also an SHT10 soil moisture sensor which I planned to use but could not. I will make another version in which I will use its readings for smart water management on the farm.

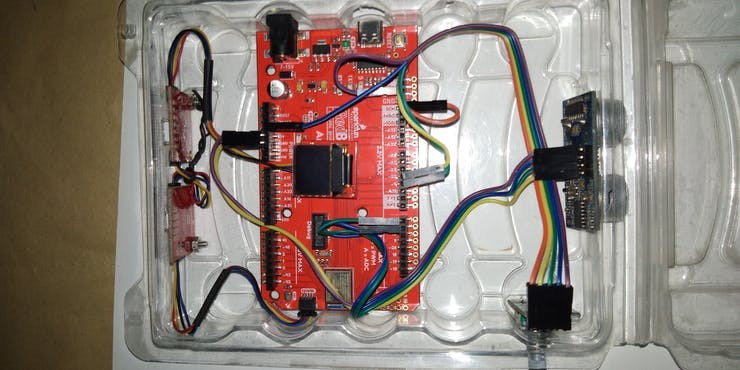

All Qwiic sensors are in place, along with an ultrasonic distance sensor. The SparkFun VCNL4040 is also a distance sensor, but I planned to use it as a light sensor, and its range is not as long as the ultrasonic sensor's. You can also see a rotary encoder, used to navigate through menus on screen.

Qwiic sensors in place, looking adorable with no clumsy wiring

On the back side I used double-sided tape to fix the RedBoard Artemis

Fixed at bottom to find plant height when installed in farm.

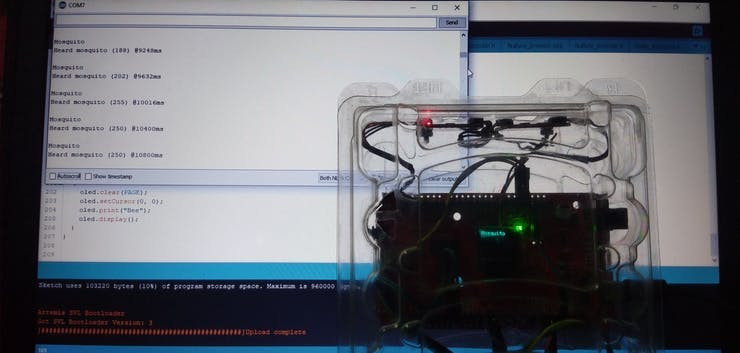

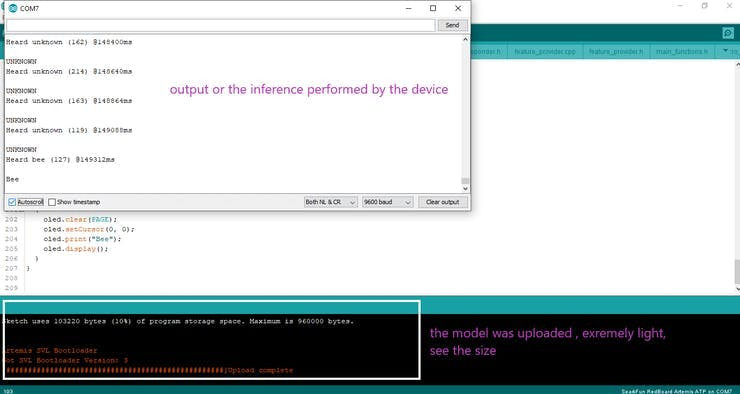

Test #1: Audio Analysis-

Checking our speech model and displaying the results on the OLED screen. If you are not confident about programming the whole project, you can pick an example project and modify it as a base; you will also learn to debug easily this way. The images don't show much detail, so I have uploaded the code, but you will still need to tweak it for your project. You can also easily modify it to send the results to a mobile phone or base station based on detected sounds, such as mosquitoes. That way one can see where such insects occur most in the locality and prevent their breeding, build an automated drone navigation system to spray specific insecticides and pesticides on farm areas, or inform agencies about illegal logging, with full precision control. Look below for our test result images.
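On the receiving side, finding where a given sound occurs most often can be as simple as counting detections per device. The sketch below assumes each device reports a (device id, label) pair; that message format and the device names are hypothetical and not part of the uploaded code.

```python
from collections import Counter


def hotspot_counts(detections, label):
    """Count how often `label` was reported by each device.

    `detections` is an iterable of (device_id, label) pairs.
    """
    return Counter(dev for dev, lab in detections if lab == label)


# Made-up detection log collected at a base station.
log = [
    ("node-1", "bee"),
    ("node-2", "mosquito"),
    ("node-2", "mosquito"),
    ("node-3", "chainsaw"),
    ("node-1", "mosquito"),
]

counts = hotspot_counts(log, "mosquito")
print(counts.most_common())
```

The device at the top of the list is the first candidate area for action against breeding.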

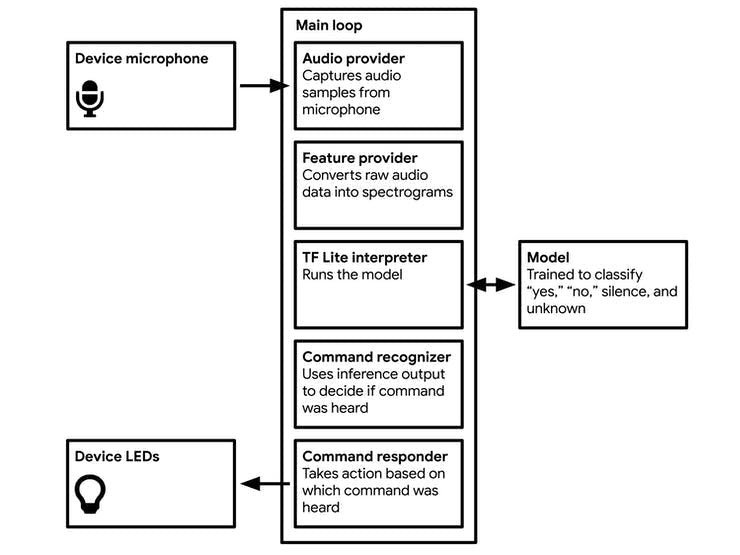

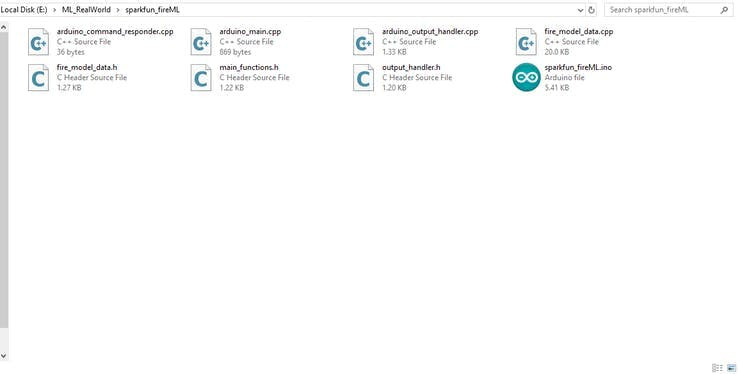

Here is the walkthrough of the default example provided by TensorFlow; it shows what we need to handle to get the output.

You need this many essential files for your project.

We have completed training and programming the audio analysis model for our project; now I will proceed with programming the other value-based output models.

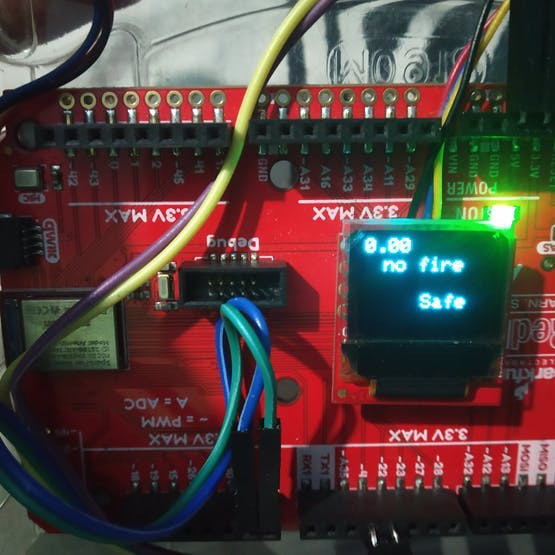

Test #2: Fire prediction-

We will load the model on the board and then perform real-time predictions on our sensor values. Based on the prediction we will display a notification on the OLED screen, reducing the chance of fire spreading widely across farms. This took most of my time, since my board gave me a compilation error every time, however hard I tried. But thanks to the Hackster community, who helped me upload the code successfully.
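A single noisy sensor reading should probably not trigger a fire notification on its own. One possible approach, sketched here in Python for clarity rather than taken from the device code, is to raise the alarm only after several consecutive positive predictions. The class name, threshold and streak length are assumptions.

```python
class FireAlarm:
    """Raise an alarm only after several consecutive positive predictions."""

    def __init__(self, threshold=0.5, required=3):
        self.threshold = threshold  # model output at or above this counts as fire
        self.required = required    # consecutive positives needed for an alarm
        self.streak = 0

    def update(self, probability):
        """Feed one model output (0..1); return True while the alarm is on."""
        if probability >= self.threshold:
            self.streak += 1
        else:
            self.streak = 0
        return self.streak >= self.required


alarm = FireAlarm()
outputs = [0.9, 0.8, 0.2, 0.7, 0.9, 0.95]   # made-up model outputs
print([alarm.update(p) for p in outputs])
```

The same logic translates directly to the Arduino loop with an integer counter.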

After compiling and uploading the fire prediction model.

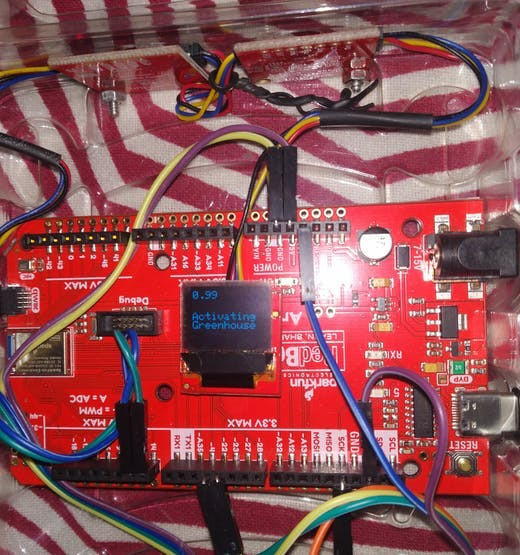

Test #3: Greenhouse prediction

Here we will use the ambient light, temperature and CO2 concentration sensors to predict whether there is sufficient light, warmth and CO2 available for plant growth. If the conditions are not normal, we will direct servo motors to activate and protect the plants by drawing a layer over the farm, like a greenhouse set-up.

The servo would get activated to open up greenhouse layer and protect the crops.

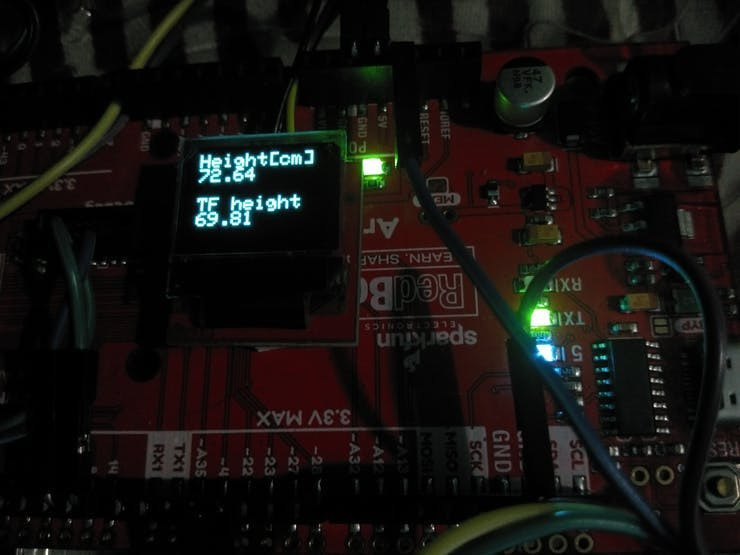

Test #4: Plant Height Determination

We have trained our model to determine plant height through regression, but regression has one drawback: it extrapolates in one linear direction only, so if left to run forever with no upper limit, it would some day return a plant height in kilometers. So we will measure the height of the plant on a given day and compare it with the model's prediction for the same day. If the two differ widely, it means there is some nutritional deficiency.
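The comparison described above can be sketched as a small function. The upper height limit, the 20% tolerance and the returned messages are placeholder assumptions; suitable values depend on the crop and on how noisy the distance sensor is.

```python
def growth_status(predicted_cm, measured_cm, max_height_cm=120.0, tolerance=0.2):
    """Compare the model's expected height with the measured height.

    The prediction is capped at max_height_cm because a plain regression
    keeps growing without limit. Returns "ok" or "check plant".
    """
    expected = min(predicted_cm, max_height_cm)
    if expected <= 0:
        return "ok"  # nothing meaningful to compare against yet
    deviation = abs(measured_cm - expected) / expected
    return "ok" if deviation <= tolerance else "check plant"


print(growth_status(50.0, 48.0))    # close to the prediction
print(growth_status(50.0, 30.0))    # far below the prediction
print(growth_status(500.0, 118.0))  # prediction capped at 120 cm
```

Capping the prediction first keeps a runaway regression output from flagging a healthy, fully grown plant.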

I have attached all the code, which is well commented and readable enough for you to implement your own algorithm. Don't waste your time thinking about code; invest your time in making your training dataset as good as possible.

I don't have a 3D printer, so I just took a plastic case to build my model. During idea submission we were also required to specify what our project would look like, so here is the installation (not in a garden, as I don't have one); I tried my level best to be as innovative and creative as possible at my end. Sorry guys, I couldn't find Fritzing parts for this hardware, but the wiring is fairly simple since I used very common sensors and the Qwiic sensors are plug and play. All the images are crisp enough to show the pins for my sensors.

You can set your device to whatever mode you want, wherever you want. I also wanted to implement moisture adaptation for my plants; I will finish that later.

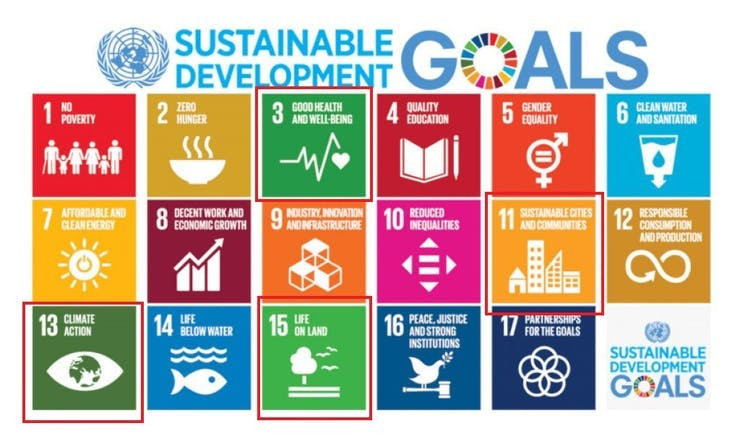

Sustainable Development Goals:

SDG 3: GOOD HEALTH AND WELL-BEING

Using our device, we have tried to make the locality aware of all the disease-carrying vectors present or breeding. We can also save the crop as soon as we hear the sound made by any pest, which saves farmers a lot of effort and lets them spray chemicals only when required, so people eat healthier.

SDG 13 and SDG 15: CLIMATE ACTION AND LIFE ON LAND

UN Aim: By 2020, promote the implementation of sustainable management of all types of forests, halt deforestation, restore degraded forests and substantially increase afforestation and reforestation globally. Mobilise significant resources from all sources and at all levels to finance sustainable forest management and provide adequate incentives to developing countries to advance such management, including for conservation and reforestation.

Through our project we are able to recognize illegal logging of trees and carry the message to the Forest Department so it can take action. Thus we are ready to halt deforestation in no time. I have also thought of another addition: detecting gunfire and keeping the government informed about poachers in a particular area, so that stern action can be taken against them.

Anyone is free to recommend any new feature which can be easily implemented and would help the community. It was not very comfortable to add lots of code files directly in the code section, so I have added a GitHub link. Thank you for reading my ideas and implementations carefully.
