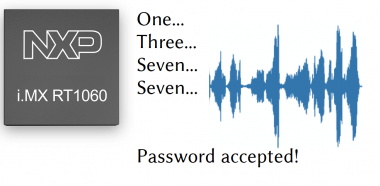

Voice-controlled Lock

About the project

i.MX RT 1060 detecting numbers from speech

Project info

Difficulty: Moderate

Platforms: NXP

Estimated time: 4 hours

License: GNU General Public License, version 3 or later (GPL3+)

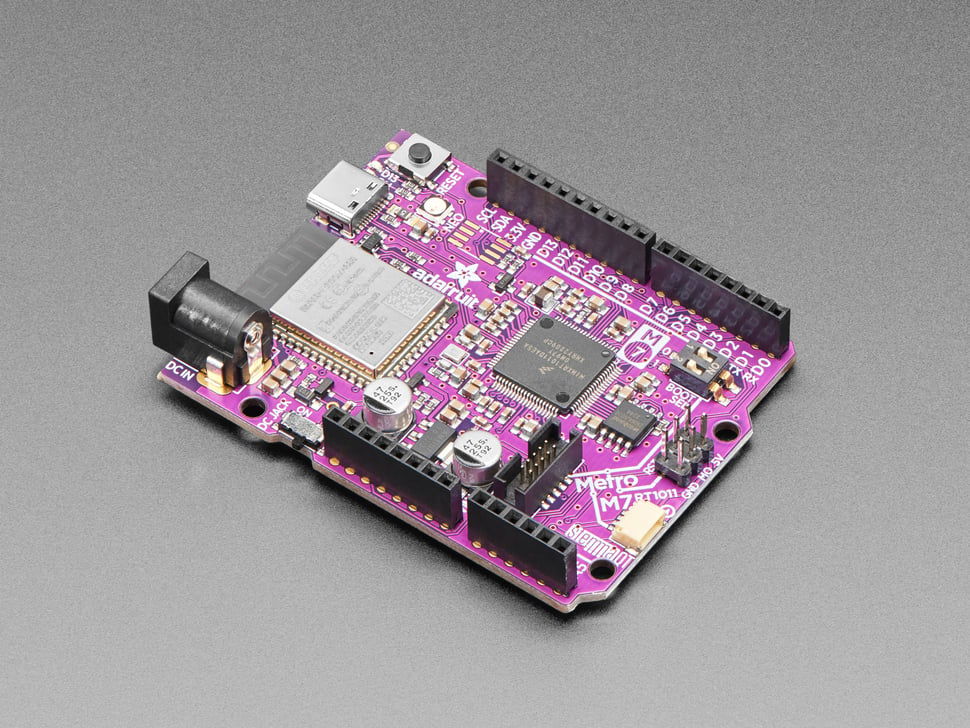

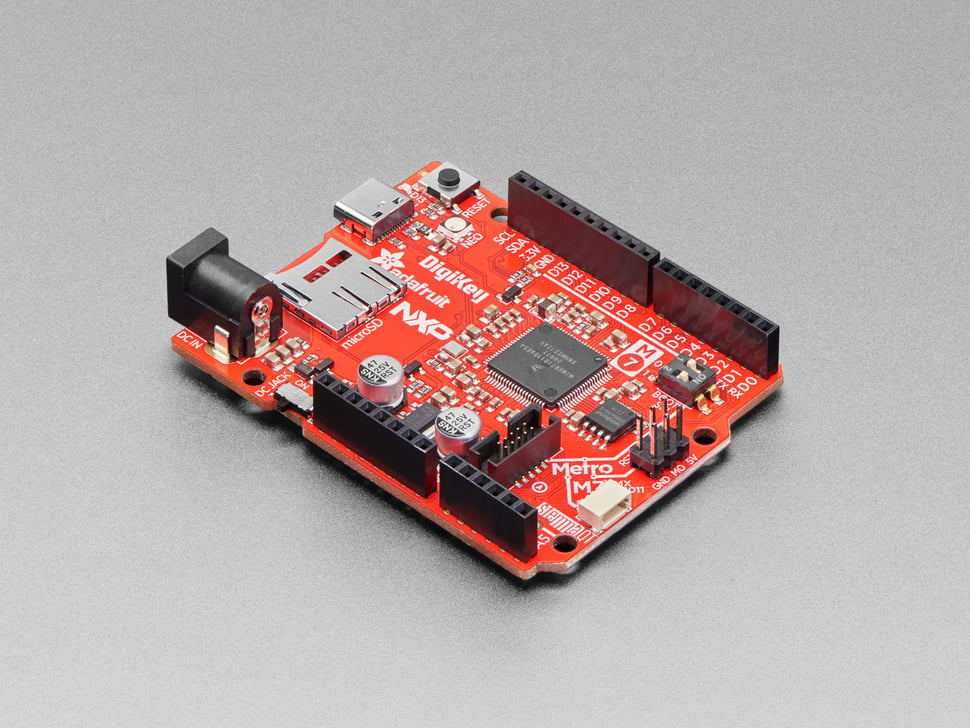

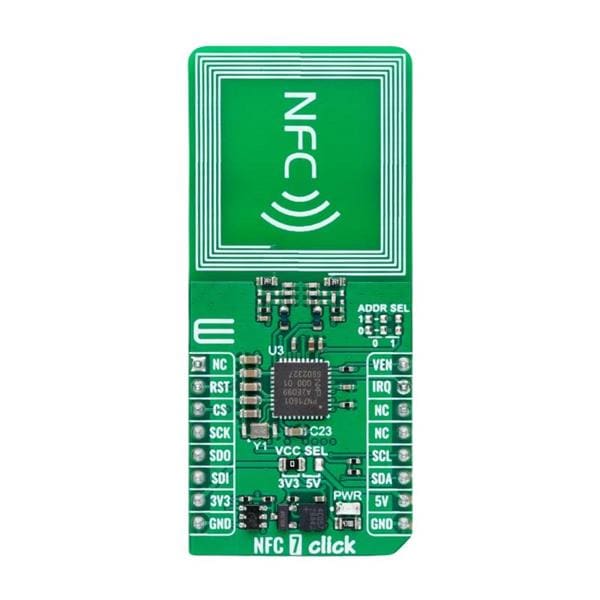

Items used in this project

Story

The reason

I haven't done so far any Machine Learning projects on the MCU target so when I saw the available platforms for that competition, namely the combination of powerful Cortex M7 processor and onboard codec/microphone, I knew a voice recognition project using NN would be an interesting choice to try out these boards.

The Goal

The project was supposed to offer a possibility to unlock a lock when the pass code consisting of several digits was said near the device. Unlocking could mean anything, from driving a servo or motor to unlock a physical lock, through driving an LED, to even displaying some secret on a display. Passphrase should be configurable, ideally through Ethernet with a http server or mqtt client. Additionally board would be able to play a sound notification stating if the passphrase was correct or wrong.

The Path

I started with the model as I expected it to be the most difficult part of the project. I've wanted to reuse project sound-mnist, but apparently because of some changes in used libraries, currently I wasn't able to reach accuracy claimed by the author (nor people on reddit). I've spent a lot of the time adjusting the model training code, finally achieving validation accuracy of ~96%. Code is available on Github, link below.

After finally having a working model it was time to start developing on embedded platform. To speed up the process I based my code on SAI NXP examples, however I changed them to c++ and moved to FreeRTOS based system. I wanted first to port the preprocessing part needed for NN input. During porting I learned that speech feature libraries (python: python_speech_features, librosa, etc.) don't necessary produce the same output, because of the small implementation differences. Thsis in effect forces building a network which uses as the input values computed by the library which has a C/C++ (preferable embedded port) as I was expecting poor performance of the model on target if the real data was processed differently from the training one. Finally python_speech_features and its C implementation were chosen as a way-to-go. Unfortunately the project deadline got me while porting the C implementation for efficient work on Cortex M7.

The Future

There's a lot to be done in that project to meet the entry requirements and I'll definitely work on it in incoming weeks.Idea of joining ML and efficient programming on embedded platform was fun for these couple of weeks.

Code

Credits

jjakubowski

Embedded Systems Engineer. Interested in everything that makes noise or captures it

Leave your feedback...