ChatGPT for Robotics: Design Principles and Model Abilities

About the project

We extended the capabilities of ChatGPT to robotics and controlled multiple platforms, such as robot arms and drones, with language.

Project info

Difficulty: Moderate

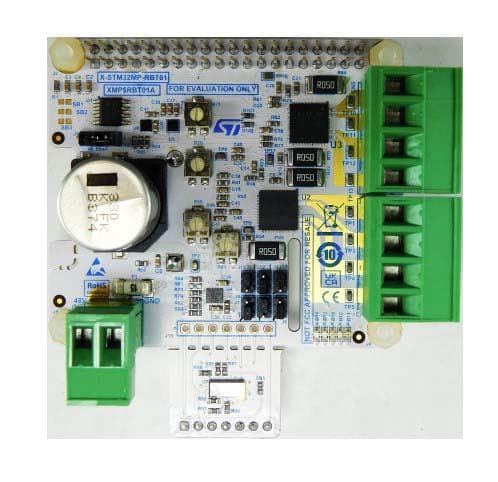

Platforms: Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)

Story

This article is reproduced from Microsoft's release on extending ChatGPT to robotics. The excerpt below covers the part in which ChatGPT controls a robotic arm to build the Microsoft logo.

We extended the capabilities of ChatGPT to robotics, and controlled multiple platforms such as robot arms, drones, and home assistant robots intuitively with language.

Have you ever wanted to tell a robot what to do using your own words, like you would to a human? Wouldn’t it be amazing to just tell your home assistant robot: “Please warm up my lunch”, and have it find the microwave by itself? Even though language is the most intuitive way for us to express our intentions, we still rely heavily on hand-written code to control robots. Our team has been exploring how we can change this reality and make natural human-robot interactions possible using OpenAI’s new AI language model, ChatGPT.

ChatGPT is a language model trained on a massive corpus of text and human interactions, allowing it to generate coherent and grammatically correct responses to a wide range of prompts and questions. Our goal with this research is to see if ChatGPT can think beyond text, and reason about the physical world to help with robotics tasks. We want to help people interact with robots more easily, without needing to learn complex programming languages or details about robotic systems. The key challenge here is teaching ChatGPT how to solve problems considering the laws of physics, the context of the operating environment, and how the robot’s physical actions can change the state of the world.

It turns out that ChatGPT can do a lot by itself, but it still needs some help. Our technical paper describes a series of design principles that can be used to guide language models towards solving robotics tasks. These include, but are not limited to, special prompting structures, high-level APIs, and human feedback via text. We believe that our work is just the start of a shift in how we develop robotics systems, and we hope to inspire other researchers to jump into this exciting field. Continue reading for more technical details on our methods and ideas.

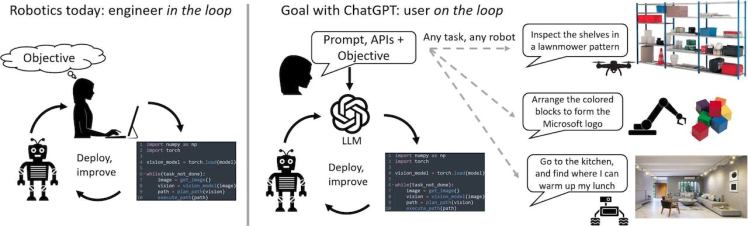

Challenges in robotics today, and how ChatGPT can help

Current robotics pipelines begin with an engineer or technical user who needs to translate the task’s requirements into code for the system. The engineer sits in the loop, meaning that they need to write new code and specifications to correct the robot’s behavior. Overall, this process is slow (the user needs to write low-level code), expensive (it requires highly skilled users with deep knowledge of robotics), and inefficient (it requires multiple interactions to get things working properly).

ChatGPT unlocks a new robotics paradigm, and allows a (potentially non-technical) user to sit on the loop, providing high-level feedback to the large language model (LLM) while monitoring the robot’s performance. By following our set of design principles, ChatGPT can generate code for robotics scenarios. Without any fine-tuning, we leverage the LLM’s knowledge to control different robot form factors for a variety of tasks. In our work we show multiple examples of ChatGPT solving robotics puzzles, along with complex robot deployments in the manipulation, aerial, and navigation domains.

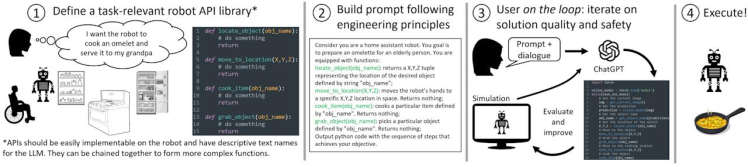

Robotics with ChatGPT: design principles

Prompting LLMs is a highly empirical science. Through trial and error, we built a methodology and a set of design principles for writing prompts for robotics tasks:

1. First, we define a set of high-level robot APIs or a function library. This library can be specific to a particular robot, and should map to existing low-level implementations from the robot’s control stack or a perception library. It’s very important to use descriptive names for the high-level APIs so ChatGPT can reason about their behaviors;

2. Next, we write a text prompt for ChatGPT which describes the task goal while also explicitly stating which functions from the high-level library are available. The prompt can also contain information about task constraints, or how ChatGPT should form its answers (specific coding language, using auxiliary parsing elements);

3. The user stays on the loop to evaluate ChatGPT’s code output, either through direct inspection or using a simulator. If needed, the user uses natural language to provide feedback to ChatGPT on the answer’s quality and safety.

4. When the user is happy with the solution, the final code can be deployed onto the robot.
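The first two steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `build_prompt` and `ARM_API` are hypothetical names that do not appear in the original work, and the function descriptions are borrowed from the robot arm prompt shown later in this article.

```python
from typing import Dict, List


def build_prompt(api_docs: Dict[str, str], task: str, constraints: List[str]) -> str:
    """Assemble a text prompt: available functions first, then constraints, then the task."""
    lines = ["At any point, you have access to the following functions:"]
    for signature, description in api_docs.items():
        lines.append(f"- {signature}: {description}")
    lines.append("You are not allowed to use any other hypothetical functions.")
    lines.extend(constraints)
    lines.append(task)
    return "\n".join(lines)


# Hypothetical function library; descriptive names help the model infer behavior.
ARM_API = {
    "grab()": "Turn on the suction pump to grab an object",
    "release()": "Turns off the suction pump to release an object",
}

prompt = build_prompt(
    ARM_API,
    task="Pick up the red block.",
    constraints=["Use Python code to express your solution."],
)
print(prompt)
```

Keeping the function library as data makes it easy to swap in a different robot's API without rewriting the rest of the prompt.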

Enough theory… What exactly can ChatGPT do?

Let’s take a look at a few examples… You can find even more case studies in our code repository.

(Only one of the more interesting examples is reproduced here; the original text contains examples with other robots.)

User on the loop: when a conversation is needed for a complex task

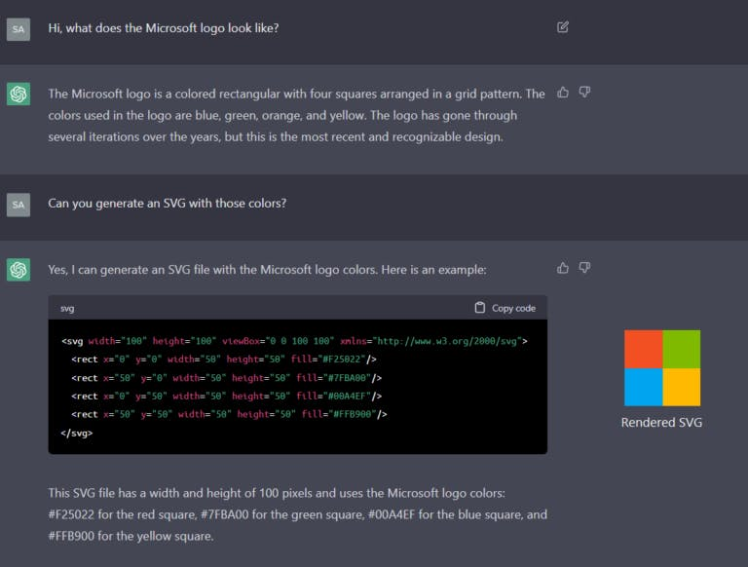

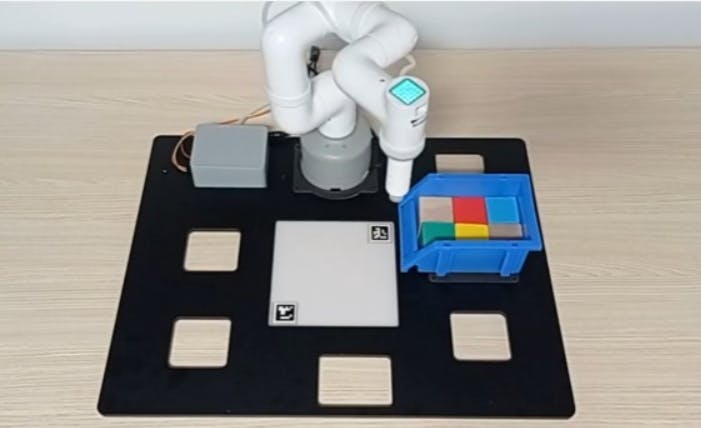

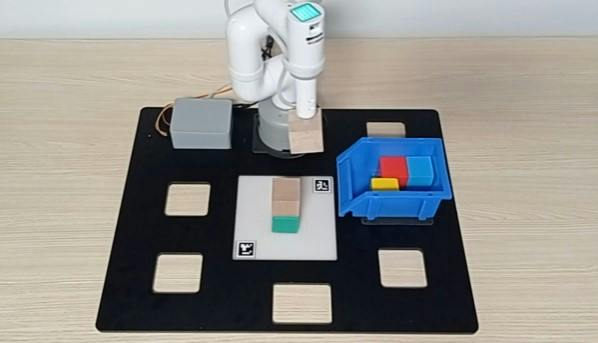

Next, we used ChatGPT in a manipulation scenario with a robot arm. We used conversational feedback to teach the model how to compose the originally provided APIs into more complex high-level functions that ChatGPT coded by itself. Using a curriculum-based strategy, the model was able to chain these learned skills together logically to perform operations such as stacking blocks.

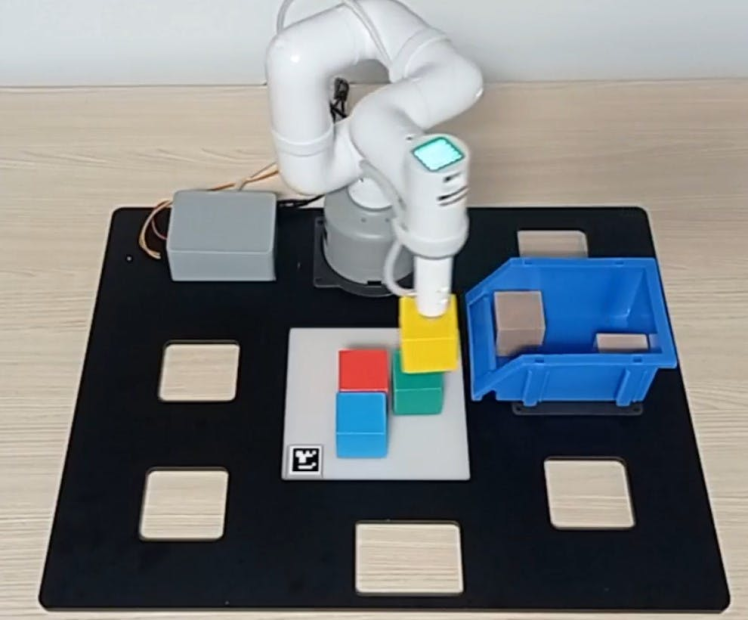

In addition, the model displayed a fascinating example of bridging the textual and physical domains when tasked with building the Microsoft logo out of wooden blocks. Not only was it able to recall the logo from its internal knowledge base, it was able to ‘draw’ the logo (as SVG code), and then use the skills learned above to figure out which existing robot actions can compose its physical form.

The following is a conversation with ChatGPT about how to control the robot arm to make an SVG file with the Microsoft logo colors.

User:

Imagine we are working with a manipulator robot. This is a robotic arm with 6 degrees of freedom that has a suction pump attached to its end effector. I would like you to assist me in sending commands to this robot given a scene and a task.

At any point, you have access to the following functions:

- grab(): Turn on the suction pump to grab an object

- release(): Turns off the suction pump to release an object

- get_position(object): Given a string of an object name, returns the coordinates and orientation of the vacuum pump to touch the top of the object [X, Y, Z, Yaw, Pitch, Roll]

- move_to(position): It moves the suction pump to a given position [X, Y, Z, Yaw, Pitch, Roll].

You are allowed to create new functions using these, but you are not allowed to use any other hypothetical functions.

Keep the solutions simple and clear. The positions are given in mm and the angles in degrees. You can also ask clarification questions using the tag "Question - ". Here is an example scenario that illustrates how you can ask clarification questions.

Let's assume a scene contains two spheres. Pick up the sphere.

ChatGPT:

Question - there are two spheres. Which one do you want me to pick up?

User:

Sphere 1, please. Use Python code to express your solution. Are you ready?

ChatGPT:

Yes, I'm ready. Please describe the scene and the task.
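To try the code from the rest of this conversation without a physical arm, the four functions named in the prompt can be replaced with stand-ins. The sketch below is one possible set under stated assumptions: the `SCENE` poses and the `command_log` list are hypothetical and only mirror the shape of the interface, not the implementation used in the original work.

```python
from typing import Dict, List

Pose = List[float]  # [X, Y, Z, Yaw, Pitch, Roll], in mm and degrees

# Hypothetical scene: object name -> pose at which the pump touches the object's top.
SCENE: Dict[str, Pose] = {
    "red block": [150.0, -50.0, 40.0, 0.0, 0.0, 0.0],
    "white pad": [200.0, 100.0, 0.0, 0.0, 0.0, 0.0],
}

command_log: List[str] = []  # calls are recorded here instead of driving hardware


def grab() -> None:
    command_log.append("grab")


def release() -> None:
    command_log.append("release")


def get_position(object_name: str) -> Pose:
    return list(SCENE[object_name])  # return a copy so callers cannot alter the scene


def move_to(position: Pose) -> None:
    command_log.append(f"move_to {position}")


move_to(get_position("red block"))
grab()
print(command_log)
```

Because every call only appends to a log, the generated code can be inspected step by step before anything is sent to a robot.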

User:

In the scene there are the following objects: white pad, box, blue block, yellow block, green block, red block, brown block 1, brown block 2. The blocks are cubes with height of 40 mm and are located inside the box that is 80 mm deep. The blocks can only be reached from the top of the box. I want you to learn the skill of picking up a single object and holding it. For that you need to move a safe distance above the object (100 mm), reach the object, grab it and bring it up.

ChatGPT:

Understood. Here's a Python function that takes the name of an object, moves the robot arm above the object, grabs it, and moves the object up to a safe distance of 100 mm above the object. Note that the function assumes that the robot arm is initially at a safe starting position.

    import time

    def pick_up_object(object_name):
        # get the position of the object
        object_pos = get_position(object_name)

        # move the robot arm to a safe position above the object
        safe_pos = [object_pos[0], object_pos[1], object_pos[2]+100, object_pos[3], object_pos[4], object_pos[5]]
        move_to(safe_pos)

        # move the robot arm to the object's position
        move_to(object_pos)

        # grab the object
        grab()
        time.sleep(1) # wait for the object to be firmly attached

        # move the robot arm back to the safe position above the object
        move_to(safe_pos)

To use this function, simply call pick_up_object and provide the name of the object you want to pick up as a string, e.g. pick_up_object("red block").

User:

Great! Now I want you to learn how to place an object in a position. Considering you are holding a generic object, you first need to move to a safe position above the target location, bring the object down and then release it.

ChatGPT:

Understood. Here's a Python function that takes the position where the object should be placed, moves the robot arm above the position, brings the object down, and releases it. Note that the function assumes that the robot arm is holding an object.

    def place_object(position):
        # move the robot arm to a safe position above the target location
        safe_pos = [position[0], position[1], position[2]+100, position[3], position[4], position[5]]
        move_to(safe_pos)

        # move the robot arm to the target location
        move_to(position)

        # release the object
        release()

        # move the robot arm back to the safe position above the target location
        move_to(safe_pos)

To use this function, simply call place_object and provide the target position as a list of 6 numbers, e.g. place_object([100, 200, 50, 0, 0, 0]). Note that the units of the position are in mm and the orientation angles are in degrees.
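Both skills compute the safe waypoint the same way: copy the pose and add 100 mm to Z. A small helper makes that arithmetic explicit. This is a sketch only; the name `raised` is hypothetical and was not part of the conversation.

```python
from typing import List


def raised(pose: List[float], dz: float = 100.0) -> List[float]:
    """Return a copy of pose lifted by dz millimetres along Z; orientation is unchanged."""
    x, y, z, yaw, pitch, roll = pose
    return [x, y, z + dz, yaw, pitch, roll]


target = [100, 200, 50, 0, 0, 0]  # the example pose from the text above
print(raised(target))             # [100, 200, 150.0, 0, 0, 0]
```

Returning a new list rather than editing the pose in place keeps the original target available for the descent step.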

User:

Great! Now I want you to use these skills to pick up the green block and place it on the white pad. All blocks have a height of 40 mm.

ChatGPT:

Sure! Here's the Python code to pick up the green block and place it on the white pad:

    # get the positions of the green block and white pad
    green_pos = get_position("green block")
    white_pos = get_position("white pad")

    # pick up the green block
    pick_up_object("green block")

    # calculate the position to place the block on the white pad
    place_pos = [white_pos[0], white_pos[1], white_pos[2]+40, 0, 0, 0]

    # place the block on the white pad
    place_object(place_pos)

This code gets the positions of the green block and white pad using the get_position function, picks up the green block using the pick_up_object function we defined earlier, calculates the position to place the block on the white pad, and places the block on the white pad using the place_object function we defined earlier. Note that we add the height of the block (40 mm) to the height of the white pad to ensure that the block is placed on top of the pad.

User:

Great! Now I want you to stack both brown blocks on top of the green block.

ChatGPT:

Sure! Here's the Python code to stack the two brown blocks on top of the green block:

    # get the positions of the green block and both brown blocks
    green_pos = get_position("green block")
    brown1_pos = get_position("brown block 1")
    brown2_pos = get_position("brown block 2")

    # pick up the first brown block
    pick_up_object("brown block 1")

    # calculate the position to place the block on top of the green block
    place_pos = [green_pos[0], green_pos[1], green_pos[2]+40, 0, 0, 0]

    # place the first brown block on top of the green block
    place_object(place_pos)

    # pick up the second brown block
    pick_up_object("brown block 2")

    # calculate the position to place the block on top of the first brown block
    place_pos = [green_pos[0], green_pos[1], green_pos[2]+80, 0, 0, 0]

    # place the second brown block on top of the first brown block
    place_object(place_pos)

This code gets the positions of the green block and both brown blocks using the get_position function, picks up the first brown block using the pick_up_object function we defined earlier, calculates the position to place the first brown block on top of the green block, and places the first brown block on top of the green block using the place_object function we defined earlier. Then, it picks up the second brown block, calculates the position to place the second brown block on top of the first brown block, and places the second brown block on top of the first brown block using the place_object function we defined earlier. Note that we add the height of the green block (40 mm) to the height of the previous block to ensure that the block is placed on top of the previous block.
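The +40 and +80 offsets follow one rule: the k-th stacked block ends up k block heights above the top of the base block. A minimal sketch of that rule is shown below; `stack_poses` and the example `green_top` pose are hypothetical and were not produced in the conversation.

```python
from typing import List

BLOCK_HEIGHT_MM = 40  # cube height stated in the scene description


def stack_poses(base_top: List[float], count: int) -> List[List[float]]:
    """Placement poses for `count` blocks stacked on a base whose top pose is base_top."""
    x, y, z = base_top[:3]
    # The k-th block's top sits k block heights above the base's top.
    return [[x, y, z + BLOCK_HEIGHT_MM * k, 0, 0, 0] for k in range(1, count + 1)]


green_top = [200, 100, 40, 0, 0, 0]  # hypothetical pose of the green block's top
for pose in stack_poses(green_top, 2):
    print(pose)
```

With this form, stacking a third block only requires changing `count`, not adding another hand-computed offset.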

User:

Awesome! Now I want to use the blocks to build the logo from Microsoft on top of the white pad. It consists of four colors forming a square: blue on the bottom left, yellow on the bottom right, red on the top left and green on the top right.

ChatGPT:

Sure! Here's the Python code to build the Microsoft logo on top of the white pad using the blocks:

    # get the positions of the blocks and the white pad
    white_pad_pos = get_position("white pad")
    blue_pos = get_position("blue block")
    yellow_pos = get_position("yellow block")
    red_pos = get_position("red block")
    green_pos = get_position("green block")

    # pick up the blue block
    pick_up_object("blue block")

    # calculate the position to place the blue block
    place_pos = [white_pad_pos[0]-20, white_pad_pos[1]-20, white_pad_pos[2]+40, 0, 0, 0]

    # place the blue block on the white pad
    place_object(place_pos)

    # pick up the yellow block
    pick_up_object("yellow block")

    # calculate the position to place the yellow block
    place_pos = [white_pad_pos[0]+20, white_pad_pos[1]-20, white_pad_pos[2]+40, 0, 0, 0]

    # place the yellow block on the white pad
    place_object(place_pos)

    # pick up the red block
    pick_up_object("red block")

    # calculate the position to place the red block
    place_pos = [white_pad_pos[0]-20, white_pad_pos[1]+20, white_pad_pos[2]+40, 0, 0, 0]

    # place the red block on the white pad
    place_object(place_pos)

    # pick up the green block
    pick_up_object("green block")

    # calculate the position to place the green block
    place_pos = [white_pad_pos[0]+20, white_pad_pos[1]+20, white_pad_pos[2]+40, 0, 0, 0]

    # place the green block on the white pad
    place_object(place_pos)

This code gets the positions of the white pad and all four blocks using the get_position function, picks up each block in turn using the pick_up_object function we defined earlier, calculates the position to place the block on the white pad, and places the block on the white pad using the place_object function we defined earlier. We use the block colors to ensure that the logo is built correctly. We place the blue block in the bottom left position, the yellow block in the bottom right position, the red block in the top left position, and the green block in the top right position, as specified in the prompt.
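The ±20 mm offsets are half of the 40 mm cube edge, so neighboring block centers end up 40 mm apart and the faces just touch. The same layout can be written as a small table, as in the sketch below. It assumes the pad pose marks the pad's center; `LAYOUT`, `logo_targets`, and the example pad pose are hypothetical names and values, not part of the conversation.

```python
from typing import Dict, List, Tuple

BLOCK_MM = 40         # cube edge length from the scene description
HALF = BLOCK_MM // 2  # block centers sit half an edge from the pad center

# (x sign, y sign) per block, matching the offsets in the code above.
LAYOUT: Dict[str, Tuple[int, int]] = {
    "blue block": (-1, -1),    # bottom left
    "yellow block": (+1, -1),  # bottom right
    "red block": (-1, +1),     # top left
    "green block": (+1, +1),   # top right
}


def logo_targets(pad: List[float]) -> Dict[str, List[float]]:
    """Placement pose for each block, relative to the pad pose."""
    return {
        name: [pad[0] + sx * HALF, pad[1] + sy * HALF, pad[2] + BLOCK_MM, 0, 0, 0]
        for name, (sx, sy) in LAYOUT.items()
    }


targets = logo_targets([200, 100, 0, 0, 0, 0])  # hypothetical pad pose
for name, pose in targets.items():
    print(name, pose)
```

Each entry could then be passed to the pick-and-place skills in a single loop instead of four copied blocks of code.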

We are excited to release these technologies with the aim of bringing robotics within reach of a wider audience. We believe that language-based robotics control will be fundamental to bringing robotics out of science labs, and into the hands of everyday users.

That said, we do emphasize that the outputs from ChatGPT are not meant to be deployed directly on robots without careful analysis. We encourage users to harness the power of simulations in order to evaluate these algorithms before potential real-life deployments, and to always take the necessary safety precautions. Our work represents only a small fraction of what is possible at the intersection of large language models and robotics, and we hope to inspire much of the work to come.
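One lightweight form of such a check is a dry run that records every waypoint and rejects any below a height limit before hardware is involved. The sketch below is an assumption-laden illustration, not a substitute for a simulator: `dry_run`, `generated_program`, and the `min_z` limit are hypothetical and do not come from the original work.

```python
from typing import Callable, List

Pose = List[float]  # [X, Y, Z, Yaw, Pitch, Roll]


def dry_run(program: Callable[[Callable[[Pose], None]], None], min_z: float) -> List[Pose]:
    """Run `program` with a recording move_to; raise if a waypoint is below min_z."""
    waypoints: List[Pose] = []

    def recording_move_to(position: Pose) -> None:
        if position[2] < min_z:
            raise ValueError(f"waypoint {position} is below the Z limit {min_z}")
        waypoints.append(list(position))

    program(recording_move_to)
    return waypoints


def generated_program(move_to: Callable[[Pose], None]) -> None:
    # Stand-in for model-generated code: approach from above, then descend.
    move_to([200, 100, 140, 0, 0, 0])
    move_to([200, 100, 40, 0, 0, 0])


print(dry_run(generated_program, min_z=0))
```

Passing `move_to` in as a parameter lets the same generated code run against the recorder first and the real controller later.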

This work is being undertaken by members of the Microsoft Autonomous Systems and Robotics Research Group. The researchers included in this project are: Sai Vemprala, Rogerio Bonatti, Arthur Bucker, and Ashish Kapoor.

Author: Sai Vemprala

Title: ChatGPT for Robotics: Design Principles and Model Abilities

Source: Microsoft Autonomous Systems and Robotics Research Group

URL: https://www.microsoft.com/en-us/research/group/autonomous-systems-group-robotics/

Reposted From: Microsoft

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, and the home.
