Assistant Robots Based on Jetson Nano (the Jetson Sisters)

About the project

This is a prototype robot assistant based on a Jetson Nano. It incorporates deep learning tools.

Project info

Difficulty: Expert

Platforms: Amazon Alexa, NXP, NVIDIA

Estimated time: 2 weeks

License: MIT license (MIT)

Story

The Jetson Sisters

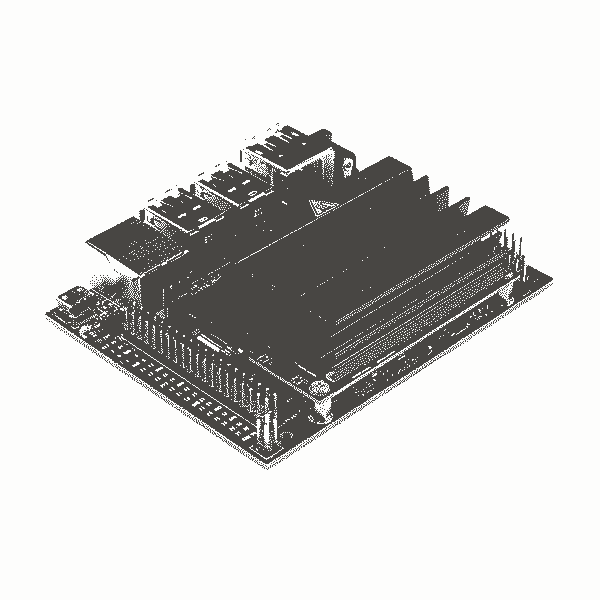

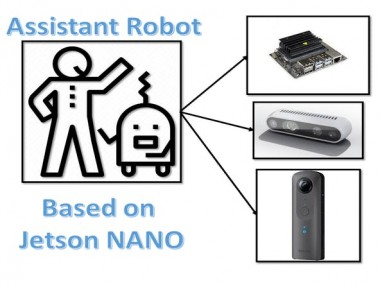

The Jetson Sisters are two assistant robots based on the Jetson Nano development system (Figure 1). They were designed as assistant robots for older people or people who live alone. Each robot has characteristics that differentiate it from the other, so each can offer skills that allow better interaction with users. The big sister is designed to work outdoors. It is more robust and can carry more weight, so users could take it along as a tool for shopping at the supermarket or simply as a companion (this robot has differential kinematics).

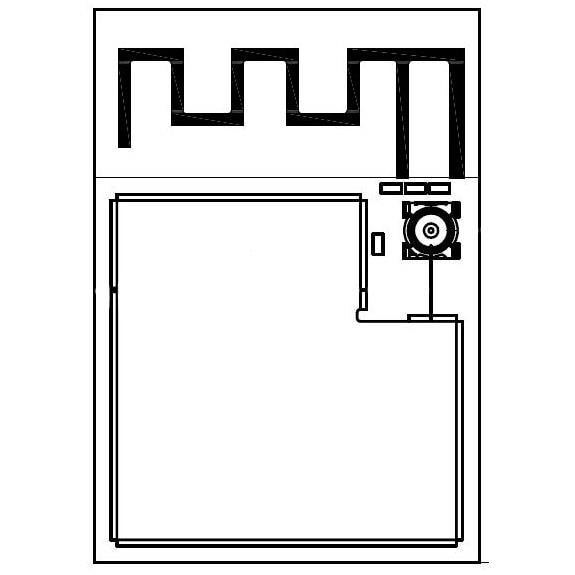

Figure 1, Jetson Nano

The smaller sister, on the other hand, is a smaller and less robust robot designed for interiors such as a small apartment (this robot has omnidirectional kinematics). To make these robots useful, each incorporates artificial intelligence (AI) algorithms that allow it to recognize people and emotions and to identify different places within the home. Since the main idea of the Jetson Sisters is interaction with people, we added a connection to the Alexa voice assistant. In this way, users can control the sisters through natural language (voice), giving orders such as "You can go to the kitchen".

Figure 2, Jetson Sisters

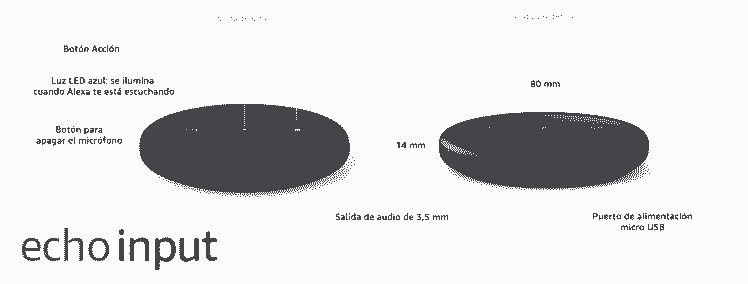

The older sister has an embedded Echo Input (Figure 3).

Figure 3, Echo Input

The younger sister can be controlled through any other device in the Alexa ecosystem (Figure 4).

Figure 4, Alexa ecosystem

However, having built a robot with such a powerful development tool and the ability to connect to Alexa by voice, I wondered: why not have the big sister communicate over Bluetooth Low Energy (BLE), connect to wearable devices and show their information on an LCD screen? As a result, the big sister incorporates a BLE communication system that lets you ask Alexa for your heart rate in beats per minute, your number of steps, and so on. All this information is obtained from a TTGO T-Watch (Figure 5). This project does not cover using deep learning to analyze those signals; I am working on that in parallel, but due to time constraints the results are not shown here.

Figure 5, TTGO T-Watch


Alexa Skill

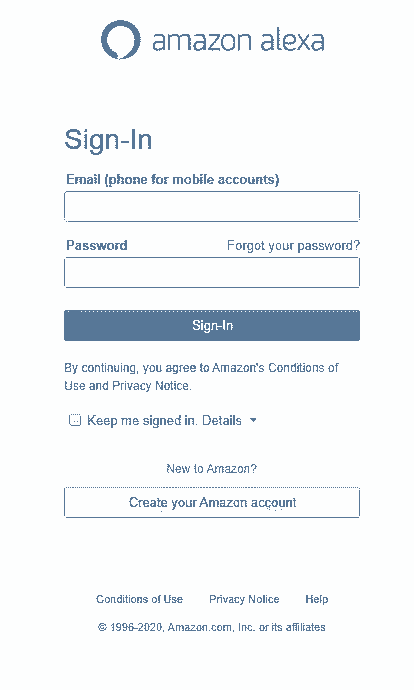

To create a skill for Alexa, we registered an account in the Amazon Alexa console (Figure 6).

Figure 6, Alexa Console

Following this step, we created our skill by clicking on the "Create Skill" button:

Figure 7, Creating Alexa Skill

After the skill is created, the user is given the invocation name used to start interacting with it. For example, if the name of the skill is "robot one", the user needs to say “Alexa start the robot one”. Since this project is not about how to make an Alexa skill, but about implementing deep learning, robots and the Jetson Nano, you can check this tutorial.

The following is a description of each part of the Jetson Sisters. The two systems could have the same vision hardware, since the classification algorithms are independent of the robots. For budget reasons I had to split the hardware between them, so one sister has a 360º camera and the other a depth camera :'(.

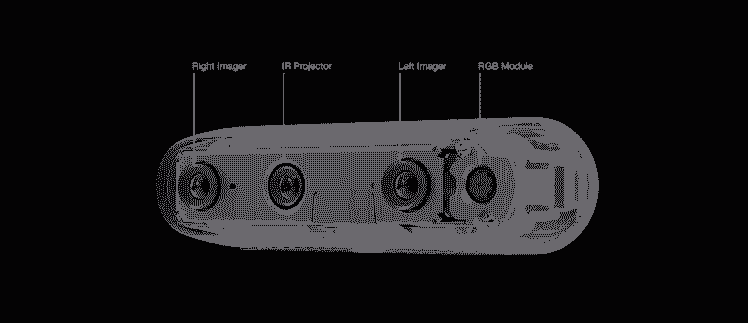

Real Sense Camera

The Intel® RealSense™ depth camera D435 is a stereo tracking solution, offering quality depth for a variety of applications. Its wide field of view is perfect for applications such as robotics or augmented and virtual reality, where seeing as much of the scene as possible is critical. This camera has a range of up to 10m and its small size allows it to be integrated into any hardware with ease. It comes complete with the Intel RealSense SDK 2.0 and multi-platform support.


Figure 8, RealSense™ D435

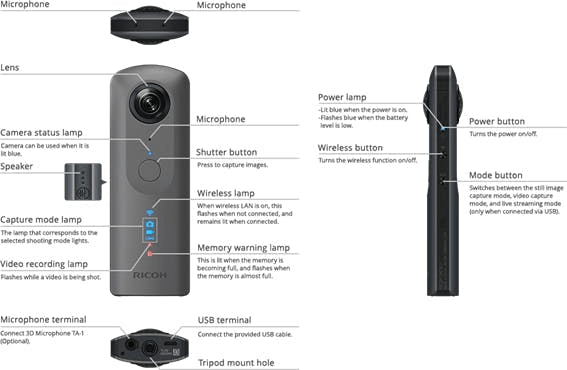

RICOH THETA V

This robot has a single wide-angle lens camera, awarded to me by Ricoh in the RICOH THETA Dream and Build Contest, which is located on the front of the robot. However, information from the sides and back is lost, hence the idea of using a 360º camera.

After researching the camera thoroughly, I discovered that it is not only a camera for capturing 360º photos or video, but also a very complete and interesting image capture system. The technical characteristics of the camera can be seen in the following link.

Figure 9, Ricoh Theta V

You can capture your entire surroundings with the simple press of the shutter button. Enjoy a new world of images you have never experienced before.

Figure 10, Ricoh Camera 360

One of the features that most caught my attention was its processor, a Snapdragon 625 (Figure 11).

Figure 11, Snapdragon 625

So I wondered what I could do with this camera. Using it only to take photos, send them to a web service, analyse them and return the analysed information… mmmmm, to me that is a waste of power. That is why I decided to divide my idea into two parts. In the first part (something simple), my robot uses it as just one more camera, so that the robot can have a global view of its environment.

Hardware Description

In this section I describe the different parts that make up the two Jetson Sisters.

Eldest Sister

At this point we already have the first part of our system. The next step is the construction of the Eldest Sister, for which I used an aluminum structure (Figure 12):

Figure 12, SWMAKER All Black/Silver Hypercube 3DPrinte


Figure 13 shows a 3D design made with SolidWorks. This is only one of the many shapes the robot could have, because a modular system lets the robot take any shape.

Figure 13, 3D Robot Model

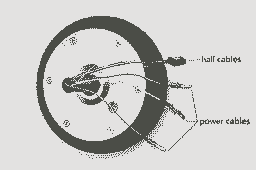

Figure 14 [A to D] below shows the components used to control the wheels of the robots.

Figure 14 A

Figure 14 B

Figure 14 C

Figure 14 D

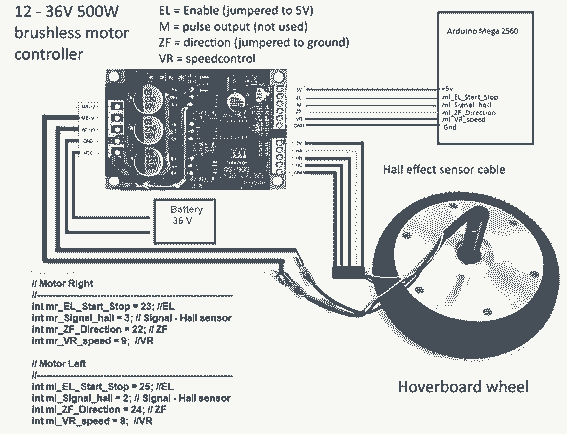

The control of the Eldest Sister is divided into two sections: the first is handled by an Arduino Mega 2560 and the second by a Jetson Nano (Figure 10). The Arduino is in charge of everything related to the low level of the robot, i.e. it controls the motors and some Sharp GP2Y0A2YK0F distance sensors. These sensors give the robot reactive control when it detects objects within a certain distance. To drive the motors, the Jetson Nano sends a JSON command. The message is serialized on the Jetson Nano and deserialized by the Arduino, which extracts the individual fields. The message sent to the Arduino is as follows:

{"Stop_robot": "False", "direction": "True", "linear_speed": "0.2", "angular_speed": "0.0"}

This message is composed of 4 fields, two boolean and two float. The boolean fields control the direction of the motors (CW or CCW) and whether the motors are stopped. The other two fields are the linear speed and the angular speed. For safety reasons the maximum speeds have been limited to 0.2, which in PWM translates to 20% of the total speed.
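As an illustration of the message format described above, here is a minimal Python sketch of how the Jetson Nano side could build and clamp such a command. The function names `build_command` and `parse_command` are hypothetical, and clamping speeds symmetrically to ±0.2 is an assumption (the text only states that the maximum is 0.2). The parser mirrors what the Arduino does, written in Python only for illustration.

```python
import json

MAX_SPEED = 0.2  # safety limit stated in the text


def clamp(value, limit=MAX_SPEED):
    """Clamp a speed to the range [-limit, limit] (symmetric range is an assumption)."""
    return max(-limit, min(limit, value))


def build_command(linear_speed, angular_speed, forward=True, stop=False):
    """Serialize a motion command with the four fields of the example message.

    Values are encoded as strings to match the message shown in the text.
    """
    message = {
        "Stop_robot": str(stop),
        "direction": str(forward),
        "linear_speed": str(clamp(linear_speed)),
        "angular_speed": str(clamp(angular_speed)),
    }
    return json.dumps(message)


def parse_command(payload):
    """Receiver-side mirror: decode the strings back into typed values."""
    raw = json.loads(payload)
    return {
        "Stop_robot": raw["Stop_robot"] == "True",
        "direction": raw["direction"] == "True",
        "linear_speed": float(raw["linear_speed"]),
        "angular_speed": float(raw["angular_speed"]),
    }


# A request for 0.5 is clamped down to the 0.2 limit before being sent.
command = build_command(linear_speed=0.5, angular_speed=0.0)
print(command)
```

Clamping on the sender keeps the safety limit in one place, although a real system would probably enforce it again on the Arduino.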

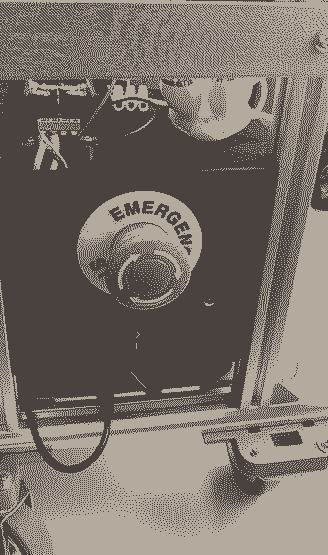

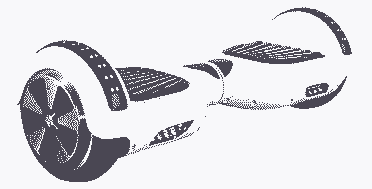

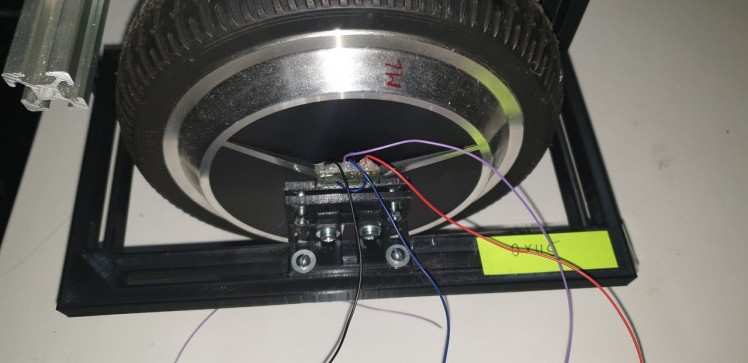

The motors used by the robot are two hoverboard motors (Figure 15).

Figure 15, Hoverboard
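Since the big sister is a differential-drive robot with two motors, a linear and an angular speed have to be turned into one speed per wheel at some point. The sketch below shows the standard differential-drive relation. It is not taken from the project code, and `TRACK_WIDTH` (the distance between the two wheels) is a hypothetical value.

```python
def wheel_speeds(linear_speed, angular_speed, track_width):
    """Differential-drive inverse kinematics.

    linear_speed  : forward speed of the robot centre
    angular_speed : rotation rate around the vertical axis (positive = counter-clockwise)
    track_width   : distance between the two drive wheels
    Returns (left, right) wheel speeds in the same units as linear_speed.
    """
    half = angular_speed * track_width / 2.0
    return linear_speed - half, linear_speed + half


TRACK_WIDTH = 0.5  # hypothetical value, not taken from the project

left, right = wheel_speeds(0.2, 0.0, TRACK_WIDTH)     # straight line: both wheels equal
spin_l, spin_r = wheel_speeds(0.0, 0.2, TRACK_WIDTH)  # turn in place: wheels opposite
print(left, right, spin_l, spin_r)
```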


The configuration of the Hall encoder connections and the motor power cables is shown in Figure 16.

Figure 16. Motor Connections.


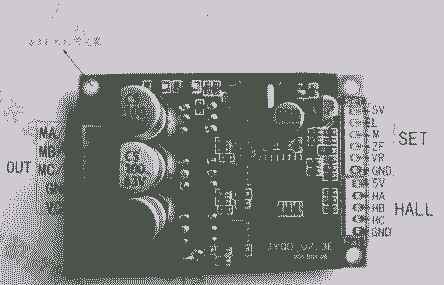

To control these motors, an ESC is needed for each motor. The controller has to support a voltage of 36V, so we used an ESC with MOSFET transistors, shown below (Figure 17).

Figure 17, Motor ESC Controller

Figure 18 shows the different connections made to control the motors (the colors of the cables that control the motor and encoder are standard on all motors).

Figure 18, Diagram for the connection of the motors to the controller

However, one problem with these motors is the encoders. The ESC used to control the robot has an input and an output. The output is connected to an Arduino interrupt pin to count the rotations of the motor. The problem is that the signal arriving at the interrupt pin does not change when the wheel reverses its rotation, so the direction of rotation cannot be detected.

For this reason it was necessary to add an external encoder, which lets the robot know the rotation angle of the wheel and the distance travelled. The encoder used is the AS5048B-TS_EK_AB.


Figure 19, Magnetic Encoder
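To show how an absolute magnetic encoder gives both the direction and the distance travelled, here is a small Python sketch. The AS5048 family reports a 14-bit absolute angle (16384 counts per revolution). The wheel radius, the class name `WheelOdometer` and the sample readings are hypothetical, and the sketch assumes the wheel turns less than half a revolution between two readings.

```python
import math

COUNTS_PER_REV = 2 ** 14  # 14-bit absolute angle
WHEEL_RADIUS_M = 0.08     # hypothetical wheel radius, not taken from the project


def count_delta(previous, current, counts_per_rev=COUNTS_PER_REV):
    """Signed shortest difference between two absolute readings, handling wrap-around."""
    half = counts_per_rev // 2
    return (current - previous + half) % counts_per_rev - half


class WheelOdometer:
    """Accumulates signed wheel travel from successive absolute angle readings."""

    def __init__(self, first_reading, wheel_radius=WHEEL_RADIUS_M):
        self.last = first_reading
        self.wheel_radius = wheel_radius
        self.total_counts = 0

    def update(self, reading):
        self.total_counts += count_delta(self.last, reading)
        self.last = reading
        return self.distance()

    def angle_rad(self):
        """Total signed rotation of the wheel since the first reading."""
        return 2.0 * math.pi * self.total_counts / COUNTS_PER_REV

    def distance(self):
        """Signed distance travelled by the wheel rim, in metres."""
        return self.angle_rad() * self.wheel_radius


odo = WheelOdometer(first_reading=16000)
odo.update(100)    # crossed the zero point while moving forward
odo.update(15900)  # reversed back across the zero point
print(odo.total_counts, odo.distance())
```

Because the sign of each difference follows the direction of rotation, a reversal shows up as a negative step, which the pulse output of the ESC cannot provide.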

Younger Sister

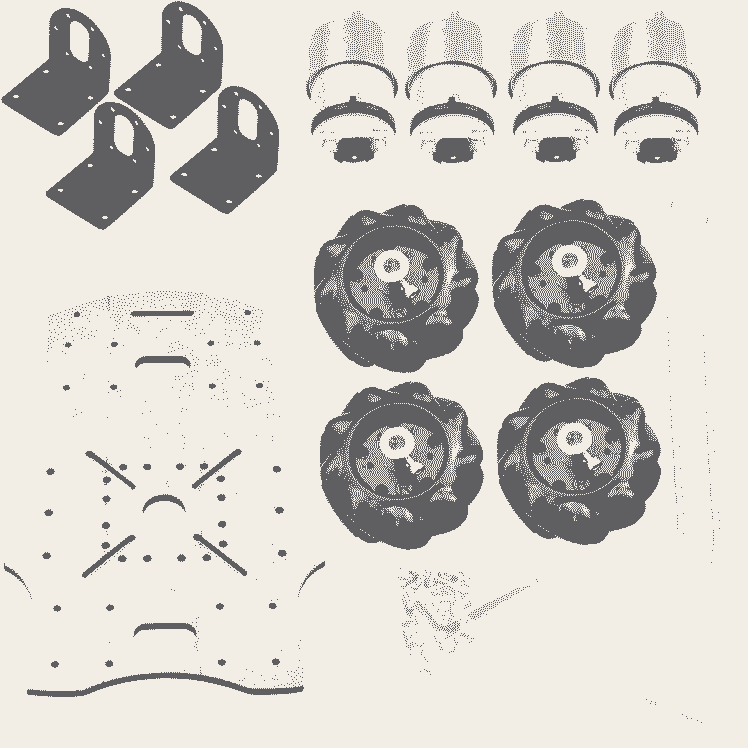

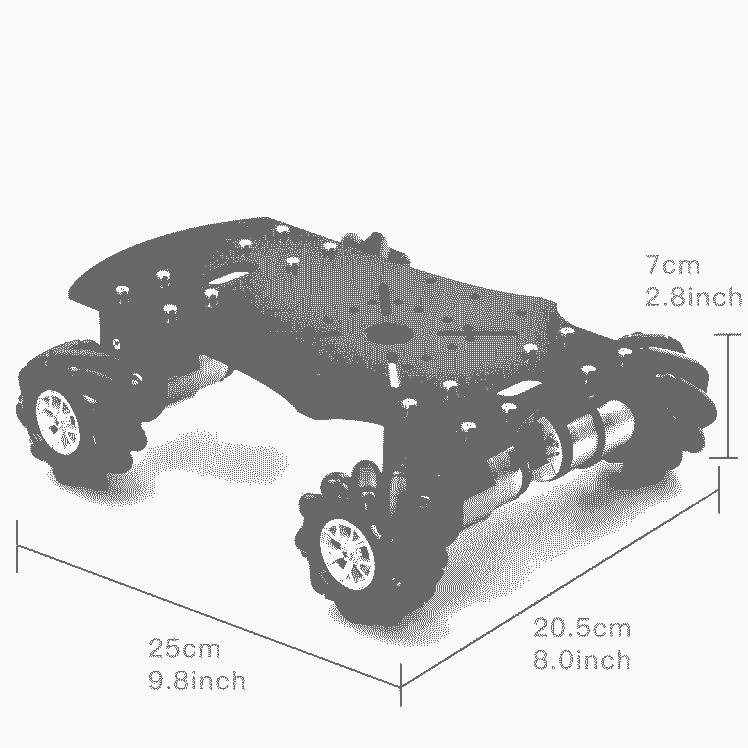

The younger sister was built using an omnidirectional wheeled platform (Figures 20 and 21).

Figure 20, Younger sister parts

Figure 21, Younger sister robot base

Figure 22, Younger sister

Software Description

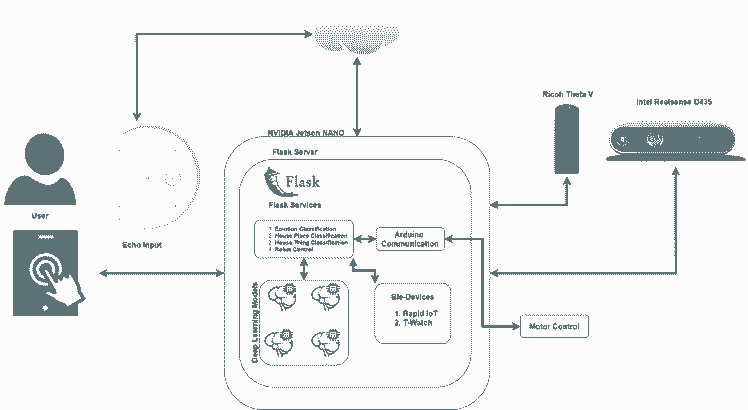

In this section I describe the software tools used so that the Jetson Sisters can perform the different interaction and multi-classification tasks. It is important to note that the sisters use three trained models. Two of them are static (emotion classification and classification of the places in the house), that is, they are trained only once. The third model is dynamic, since the user can increase the number of classes over time. This last model is called "Things of the house".

Figure 25, Proposed system

Deep Learning Section

One of the most important parts of this huge project is the application of deep learning techniques and tools on the Jetson Nano. The idea is that these robots can work inside a house or in the street. The latter is perhaps the most complicated because I don't have a lidar that works outdoors :'(. My robots run several classification models at the same time, that is, the robot uses the same image to determine the place where it is, the emotion of the person (if it detects a face) and the objects inside the home.
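The idea of reusing one image for the three tasks can be sketched as follows. This is only an outline under assumptions: `analyse_frame` and its parameters are hypothetical names, and the models are replaced by stand-in functions so that the example runs without the trained networks.

```python
def analyse_frame(frame, place_model, emotion_model, things_model, face_detector):
    """Run the three classifiers on one frame and collect their answers."""
    result = {
        "place": place_model(frame),
        "things": things_model(frame),
        "emotion": None,
    }
    face = face_detector(frame)
    if face is not None:  # emotion is only estimated when a face is found
        result["emotion"] = emotion_model(face)
    return result


# Stand-in callables in place of the trained models.
frame = "frame-0001"
result = analyse_frame(
    frame,
    place_model=lambda img: "kitchen",
    emotion_model=lambda face: "HA",
    things_model=lambda img: "chair",
    face_detector=lambda img: None,  # no face in this frame
)
print(result)
```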

Note: This project only works with these software versions:

Python Version: 3.6.9

TensorFlow Version: 2.0.0

Keras Version: 2.3.1

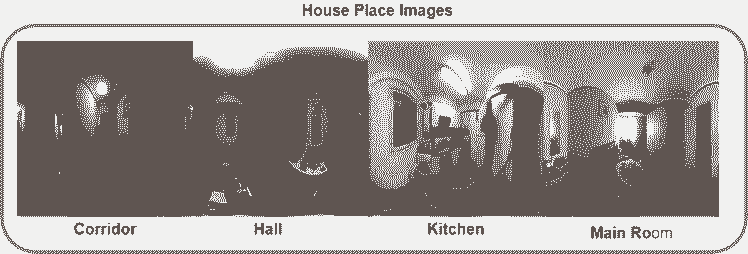

Classification of House Places

For this to be feasible, the robots must be able to recognize places within the home. This requires some preliminary work, i.e. the robots need to learn the place where they will operate. To do this, the robots are placed in each of the rooms of the home (kitchen, living room, corridor, bedrooms, etc.) and take about 200 images per place. These images are captured using a 360º Ricoh Theta V camera.

These images (Figure 26) were resized to 224x224 pixels. Once resized, they were assembled into a data set used for training the network.

Figure 26, House Place Dataset
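The data set assembly step could look roughly like the sketch below. It covers only labelling and splitting, since the resizing itself needs an image library. The file names, the 20% validation fraction and the function `build_dataset` are assumptions for illustration, not details from the project.

```python
import random

IMAGES_PER_PLACE = 200  # approximate figure stated in the text


def build_dataset(images_by_place, validation_fraction=0.2, seed=0):
    """Turn {place: [image paths]} into shuffled (path, label_index) train/validation lists."""
    class_names = sorted(images_by_place)
    samples = [
        (path, class_names.index(place))
        for place in class_names
        for path in images_by_place[place]
    ]
    random.Random(seed).shuffle(samples)  # fixed seed keeps the split reproducible
    n_val = int(len(samples) * validation_fraction)
    return class_names, samples[n_val:], samples[:n_val]


# Hypothetical file names; the real images would come from the camera captures.
places = ["corridor", "kitchen", "living_room"]
images_by_place = {
    place: ["{}/{:03d}.jpg".format(place, i) for i in range(IMAGES_PER_PLACE)]
    for place in places
}
class_names, train, val = build_dataset(images_by_place)
print(class_names, len(train), len(val))
```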

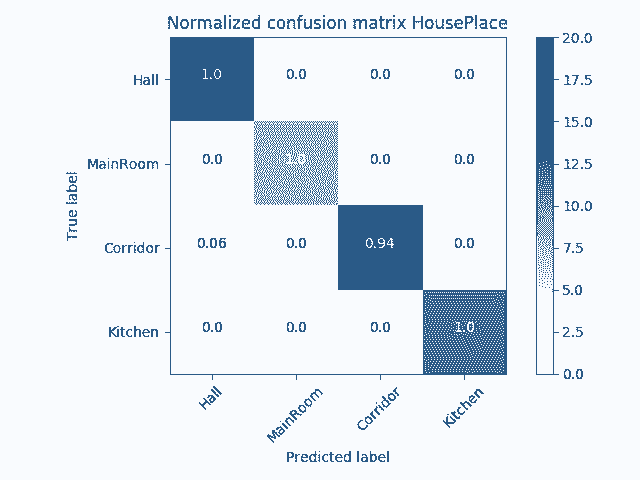

Figure 27 shows the confusion matrix obtained in the validation stage for the classification of the places in the house. It can be seen from this matrix that the system properly recognizes the places where the robot is located.

Figure 27, House Place Confusion Matrix
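For readers unfamiliar with confusion matrices, the following sketch shows how one is built from true and predicted labels. The labels below are made up purely to exercise the functions. They are not results from the project.

```python
def confusion_matrix(true_labels, predicted_labels, class_names):
    """Rows are the true class, columns the predicted class."""
    index = {name: i for i, name in enumerate(class_names)}
    matrix = [[0] * len(class_names) for _ in class_names]
    for truth, guess in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[guess]] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Made-up labels for illustration only.
classes = ["corridor", "kitchen", "living_room"]
truth = ["kitchen", "kitchen", "corridor", "living_room", "living_room", "kitchen"]
guess = ["kitchen", "corridor", "corridor", "living_room", "kitchen", "kitchen"]
cm = confusion_matrix(truth, guess, classes)
print(cm)
print(per_class_recall(cm))
```

A matrix with most of its counts on the diagonal means that the predicted place usually matches the true place.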

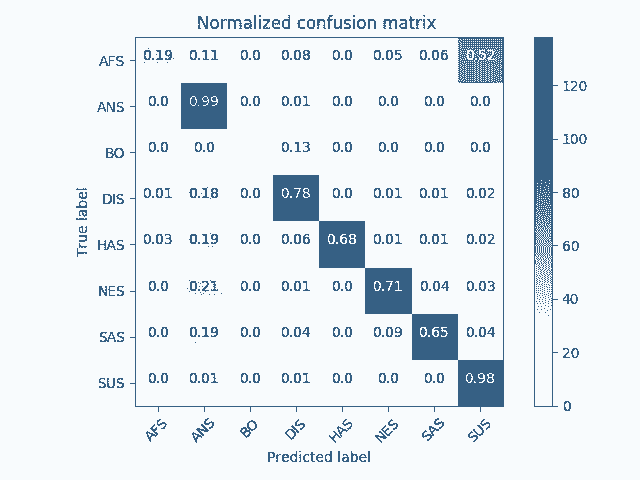

Emotion Classification

Emotion classification is an important aspect of the robot, since emotions influence human behavior and can affect a person's health. For this reason I have incorporated an emotion classification model, which can classify 7 emotions: AF = afraid, AN = angry, DI = disgusted, HA = happy, NE = neutral, SA = sad and SU = surprised.
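A classifier with these 7 classes typically outputs one score per class, which then has to be mapped back to an emotion code. A minimal sketch follows, assuming the network outputs the classes in the order listed above (the actual order used in the project is not stated). The score vector is made up.

```python
CLASS_ORDER = ["AF", "AN", "DI", "HA", "NE", "SA", "SU"]  # assumed output order
EMOTIONS = {
    "AF": "afraid",
    "AN": "angry",
    "DI": "disgusted",
    "HA": "happy",
    "NE": "neutral",
    "SA": "sad",
    "SU": "surprised",
}


def decode_emotion(scores):
    """Return (code, name, score) for the highest-scoring class."""
    if len(scores) != len(CLASS_ORDER):
        raise ValueError("expected one score per emotion class")
    best = max(range(len(scores)), key=lambda i: scores[i])
    code = CLASS_ORDER[best]
    return code, EMOTIONS[code], scores[best]


# Made-up score vector for illustration.
decoded = decode_emotion([0.02, 0.05, 0.01, 0.80, 0.07, 0.03, 0.02])
print(decoded)
```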

Figure 28, Confusion Matrix Emotion Classification

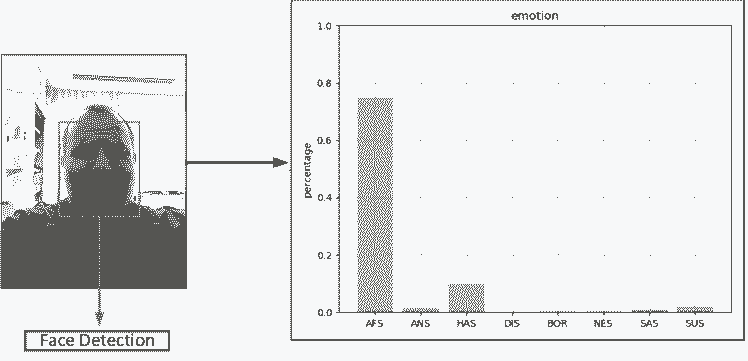

Figure 29, Emotion Classification Test

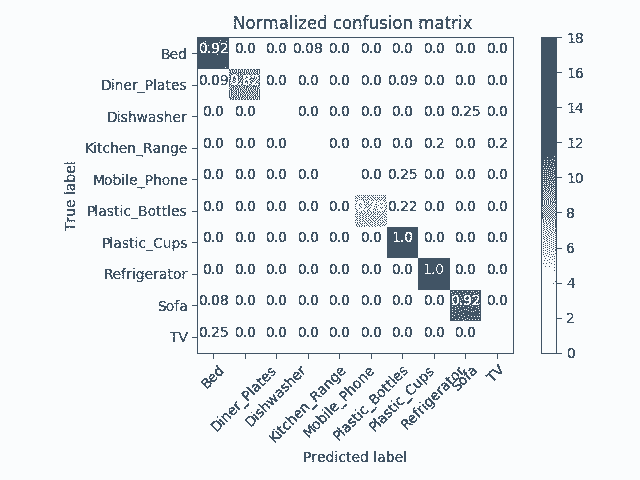

Things of the house

This model classifies the things that can be found inside a house. It is a dynamic model, which means that the user can modify it by adding classes. The model is dynamic mainly because not all houses contain the same objects.

Figure 30, Home Thing Confusion Matrix

Appendix

For the robot to communicate with the Alexa skill, it is necessary to use the ngrok tool, which creates a communication tunnel between Alexa and the robot.

Port: 3000

sudo ./ngrok http 3000

NVIDIA Dev User: Jaime Andres Rincon Arango
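The ngrok tunnel only forwards requests. Whatever web service listens on port 3000 still has to answer Alexa's JSON requests with a JSON response. The sketch below shows that request-to-response step in isolation. The intent name `GoToPlaceIntent` and the slot name `place` are hypothetical, and the web server that would call this function is not shown.

```python
import json


def handle_alexa_request(body):
    """Build an Alexa-style JSON response for a parsed request body."""
    request = body.get("request", {})
    if request.get("type") == "IntentRequest":
        intent = request.get("intent", {})
        # "GoToPlaceIntent" and the "place" slot are hypothetical names.
        if intent.get("name") == "GoToPlaceIntent":
            place = intent.get("slots", {}).get("place", {}).get("value")
            text = "Going to the {}.".format(place) if place else "Where should I go?"
        else:
            text = "I cannot do that yet."
    else:
        text = "The robot is ready."
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


example = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "GoToPlaceIntent",
            "slots": {"place": {"name": "place", "value": "kitchen"}},
        },
    }
}
reply = handle_alexa_request(example)
print(json.dumps(reply, indent=2))
```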

Figure 31, HTML Interface.


Conclusions and Future work

The project presented here is a small part of a personal project I am working on. The main objective was to integrate deep learning algorithms into a Jetson Nano. At the same time, I have been trying to integrate these robots with Amazon's Alexa voice assistant. However, this integration was not successful because of the time the robot takes to classify emotions, places of the house and things in the house. As future work, I hope to integrate Nvidia Isaac to reduce the latency of the voice interaction. I also plan to improve the navigation algorithms, use lidar indoors (Little Sister) and outdoors (Big Sister), add reinforcement learning to the robots, and integrate a multi-agent system so that the two sisters collaborate with each other to improve the user's quality of life.

Possible Errors

If you get this error when you use Flask, Keras and TensorFlow, the solution can be seen in this Link.

AttributeError: '_thread._local' object has no attribute 'value'

Note: The project described here is not a weekend project. It is a complex project that integrates different AI technologies as well as different levels of programming.
