Zerooverflow Smartbin Solution For Smart City Management

About the project

Use the CamThink NeoEye 301 with Home Assistant to monitor trash bin status accurately and raise alerts when a bin needs attention.

Project info

Difficulty: Moderate

Platforms: Home Assistant

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)


Story

CamThink is a new developer-focused brand from Milesight, created to make edge AI simpler for everyone. We build open, developer-friendly edge AI hardware for both community builders and enterprise engineers, and help them move from early prototypes to reliable real-world deployments.

The CamThink NeoEye 301 is powered by the STM32N6 (Cortex-M55) processor with the Neural-ART NPU, which delivers real-time AI inference and professional-grade image processing with ultra-low power consumption.

Home Assistant is a free, open-source home automation platform designed to be a central "brain" for your smart applications. It prioritizes local control and privacy. As of early 2026, Home Assistant has become significantly more user-friendly through its "Year of Voice" and "Collective Intelligence" initiatives.

City management efficiency is often hidden in plain sight, masked by "inefficiency" we've grown used to. Most sanitation vehicles today follow fixed routes, stopping even at empty bins.

In this use case, we show how to monitor trash bin status with an edge AI solution, so that smart city sanitation can shift from "Scheduled Cleaning" to "Demand-driven" collection.

Hardware:

- CamThink NeoEye 301, an ultra-low-power vision AI camera. The cellular version is recommended.

Software Platform:

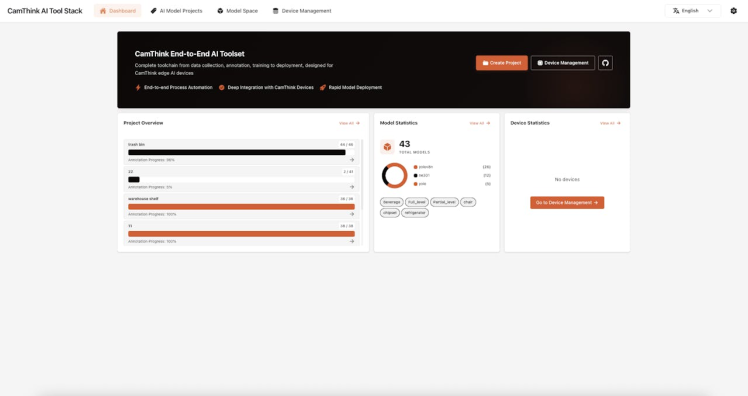

- Camthink AI Tool Stack, an end-to-end AI toolset covering the entire workflow from data collection, annotation, training, quantization, to deployment.

- Home Assistant platform. You need to install it on your server in advance.

In this part, we walk through the full use case step by step.

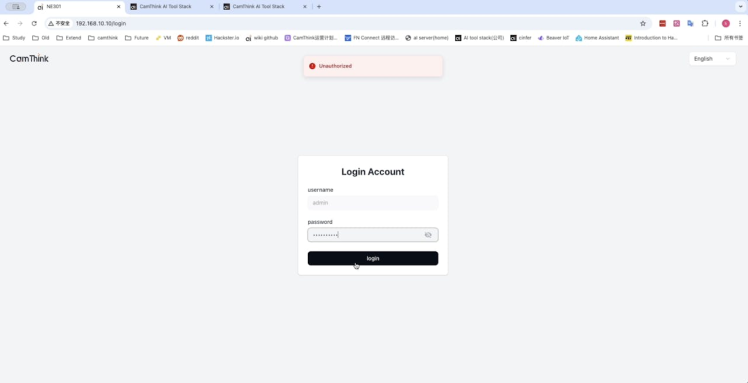

Install the NE301 correctly

First, install the CamThink NE301 with a SIM card inserted, then press and hold the button for 2 to 3 seconds to activate the WiFi.

Connect to the WiFi network whose name starts with NE301_<Last 6 MAC digits>, then open the default IP address: 192.168.10.10

Default Username: admin

Default password: hicamthink

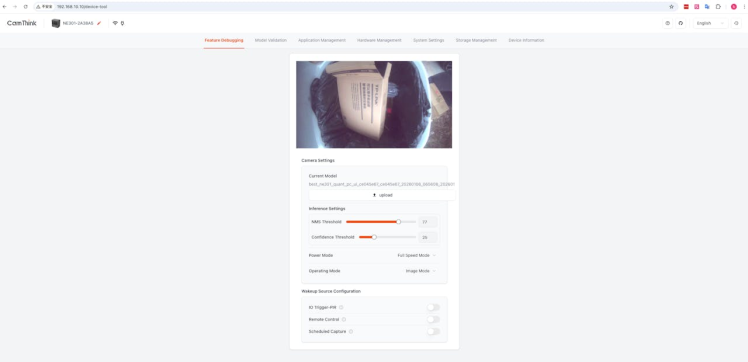

Click Login to see the live view with detailed settings.

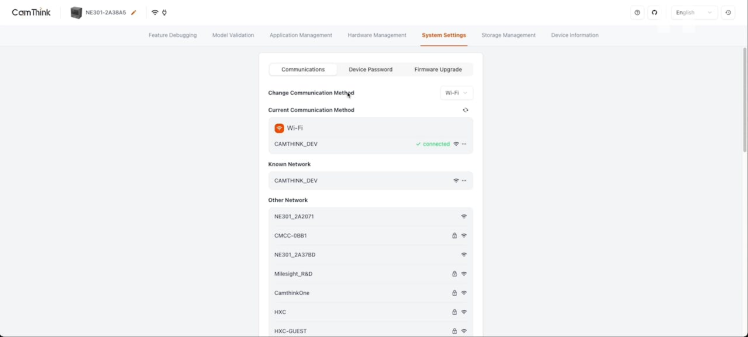

Navigate to System settings to configure network access.

The CamThink NE301 supports WiFi and cellular modules. Choose one of them and make sure the network is connected.

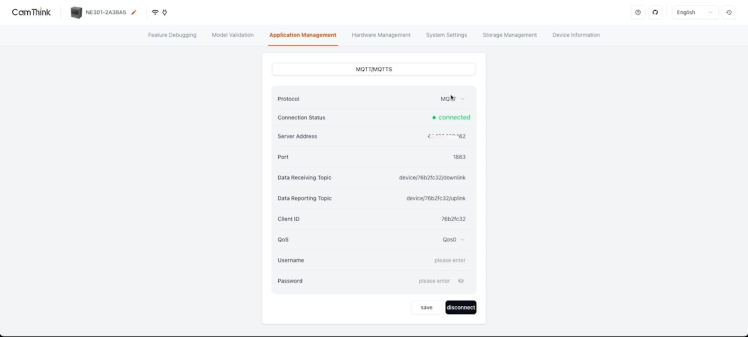

Navigate to Application Management to configure where to forward the data and pictures.

Enter the details of your own MQTT broker or AI Tool Stack server:

- Server address: the IP address of the MQTT broker.

- Port: the port of MQTT server, default value: 1883

- Data Receiving Topic: the downlink command topic used to control and trigger the image capture.

- Data Reporting Topic: the uplink topic used to transmit the data and the pictures.

- Client ID: the MQTT client ID. Some servers verify this value.

- Username: the username for the MQTT server, if the server requires one.

- Password: the password for the MQTT server, if the server requires one.
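Before typing these values into the device, it can help to sanity-check them. The following is a minimal sketch, not part of the NE301 firmware or the AI Tool Stack: the `MqttSettings` class, its field names, the broker address, the downlink topic, and the client ID are all hypothetical. Only the default port 1883 and the uplink topic `device/76b2fc32/uplink` come from this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MqttSettings:
    """Hypothetical container for the values on the Application Management page."""
    server_address: str
    port: int = 1883          # default value mentioned in this guide
    receive_topic: str = ""   # downlink: commands sent to the camera
    report_topic: str = ""    # uplink: data and pictures sent by the camera
    client_id: str = ""
    username: str = ""
    password: str = ""

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means nothing obvious is wrong."""
        issues = []
        if not self.server_address.strip():
            issues.append("server address is empty")
        if not 1 <= self.port <= 65535:
            issues.append(f"port {self.port} is outside 1-65535")
        if not self.receive_topic:
            issues.append("receive topic is empty")
        if not self.report_topic:
            issues.append("report topic is empty")
        elif "#" in self.report_topic or "+" in self.report_topic:
            # MQTT wildcards are only valid when subscribing, not when publishing.
            issues.append("report topic contains an MQTT wildcard")
        if self.receive_topic and self.receive_topic == self.report_topic:
            issues.append("receive and report topics are identical")
        if not self.client_id:
            issues.append("client id is empty")
        return issues


settings = MqttSettings(
    server_address="192.168.1.50",              # hypothetical broker address
    receive_topic="device/76b2fc32/downlink",   # hypothetical downlink topic
    report_topic="device/76b2fc32/uplink",      # uplink topic used later in this guide
    client_id="ne301-76b2fc32",                 # hypothetical client id
)
print(settings.problems())
```

An empty list means the values pass these basic checks. It does not prove that the broker is reachable or that the credentials are accepted.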

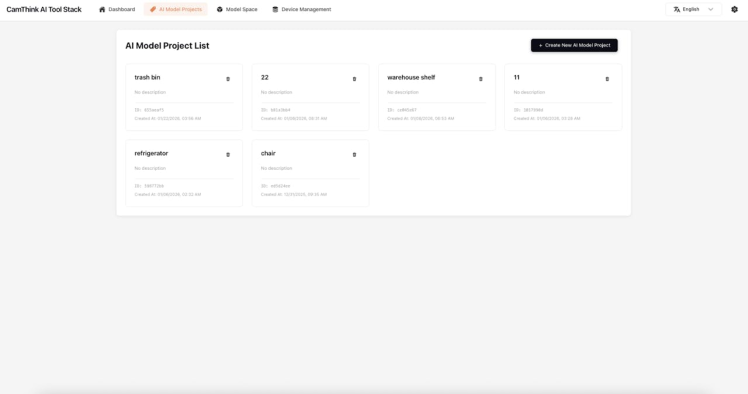

Log in to your own AI Tool Stack server to create a new project.

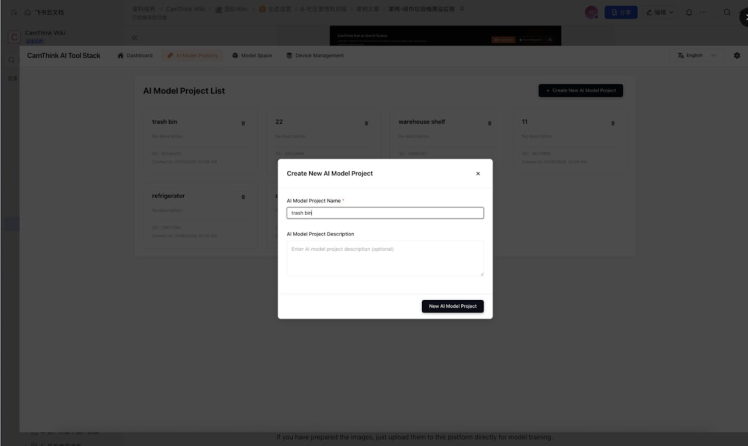

Click Create New AI Model Project, then enter the name and description:

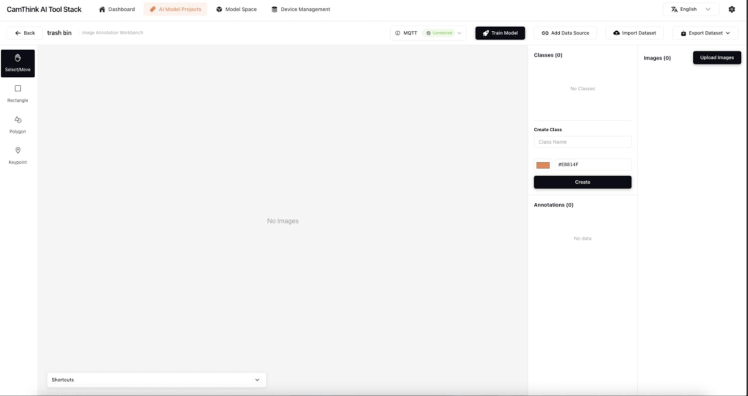

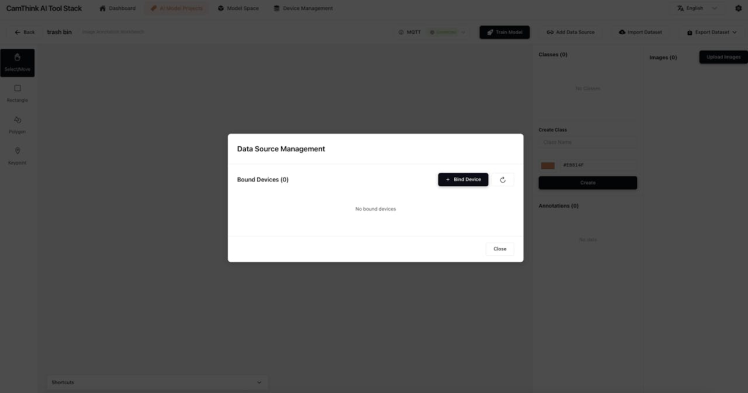

Open this project and bind the device for image collection. You will need to create this device first.

The pictures of the trash bin captured by the NE301 will be uplinked as configured.

If you have already prepared images, upload them to the platform directly for model training.

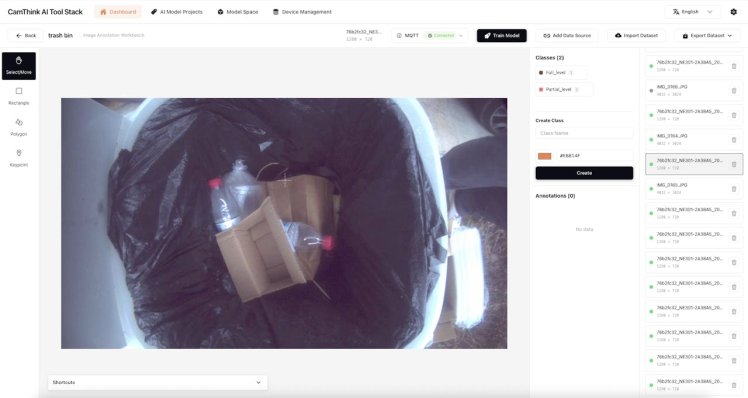

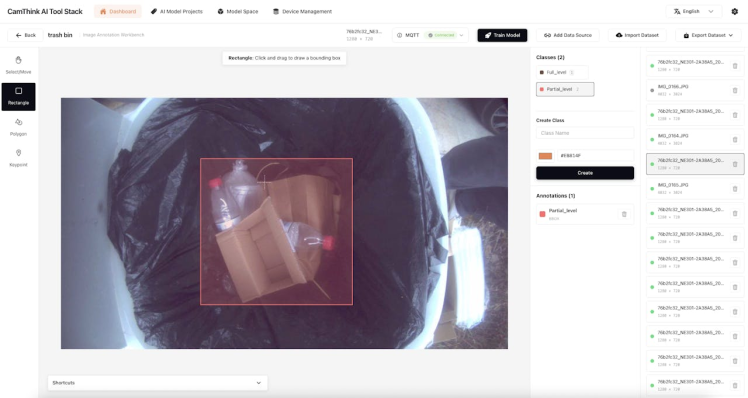

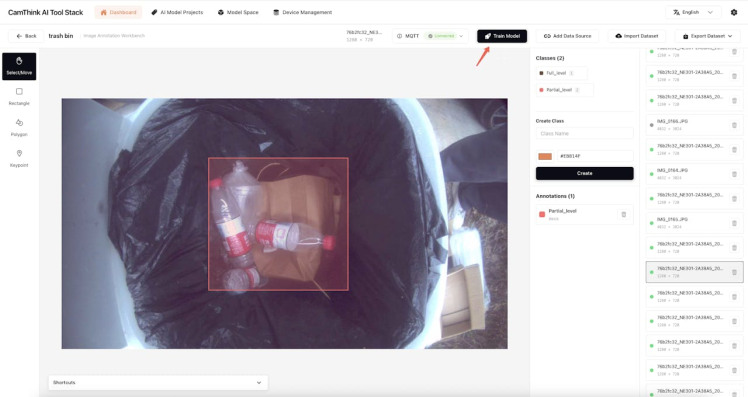

Before training the model, create the classes here. In this example, we create two classes to identify whether the trash bin is at Full level or Partial level.

Choose the proper annotation type to tag the object.

Annotate the images one by one to make sure all the objects are marked correctly.

If you already have a labeled dataset, upload it here directly.

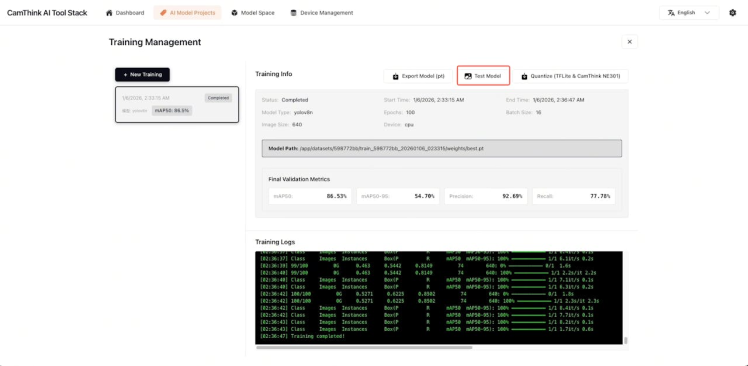

Click 'Train Model' to start the training.

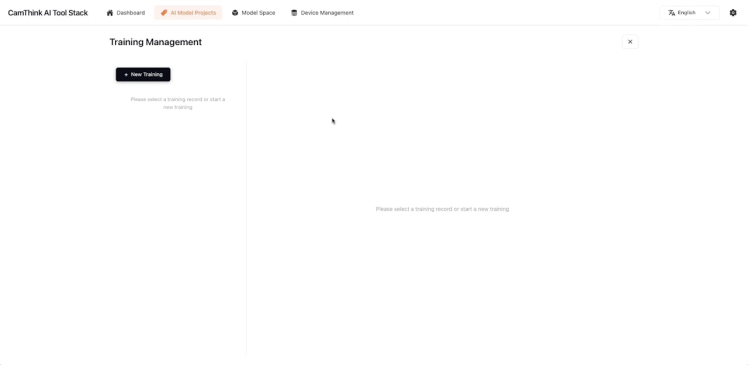

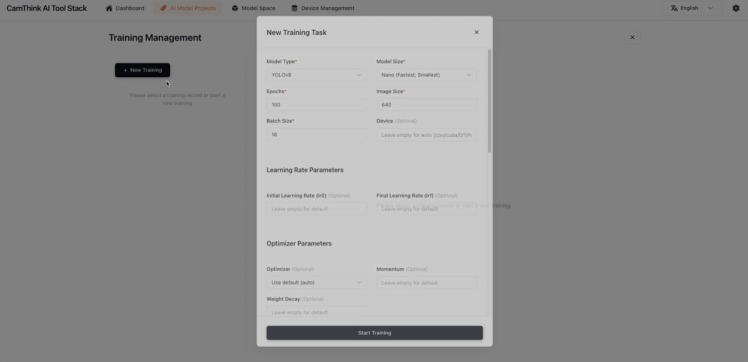

Click 'New Training' to create a new task and keep all the default settings.

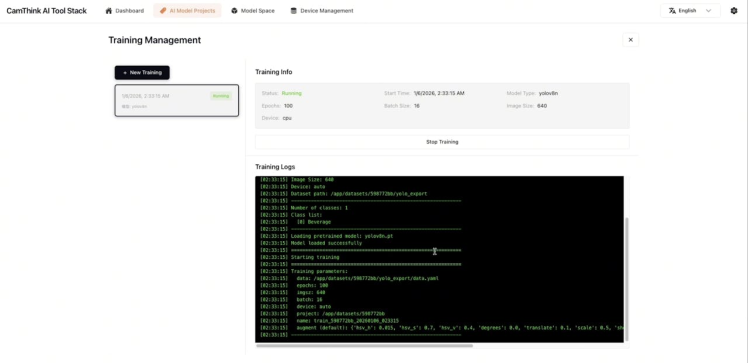

Then start the training. It will take a little while.

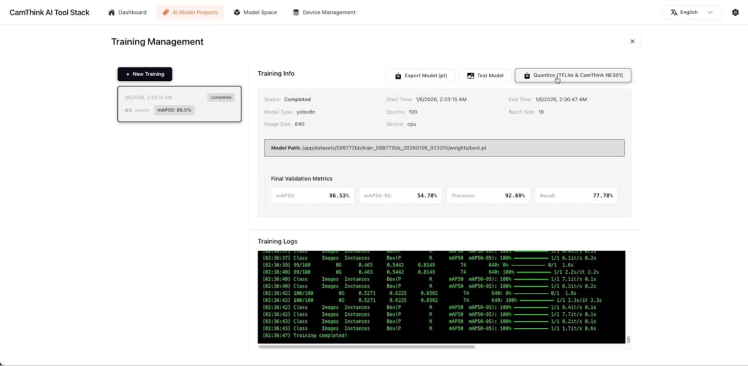

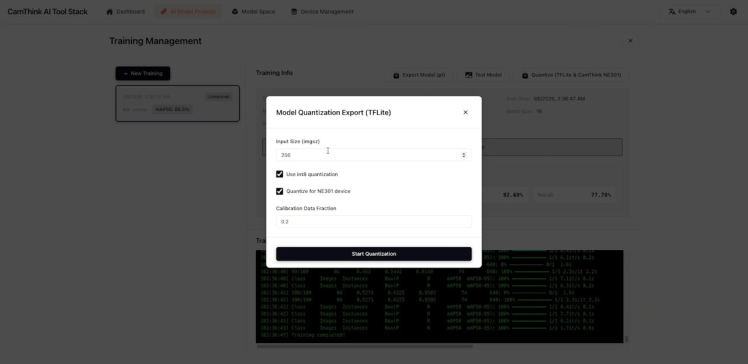

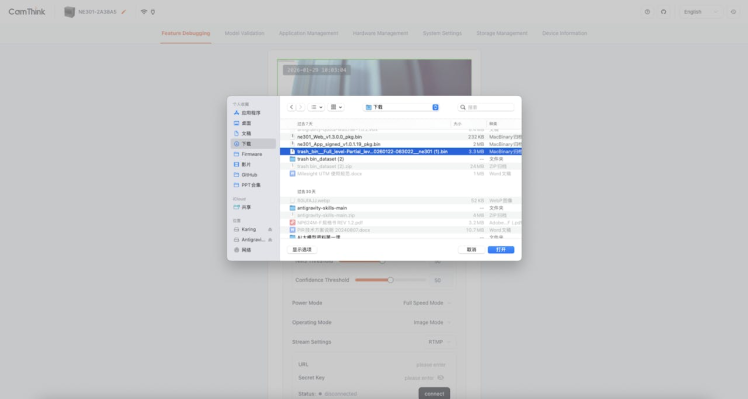

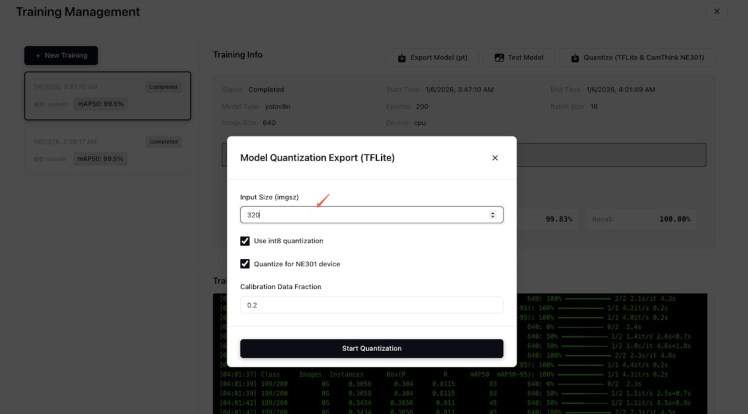

To deploy the model to the NE301, we need to quantize it before uploading it to the device.

Click the Quantize button, keep the default settings, and start the task.

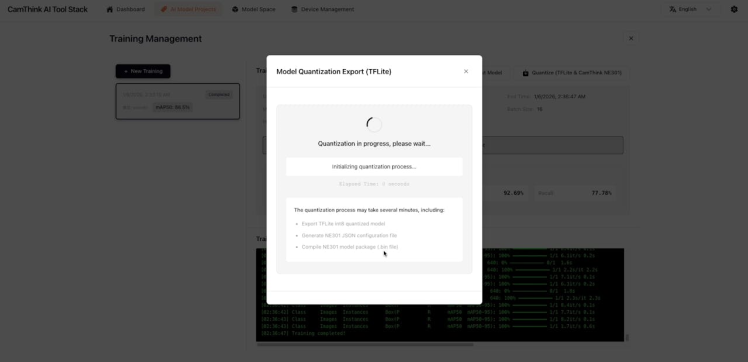

Quantization takes a little while to finish.

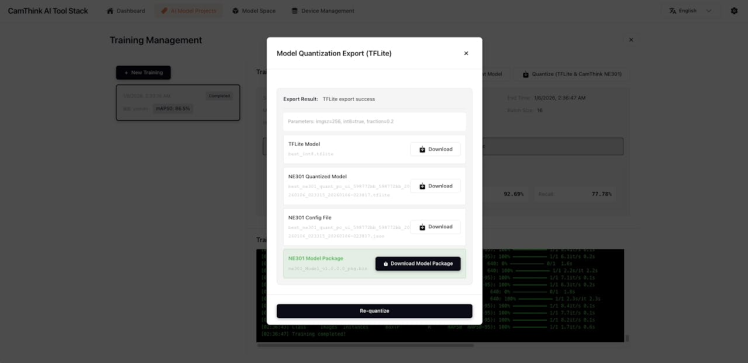

The NE301 Model Package (*.bin) is the exact quantized model. Click to download it.
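As a rough illustration of what a quantize step does in general, the sketch below maps floating-point values to 8-bit integers and back. It is not the AI Tool Stack's actual implementation: the 8-bit width, the affine scheme, and the helper names `quantize` and `dequantize` are assumptions made for this example.

```python
def quantize(values, num_bits=8):
    """Affine-quantize floats to signed integers; return (ints, scale, zero_point)."""
    qmin = -(2 ** (num_bits - 1))
    qmax = 2 ** (num_bits - 1) - 1
    # The represented range must include 0 so that zero stays exact.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    ints = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return ints, scale, zero_point


def dequantize(ints, scale, zero_point):
    """Map the integers back to approximate float values."""
    return [(q - zero_point) * scale for q in ints]


weights = [-0.5, 0.0, 0.25, 1.0]  # made-up example values
ints, scale, zero_point = quantize(weights)
restored = dequantize(ints, scale, zero_point)
for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each restored value lands within a step or two of the original, where one step equals `scale`. That small loss is the price paid for a model that is compact enough to run on the camera.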

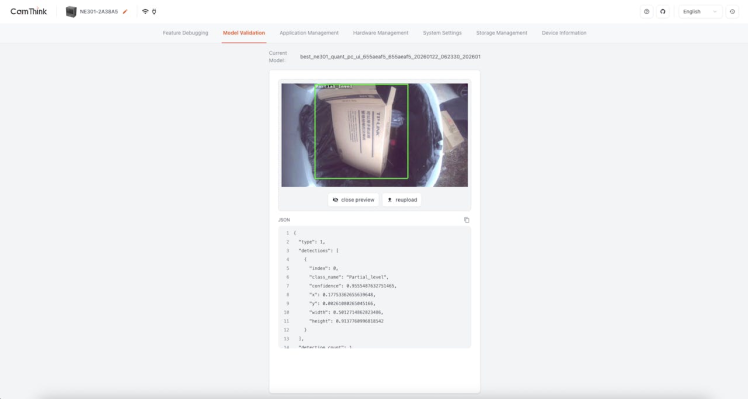

You can also test the model here to confirm that it works as expected.

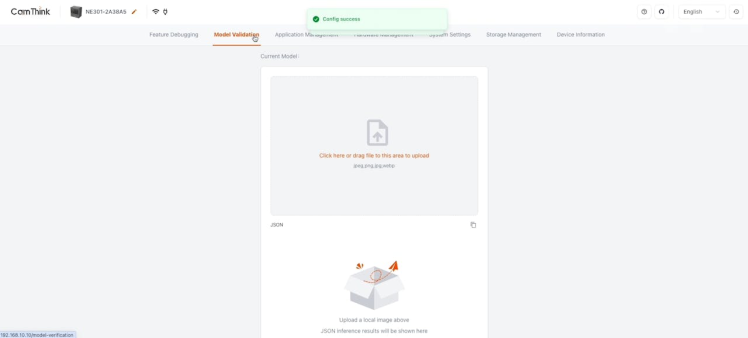

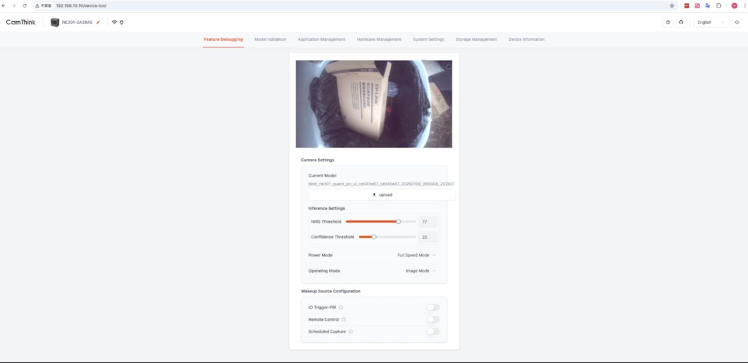

Now go back to the device to upload the new model.

Click the Upload button to install it.


When done, the trash bin in the live view is marked with its detected level.

You can upload more images to verify the performance.

To make the data more useful to customers, we use Home Assistant for integration and visualization. You can also connect the device to other third-party platforms.

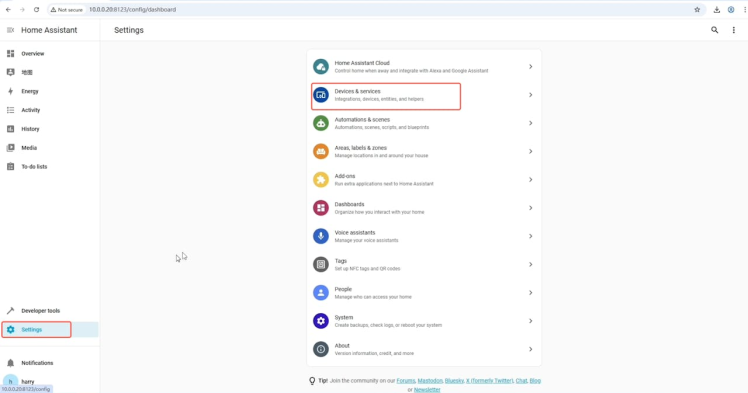

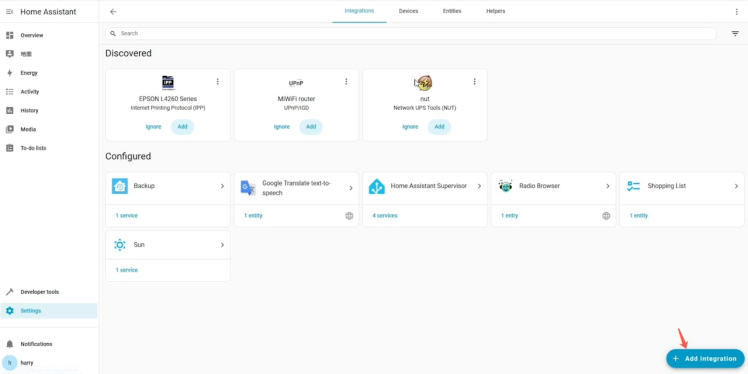

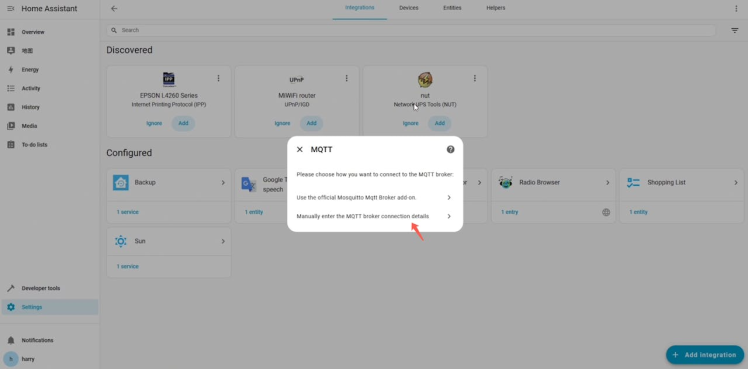

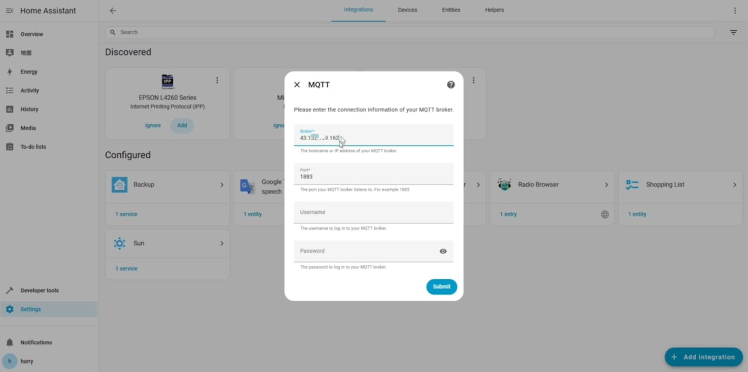

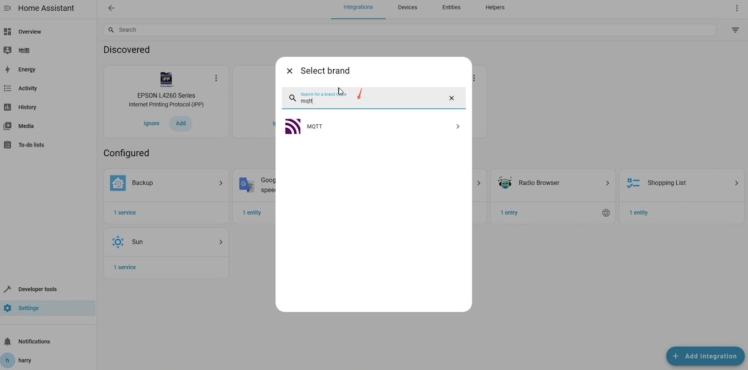

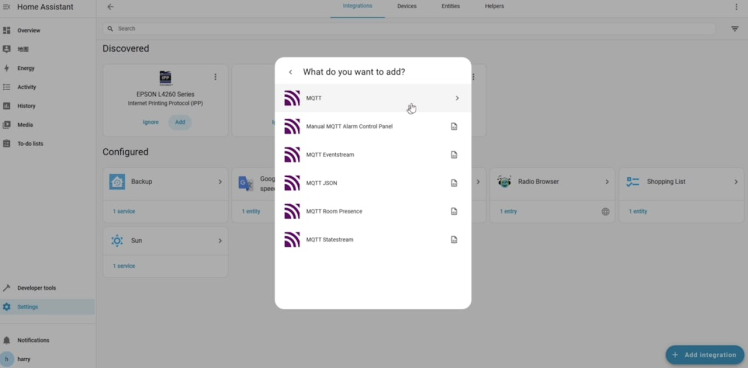

Open 'Devices & Services' to install the MQTT integration.

Click the Add Integration button and search for MQTT.

Choose the second option and enter the details of the MQTT broker configured on the NE301.

Submit to save. The MQTT connection is now ready.

In this guide, we create the MQTT devices by editing the configuration file /homeassistant/configuration.yaml directly.

Here are the configuration details used in this guide:

# Loads default set of integrations. Do not remove.
default_config:

# Load frontend themes from the themes folder
frontend:
  themes: !include_dir_merge_named themes

automation: !include automations.yaml
script: !include scripts.yaml
scene: !include scenes.yaml

mqtt:
  sensor:
    # 1. Bin Status (Resetting ID and logic to force icon display)
    - name: "Bin Status"
      state_topic: "device/76b2fc32/uplink"
      value_template: >
        {% if value_json.ai_result is defined and value_json.ai_result.ai_result.detections is defined %}
          {% set detections = value_json.ai_result.ai_result.detections %}
          {% if detections | length > 0 %}
            {% set class = detections[0].class_name %}
            {% if class == 'Full_level' %} Full
            {% elif class == 'Partial_level' %} Partial
            {% else %} Normal {% endif %}
          {% else %} Normal {% endif %}
        {% else %} Unknown {% endif %}
      # Independent icon logic that doesn't rely on state memory
      icon: >
        {% if value_json.ai_result is defined and value_json.ai_result.ai_result.detections is defined %}
          {% set detections = value_json.ai_result.ai_result.detections %}
          {% if detections | length > 0 %}
            {% set class = detections[0].class_name %}
            {% if class == 'Full_level' %} mdi:delete-circle
            {% elif class == 'Partial_level' %} mdi:delete-variant
            {% else %} mdi:delete {% endif %}
          {% else %} mdi:delete {% endif %}
        {% else %} mdi:delete-off {% endif %}
      # Changed Unique ID to force a fresh start
      unique_id: bin_status_sensor_new_v5

    # 2. Battery Status
    - name: "Battery Status"
      state_topic: "device/76b2fc32/uplink"
      value_template: "{{ value_json.device_info.battery_percent | default(0) }}"
      unit_of_measurement: "%"
      device_class: battery
      state_class: measurement
      unique_id: trash_bin_battery_76b2fc32

    # 3. Device Name
    - name: "Device Name"
      state_topic: "device/76b2fc32/uplink"
      value_template: "{{ value_json.device_info.device_name | default('Unknown') }}"
      icon: mdi:information-outline
      unique_id: trash_bin_device_name_76b2fc32

You will need to update the name, state_topic, and class values to match your own setup.
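To check the Bin Status logic offline before pasting the template into Home Assistant, the same decision tree can be mirrored in a few lines of Python. This is only a sketch for testing the branching. The function name `bin_status` is hypothetical, and Home Assistant itself evaluates the Jinja template, not this code.

```python
def bin_status(payload: dict) -> str:
    """Mirror the Bin Status value_template: Full, Partial, Normal, or Unknown."""
    detections = (
        payload.get("ai_result", {})
        .get("ai_result", {})
        .get("detections")
    )
    if detections is None:
        return "Unknown"   # the report carries no detection list at all
    if not detections:
        return "Normal"    # the model ran but found nothing
    labels = {"Full_level": "Full", "Partial_level": "Partial"}
    # Like the template, only the first detection is considered.
    return labels.get(detections[0].get("class_name"), "Normal")


full = {"ai_result": {"ai_result": {"detections": [{"class_name": "Full_level"}]}}}
empty = {"ai_result": {"ai_result": {"detections": []}}}
print(bin_status(full), bin_status(empty), bin_status({}))
```

If you rename the classes in your own project, the keys of `labels` play the same role as the class names compared in the template.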

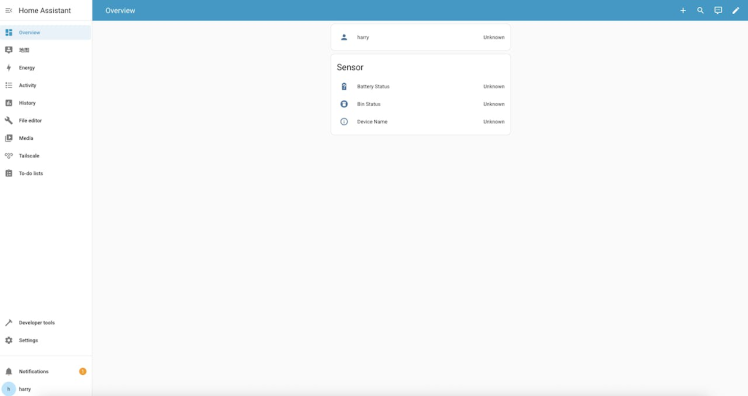

Then apply the changes by clicking 'Manually configured MQTT entities' on the Developer tools page.

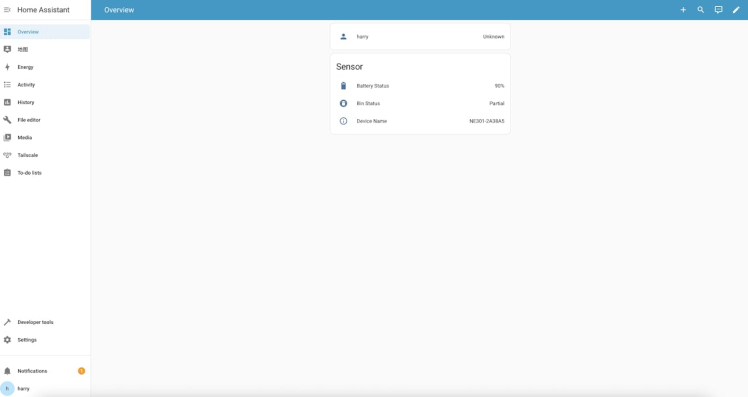

You will find that the sensors are created correctly.

Quick Test

Let's start monitoring the fill level of the trash bin. The status appears with the correct values.

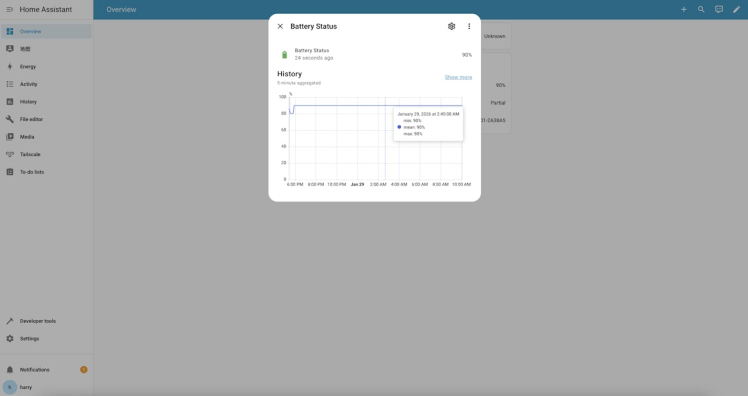

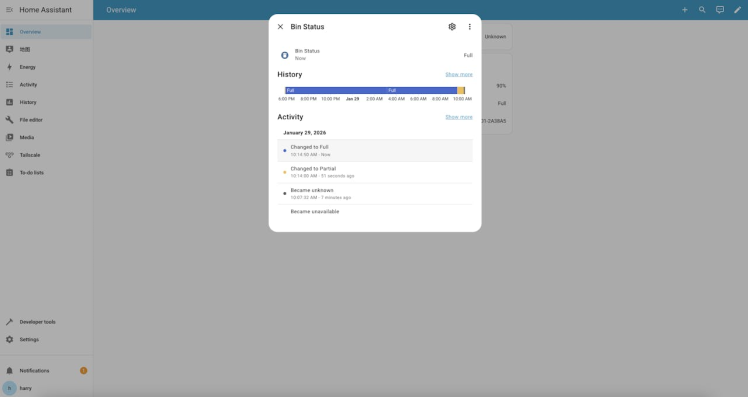

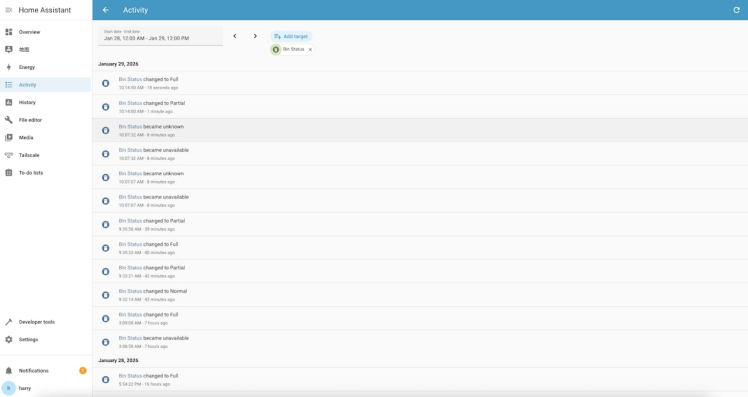

You can also check the status in more detail in the history.

Home Assistant also supports other applications for triggering alerts. You can configure them to complete the management workflow.
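The decision behind a demand-driven alert is simple once each bin reports a status. The sketch below illustrates it under stated assumptions: the bin names and the `bins_to_collect` helper are made up for this example, and in practice the rule would live in a Home Assistant automation, not in a standalone script.

```python
def bins_to_collect(statuses: dict[str, str], include_partial: bool = False) -> list[str]:
    """Return the bins that should be visited, sorted by name."""
    wanted = {"Full", "Partial"} if include_partial else {"Full"}
    return sorted(name for name, status in statuses.items() if status in wanted)


# Hypothetical snapshot of several "Bin Status" sensors.
statuses = {
    "Park gate": "Full",
    "Bus stop": "Normal",
    "Market": "Partial",
    "School": "Unknown",
}
print(bins_to_collect(statuses))                        # ['Park gate']
print(bins_to_collect(statuses, include_partial=True))  # ['Market', 'Park gate']
```

Bins reporting Normal or Unknown are skipped, which is exactly the stop a fixed route would have wasted.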

Q&A

Q: Can I use the same MQTT broker and CamThink AI Tool Stack for model training and quantization?

A: The server in this guide is for internal use. You need to install your own AI Tool Stack.

Q: Since the target object is small, how can I improve the model's performance?

A: You can try setting the Input Size option to 320 during the quantize process.

Q: How do I set the value template in Home Assistant?

A: See the Home Assistant website for details on how to use templates. Here is an example of the NE301's report data:

{
  "metadata": {
    "image_id": "cam01_1767603614",
    "timestamp": 1767603614,
    "format": "jpeg",
    "width": 1280,
    "height": 720,
    "size": 38692,
    "quality": 60
  },
  "device_info": {
    "device_name": "NE301-2A38A5",
    "mac_address": "44:9f:da:2a:38:a5",
    "serial_number": "SN202500001",
    "hardware_version": "V1.1",
    "software_version": "1.0.1.1146",
    "power_supply_type": "full-power",
    "battery_percent": 90,
    "communication_type": "wifi"
  },
  "ai_result": {
    "model_name": "YOLOv8 Nano Object Detection Model",
    "model_version": "1.0.0",
    "inference_time_ms": 50,
    "confidence_threshold": 0.23999999463558197,
    "nms_threshold": 0.55000001192092896,
    "ai_result": {
      "type": 1,
      "detections": [
        {
          "index": 0,
          "class_name": "Full_level",
          "confidence": 0.9868782162666321,
          "x": 0.33418095111846924,
          "y": 0.29501914978027344,
          "width": 0.5012714862823486,
          "height": 0.37073203921318054
        }
      ],
      "detection_count": 1,
      "poses": [],
      "pose_count": 0,
      "type_name": "object_detection"
    }
  },
  "encoding": "base64"
}
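To see which fields a value template can reach, it may help to walk through the report in plain Python first. The sketch below parses a trimmed copy of the example above and pulls out the fields used in this guide. The `summarize` helper and its `min_confidence` cutoff of 0.5 are hypothetical, and reading `timestamp` as seconds since the Unix epoch in UTC is an assumption.

```python
import json
from datetime import datetime, timezone

# Trimmed copy of the example report; only the fields used below are kept.
SAMPLE = """
{
  "metadata": {"image_id": "cam01_1767603614", "timestamp": 1767603614},
  "device_info": {"device_name": "NE301-2A38A5", "battery_percent": 90},
  "ai_result": {
    "ai_result": {
      "detections": [
        {"index": 0, "class_name": "Full_level", "confidence": 0.9868782162666321}
      ]
    }
  }
}
"""


def summarize(report: dict, min_confidence: float = 0.5) -> dict:
    """Extract device name, battery, capture time, and the most confident detection."""
    info = report.get("device_info", {})
    ts = report.get("metadata", {}).get("timestamp")
    detections = report.get("ai_result", {}).get("ai_result", {}).get("detections", [])
    # Drop weak detections, then keep the most confident of the rest.
    kept = [d for d in detections if d.get("confidence", 0.0) >= min_confidence]
    best = max(kept, key=lambda d: d.get("confidence", 0.0), default=None)
    return {
        "device": info.get("device_name", "Unknown"),
        "battery_percent": info.get("battery_percent"),
        # Assumption: the timestamp is in seconds since the Unix epoch (UTC).
        "captured_at": (
            datetime.fromtimestamp(ts, tz=timezone.utc).isoformat() if ts is not None else None
        ),
        "class_name": best["class_name"] if best else None,
        "confidence": round(best["confidence"], 3) if best else None,
    }


summary = summarize(json.loads(SAMPLE))
print(summary)
```

The nested path `ai_result` → `ai_result` → `detections` is the same one the Bin Status template follows through `value_json`.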
