Traffic Segmentation Using Ultra96V2 and Vitis AI

About the project

This is a real-time demo running on the Ultra96V2 that segments a live YouTube video stream from Shibuya Crossing, Tokyo.

Story

Overview

Traffic congestion has become a major problem for almost every large metropolitan area, and a reliable transportation system requires intelligent traffic control. In this project I demonstrate a method of collecting data for estimating traffic congestion on roads using image processing and a pre-trained FPN model.

Ultra96V2 board Setup

The pre-built SD card image can be downloaded from http://avnet.me/avnet-ultra96v2-vitis-ai-1.2-image. It includes models compiled for the B2304 (1 x DPU) configuration with low RAM usage. The image is flashed to the SD card using balenaEtcher. After powering up and booting the Ultra96V2 board, we can connect to it over Wi-Fi (look for an SSID similar to Ultra96V2). The default web interface is then available at http://192.168.2.1/, where the Wi-Fi can be configured to join the local network (home/office Wi-Fi router) so that the board can use the internet.

Method

I have used the Xilinx Vitis AI development stack for inferencing. The inferencing script is written in Python using the Vitis AI Runtime (VART) API. The code can be found in the GitHub repository mentioned in the code section at the end.

The workflow is to read a YouTube live stream from a video camera mounted at Shibuya Crossing, Tokyo and segment it using a Caffe FPN model from the Vitis AI Model Zoo. Although the C++ API seems faster, I have chosen the Python API for this demonstration because the code is easier for beginners to understand. The Ultra96V2 board has Wi-Fi, so I used it as an edge device without any camera or monitor connected. The Ultra96V2 does not have a hardware video decoder, so I am using OpenCV to process the incoming video stream, and the output is streamed over the local network using GStreamer. The script uses 4 threads: 2 threads for DPU inferencing tasks, 1 thread for capturing the live stream, and 1 thread for streaming the output.
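The four-thread layout described above can be sketched with standard-library queues. This is only an illustration and not the code from the repository: the function names, the queue sizes, and the frame reordering step are assumptions, and `run_model` is a stand-in for the actual DPU call made through VART.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def capture(frames, out_q, n_workers):
    """Capture thread: push (index, frame) pairs, then one sentinel per worker."""
    for i, frame in enumerate(frames):
        out_q.put((i, frame))
    for _ in range(n_workers):
        out_q.put(SENTINEL)


def infer(in_q, out_q, run_model):
    """Inference thread: run the model on each frame until a sentinel arrives."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            out_q.put(SENTINEL)  # tell the output thread this worker is done
            break
        i, frame = item
        out_q.put((i, run_model(frame)))


def stream(in_q, n_workers, send):
    """Output thread: restore the frame order, then pass each result to `send`."""
    pending, next_idx, finished = {}, 0, 0
    while finished < n_workers:
        item = in_q.get()
        if item is SENTINEL:
            finished += 1
            continue
        pending[item[0]] = item[1]
        while next_idx in pending:
            send(pending.pop(next_idx))
            next_idx += 1


def run_pipeline(frames, run_model, send, n_workers=2):
    """Start 1 capture thread, n_workers inference threads and 1 output thread."""
    frame_q = queue.Queue(maxsize=8)   # bounded so capture cannot run far ahead
    result_q = queue.Queue(maxsize=8)
    threads = [threading.Thread(target=capture, args=(frames, frame_q, n_workers))]
    threads += [
        threading.Thread(target=infer, args=(frame_q, result_q, run_model))
        for _ in range(n_workers)
    ]
    threads.append(threading.Thread(target=stream, args=(result_q, n_workers, send)))
    for t in threads:
        t.start()
    for t in threads:
        t.join()


# Stand-in usage: integers instead of frames, doubling instead of DPU inference.
results = []
run_pipeline(range(5), lambda frame: frame * 2, results.append)
print(results)  # [0, 2, 4, 6, 8]
```

With two inference workers, results can complete out of order, which is why this sketch tags every frame with an index and reorders before sending.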

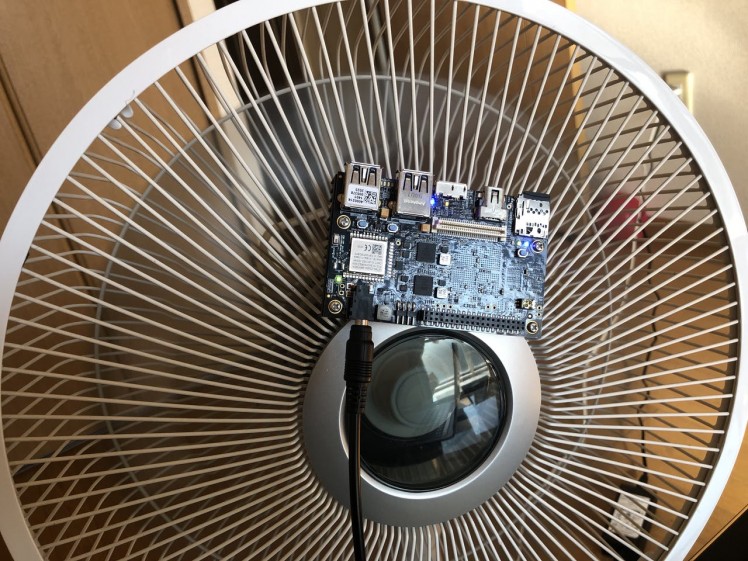

The Ultra96V2 comes with a heatsink that works well, but running multithreaded inferencing makes the board very hot and the CPU gets throttled, so I put it on a table fan cover for better cooling.

An FPN (Feature Pyramid Network) model pre-trained on the Cityscapes dataset is used for the segmentation task.
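To turn the model output into a viewable image, each pixel is typically assigned the class with the highest score and then painted with that class's color. The sketch below assumes the output for one frame is an array of shape (height, width, classes) with 19 Cityscapes classes; the palette here is random and purely illustrative, and the helper names are hypothetical rather than taken from the repository.

```python
import numpy as np

NUM_CLASSES = 19  # assumed: the 19 Cityscapes evaluation classes


def make_palette(num_classes=NUM_CLASSES, seed=0):
    """Return a (num_classes, 3) uint8 table of arbitrary colors (illustrative only)."""
    rng = np.random.default_rng(seed)
    return rng.integers(0, 256, size=(num_classes, 3)).astype(np.uint8)


def colorize(scores, palette):
    """Map per-pixel class scores (H, W, C) to a color image (H, W, 3)."""
    class_ids = np.argmax(scores, axis=-1)  # (H, W) array of class indices
    return palette[class_ids]               # look up one color per pixel


# Stand-in usage with a tiny 2x2 "frame" of scores.
scores = np.zeros((2, 2, NUM_CLASSES), dtype=np.float32)
scores[0, 0, 3] = 1.0  # pixel (0, 0) is most likely class 3
palette = make_palette()
mask = colorize(scores, palette)
print(mask.shape)  # (2, 2, 3)
```

The colored mask can then be blended with the original frame, for example with OpenCV's `cv2.addWeighted`, before it is streamed out.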

Real time Inferencing

Please follow the steps below to run the inferencing demo.

Connect to the Ultra96V2 board using ssh (the default user and password are both root):

- $ ssh root@ultra96v2-ip-address

Clone the repository and install prerequisites:

- $ git clone https://github.com/metanav/AdaptiveComputing.git

- $ cd AdaptiveComputing

- $ pip3 install pafy

Change the following variable in main.py to match the IP address of your target machine:

ip = '192.168.3.2'

Run the inferencing script:

- $ python3 main.py

Install GStreamer on the target machine and run the following command:

- $ gst-launch-1.0 udpsrc port=1234 ! application/x-rtp,encoding-name=JPEG,payload=26 ! rtpjpegdepay ! jpegdec ! autovideosink
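The receiving command above expects RTP-wrapped JPEG frames on UDP port 1234, so the sending side needs a matching pipeline. The sketch below builds one possible sender pipeline string; it is an assumption about how such a sender could look, not a copy of what main.py does, and `sender_pipeline` is a hypothetical helper.

```python
def sender_pipeline(host, port=1234):
    """Build a GStreamer pipeline that JPEG-encodes frames and sends them as RTP over UDP."""
    return (
        "appsrc ! videoconvert ! jpegenc ! rtpjpegpay "
        f"! udpsink host={host} port={port}"
    )


pipeline = sender_pipeline("192.168.3.2")
print(pipeline)

# With an OpenCV build that includes GStreamer support, the string could be
# passed to a VideoWriter (width, height and frame are placeholders here):
#   import cv2
#   writer = cv2.VideoWriter(pipeline, cv2.CAP_GSTREAMER, 0, 30.0, (width, height))
#   writer.write(frame)  # frame: BGR uint8 image of size (height, width)
```

`rtpjpegpay` uses RTP payload type 26 by default, which matches the `payload=26` caps in the receiving command.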

I would like to thank Avnet for providing the Ultra96-V2 development board.
