Phototrap With Object Detection Alarm

About the project

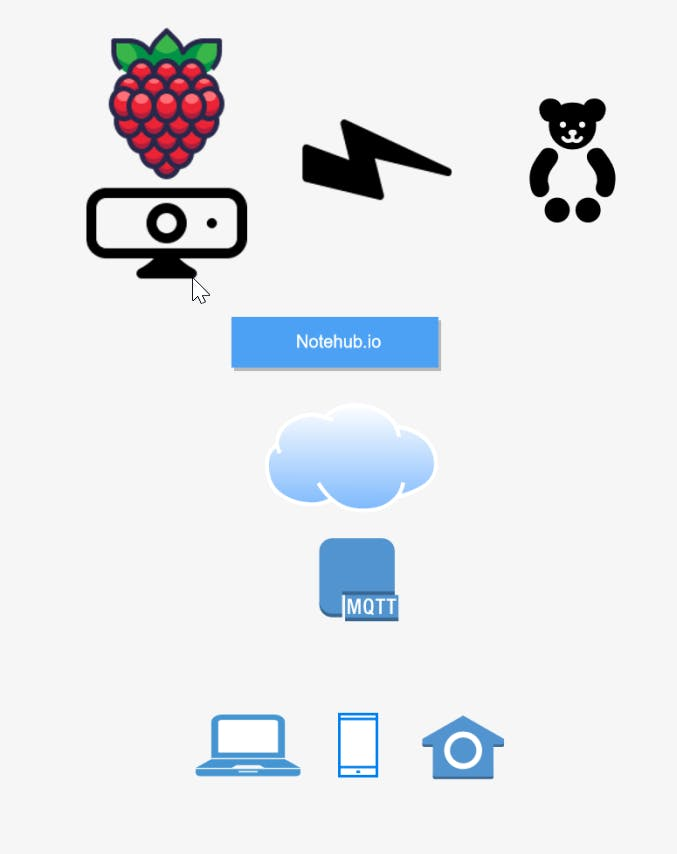

A design study that uses ML with Edge Impulse to detect an object, and Blues Wireless hardware, Notehub.io and MQTT for notification.

Project info

Difficulty: Easy

Platforms: Raspberry Pi, Edge Impulse, MQTT, Blues Wireless

Estimated time: 2 hours

License: GNU General Public License, version 3 or later (GPL3+)

Story

This is a design study that detects objects with Edge Impulse and sends each detection to the cloud via Blues Wireless hardware. This makes it possible to raise a signal or an alarm regardless of location, or to collect the detections for a long-term analysis.

My setup with Raspberry Pi and webcam

Conceivable scenarios are a wildlife camera that detects animals in general, or one that raises an alarm only for specific animals.

Of course this also works with people: raise an alarm when someone appears or, the other way round, raise no alarm when the right person comes through the entrance.

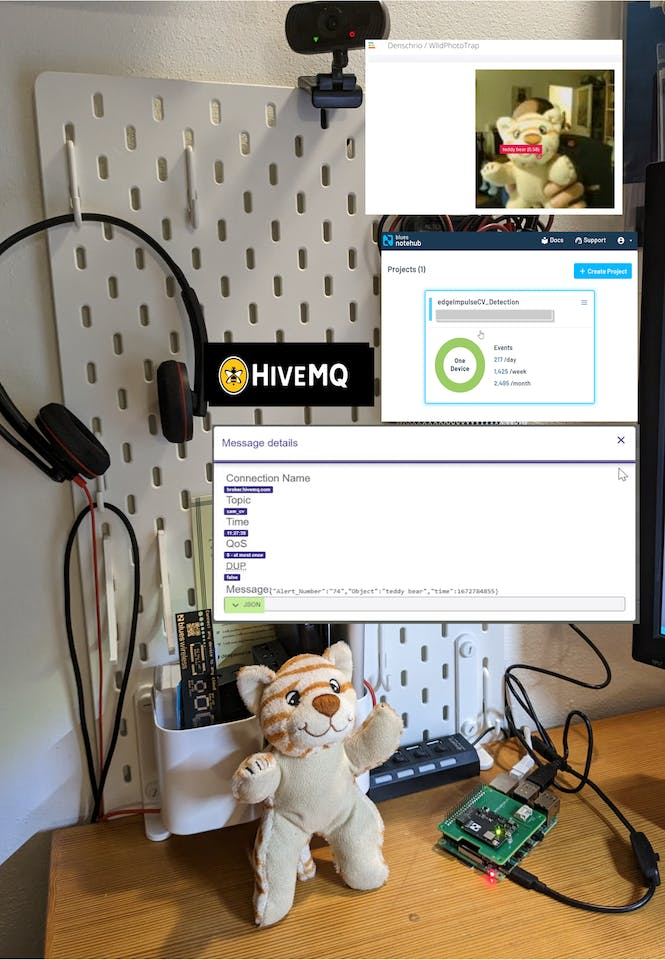

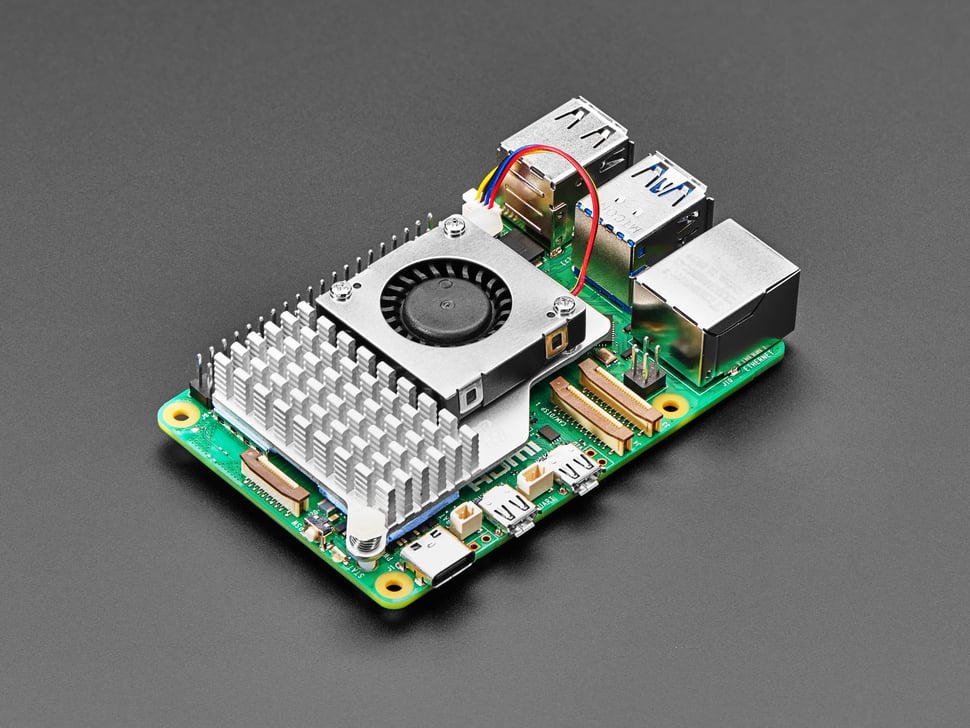

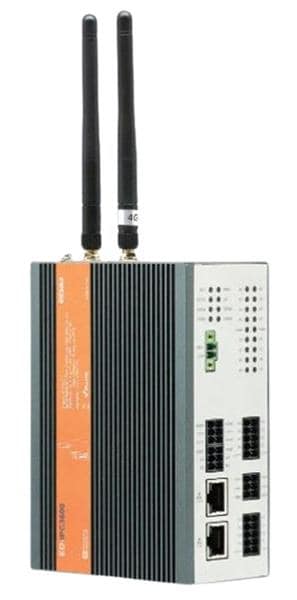

Hardware

Here we used a Raspberry Pi 4 (2 GB) with a commercial webcam and the Blues Wireless Notecarrier Pi HAT.

The better the quality of the optics, the better the result, especially in difficult lighting conditions.

If the ML model is trained with the images from the camera, the recognition rate increases significantly.

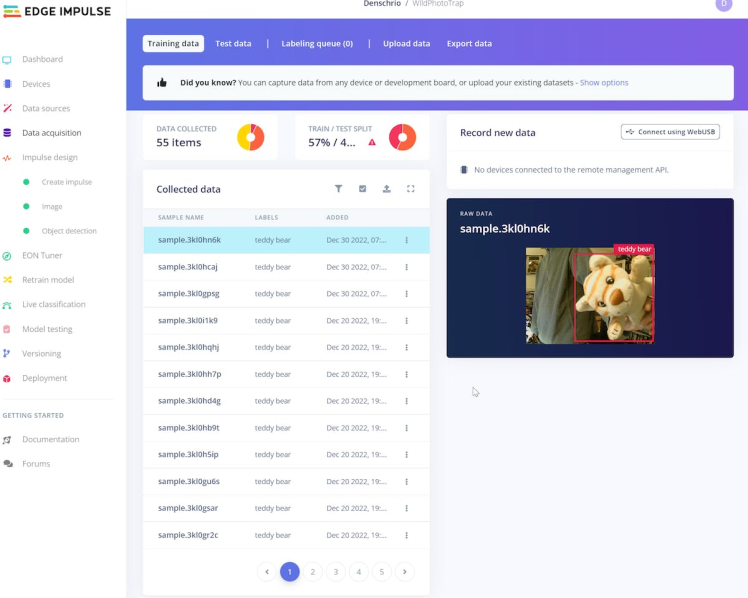

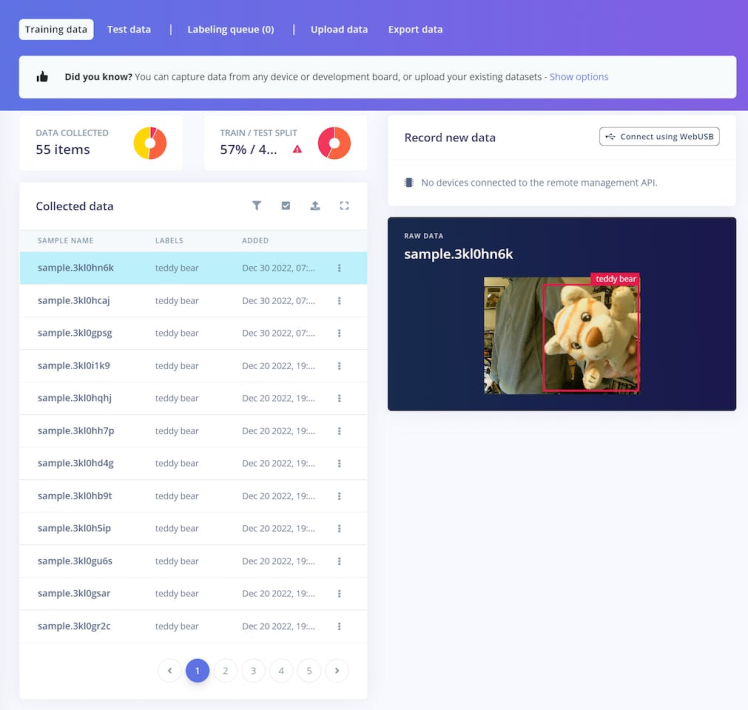

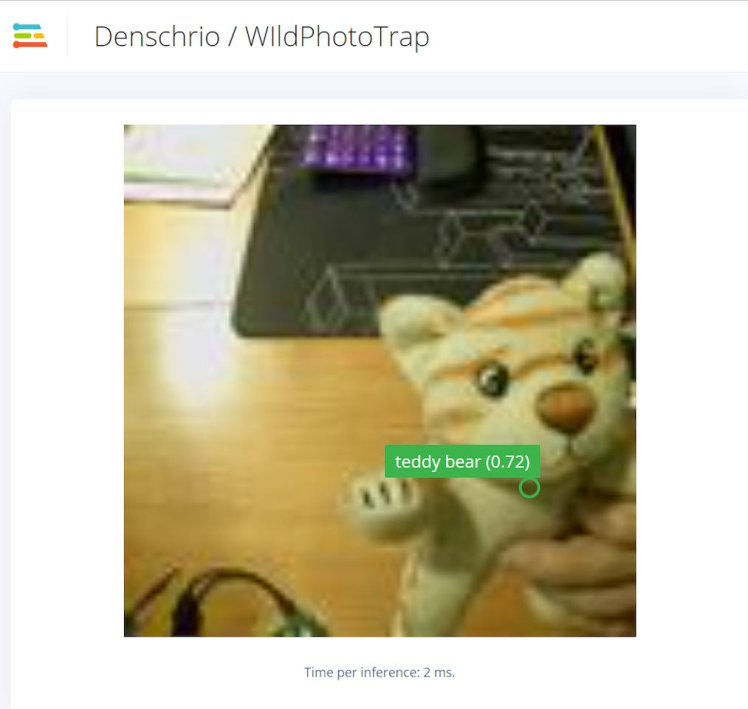

For testing, I took two cuddly toys and photographed them from different distances and angles to train the model. The images must then be split into training and test images.
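The split into training and test images can be sketched in a few lines of Python. This is only a minimal illustration, not the procedure used in this project: the folder name `captures`, the 80/20 ratio and the function name `split_dataset` are assumptions.

```python
import random
from pathlib import Path


def split_dataset(image_paths, test_fraction=0.2, seed=42):
    """Shuffle image paths reproducibly and split them into (training, test) lists."""
    paths = sorted(image_paths)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(paths)
    # Keep at least one test image as soon as there is any data at all.
    n_test = max(1, round(len(paths) * test_fraction)) if paths else 0
    return paths[n_test:], paths[:n_test]


if __name__ == "__main__":
    # "captures" is a hypothetical folder holding the webcam images.
    images = [str(p) for p in Path("captures").glob("*.jpg")]
    train, test = split_dataset(images)
    print(f"{len(train)} training images, {len(test)} test images")
```

A fixed seed keeps the split stable between runs, so the test images stay unseen during training when more pictures are added later.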

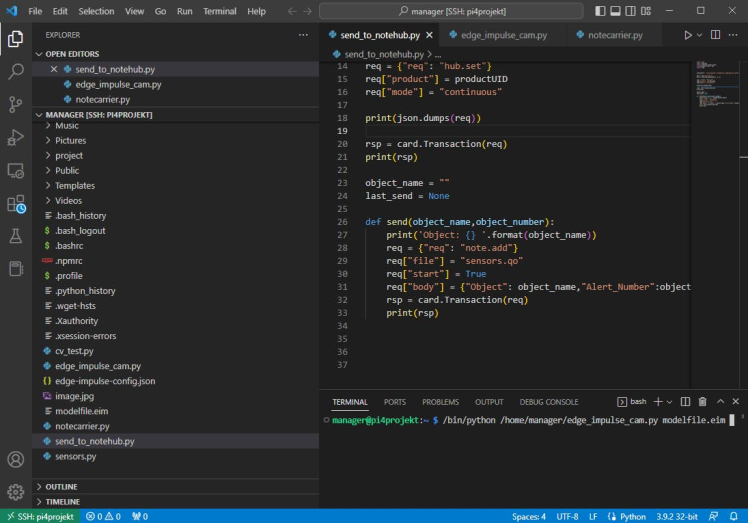

For programming I used Visual Studio Code with an extension (Remote SSH) for remote programming via SSH directly on the Raspberry Pi, which is very cool.

Working live on the Raspberry

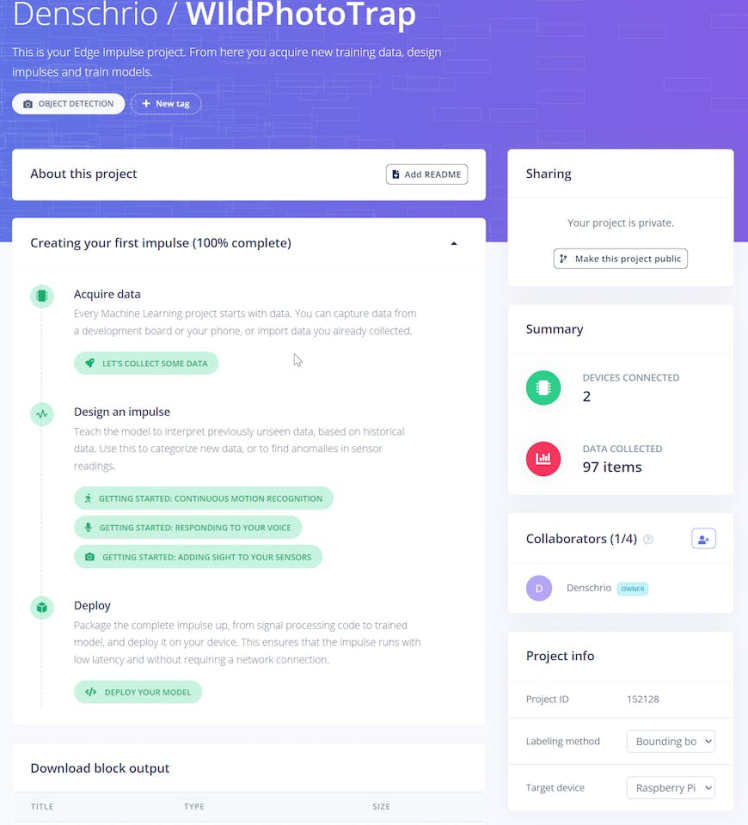

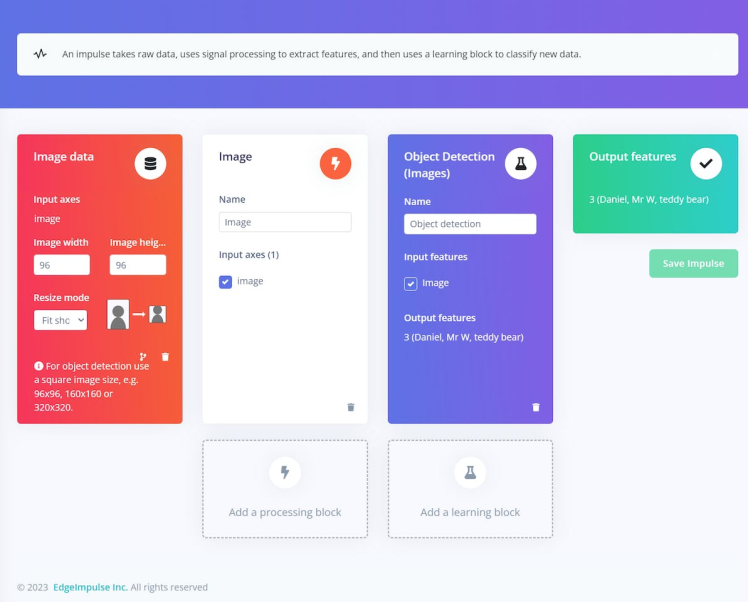

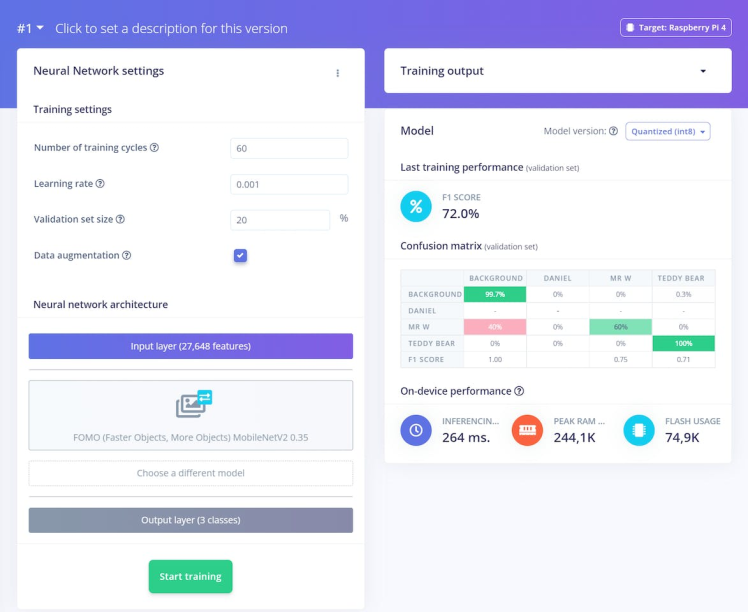

The program was implemented in Python. The camera image is evaluated with the Edge Impulse ML model and, in case of a hit, the result is transmitted to Notehub.io in the cloud via the Blues Wireless module (Wi-Fi or LTE modem). The project was created in Edge Impulse:

Here, the images need to be generated and classified accordingly to create and train a model.

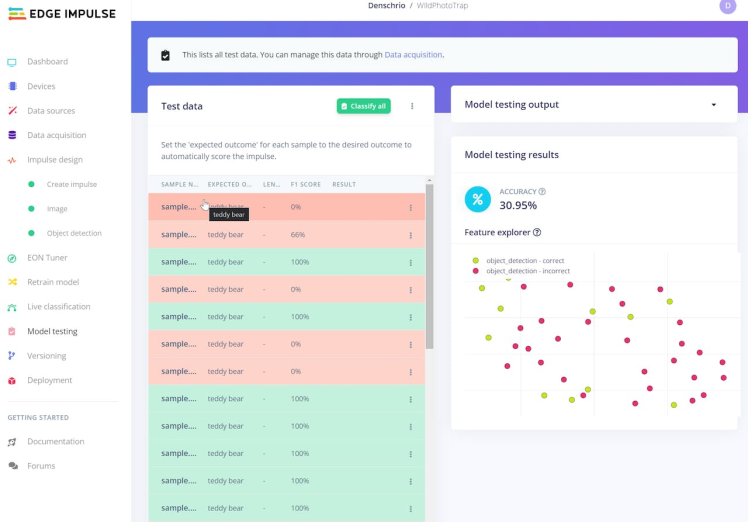

For testing, the ML model of Edge Impulse can be used via.

Edge Impulse training output

More data (images) = higher accuracy.

What matters is the amount and quality of the data. The result is displayed as a web page by the Raspberry Pi with a slight delay.

The command

edge-impulse-linux-runner --download modelfile.eim

downloads the ML model locally to the Raspberry Pi.

The following command then runs the Python script with this model:

/bin/python /home/manager/edge_impulse_cam.py modelfile.eim

In "edge_impulse_cam.py", the image is evaluated and, if an object is detected, the result is sent to Notehub.io via "send_to_notehub.py" with the Blues Wireless module.
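The "is it a hit?" decision can be sketched as a small function. This is not the code from the script above, only a sketch under assumptions: the result layout (a `result` dictionary with `bounding_boxes` or `classification`) follows what the Edge Impulse Linux Python SDK typically returns and should be checked against your own model, and the labels `teddy` and `bunny`, the threshold 0.6 and the name `find_hits` are made up for illustration.

```python
def find_hits(result, wanted_labels, min_confidence=0.6):
    """Return (label, confidence) pairs from one inference result that count as a hit."""
    inner = result.get("result", {})
    hits = []
    # Object detection models report a list of bounding boxes.
    for box in inner.get("bounding_boxes", []):
        if box["label"] in wanted_labels and box["value"] >= min_confidence:
            hits.append((box["label"], box["value"]))
    # Image classification models report one score per label.
    for label, score in inner.get("classification", {}).items():
        if label in wanted_labels and score >= min_confidence:
            hits.append((label, score))
    return hits


if __name__ == "__main__":
    # Hand-written sample result, not real model output.
    sample = {
        "result": {
            "bounding_boxes": [
                {"label": "teddy", "value": 0.91, "x": 10, "y": 20, "width": 50, "height": 60},
                {"label": "bunny", "value": 0.35, "x": 80, "y": 30, "width": 40, "height": 45},
            ]
        }
    }
    for label, confidence in find_hits(sample, {"teddy", "bunny"}):
        print(f"hit: {label} ({confidence:.2f})")
```

Keeping the threshold in one place makes it easy to trade false alarms against missed detections once the camera is running in real lighting conditions.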

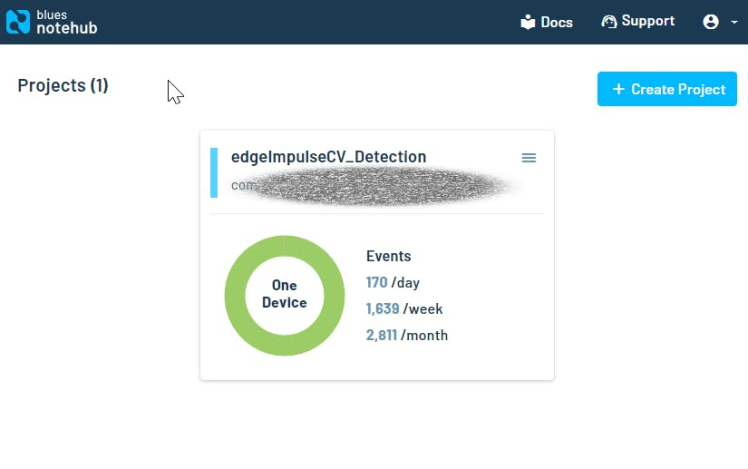

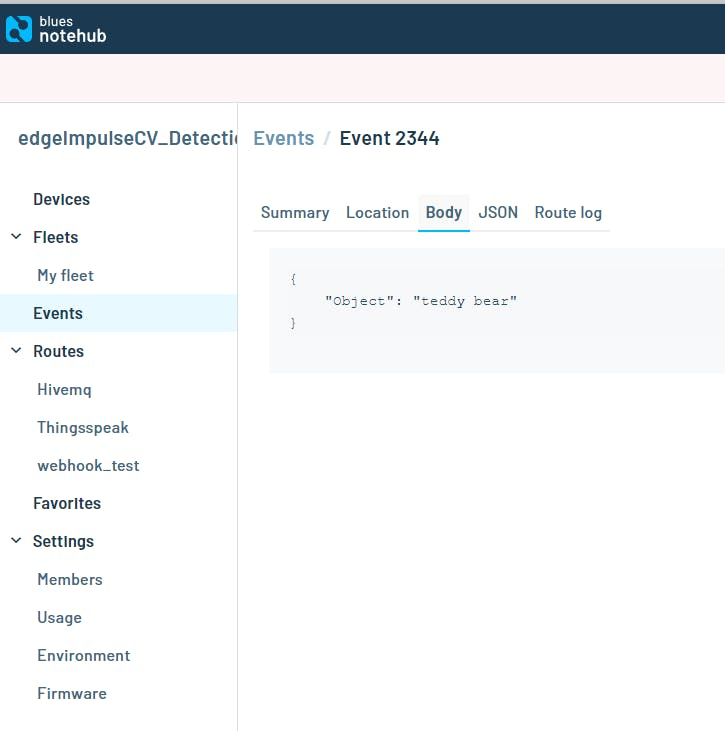
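The hand-off to Notehub.io could look roughly like the following sketch. It is not the content of "send_to_notehub.py". It assumes the note-python and python-periphery packages, a Notecard reachable over I2C at `/dev/i2c-1`, and a Notecard that is already linked to a Notehub project. The Notefile name `alarm.qo`, the body fields and the function names are hypothetical.

```python
import json


def build_alarm_note(label, confidence, notefile="alarm.qo"):
    """Build a Notecard note.add request describing one detection."""
    return {
        "req": "note.add",
        "file": notefile,  # hypothetical Notefile name
        "body": {"label": label, "confidence": round(float(confidence), 2)},
        "sync": True,  # ask the Notecard to sync now instead of waiting for the next interval
    }


def send_note(request, i2c_port="/dev/i2c-1"):
    """Send the request to the Notecard over I2C and return its response."""
    # Imported here so the request can be built and inspected without the hardware libraries.
    import notecard
    from periphery import I2C

    card = notecard.OpenI2C(I2C(i2c_port), 0, 0)
    return card.Transaction(request)


if __name__ == "__main__":
    # Print the request only; call send_note(...) on the Raspberry Pi with the HAT attached.
    print(json.dumps(build_alarm_note("teddy", 0.8731)))
```

Separating "build the request" from "talk to the hardware" means the message format can be tested on any machine, while only `send_note` needs the Notecarrier Pi HAT.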

In Notehub.io this is then displayed as follows under Events:

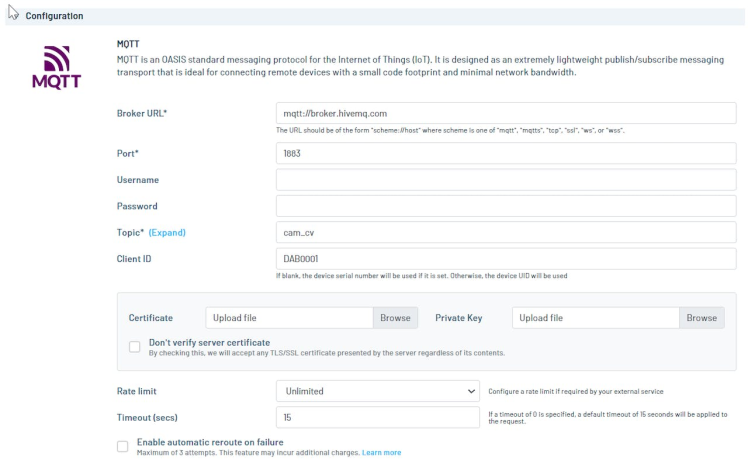

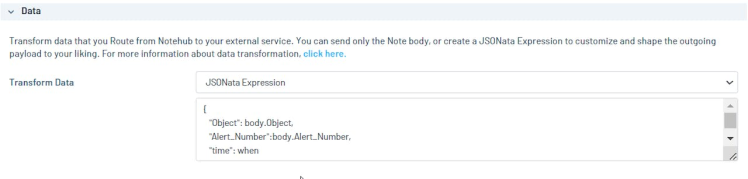

With a registered route, this can then be forwarded to a service, in my case to an MQTT broker from HiveMQ, with this JSON declaration:

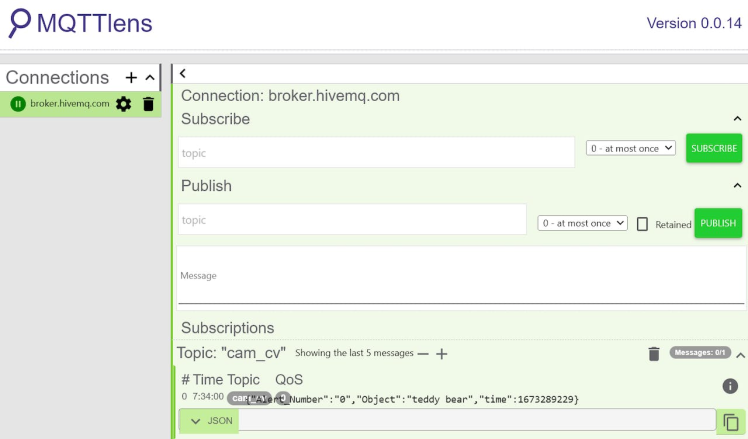

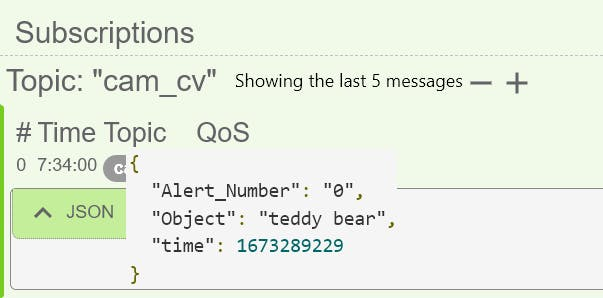

With MQTTlens I can see the message from my Phototrap.

Details of a message

This can also be used in a mobile app or with Home Assistant, which then acts as an MQTT client and triggers an action.
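On the receiving side, an MQTT client only has to decode the message and decide whether to act. Here is a minimal sketch of that decision. The payload fields `label` and `confidence` are assumptions, since the real layout depends on the route's JSON declaration, and `alarm_text` is a made-up name.

```python
import json


def alarm_text(payload, alarm_labels=("bear",), min_confidence=0.6):
    """Return an alert string for one MQTT payload, or None if no alarm is needed."""
    try:
        event = json.loads(payload)  # accepts str or UTF-8 encoded bytes
    except ValueError:
        return None  # ignore messages that are not valid JSON
    if not isinstance(event, dict):
        return None
    label = event.get("label")
    confidence = event.get("confidence", 0.0)
    if not isinstance(confidence, (int, float)):
        return None
    if label in alarm_labels and confidence >= min_confidence:
        return f"Alert: {label} detected ({confidence:.0%})"
    return None


if __name__ == "__main__":
    # Hand-written example payload, as an MQTT client would receive it (bytes).
    print(alarm_text(b'{"label": "bear", "confidence": 0.91}'))
```

In a client library such as paho-mqtt, this function could be called from the message callback with the raw message payload, and the returned text passed on to a notification or a siren.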

For example an alert -> Save my cookies from the bear :-)
